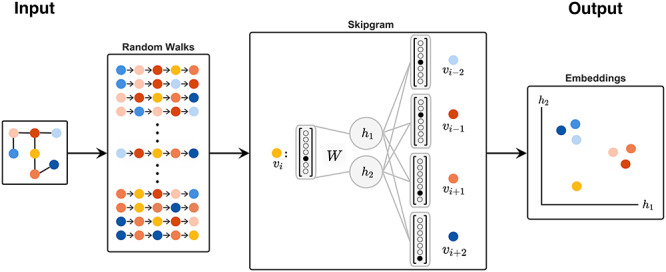

Figure 1.

This shows the process of learning a simple graph embedding using DeepWalk. From an input graph, a fixed number of random walks of a predetermined length are generated from each node. The embeddings for each node are then learned using the Skipgram objective, where a node on the random walk is given as input to a single-layer neural network. The input is compressed down to a low-dimensional representation with an embedding matrix, and then used to predict which nodes surround it on the walk. That is, a node is used to predict the surrounding nodes on the walk within a given context window (here, size two). After training, this lower-dimensional representation for each node, which can be easily retrieved from the embedding matrix, is then used as the embedding for each node. Note that DeepWalk chooses the next node in the random walk uniformly at random, and therefore can return to previous nodes in the walk, whereas node2vec introduces a parameter to control the probability of doing so.
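
The walk-generation and context-window steps described in the caption can be sketched in a few lines of Python. This is a minimal illustration, not the reference DeepWalk implementation: the function names `random_walks` and `skipgram_pairs`, the toy adjacency list, and the reading of the window size as "up to that many steps on either side of the input node" are all assumptions made for the example. Training the embedding matrix on the resulting pairs is left out.

```python
import random


def random_walks(adj, walks_per_node, walk_length, seed=0):
    """Generate a fixed number of uniform random walks from every node.

    `adj` maps each node to a list of its neighbors (hypothetical format).
    """
    rng = random.Random(seed)
    walks = []
    for start in adj:
        for _ in range(walks_per_node):
            walk = [start]
            while len(walk) < walk_length:
                neighbors = adj[walk[-1]]
                if not neighbors:  # dead end: stop this walk early
                    break
                # Uniform choice, so the walk may step back to a node
                # it has already visited (DeepWalk behaviour).
                walk.append(rng.choice(neighbors))
            walks.append(walk)
    return walks


def skipgram_pairs(walk, window):
    """Pair each node on a walk with the nodes within `window` steps of it.

    Assumption: the window extends `window` positions to each side.
    """
    pairs = []
    for i, center in enumerate(walk):
        lo = max(0, i - window)
        hi = min(len(walk), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, walk[j]))
    return pairs


# Toy undirected graph, invented for illustration only.
adj = {
    "a": ["b", "c"],
    "b": ["a", "c"],
    "c": ["a", "b", "d"],
    "d": ["c"],
}

walks = random_walks(adj, walks_per_node=2, walk_length=5)
pairs = [p for w in walks for p in skipgram_pairs(w, window=2)]
```

Each `(input, context)` pair in `pairs` is one training example for the Skipgram objective: the first node is fed to the network and the second is a node it should predict. A node2vec-style walk would replace the uniform `rng.choice` with a biased choice that depends on the previously visited node.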