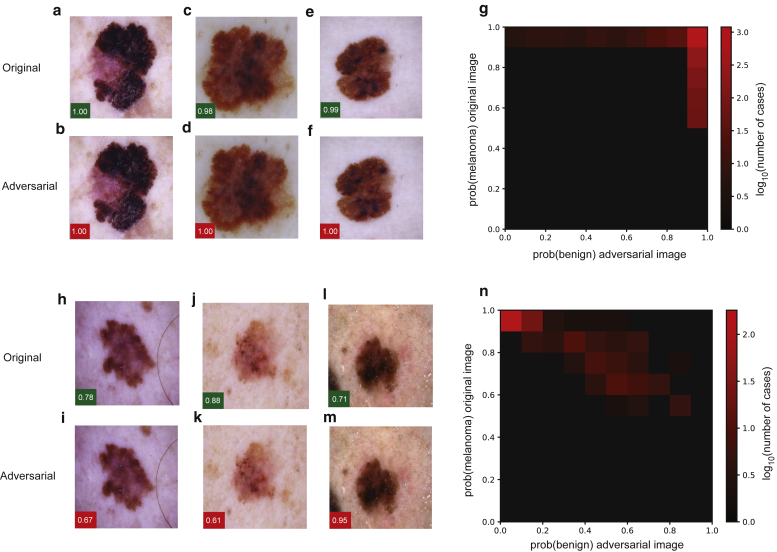

Figure 1.

FGSM and three-pixel attacks on deep-learning systems for skin cancer diagnosis. Both attacks were implemented against a pretrained Inception, version 3, network that was fine-tuned to differentiate melanoma from benign melanocytic nevi. (a–g) Adversarial attack with the FGSM. Examples of (a, c, e) original and (b, d, f) perturbed images are shown. Green boxes indicate the network's confidence in predicting melanoma for the original images (i.e., the network's output for this class after softmax transformation), and red boxes indicate its confidence in predicting a benign nevus for the adversarial images. (g) Plot shows how the success of the adversarial attacks depends on the network's initial classification. For each image in the validation set, the softmax-transformed output of the network's final classification layer for the original image (y-axis) is plotted against that for the adversarial image (x-axis). (h–n) Adversarial attack through modification of three pixels within the input image. Examples of (h, j, l) original and (i, k, m) perturbed images are shown, and (n) shows how the success of the adversarial attacks depends on the network's original classification, plotted as in g. FGSM, fast gradient sign method.
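The following is a minimal NumPy sketch of the two attack types in Figure 1. It is not the authors' implementation, and it rests on several assumptions. A toy two-class linear softmax classifier stands in for the fine-tuned Inception v3 network. The 8×8 "image", the weights `W` and `b`, ε = 0.05, and the trial count are all hypothetical. The three-pixel attack uses plain random search instead of the differential evolution used in the original one-pixel attack work. FGSM takes a single step of size ε along the sign of the loss gradient with respect to the input. The pixel attack keeps the candidate that most lowers the confidence in the originally predicted class.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D logit vector.
    e = np.exp(z - np.max(z))
    return e / e.sum()

def predict(W, b, x):
    # Class probabilities of a toy linear classifier (hypothetical stand-in
    # for the fine-tuned Inception v3 network) on a flattened image.
    return softmax(W @ x.ravel() + b)

def fgsm(W, b, x, y, eps):
    """One-step FGSM: x_adv = clip(x + eps * sign(grad_x loss), 0, 1)."""
    p = predict(W, b, x)
    onehot = np.zeros_like(p)
    onehot[y] = 1.0
    # Gradient of the cross-entropy loss -log p_y with respect to the input.
    grad = W.T @ (p - onehot)
    x_adv = x + eps * np.sign(grad).reshape(x.shape)
    return np.clip(x_adv, 0.0, 1.0)  # keep pixels in the valid range

def three_pixel_attack(W, b, x, y, n_pixels=3, n_trials=2000, seed=0):
    """Random search (assumed substitute for differential evolution) over
    n_pixels positions and values, minimizing the confidence in class y."""
    rng = np.random.default_rng(seed)
    best, best_conf = x.copy(), predict(W, b, x)[y]
    for _ in range(n_trials):
        cand = x.copy()
        idx = rng.choice(x.size, size=n_pixels, replace=False)
        cand.flat[idx] = rng.random(n_pixels)  # overwrite only these pixels
        conf = predict(W, b, cand)[y]
        if conf < best_conf:
            best, best_conf = cand, conf
    return best

# Hypothetical usage: class 0 = benign nevus, class 1 = melanoma.
rng = np.random.default_rng(42)
x = rng.random((8, 8))                  # toy grayscale image in [0, 1]
W = rng.normal(size=(2, x.size))        # hypothetical classifier weights
b = np.zeros(2)
y = int(np.argmax(predict(W, b, x)))    # attack the network's own prediction
x_fgsm = fgsm(W, b, x, y, eps=0.05)
x_px = three_pixel_attack(W, b, x, y)
print(predict(W, b, x)[y], predict(W, b, x_fgsm)[y], predict(W, b, x_px)[y])
```

Plotting `predict(W, b, x)[y]` against the adversarial confidence for many images would give a toy analogue of panels g and n. The FGSM step is bounded by ε per pixel, whereas the pixel attack leaves all but three pixels unchanged.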