Abstract

Background

Mobile medical applications (Apps) offer innovative solutions for patients’ self-monitoring and new patient management opportunities. Prior to routine clinical application, the feasibility and acceptance of disease surveillance using an App that includes electronic (e) patient-reported outcome measures (PROMs) warrant evaluation. Therefore, we performed a proof-of-concept study in which rheumatoid arthritis (RA) patients used an App (RheumaLive) to document their disease.

Methods

Accurate PROM reporting via an App in comparison to paper-based versions was investigated to exclude media bias. Sixty participants recruited from 268 consecutive RA outpatients completed paper-based and electronic PROMs (Hannover Functional Questionnaire/derived HAQ; modified RA disease activity index) using the App at baseline and follow-up visits. Between visits, patients used their App on their own smartphone according to their preferences. The equivalence of PROM data and user experiences from patients and physicians were evaluated.

Results

Patients’ (78.3% female) mean (SD) age was 50.1 (13.1) years, disease duration 10.5 (9.1) years, and paper-based HAQ 0.78 (0.59). Mean (SD) confidence in Apps was 3.5 (1.1) on a Likert scale from 1 to 6. ePROM scores obtained by patients’ data entry in the App were equivalent to paper-based scores, and patients preferred the electronic version. After 3 months, the App retention rate was 71.7%. Patients’ overall satisfaction with the App was 2.2 (0.9) on a Likert scale from 1 to 6. Patients and physicians valued the App, e.g., for patient-physician interaction: 87% of patients reported that it was easier for them to document the course of the disease using the App than “only” answering questions about their current health during routine outpatient visits. Physicians recommended further App use in 77.3% of the patients they evaluated and reported that App use contributed to increased adherence to therapy in seven patients.

Conclusion

Our study provides an essential basis for the broader implementation of medical Apps in routine care. We demonstrated the feasibility and acceptance of disease surveillance using a smartphone App in RA. App use was convincing as a reliable option to perform continuous, remote monitoring of disease activity and treatment efficacy.

Trial registration

ClinicalTrials.gov, NCT02565225. Registered on September 16, 2015 (retrospectively registered).

Keywords: Rheumatoid arthritis, Electronic patient reported outcomes, Mobile Apps, Digital health

Introduction

In rheumatology, patient-reported outcome measures (PROMs) have been recognized as key outcome measures and indispensable prerequisites for improving the quality of care [1, 2]. Digital equivalents (ePROMs) have been designed to support assessments of clinical and related problems as well as the effects of treatment [3–6]. Digital health applications are recognized as important tools for modern health care systems, and encouraging prospects for the use of mobile medical applications (Apps) have been described and reviewed [7–10]. Since 2020, under the “Digital Healthcare Act,” Germany has been the first country worldwide to enable physicians to prescribe Apps that are reimbursed by health insurers [11]. However, Apps for rheumatology are still missing from the register of prescribable Apps.

Assessment studies of ePROMs found that computer- and paper-based measures usually produce equivalent scores [12, 13]. However, a Cochrane review was not able to give clear recommendations on whether Apps may influence questionnaire responses, and further evaluations are still recommended, except where the electronic version involves only minimal changes [14, 15]. Meanwhile, requirements associated with the “Digital Healthcare Act” call for a demonstration of equivalence in order to prove benefit [11]. Therefore, before introducing mobile Apps into clinical routine care and modern patient management concepts, PROMs obtained by paper–pencil and App versions still need to be longitudinally evaluated, as data equivalence cannot be presumed [16]. In addition, patients’ capability to use a smartphone App for remote monitoring over time needs to be tested, especially in rheumatic diseases that are typically associated with functional or pain-related impairments [17].

Our aim was to prove usability and feasibility for disease surveillance and App implementation in routine care. In our proof-of-concept study, we intended to demonstrate equivalence of PROMs’ scores assessed by the RheumaLive App in comparison to paper-pencil versions in cross-sectional and follow-up assessments. RA patients’ capability to handle data entry in the smartphone App, retention rates, user experiences, and preferences of patients and physicians were investigated.

Material and methods

The RheumaLive App was developed in German as an electronic diary for RA patients. In December 2020, it was relaunched as RheCORD. In addition to diary functions (e.g., for medication, pain, and morning stiffness), RheumaLive included two self-administered PROMs: the Hannover Functional Questionnaire (FFbH), which can be converted into Health Assessment Questionnaire (HAQ) values [18] (the derived HAQ values are reported below), and the modified RA disease activity index questionnaire (RADAI) [19]. The RADAI, in both its paper-based and electronic versions, was applied to our outpatients for the first time.

Common control elements enabled data entry. Integrated algorithms calculated questionnaires’ scores. Patients’ individual follow-up data was visible to the App-user at a glance via generated PDF files customizable to personal interests.
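The score calculation described above can be illustrated with a minimal sketch. It is not the App's implementation. It assumes the commonly published FFbH scoring (each item scored 2, 1, or 0; the score is the percentage of the maximum over answered items) and the commonly cited linear conversion HAQ = 3.16 − 0.028 × FFbH; neither formula is stated in this article. The function names and the `max_missing` limit are hypothetical.

```python
from typing import Optional, Sequence


def ffbh_score(items: Sequence[Optional[int]], max_missing: int = 2) -> Optional[float]:
    """Return functional capacity in percent (0-100), or None if no score can be shown.

    Assumed item coding: 2 = yes, 1 = yes with difficulty, 0 = no or only with help,
    None = unanswered. max_missing is a hypothetical limit, not taken from the article.
    """
    answered = [v for v in items if v is not None]
    if any(v not in (0, 1, 2) for v in answered):
        raise ValueError("item values must be 0, 1, 2, or None")
    n_missing = len(items) - len(answered)
    if not answered or n_missing > max_missing:
        return None  # too many missing items: no score is calculated
    return 100.0 * sum(answered) / (2 * len(answered))


def ffbh_to_haq(ffbh: float) -> float:
    """Derive a HAQ value from an FFbH percentage (assumed linear conversion)."""
    return 3.16 - 0.028 * ffbh


if __name__ == "__main__":
    answers = [2, 2, 1, 2, 0, 2, 1, 2, 2, None, 2, 1, 2, 2, 2, 1, 2, 2]  # invented data
    score = ffbh_score(answers)
    if score is not None:
        print(f"FFbH {score:.1f}% -> derived HAQ {ffbh_to_haq(score):.2f}")
```

Returning `None` instead of a partial score mirrors the behavior described for the App, where no score value is shown if too many items are missing.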

Within our proof-of-concept study, “Mobile medically supervised patient management in rheumatoid arthritis patients using DocuMed.rh and RheumaLive App (MiDEAR),” patients and caring rheumatologists evaluated the use, usability, and feasibility of RheumaLive in routine care. MiDEAR relied on the assessment of “real-life” data and the “bring your own device” (BYOD) concept, which is an important aspect for the generalizability of the results. Patients were consecutively recruited from our single-center outpatient clinics caring for approximately 500 RA patients per year. Eligibility criteria were age above 18 years, a diagnosis of RA (ICD-10-Code M05.* or M06.*), good German language skills, and ownership of a smartphone with an operating system compatible with the App.

After user training, patients downloaded the App from a project-related website. They were advised to document data voluntarily in their own App at intervals that were not pre-specified. They were followed up approximately every 3 months (depending on their individually scheduled routine outpatient visits at our clinic) for 9 months. At baseline, experience with mobile devices and Apps as well as history of computer/internet use were self-documented via a modified paper-based assessment [13]. We present data from baseline and after 3 months.

Patients’ clinical and sociodemographic data were assessed according to the standard operating procedures at our clinic (e.g., Disease Activity Score of 28 joints (DAS28), medication) via our web-based patient documentation system. For further comparison, standard questions used in the national database (NDB) of the German Rheumatism Research Centre were included. Patients’ vocational education as a proxy for socioeconomic status was assessed in compliance with the NDB and the German educational system.

To study media bias in PROM scores, i.e., score differences depending on the mode of administration, RheumaLive was additionally installed on a dedicated smartphone. Patients answered both paper-based and electronic PROMs included in RheumaLive at each outpatient visit (baseline and follow-up) in random order and under similar conditions. The study coordinator gave standardized instructions at baseline, logged into the App on the clinic’s smartphone, and selected the forms. Patients answered their questions and saved the data themselves. As most patients had used the RheumaLive App between baseline and follow-up on their own device, patients were familiar with it at follow-up visits, and staff assistance was only provided on demand.

Patients and physicians reported on their IT-literacy at baseline. At follow-up, they evaluated the App and its use, assessing acceptability, usability, user satisfaction, and clinical relevance of the platform through paper-based questionnaires including, e.g., questions on satisfaction, App design, and usefulness for patient-physician interaction. Where applicable, Likert scales from 1 to 6 were used, based on the German school grading system (1 = best, 6 = worst).

To confirm representativeness of the study cohort for a general RA population in rheumatologic specialized care, characteristics of all RA patients of the NDB were compared to our study participants with regard to gender, age, education, comorbidities, and disease-specific scores and treatment.

We obtained each patient’s signed informed consent and ethical approval from the local ethics committee (local MiDEAR study number 4378). MiDEAR was registered at ClinicalTrials.gov (NCT02565225).

Statistical analyses

As MiDEAR was performed as a proof-of-concept study, the sample size was chosen pragmatically: it reflected the limits of available funding while still allowing for statistical analysis. The achieved power of > 0.99 for the main outcomes (data equivalence of paper-based PROMs and ePROMs), calculated at α = 0.05 without adjustment for multiple testing, showed that the number of persons included was sufficient.

Values are expressed as percentages for discrete variables, or as mean (standard deviation, SD), range, or median for continuous variables. To assess agreement between paper-based PROMs and ePROMs, we tested for equivalence using the “two one-sided tests” (TOST) procedure. Reported minimal clinically important differences (MCIDs) of the PROMs were used as upper and lower equivalence bounds (HAQ: 0.22 for RA in clinical trials; RADAI: 1.49) [20, 21]. Kruskal–Wallis tests were performed for further group comparisons. All statistical tests were two-tailed; p values less than 0.05 were considered significant. Statistical computations used IBM SPSS Statistics version 25 for descriptive data analysis and SAS version 9.4 for test statistics.
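The TOST logic can be sketched as follows for paired App-minus-paper score differences. This is an illustration only: the study used SAS, whereas this sketch uses a large-sample normal approximation from the Python standard library instead of the t-distribution, which is slightly anti-conservative for small samples. The function name `tost_paired` and the example data are hypothetical; only the HAQ margin of 0.22 is taken from the text.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev
from typing import Sequence, Tuple


def tost_paired(diffs: Sequence[float], margin: float) -> Tuple[float, float, float]:
    """Two one-sided tests for paired differences against the bounds (-margin, +margin).

    Returns (p_lower, p_upper, p_tost). Equivalence is concluded if p_tost < alpha.
    Uses a normal approximation (assumption), not the exact t-distribution.
    """
    if len(diffs) < 2 or margin <= 0:
        raise ValueError("need at least two differences and a positive margin")
    se = stdev(diffs) / sqrt(len(diffs))
    if se == 0:
        raise ValueError("differences have zero variance")
    z_lower = (mean(diffs) + margin) / se  # tests H0: true mean difference <= -margin
    z_upper = (mean(diffs) - margin) / se  # tests H0: true mean difference >= +margin
    std_normal = NormalDist()
    p_lower = 1.0 - std_normal.cdf(z_lower)
    p_upper = std_normal.cdf(z_upper)
    return p_lower, p_upper, max(p_lower, p_upper)


if __name__ == "__main__":
    # Invented App-minus-paper HAQ differences, for illustration only.
    haq_diffs = [0.0, 0.125, -0.125, 0.0, 0.125, 0.0, -0.125, 0.0, 0.0, 0.125]
    _, _, p = tost_paired(haq_diffs, margin=0.22)
    print("equivalent within MCID" if p < 0.05 else "equivalence not shown")
```

Both one-sided null hypotheses must be rejected, which is why the larger of the two p values is reported as the overall result.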

Results

In line with the “BYOD” concept, n = 268 consecutive RA outpatients were screened for possession of smartphones and/or tablets; n = 156 (58.2%) remained eligible for the study. Of those, 96 (61.5%) declined to participate; the most common reasons were incompatible operating systems (29.2%), missing language skills (17.7%), and lack of interest in Apps (15.6%). Sociodemographic and clinical data of all non-participants are provided in Table 1.

Table 1.

Sociodemographic and clinical data of participants owning a smartphone and non-participants

| | Non-participants (n = 208) | MiDEAR participants BL (n = 60) | MiDEAR participants follow-up (n = 51) | NDB (n = 8481) |
|---|---|---|---|---|
| Age (mean (SD)) years | 61.2 (14.8) | 50.1 (13.1)* | 49.1 (12.3)* | 62.6 (14.0) |
| Female (n (%)) | 150 (72.1) | 47 (78.3)* | 40 (78.4)* | 6369 (75.1) |
| Disease duration (mean (SD)) years | 10.0 (10.1) | 10.5 (9.1)* | 10.5 (9.7)* | 13.3 (10.3) |
| DAS28 (mean (SD)) baseline | 2.7 (1.1) | 2.6 (1.0)* | 2.6 (1.0)* | 2.8 (1.0) |
| DAS28 (mean (SD)) follow-up | n.a. | n.app. | 2.5 (0.8) | n.a. |
| HAQ (mean (SD)) (paper-based for MiDEAR participants) | 1.21 (0.71) | 0.78 (0.59)* | 0.69 (0.50)* | 0.88 (0.69) |
| HAQ (mean (SD)) follow-up (paper-based for MiDEAR participants) | n.a. | n.app. | 0.69 (0.50) | n.a. |
| Number of co-morbidities (median (IQR)) | 2 (1.0–3.0) | 2 (1.0–3.8)* | 2 (0–3.0)* | 2 (1.0–4.0) |
| Education: University entrance diploma (n (%)) | 59 (28.4) | 30 (50.0)* | 28 (54.9)* | 1120 (24.4) |
| Medication | | | | |
| NSAIDs (n (%)) | 85 (40.9) | 28 (46.7) | 24 (47.1) | 2782 (48.6) |
| Glucocorticoids (n (%)) | 121 (58.2) | 30 (50.0) | 26 (51.0) | 2717 (47.5) |
| csDMARDs alone (n (%)) | 115 (55.3) | 36 (60.0) | 30 (58.8) | 3485 (61.1) |
| Biological DMARD either alone or in combination with csDMARD (n (%)) | 67 (32.2) | 20 (33.3) | 17 (33.3) | 1576 (27.6) |

n.a. not available, n.app. not applicable, *values refer to baseline assessments, BL baseline, NDB national database

Sixty patients agreed to participate and to complete the paper-based and electronic PROMs in RheumaLive on the clinic’s smartphone at baseline. By the second visit, after a median of 3.5 months, 17 patients (28.3%) had terminated the project; the reasons were not App-dependent (e.g., time constraints, new relevant comorbidity demanding their attention). However, n = 51 still agreed to complete paper-based PROMs and ePROMs.

Clinical and sociodemographic data

Studied patients’ sociodemographic as well as clinical data are depicted in Table 1. Considering MCID, disease activity according to DAS28 remained stable between baseline and first follow-up in 74.5% (n = 38) of the patients.

In comparison with reference data from the NDB, our participants were about 12 years younger, while non-participants were of similar age. Forty-seven participants were female; based on the proportion in the NDB, 45 would have been expected. Patients participating in MiDEAR had a disease duration about 3 years shorter; DAS28 was comparable. For HAQ, a difference was seen: non-participants had a worse mean HAQ than reported in the NDB, whereas participants had a slightly better HAQ. With regard to educational status, patients from our center generally seem to differ from the NDB (24%), since the proportion with a university entrance diploma was higher for both non-participants (28%) and participants (50%).

Mobile device-related data

Operating systems on patients’ own devices were iOS (n = 34) and Android (n = 26) of different release numbers. Patients were familiar with their devices for 3.0 (2.4) years. Previous experience with mobile devices was self-rated by the patients in pre-given categories as beginners (15%, n = 9), laypersons (11.7%, n = 7), users (68.3%, n = 41), and professionals (5.0%, n = 3). Self-rated confidence in Apps was on average 3.5 (SD = 1.1) on a Likert scale from 1 (very high) to 6 (very low).

Comparison of data acquisition modes

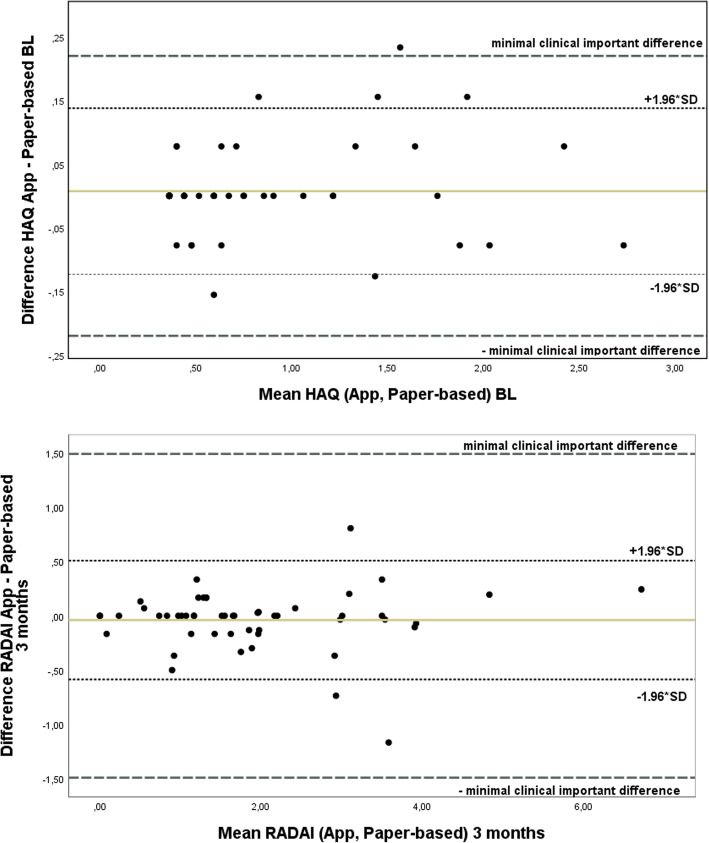

In the entire group, scores obtained by direct data entry in the App did not differ significantly from the scores obtained by the paper-based questionnaires, neither at baseline nor at the follow-up visits (see Table 2 and Fig. 1).

Table 2.

Mean differences of derived HAQ and RADAI scores obtained by paper- and App-based questionnaires

| PROMs score | Mean differences (SD) App – paper-based | p value of equivalence tests |
|---|---|---|
| Baseline | | |
| Derived HAQ (n = 57) | 0.01 (0.07) | < 0.0001 |
| RADAI (n = 58) | 0.00 (0.46) | < 0.0001 |
| Follow-up | | |
| Derived HAQ (n = 51) | 0.03 (0.14) | < 0.0001 |
| RADAI (n = 51) | −0.04 (0.28) | < 0.0001 |

Fig. 1.

Bland-Altman plots for HAQ at baseline (BL) and RADAI after 3 months
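For readers unfamiliar with Bland-Altman plots, the quantities they display can be computed as in the following minimal sketch: for each patient, the mean of the two measurements is plotted against their difference, together with the mean difference (bias) and the 95% limits of agreement (bias ± 1.96 SD). The function name and the example scores are hypothetical and are not study data.

```python
from statistics import mean, stdev
from typing import Dict, List, Sequence, Tuple


def bland_altman(app: Sequence[float], paper: Sequence[float]) -> Dict[str, object]:
    """Compute bias, 95% limits of agreement, and plot points for paired scores."""
    if len(app) != len(paper) or len(app) < 2:
        raise ValueError("need two equally long series with at least two pairs")
    diffs = [a - p for a, p in zip(app, paper)]          # y-axis: App minus paper
    means = [(a + p) / 2.0 for a, p in zip(app, paper)]  # x-axis: mean of both modes
    bias = mean(diffs)
    sd = stdev(diffs)
    points: List[Tuple[float, float]] = list(zip(means, diffs))
    return {
        "bias": bias,
        "loa_lower": bias - 1.96 * sd,
        "loa_upper": bias + 1.96 * sd,
        "points": points,
    }


if __name__ == "__main__":
    app_scores = [1.0, 0.5, 0.75, 1.25]      # invented values
    paper_scores = [1.0, 0.625, 0.75, 1.125]  # invented values
    result = bland_altman(app_scores, paper_scores)
    print(f"bias {result['bias']:.3f}, limits of agreement "
          f"{result['loa_lower']:.3f} to {result['loa_upper']:.3f}")
```

Comparing the limits of agreement with the MCID of the respective PROM gives a visual counterpart to the equivalence tests in Table 2.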

Analyses of score differences were performed for subgroups to investigate whether the agreement between paper-based PROMs and ePROMs depended on patient characteristics. Score differences were robust for gender, age groups, and patients’ vocational education as a proxy for socioeconomic status (detailed data not shown). These findings were stable at follow-up. Furthermore, patients were divided by disease activity (DAS28 < 2.6 (baseline (BL) n = 33, follow-up n = 24), DAS28 2.6–3.1 (BL n = 10, follow-up n = 13), and DAS28 ≥ 3.2 (BL n = 16, follow-up n = 11)). Score differences for RADAI did not differ significantly between DAS28 groups at either baseline or follow-up (p > 0.05). Paper-based and ePROM score differences for HAQ were significantly different between the DAS28 groups at baseline due to higher values in patients with severe activity, but not at follow-up (p = 0.01 and p = 0.07, respectively). Differences between the two modes at baseline in the group with DAS28 ≥ 3.2 were still within the MCID. When patients were split into functional capacity groups, paper-based and electronic score differences were not statistically different between the HAQ groups.
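The subgroup comparison above can be sketched in a few lines. The sketch assigns patients to the three DAS28 groups named in the text and computes a Kruskal–Wallis H statistic with tie correction. It is an illustration, not the SAS analysis used in the study: the p value relies on the asymptotic chi-square approximation, and the shortcut p = exp(−H/2) is valid only for exactly three groups (2 degrees of freedom). All function names and example data are hypothetical.

```python
from math import exp
from typing import Dict, Sequence, Tuple


def das28_group(das28: float) -> str:
    """Assign the DAS28 group used in the text (values reported to one decimal)."""
    if das28 < 2.6:
        return "<2.6"
    if das28 < 3.2:
        return "2.6-3.1"
    return ">=3.2"


def kruskal_wallis_3groups(groups: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Return (H, p) for exactly three groups, using midranks for ties."""
    if len(groups) != 3 or any(len(g) == 0 for g in groups):
        raise ValueError("exactly three non-empty groups are required")
    pooled = sorted(v for g in groups for v in g)
    n_total = len(pooled)
    rank_of: Dict[float, float] = {}
    tie_term = 0
    i = 0
    while i < n_total:
        j = i
        while j < n_total and pooled[j] == pooled[i]:
            j += 1
        t = j - i                                # size of this tie block
        rank_of[pooled[i]] = (i + 1 + j) / 2.0   # average of ranks i+1 .. j
        tie_term += t ** 3 - t
        i = j
    rank_part = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12.0 / (n_total * (n_total + 1)) * rank_part - 3.0 * (n_total + 1)
    correction = 1.0 - tie_term / (n_total ** 3 - n_total)
    if correction == 0:
        raise ValueError("all values are identical")
    h /= correction
    return h, exp(-h / 2.0)  # chi-square survival function for df = 2


if __name__ == "__main__":
    # Invented (DAS28, App-minus-paper HAQ difference) pairs, for illustration only.
    data = [(2.1, 0.0), (2.4, 0.125), (1.9, -0.125), (2.8, 0.0),
            (3.0, 0.125), (2.7, 0.25), (3.6, 0.25), (4.1, 0.125), (3.3, 0.375)]
    by_group: Dict[str, list] = {"<2.6": [], "2.6-3.1": [], ">=3.2": []}
    for das28, diff in data:
        by_group[das28_group(das28)].append(diff)
    h_stat, p_value = kruskal_wallis_3groups(list(by_group.values()))
    print(f"H = {h_stat:.2f}, p = {p_value:.3f}")
```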

Experiences using the App PROMs and patients’ preferences

Overall, missing data for paper-based PROMs and ePROMs were recorded in n = 3 patients each. Although both the paper-based PROMs and ePROMs allowed missing answers, only a few missing items were recorded (maximum 4 items in n = 4); missing answers decreased at the follow-up visits. According to the underlying algorithms, missing items rarely limited the calculation of scores (n = 1 with 4 missing items in the paper-based FFbH).

Five patients reported a lagging iPhone touchscreen while entering data; no other major difficulties occurred. Only one patient felt that the RA had compromised the handling of the App between the first and second visit.

At follow-up visits, 42 participants had evaluated the App. The majority (84.4%, n = 27/32) preferred completing ePROMs rather than paper-based forms. Overall satisfaction with the App was rated as 2.2 (0.9) (mean (SD), n = 37, Likert scale 1–6), and the App was described by 92.3% (n = 36/39) as easy to use. The App design was valued as logically structured by 97.3% (n = 36/37), as easy to understand by 94.6% (n = 35/37), and as appealing by 83.8% (n = 31/37) of the patients.

Patients considered the App useful (2.1 (1.1), mean (SD), Likert scale 1–6), e.g., for their patient-physician interaction: 63.2% (n = 24/38) reported that it was easier, and a further 23.7% (n = 9/38) that it was at least partially easier, for them to document the course of the disease using the App than “only” answering questions about their current health during routine outpatient visits. On average, patients completed the eRADAI 14.3 times (IQR 1.25–15.0) and the eFFbH 10.6 times (IQR 1.5–11.5) between baseline and follow-up. No statistically significant correlations with sociodemographic data or DAS28 were found.

In addition, physicians evaluated the App in 22 patients. They valued the use of the App after 3 months as generally useful for the patients to document their disease progression apart from outpatient visits (2.0 (0.9) (mean (SD)), Likert scale 1–6). In 40.9% (n = 9), the App helped them to assess the course of the disease. The documentation with the App had a (partial) influence (n = 16) on the interaction during the outpatient visits. The influence was rated positively in 66.7% (n = 10/15). Further App use was recommended in 77.3% (n = 17). According to the physicians, the App contributed (partially) to an increase in the adherence to therapy in seven patients.

Discussion

This study evaluated cross-sectional and follow-up assessments of ePROMs in an App compared to paper-based versions in patients with RA. Validation of “new” electronic versions of outcome instruments has been recommended and is required in Germany for reimbursement purposes, as properties of the original instrument cannot be taken for granted and might change [2, 11, 16, 22]. Evaluating the accuracy of PROM reporting via an App is essential for its use in routine care, although other, less arduous approaches such as expert screen reviews have been proposed [15, 23, 24].

We showed that data acquisition of well-established PROMs (FFbH/HAQ; RADAI) using the RheumaLive App is a legitimate alternative to paper-pencil formats in RA patients who are willing to use a disease-related mobile App in a BYOD concept. MiDEAR produced relevant data for regulatory aspects such as medical device regulation and reimbursement issues [11, 25]. The two application modes produced concordant scores not only in a cross-sectional investigation but also in follow-ups, even for a PROM not previously applied at our clinic (modified RADAI). Thus, RheumaLive permitted regular, valid assessments of disease activity and functional capacity over time and is compliant with some of the later developed EULAR points to consider for mobile health applications [11, 26]. Our data are in line with a systematic review that found the highest pooled agreement between electronic and paper-based measures for paper-based versus touch screen assessments [14].

The App enabled RA patients to incorporate PROMs into their daily routine. As reported in other studies on ePROM assessments, our participants preferred electronic over paper-based versions [13, 22, 27]. Major App handling problems were not observed in our study, although a relatively small touchscreen display (< 4.5 in.) was used. Only one patient reported problems, although these could be expected more often in RA due to impaired hand function or stiffness. Larger screen sizes or the use of styluses for input can offer enhanced usability even to more functionally limited RA patients while preserving mobility. Our data are in line with results from the COmPASS study [28] and with an evaluation of a touchscreen App in psoriatic arthritis patients, showing that the Apps were regarded as suitable alternatives for PROM assessments [29]. De Souza et al. co-designed with patients a “hospital-specific” App that also includes the HAQ for RA patients. It was valued by them and expected to reduce non-attendance [30]. Walker et al. rated web-based patient assessments as useful for cost-effective monitoring between outpatient visits [28]. Further positive results and benefits from mHealth interventions have recently been summarized by Seppen et al. [31]. In line with those findings, our rheumatologists valued the App data, e.g., for permitting broader views on the course of the disease, reported some positive effect on the patient-physician interaction and on therapy adherence, and recommended its further use. Our data showed that App use for self-reporting of symptoms is feasible longitudinally, and the retention rate after 3 months was still high in MiDEAR. Thus, as reported from other ePROM studies, App use might contribute to optimized patient–doctor interactions and care [31, 32].

Similar to work from others having reported on App use in arthritis, MiDEAR additionally described needs, requirements, and liked but also disliked features from RA patients’ and physicians’ perspectives that were incorporated in the App update [33–35].

Missing data can adversely affect score calculation and limit PROMs’ usefulness. The RheumaLive App minimized unintentional non-response by an error prompt. While data saving with missing values was permitted, the user was not able to see a score value if the number of missing items was too high for calculations according to the algorithms. While the option to leave ePROM questions unanswered has been regarded as an important feature of personal choice, this option was used in only a small minority of our cases [36]. We feel this is a typical example of the potential superiority of Apps that include PROMs over traditional paper forms. In addition, Apps facilitate real-time, time-stamped, and long-term systematic patient-centered data collection, and they might serve modern management strategies in rigid health care systems in the coming years [37–39]. A similar approach has been used with electronic diaries in clinical trials to transmit self-measured clinical parameters, and furthermore, diaries on smartphones have been reported to be convenient for users [40–42].

When applying Apps for clinical use, the issues “efficacy of treatment” and “protection of patient safety” need to be raised [43]. Apps are increasingly regarded as medical devices [25, 44], and “Conformité Européenne (CE) marking” is regarded as a prerequisite before distributing an App in health care [45]. RheumaLive had been registered as a medical device in the German Institute for Medical Documentation and Information database (registration number DE/CA21/STAR Healthcare/2015/01/A/0001, document number 00132034). In order to meet the requirements of Germany’s “Digital Healthcare Act,” proof of benefit still needs to be provided [11]. To date, no disease-specific App for RA patients meets these requirements; see the digital health application (DiGA) directory (https://diga.bfarm.de/de).

When the proof-of-concept study started, no methodology for developing health Apps for RA patients was available. Thus, RheumaLive had been developed without taking all stakeholders’ needs and requirements into account. This approach should be avoided according to the recently published EULAR points to consider for the development, evaluation, and implementation of mobile health applications [8, 26].

In general, it should be kept in mind that Apps for mobile devices are of particular (clinical) value, as these devices are common, are carried around by the patients most of the time, and usually stay with their owners [46]. For high patient acceptance of ePROMs and the “conservation of instrument measurement equivalence,” the BYOD approach has been recommended [24]. However, when Apps with PROMs are commonly used, some patients will be unfamiliar with the scores shown to them, which will require patient education [4]. A need for more educational content has recently also been identified for the next iteration step of an App developed by Kristjansdottir et al. [47].

For App use in routine care, it is necessary to take special needs (e.g., those of the elderly, or accessibility for people with higher degrees of disability) into account, and Apps need to be in line with further recently published recommendations [26]. Long-term data accessibility is of major concern in the management of chronic diseases. The rapid progress in mobile devices and operating systems requires, at a minimum, ongoing updates to permit access to user data over many years. Even if interoperability of developments is said to be ensured by the manufacturers, “new” and current standards (e.g., Fast Healthcare Interoperability Resources (FHIR)) need to be taken into account. In addition, feasibility aspects are device-, operating system-, and App-specific and can only be generalized to a certain extent.

Limitations

Our data represent data from a tertiary center; studies in larger cohorts and different clinical settings are warranted. Patients showed low and stable disease activity and good functional capacity at both investigations. Data from samples with higher disease activity and/or lower functional capacity are warranted, as active arthritis may limit the use of mobile devices.

The differences in age, disease duration, and education level in comparison to non-participants and to the average RA population captured in the national database indicate that younger, well-educated patients seemed to be attracted to and willing to accept the use of new technology in their own health management. The number of our patients may be too small, and the deviations from the broader RA population suggest caution in generalizing the results, especially for populations with very different characteristics, e.g., social or economic marginalization such as in developing countries. Although this is becoming less likely as patients become more IT-savvy and better equipped, not every RA patient might fulfill the prerequisites to use Apps (e.g., operating system too old).

Conclusion

In MiDEAR, we demonstrated feasibility and acceptance of disease surveillance in RA by the use of established ePROMs in a smartphone App. Continuous remote monitoring of disease activity, disability, and treatment efficacy via this App, in addition to occasional physician visits, seems feasible. In the future, the rapidly emerging use of digital health Apps promises to improve patients’ self-empowerment and adherence in the caring process in rheumatology. Encouraging forthcoming aspects such as the incorporation of passively generated data from mobile devices/sensors need further evaluation.

Acknowledgements

Data collection was supported by Meike Dieckert. We are grateful for the participation of patients and physicians from our clinic supporting the evaluation of the RheumaLive App.

Authors’ contributions

All authors were involved in the MiDEAR project and made substantial contributions to the conception and design of the work, the acquisition, the analysis, and the interpretation of the work. JR, CN, AB, GC, and MS: study and protocol design, study conduction, manuscript conception, manuscript writing, data acquisition, statistical data analysis and data interpretation, and manuscript review. DH: provision of the data of the NDB sample, manuscript writing, and manuscript review. RW: statistical analysis and data interpretation, manuscript review. The authors read and approved the final manuscript.

Authors’ information

Not applicable.

Funding

This Investigator Initiated Study was supported by an unrestricted grant from the UCB Pharma GmbH, Monheim, Germany. Open Access funding enabled and organized by Projekt DEAL.

Availability of data and materials

The data are available on reasonable request.

Declarations

Ethics approval and consent to participate

The proof-of-concept study was conducted in accordance with ethical principles of the Declaration of Helsinki and Good Clinical Practice guidelines and was approved by the center’s institutional ethics committee. All patients provided written informed consent.

Consent for publication

Not applicable.

Competing interests

See funding. HA, AB, DH, CN and RW declare that they have no competing interests. GC, JGR and MS received unrestricted grants from UCB Pharma. JGR received a speaker’s fee from UCB Pharma. MS was medical adviser for UCB Pharma.


References

- 1.Richter JG, Kampling C, Schneider M. Electronic patient reported outcome measures (e-PROMS) In: El Miedany Y, editor. Patient reported outcome measures in rheumatic diseases. Cham: Springer; 2016. pp. 371–388. [Google Scholar]

- 2.USA FDA. Guidance for industry patient-reported outcome measures: use in medical product development to support labeling claims. December 2009. https://www.fda.gov/downloads/drugs/guidances/ucm193282.pdf. Accessed 28 Jan 2021. [DOI] [PMC free article] [PubMed]

- 3.Chua RM, Mecchella JN, Zbehlik AJ. Improving the measurement of disease activity for patients with rheumatoid arthritis: validation of an electronic version of the routine assessment of patient index data 3. Int J Rheumatol. 2015;2015:1–4. doi: 10.1155/2015/834070. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Richter JG, Chehab G, Schneider M. Electronic health records in rheumatology: emphasis on automated scoring and additional use. Clin Exp Rheumatol. 2016;34(5 Suppl 101):S62–S68. [PubMed] [Google Scholar]

- 5.Snyder CF, Aaronson NK. Use of patient-reported outcomes in clinical practice. Lancet. 2009;374(9687):369–370. doi: 10.1016/S0140-6736(09)61400-8. [DOI] [PubMed] [Google Scholar]

- 6.Valderas JM, Kotzeva A, Espallargues M, Guyatt G, Ferrans CE, Halyard MY, Revicki DA, Symonds T, Parada A, Alonso J. The impact of measuring patient-reported outcomes in clinical practice: a systematic review of the literature. Qual Life Res. 2008;17(2):179–193. doi: 10.1007/s11136-007-9295-0. [DOI] [PubMed] [Google Scholar]

- 7.Azevedo A, de Sousa HM, Monteiro JA, Lima AR. Future perspectives of Smartphone applications for rheumatic diseases self-management. Rheumatol Int. 2015;35(3):419–431. doi: 10.1007/s00296-014-3117-9. [DOI] [PubMed] [Google Scholar]

- 8.Grainger R, Townsley H, Langlotz T, Taylor W. Patient-clinician co-design co-participation in design of an app for rheumatoid arthritis management via telehealth yields an app with high usability and acceptance. Stud Health Technol Inform. 2017;245:1223. [PubMed] [Google Scholar]

- 9.Luo D, Wang P, Lu F, Elias J, Sparks JA, Lee YC. Mobile Apps for individuals with rheumatoid arthritis: a systematic review. J Clin Rheumatol. 2019;25(3):133–141. doi: 10.1097/RHU.0000000000000800. [DOI] [PubMed] [Google Scholar]

- 10.Seppen BF, den Boer P, Wiegel J, Ter Wee MM, van der Leeden M, de Vries R, et al. Asynchronous mHealth interventions in rheumatoid arthritis: systematic scoping review. JMIR Mhealth Uhealth. 2020;8:e19260. doi: 10.2196/19260. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Gesetz für eine bessere Versorgung durch Digitalisierung und Innovation (Digitale-Versorgung-Gesetz - DVG) vom 18.12.2019. BGBl Teil I Nr. 49:2562. https://www.bgbl.de/xaver/bgbl/start.xav?startbk=Bundesanzeiger_BGBl&start=%2F%2F%2A%5B%40attr_id=%27bgbl119s2562.pdf%27%5D#_bgbl_%2F%2F*%5B%40attrid%3D%27bgbl119s2562.pdf%27%5D1618139003609

- 12. Gwaltney CJ, Shields AL, Shiffman S. Equivalence of electronic and paper-and-pencil administration of patient-reported outcome measures: a meta-analytic review. Value Health. 2008;11(2):322–333. doi: 10.1111/j.1524-4733.2007.00231.x.

- 13. Richter JG, Becker A, Koch T, Nixdorf M, Willers R, Monser R, Schacher B, Alten R, Specker C, Schneider M. Self-assessments of patients via tablet PC in routine patient care: comparison with standardised paper questionnaires. Ann Rheum Dis. 2008;67(12):1739–1741. doi: 10.1136/ard.2008.090209.

- 14. Muehlhausen W, Doll H, Quadri N, Fordham B, O’Donohoe P, Dogar N, Wild DJ. Equivalence of electronic and paper administration of patient-reported outcome measures: a systematic review and meta-analysis of studies conducted between 2007 and 2013. Health Qual Life Outcomes. 2015;13:46. doi: 10.1186/s12955-015-0362-x.

- 15. Marcano Belisario JS, Jamsek J, Huckvale K, O'Donoghue J, Morrison CP, Car J. Comparison of self-administered survey questionnaire responses collected using mobile apps versus other methods. Cochrane Database Syst Rev. 2015;7:MR000042. doi: 10.1002/14651858.MR000042.pub2.

- 16. Coons SJ, Gwaltney CJ, Hays RD, Lundy JJ, Sloan JA, Revicki DA, Lenderking WR, Cella D, Basch E; ISPOR ePRO Task Force. Recommendations on evidence needed to support measurement equivalence between electronic and paper-based patient-reported outcome (PRO) measures: ISPOR ePRO good research practices task force report. Value Health. 2009;12(4):419–429. doi: 10.1111/j.1524-4733.2008.00470.x.

- 17. Wang J, Wang Y, Wei C, Yao NA, Yuan A, Shan Y, Yuan C. Smartphone interventions for long-term health management of chronic diseases: an integrative review. Telemed J E Health. 2014;20(6):570–583. doi: 10.1089/tmj.2013.0243.

- 18. Lautenschlager J, Mau W, Kohlmann T, Raspe HH, Struve F, Bruckle W, Zeidler H. Comparative evaluation of a German version of the Health Assessment Questionnaire and the Hannover Functional Capacity Questionnaire. Z Rheumatol. 1997;56(3):144–155. doi: 10.1007/s003930050030.

- 19. Stucki G, Liang MH, Stucki S, Bruhlmann P, Michel BA. A self-administered rheumatoid arthritis disease activity index (RADAI) for epidemiologic research. Psychometric properties and correlation with parameters of disease activity. Arthritis Rheum. 1995;38(6):795–798. doi: 10.1002/art.1780380612.

- 20. Andresen J, Hülsemann JL. Rheumatoide Arthritis. In: Kuipers JG, Zeidler H, Köhler L, editors. Medal Rheumatologie. Kriterien für die Klassifikation, Diagnose, Aktivität und Prognose rheumatologischer Erkrankungen. Friedrichshafen: Wiskon; 2006. pp. 9–11.

- 21. Behrens F, Koehm M, Schwaneck EC, Schmalzing M, Gnann H, Greger G, Tony HP, Burkhardt H. Use of a “critical difference” statistical criterion improves the predictive utility of the Health Assessment Questionnaire-Disability Index score in patients with rheumatoid arthritis. BMC Rheumatol. 2019;3(1):51. doi: 10.1186/s41927-019-0095-2.

- 22. Campbell N, Ali F, Finlay AY, Salek SS. Equivalence of electronic and paper-based patient-reported outcome measures. Qual Life Res. 2015;24(8):1949–1961. doi: 10.1007/s11136-015-0937-3.

- 23. Muehlhausen W, Byrom B, Skerritt B, McCarthy M, McDowell B, Sohn J. Standards for instrument migration when implementing paper patient-reported outcome instruments electronically: recommendations from a qualitative synthesis of cognitive interview and usability studies. Value Health. 2018;21(1):41–48. doi: 10.1016/j.jval.2017.07.002.

- 24. Byrom B, Doll H, Muehlhausen W, Flood E, Cassedy C, McDowell B, Sohn J, Hogan K, Belmont R, Skerritt B, McCarthy M. Measurement equivalence of patient-reported outcome measure response scale types collected using bring your own device compared to paper and a provisioned device: results of a randomized equivalence trial. Value Health. 2018;21(5):581–589. doi: 10.1016/j.jval.2017.10.008.

- 25. Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices, amending Directive 2001/83/EC, Regulation (EC) No 178/2002 and Regulation (EC) No 1223/2009 and repealing Council Directives 90/385/EEC and 93/42/EEC.

- 26. Najm A, Nikiphorou E, Kostine M, Richez C, Pauling JD, Finckh A, Ritschl V, Prior Y, Balážová P, Stones S, Szekanecz Z, Iagnocco A, Ramiro S, Sivera F, Dougados M, Carmona L, Burmester G, Wiek D, Gossec L, Berenbaum F. EULAR points to consider for the development, evaluation and implementation of mobile health applications aiding self-management in people living with rheumatic and musculoskeletal diseases. RMD Open. 2019;5(2):e001014. doi: 10.1136/rmdopen-2019-001014.

- 27. Heiberg T, Kvien TK, Dale Ø, Mowinckel P, Aanerud GJ, Songe-Møller AB, Uhlig T, Hagen KB. Daily health status registration (patient diary) in patients with rheumatoid arthritis: a comparison between personal digital assistant and paper-pencil format. Arthritis Rheum. 2007;57(3):454–460. doi: 10.1002/art.22613.

- 28. Walker UA, Mueller RB, Jaeger VK, Theiler R, Forster A, Dufner P, Ganz F, Kyburz D. Disease activity dynamics in rheumatoid arthritis: patients’ self-assessment of disease activity via WebApp. Rheumatology (Oxford). 2017;56(10):1707–1712. doi: 10.1093/rheumatology/kex229.

- 29. Salaffi F, Di Carlo M, Carotti M, Farah S, Gutierrez M. The Psoriatic Arthritis Impact of Disease 12-item questionnaire: equivalence, reliability, validity, and feasibility of the touch-screen administration versus the paper-and-pencil version. Ther Clin Risk Manag. 2016;12:631–642. doi: 10.2147/TCRM.S101619.

- 30. de Souza S, Galloway J, Simpson C, Chura R, Dobson J, Gullick NJ, et al. Patient involvement in rheumatology outpatient service design and delivery: a case study. Health Expect. 2017;20(3):508–518. doi: 10.1111/hex.12478. Epub 2016 Jun 27.

- 31. Seppen BF, Wiegel J, L'ami MJ, Duarte Dos Santos Rico S, Catarinella FS, Turkstra F, et al. Feasibility of Self-monitoring rheumatoid arthritis with a Smartphone App: results of two mixed-methods pilot studies. JMIR Form Res. 2020;4:e20165. doi: 10.2196/20165.

- 32. Williams CA, Templin T, Mosley-Williams AD. Usability of a computer-assisted interview system for the unaided self-entry of patient data in an urban rheumatology clinic. J Am Med Inform Assoc. 2004;11(4):249–259. doi: 10.1197/jamia.M1527.

- 33. Azevedo A, Bernardes M, Fonseca J, Lima A. Smartphone application for rheumatoid arthritis self-management: cross-sectional study revealed the usefulness, willingness to use and patients’ needs. Rheumatol Int. 2015;35(10):1675–1685. doi: 10.1007/s00296-015-3270-9.

- 34. Geuens J, Geurts L, Swinnen TW, Westhovens R, Vanden AV. Mobile health features supporting self-management behavior in patients with chronic arthritis: mixed-methods approach on patient preferences. JMIR Mhealth Uhealth. 2019;7(3):e12535. doi: 10.2196/12535.

- 35. Najm A, Lempp H, Gossec L, Berenbaum F, Nikiphorou E. Needs, experiences, and views of people with rheumatic and musculoskeletal diseases on self-management mobile health apps: mixed methods study. JMIR Mhealth Uhealth. 2020;8(4):e14351. doi: 10.2196/14351.

- 36. Bischoff-Ferrari HA. Validation and patient acceptance of a computer touch screen version of the WOMAC 3.1 osteoarthritis index. Ann Rheum Dis. 2005;64(1):80–84. doi: 10.1136/ard.2003.019307.

- 37. Bennett AV, Jensen RE, Basch E. Electronic patient-reported outcome systems in oncology clinical practice. CA Cancer J Clin. 2012;62:336–347. doi: 10.3322/caac.21150.

- 38. Chung AE, Basch EM. Incorporating the patient’s voice into electronic health records through patient-reported outcomes as the “review of systems”. J Am Med Inform Assoc. 2015;22(4):914–916. doi: 10.1093/jamia/ocu007.

- 39. Jensen RE, Rothrock NE, DeWitt EM, Spiegel B, Tucker CA, Crane HM, et al. The role of technical advances in the adoption and integration of patient-reported outcomes in clinical care. Med Care. 2015;53(2):153–159. doi: 10.1097/MLR.0000000000000289.

- 40. Sternfeld B, Jiang S, Picchi T, Chasan-Taber L, Ainsworth B, Quesenberry CP. Evaluation of a cell phone–based physical activity diary. Med Sci Sports Exerc. 2012;44(3):487–495. doi: 10.1249/MSS.0b013e3182325f45.

- 41. Bastyr EJ, Zhang S, Mou J, Hackett AP, Raymond SA, Chang AM. Performance of an electronic diary system for intensive insulin management in global diabetes clinical trials. Diabetes Technol Ther. 2015;17(8):571–579. doi: 10.1089/dia.2014.0407.

- 42. Domańska B, Vansant S, Mountian I. A companion app to support rheumatology patients treated with Certolizumab Pegol: results from a usability study. JMIR Form Res. 2020;4(7):e17373. doi: 10.2196/17373.

- 43. Hirsch IB, Parkin CG. Unknown safety and efficacy of smartphone bolus calculator apps puts patients at risk for severe adverse outcomes. J Diabetes Sci Technol. 2016;10(4):977–980. doi: 10.1177/1932296815626457.

- 44. Bundesinstitut für Arzneimittel und Medizinprodukte. Orientierungshilfe Medical Apps. 2015. https://www.bfarm.de/DE/Medizinprodukte/Abgrenzung/MedicalApps/_node.html. Accessed 28 Jan 2021.

- 45. Directive 2007/47/EC of the European Parliament and of the Council of 5 September 2007 amending Council Directive 90/385/EEC on the approximation of the laws of the Member States relating to active implantable medical devices, Council Directive 93/42/EEC concerning medical devices and Directive 98/8/EC concerning the placing of biocidal products on the market (Text with EEA relevance).

- 46. Klasnja P, Pratt W. Healthcare in the pocket: mapping the space of mobile-phone health interventions. J Biomed Inform. 2012;45(1):184–198. doi: 10.1016/j.jbi.2011.08.017.

- 47. Kristjansdottir OB, Børøsund E, Westeng M, Ruland C, Stenberg U, Zangi HA, Stange K, Mirkovic J. Mobile app to help people with chronic illness reflect on their strengths: formative evaluation and usability testing. JMIR Form Res. 2020;4(3):e16831. doi: 10.2196/16831.

Associated Data

Data Availability Statement

The data are available on reasonable request.