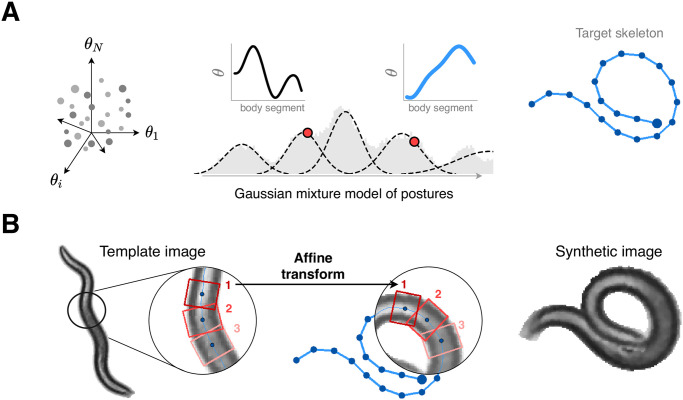

Fig 2. We combine a generative model of worm posture with textures extracted from real video to create realistic yet synthetic images for a wide variety of naturalistic postures, including coils, thus avoiding the need for manually annotated training data.

(A) We model the high-dimensional space of worm posture (left) by Gaussian mixtures (middle) constructed from a core set of previously analyzed worm shapes [9]. To each generated posture we add a global orientation (chosen uniformly between 0 and 2π), and we randomly assign the head to one end of the centerline. (right) We use the resulting centerline (angle coordinates) to construct the posture skeleton (pixel coordinates). (B) We warp small rectangular pixel patches along the body of a real template image (left) to the target centerline (middle), producing a synthetic worm image (right). Overlapping pixels are alpha-blended to connect the patches seamlessly. Unwanted pixels protruding from the target worm body are masked and the background pixels are set to a uniform color. Finally, the image is cleaned of artifacts with a median blur filter.
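The post-processing in panel (A) can be illustrated with a minimal sketch, which is not the authors' implementation. It assumes that the angle coordinates are tangent angles of equal-length segments, so that the skeleton is obtained by cumulative summation. The function names `angles_to_skeleton` and `randomize_pose`, the `segment_length` and `origin` parameters, and the 50% head-swap probability are hypothetical choices made for illustration.

```python
import math
import random


def angles_to_skeleton(angles, segment_length=1.0, origin=(0.0, 0.0)):
    """Integrate tangent angles into (x, y) points along the centerline.

    Assumption: each angle is the direction of one equal-length segment.
    """
    x, y = origin
    points = [(x, y)]
    for theta in angles:
        x += segment_length * math.cos(theta)
        y += segment_length * math.sin(theta)
        points.append((x, y))
    return points


def randomize_pose(angles, rng):
    """Add a uniform global orientation and randomly pick the head end."""
    two_pi = 2.0 * math.pi
    # Global orientation drawn uniformly between 0 and 2*pi.
    offset = rng.uniform(0.0, two_pi)
    posed = [(theta + offset) % two_pi for theta in angles]
    if rng.random() < 0.5:
        # Swap head and tail: traverse the same curve from the other end,
        # which reverses the segment order and flips each tangent by pi.
        posed = [(theta + math.pi) % two_pi for theta in reversed(posed)]
    return posed


if __name__ == "__main__":
    rng = random.Random(0)
    # A gently curved toy posture, not drawn from the Gaussian mixture model.
    toy_angles = [0.05 * i for i in range(10)]
    skeleton = angles_to_skeleton(randomize_pose(toy_angles, rng), segment_length=2.0)
    print(len(skeleton))
```

In this sketch the skeleton has one more point than there are angles, and a seeded `random.Random` instance is passed in so that generated postures are reproducible.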