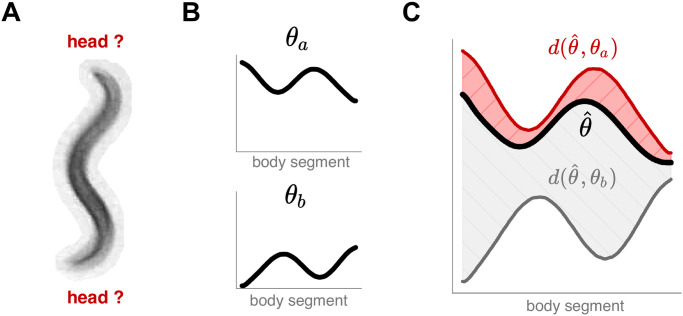

Fig 3. We train a convolutional network to associate worm images with an unoriented centerline, which overcomes the head-tail ambiguities that commonly arise from worm behaviors and imaging environments.

(A) An example image with a seemingly symmetrical worm body. (B) We associate each training image with two possible centerline geometries, resulting in two equivalent labels: θa and θb = flip(θa) + π, corresponding to a reversed head/tail orientation. (C) We compare the output centerline θ to each training centerline through the root mean squared error of the angle difference d(θ1, θ2) (Eq 2) and assign the overall error as the smaller of the two, min(d(θ, θa), d(θ, θb)).
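
The labeling and error described in panels (B) and (C) can be illustrated with a minimal NumPy sketch. It rests on several assumptions not stated in the caption: the centerline is a 1D array of tangent angles in radians, `flip` reverses the order of those angles, the angle difference in Eq 2 is wrapped to (-π, π] before the root mean square is taken, and the overall error is the smaller of the two distances. The function names `flip_label`, `angle_rmse`, and `unoriented_error` are hypothetical and chosen only for illustration.

```python
import numpy as np


def flip_label(theta_a):
    """Equivalent label for the reversed head/tail orientation.

    Assumption: flip() reverses the order of the angles along the body,
    and adding pi turns each tangent direction around.
    """
    return np.flip(theta_a) + np.pi


def angle_rmse(theta_1, theta_2):
    """Root mean squared angle difference d(theta_1, theta_2).

    Assumption: the difference is wrapped to (-pi, pi] so that angles
    differing by a multiple of 2*pi count as identical.
    """
    diff = np.arctan2(np.sin(theta_1 - theta_2), np.cos(theta_1 - theta_2))
    return np.sqrt(np.mean(diff ** 2))


def unoriented_error(theta_pred, theta_a):
    """Error against an unoriented centerline: the smaller of the two distances."""
    theta_b = flip_label(theta_a)
    return min(angle_rmse(theta_pred, theta_a), angle_rmse(theta_pred, theta_b))
```

With this form, a prediction that matches either orientation of the label incurs essentially zero error, so the network is not penalized for choosing the "wrong" end as the head when the image itself is ambiguous.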