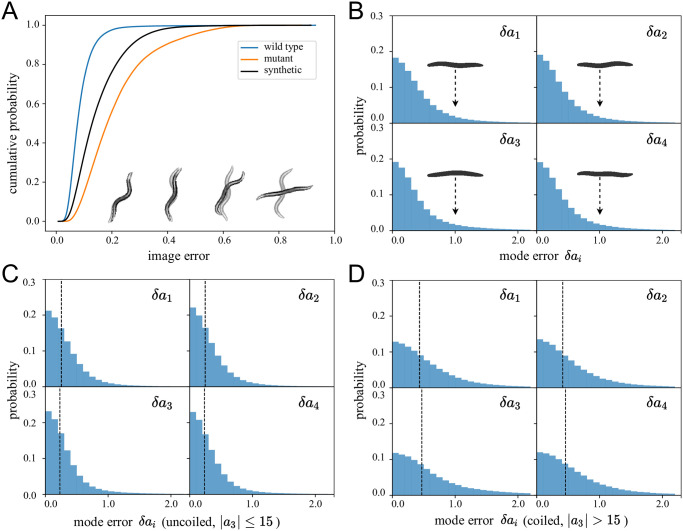

Fig 5. Quantifying the error in pose estimation.

(A) We show the cumulative image error of predicted images for different datasets. We predict 24 videos totaling over 600k frames from the N2 wild-type and AQ2934 mutant datasets, compute the image error between the original image and each of the two possible predictions, and keep the lower of the two values. For these error calculations we bypass the postprocessing step, so no result is discarded. For interpretability, we also show representative pairs of worm images at different error values; the overwhelming majority of predictions have barely discernible image errors. On average, the N2 predictions have a lower image error than the mutant predictions, because the mutants exhibit much more coiled and challenging postures. We also generate 600k new synthetic images (using N2 as templates) that were not seen during training and predict them in the same way. The image error for the synthetic images, which generally include a higher fraction of complex, coiled shapes, is on average higher than for N2 but lower than for the mutant. (B) Our synthetic training approach also allows a direct comparison between input and output centerlines, quantified here through the difference in eigenworm mode values. As with the images, these differences are small: even in the large-error tail of the distribution, the “error worms” (worm shapes representing mode values with $\delta a_i = 1.0$) are essentially flat. The median mode errors are . (C, D) We additionally show the mode errors for synthetic images separated into (C) uncoiled ($a_3 \le 15$) and (D) coiled ($a_3 > 15$) shapes. Dashed lines denote median error values of and . The errors are small in all cases.
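The error bookkeeping described in this caption (keep the lower error of two candidate predictions, compute per-mode differences $\delta a_i$, split by $a_3$ and take medians) can be sketched as below. This is a minimal sketch under assumptions the caption does not state: images are equally sized grayscale grids (lists of rows), the image error is the mean absolute pixel difference, mode vectors are ordered $a_1, a_2, a_3, \dots$, the coiled/uncoiled split uses the input (true) $a_3$, and mode errors are pooled across modes before taking the median. All function names are hypothetical.

```python
from statistics import median

def image_error(original, predicted):
    """Hypothetical image error: mean absolute pixel difference (assumed metric)."""
    if len(original) != len(predicted) or any(
        len(row_o) != len(row_p) for row_o, row_p in zip(original, predicted)
    ):
        raise ValueError("images must have the same shape")
    diffs = [
        abs(po - pp)
        for row_o, row_p in zip(original, predicted)
        for po, pp in zip(row_o, row_p)
    ]
    return sum(diffs) / len(diffs)

def best_image_error(original, candidates):
    """Score each candidate prediction and keep the lowest error."""
    return min(image_error(original, c) for c in candidates)

def mode_errors(true_modes, predicted_modes):
    """Per-mode absolute differences: delta a_i = |a_i(input) - a_i(output)|."""
    return [abs(t - p) for t, p in zip(true_modes, predicted_modes)]

def median_errors_by_coiling(samples, threshold=15.0):
    """Split (true_modes, predicted_modes) pairs by the input a_3 and return
    the median pooled mode error of each group.

    Assumes a_3 sits at index 2 (modes ordered a_1, a_2, a_3, ...).
    """
    groups = {"uncoiled": [], "coiled": []}
    for true_modes, pred_modes in samples:
        key = "coiled" if true_modes[2] > threshold else "uncoiled"
        groups[key].extend(mode_errors(true_modes, pred_modes))
    # Skip empty groups so median() is never called on an empty list.
    return {name: median(errs) for name, errs in groups.items() if errs}
```

For example, `best_image_error([[0, 0], [1, 1]], [[[1, 1], [1, 1]], [[0, 0], [1, 0]]])` scores the candidates at 0.5 and 0.25 and keeps 0.25. Pooling across modes is one choice among several; reporting a separate median per mode $a_i$ would be an equally reasonable variant.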