Abstract

Under the prevailing circumstances of the global pandemic of COVID-19, early diagnosis and accurate detection of COVID-19 through tests/screening and, subsequently, isolation of the infected people would be a proactive measure. Artificial intelligence (AI) based solutions, using Convolutional Neural Network (CNN) and exploiting the Deep Learning model’s diagnostic capabilities, have been studied in this paper. Transfer Learning approach, based on VGG16 and ResNet50 architectures, has been used to develop an algorithm to detect COVID-19 from CT scan images consisting of Healthy (Normal), COVID-19, and Pneumonia categories. This paper adopts data augmentation and fine-tuning techniques to improve and optimize the VGG16 and ResNet50 model. Further, stratified 5-fold cross-validation has been conducted to test the robustness and effectiveness of the model. The proposed model performs exceptionally well in case of binary classification (COVID-19 vs. Normal) with an average classification accuracy of more than 99% in both VGG16 and ResNet50 based models. In multiclass classification (COVID-19 vs. Normal vs. Pneumonia), the proposed model achieves an average classification accuracy of 86.74% and 88.52% using VGG16 and ResNet50 architectures as baseline, respectively. Experimental results show that the proposed model achieves superior performance and can be used for automated detection of COVID-19 from CT scans.

Keywords: COVID-19, Convolutional Neural Network, Transfer learning, VGG16, ResNet50, CT scan images

1. Introduction

The viral disease COVID-19, which broke out in Wuhan, China, has spread worldwide as a global pandemic. As of 09 Jan 2021, more than 87.5 million people have been infected and 1.9 million have died worldwide [1]. COVID-19 is one of the largest single events in human history in terms of its global impact. The pandemic has not only disrupted people's routine activities but has also caused mental and psychological stress, apart from substantial financial loss, economic stagnation, and health liabilities [2], [3]. So far, no proven vaccine has been developed for the disease, though concerted efforts are in progress. Asymptomatic COVID-19 transmission has also been reported, though such cases are less contagious than symptomatic ones [4]. These circumstances demand regular check-ups of a large number of people, which may lead to an extra burden. Since COVID-19 is a viral infection, early identification and isolation of patients is vital in breaking the chain of its spread and in handling the present situation efficiently.

Presently, Reverse Transcription–Polymerase Chain Reaction (RT-PCR) and rapid tests are being conducted for early detection of the disease [5]. However, the sensitivity shown by these tests is not optimal. There are many false-positive and false-negative results, which defeat the very purpose of early identification and isolation of patients [6], [7]. This further delays the right decision and suitable action. The shortage of test kits, and the difficulty of making enough of them available in time for mass testing, is also a significant concern in many countries [8]. Several studies have been performed on predicting the spread and continuously monitoring the COVID-19 pandemic [9], [10], [11], [12].

X-ray, computerized tomography (CT) scan, and ultrasound screening tests are also being used by radiologists to examine patients for COVID-19 infection. In evaluating CT scan images, specific and consistent patterns/features have been identified in almost all COVID-19 patients. Two significant patterns have been identified as prominent signs of the infection: ground glass opacities in the early stage, and pulmonary embolism demonstrating linear consolidation in the later stages [13], [14]. It is important to note that COVID-19 develops symptoms similar to Pneumonia, as both are viral diseases affecting the lungs and leading to breathing problems. Therefore, it is very challenging to differentiate between COVID-19 and Pneumonia. In pandemic scenarios, manual evaluation of images takes much time and needs intensive human resources. The need of the hour is therefore an automatic system/tool for early and precise detection of COVID-19 to control its spread.

Machine learning, deep learning, and AI-based approaches have been used for the detection and classification of various diseases [15], [16], [17]. AI-based solutions can therefore help by automatically learning features/patterns from CT scan images, augmenting the capabilities of radiologists in decision-making and in managing the situation more effectively. Deep Learning models [18] based on CNN are highly effective and have shown promising results in various medical imaging applications [19], [20], [21]. CNN has made possible the development of deep neural network architectures consisting of several intermediate layers. In contrast to traditional Machine Learning algorithms, where input features must be fed to the algorithm, CNN learns features automatically. Technology improvements in large-scale data handling and high-speed Graphics Processing Units (GPUs) have acted as a catalyst in improving performance. CNN-based deep learning models have shown promising results across diverse fields and are fundamental to almost all image recognition tasks. In a CNN, low-level features are learned in the initial layers, and higher-level or fine-grained features are learned in the deeper layers. Fundamentally, a CNN-based model consists of two levels. The first level is the feature extraction stage, a cascade of several convolution, activation, and MaxPooling layers, which learns unique and specific features from an input. The second level consists of fully connected layers that perform the actual classification task [22].

Developing a deep learning algorithm from scratch requires a high-speed GPU for execution, a large number of input images for training, and time to fine-tune and optimize the model parameters for the specific task. Therefore, in this paper, transfer learning-based architectures have been used as base models to reduce complexity, and have then been optimized and fine-tuned to improve the performance of the COVID-19 detection algorithm. There are a number of easily accessible top-performing models, namely VGG16/VGG19 [23], ResNet50 [24], Inception [25], Xception [26], InceptionResNet [27], and DenseNet [28], which can be integrated into a new image classification task. The weights of these models, which have been trained on millions of images for similar image recognition tasks, can be easily loaded into the algorithm. Based on requirements, the weights of the model can be re-trained, or new convolution layers can be added to increase the model capacity. Further, model performance can be improved by incorporating BatchNormalization, regularization, and tuning of the neural network Hyperparameters.

Since the spread of COVID-19, several deep learning-based models have been proposed to detect the disease. Models have been implemented using X-ray [29], [30], [31], [32], [33], [34] or CT scan [35], [36] images. Since the pandemic is ongoing and spreading at a very high rate, there is a lack of good-quality labeled radiographic images in numbers large enough to train a neural network. As a result, the datasets used by almost all proposed models are highly unbalanced, owing to the paucity of COVID-19 images. It is also pertinent to mention that developing a deep learning model from scratch needs many resources and concerted efforts to optimize it. Therefore, most of the proposed models available in the literature have been built upon transfer learning-based architectures. COVID-19 detection models for three classes, consisting of Coronavirus, Bacterial Pneumonia, and Normal categories, have been proposed in [29], [37], [35], [38]. In [29], the proposed DarkCOVIDNet model, based on the Darknet-19 model, has been evaluated using X-ray images and 5-fold cross-validation. The authors in [37] have introduced the COVID-Net network architecture, and [35] has studied various transfer learning-based models, including ResNet50 and VGG16, using X-ray images and single-fold cross-validation. A similar analysis has been carried out in [38] using X-ray, CT scan, and ultrasound images with various transfer learning models.

A hybrid approach based on the integration of artificial neural networks and fuzzy logic has been described in [39] for the diagnosis of pulmonary diseases using chest X-ray images. The authors perform the classification task by feeding grayscale histogram features, grey-level co-occurrence matrix, texture-based features, and local binary pattern texture features into the artificial neural network. The same authors have undertaken the classification of COVID-19 using the same texture features and neural networks in [40]. A seven-layer convolutional neural network-based COVID-19 diagnosis model, using 14-way data augmentation and stochastic pooling, has been proposed in [41]. In [42], six different pretrained models have been experimented with by making the number of layers adaptive and adding two new fully connected layers to each model. The authors have fused the features from the best two pretrained models using discriminant correlation analysis. The authors in [43] have used different pretrained models as feature extractors, combined with machine learning algorithms, to perform automatic detection using chest X-ray images. Automated detection of COVID-19 using a CNN-based U-Net architecture has been proposed in [44]; it consists of a sequence of convolutional blocks where the output of a block is concatenated with the output from a previous block in a specific pattern before being fed to the next block. A model based on Multiple Kernels-Extreme Learning Machine and a neural network using chest CT images has been proposed in [45] for COVID-19 detection, based on the DenseNet201 transfer learning architecture. In this study, the final output class is predicted by majority voting over the outputs from three different activation functions. The COVID-19 detection model proposed in [46] uses ShuffleNet and SqueezeNet architectures as feature extractors and a Multiclass Support Vector Machine as the classifier.

In this study, extensive experiments have been performed through the proposed model and the main contributions are as follows:

1. Transfer learning-based architectures (VGG16 and ResNet50), originally developed on the ImageNet dataset, have been studied and experimented with to detect COVID-19 from CT scan images.

2. The dataset has been prepared by collecting three different categories of images, Normal, Pneumonia, and COVID-19, from authentic websites [47], [48], [49].

3. Several performance improvement techniques and data augmentation have been incorporated in the proposed model to improve the performance of the standard VGG16 and ResNet50 models.

4. To make the standard pretrained models more specific and relevant for COVID-19 detection, the convolutional blocks of the VGG16 and ResNet50 architectures have been re-trained.

5. A new convolutional block consisting of Convolutional layer, BatchNormalization, MaxPooling, and Dropout has been incorporated into the proposed model to minimize overfitting and hence the generalization error.

The rest of the paper is organized as follows. Section 2 discusses the details of dataset preparation, the proposed neural network architecture, data augmentation, and the performance improvements incorporated into the baseline VGG16 and ResNet50 models. Section 3 presents the discussion and analysis of the results obtained from the experiments. Finally, Section 4 concludes the paper and proposes some future scope.

2. Experimental design of COVID-19 detection model

2.1. Dataset

The major challenge in training and validating the proposed model is the availability of authentic, labeled, and class-balanced COVID-19 CT scan images. Most of the available open-source datasets are either unorganized or highly unbalanced, which would significantly affect the learning and prediction capabilities of deep learning models. Moreover, the number of available COVID-19 CT scan images is small and insufficient. Therefore, for experimental purposes, the dataset is obtained by downloading CT scan images from authentic websites [47], [48], [49]. From these images, a class-balanced dataset consisting of 400 images each of the COVID-19 and Normal categories is prepared by performing the required pre-processing, which consists of resizing and conversion to a common image format (PNG). Further, since COVID-19 has symptoms similar to Pneumonia and both are viral diseases, a new dataset comprising three categories of images, COVID-19, Pneumonia, and Normal (Healthy), is prepared to assess the robustness and effectiveness of the proposed model. Two hundred fifty images exhibiting Pneumonia have been downloaded from the website [49] to perform the 3-class classification task. All input images are resized to 224x224 pixels.

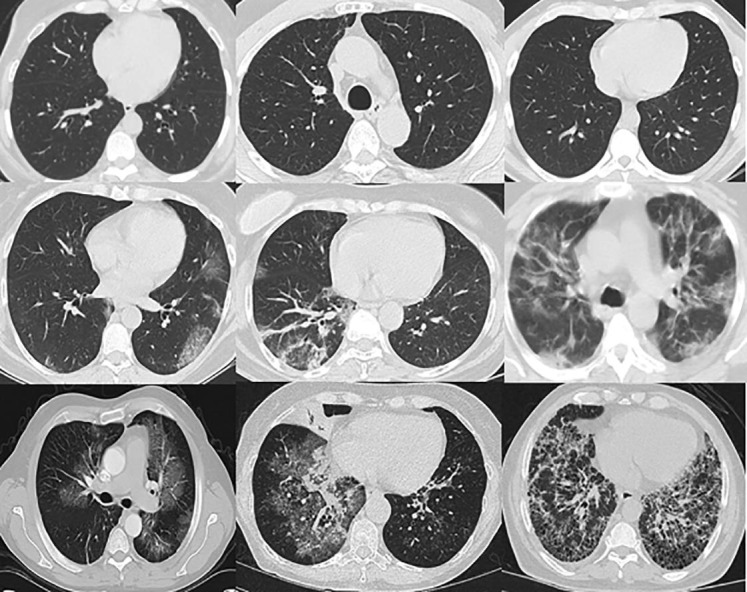

Sample images from each class are shown in Fig. 1. The complete dataset is divided in a ratio of 80:20 for training and testing of the neural network. The training dataset has been further divided in a ratio of 80:20 for model training and validation. Detailed information about the training and testing samples is listed in Table 1.
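As a rough check on the split sizes, the two successive 80:20 splits can be worked through numerically. The sketch below assumes the ratios are applied exactly, that the binary dataset has 800 images (400 per class), and that the 3-class dataset has 1,050 images (after adding the 250 Pneumonia images). The function name `split_counts` is illustrative only, and the authoritative counts remain those of Table 1.

```python
def split_counts(total, test_frac=0.2, val_frac=0.2):
    """Return (train, validation, test) counts for two successive splits."""
    test = round(total * test_frac)        # first split: hold out the test set
    remaining = total - test
    val = round(remaining * val_frac)      # second split: validation from the rest
    train = remaining - val
    return train, val, test


# Dataset sizes assumed from the per-class counts given in the text.
binary = split_counts(400 + 400)
multiclass = split_counts(400 + 400 + 250)

print(binary)      # (512, 128, 160)
print(multiclass)  # (672, 168, 210)
```

Under these assumptions, only about 64% of the images are used for weight updates, which is one reason data augmentation matters for a dataset of this size.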

Fig. 1.

Sample CT scan images used for training and evaluation of the proposed COVID-19 detection model. First row: Normal; second row: COVID-19, third row: Pneumonia.

Table 1.

Details of number of images used for training, validation and testing of the proposed COVID-19 detection model.

2.2. Proposed deep learning architecture

In this section, the standard VGG16 and ResNet50 models are described as baseline models, and various performance improvement techniques are discussed. These techniques have been carefully integrated with the VGG16 and ResNet50 models to obtain the proposed deep learning models for detecting COVID-19 from CT scan images.

2.2.1. VGG16 and ResNet50 Model

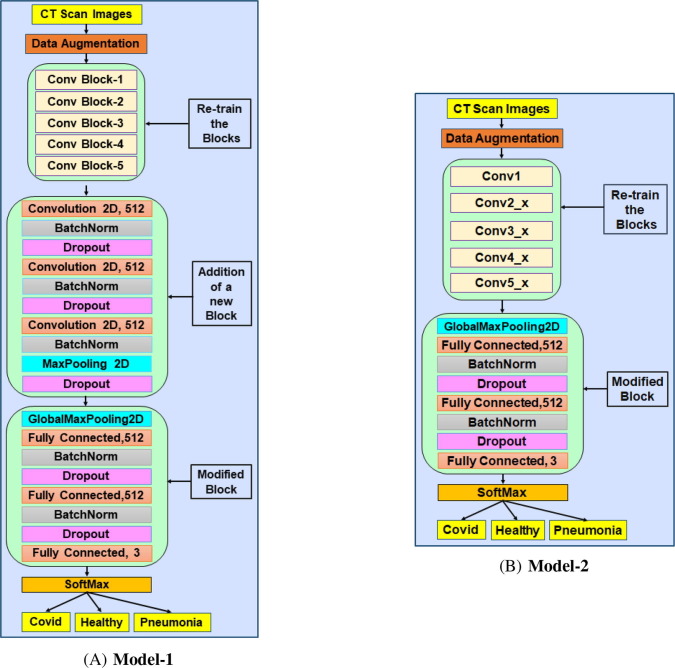

The VGG16 model was the winner of the ILSVRC-2014 annual computer vision competition and was developed by the Visual Geometry Group (VGG) at Oxford. It has been trained over a dataset of 14 million images corresponding to 1000 categories. It consists of 5 convolution blocks (Conv Block-1 to Conv Block-5) and Fully Connected layers, as shown in Fig. 2, and details of each block are shown in Table 2. It is pertinent to note that BatchNormalization and Dropout techniques were not incorporated in the original VGG16 model. The model is flexible, simple to implement, and still very powerful in performance. Further, as an improvement on VGG16, the ResNet50 architecture was proposed, which was the winner of several tracks in the ILSVRC & COCO 2015 competitions. As the depth of deep learning models increases, a vanishing gradient problem has been observed, causing accuracy saturation and degradation in subsequent stages. The ResNet50 architecture addresses this problem by introducing a deep residual learning framework that incorporates shortcut connections. We observe that ResNet50 gains accuracy from increased depth with lower complexity than VGG nets. The details of the layers of the ResNet50 model are shown in Table 2 and Fig. 2.
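For reference, a shortcut connection can be written in the standard residual form used in the ResNet literature. The notation is not taken from this paper: $x$ is the block input, $\mathcal{F}$ is the mapping learned by the stacked layers with weights $W_i$, and $y$ is the block output.

```latex
y = \mathcal{F}(x, \{W_i\}) + x,
\qquad
\frac{\partial y}{\partial x} = \frac{\partial \mathcal{F}}{\partial x} + I
```

Because of the identity term $I$, the gradient has a direct path through every block even when $\partial \mathcal{F} / \partial x$ is small, which is how shortcut connections ease the vanishing gradient problem in deep networks.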

Fig. 2.

Process flow architecture of the proposed neural network models: (A) Model-1, based on VGG16 architecture; (B) Model-2, based on ResNet50 architecture.

Table 2.

Details of convolutional building blocks: VGG16 architecture (Left) and ResNet50 architecture (Right).

| Layer Name | Output Size | Filter Details [size, numbers] |
|---|---|---|
| MaxPooling | | |
| MaxPooling | | |
| MaxPooling | | |
| MaxPooling | | |
| MaxPooling | | |
| FC | 1000 | , |
| Softmax | | |
| , 64, stride 2 | | |
| MaxPool, stride 2 | | |
| FC | 1000 | AveragePool, FC-1000, Softmax |

2.2.2. Data augmentation and fine-tuning

Data augmentation and various performance improvement techniques have been implemented to enhance the learning capabilities of the baseline models. Details are enumerated as follows:

- Data Augmentation: Data augmentation has been applied to improve the model's ability to learn diverse features by creating a larger number of distinct images from the training dataset. A deep learning model generalizes better to new images when it is trained on a large training dataset. Data augmentation introduces random variations in the dataset at each iteration of the optimization process. It has been applied carefully to augment the dataset while preserving image quality. The augmentation techniques applied to the training dataset include horizontal flip, vertical flip, rotation, width/height shift, zoom, and shear.

- Re-train the weights of VGG16 and ResNet50 Model: VGG16 and ResNet50 were originally developed to classify millions of images into thousands of categories. The models' convolution blocks have been re-trained so that the weights become specific to the detection of COVID-19.

- Fine-Tuning of the Model: Hyperparameters of the model have been fine-tuned over several iterations to achieve optimal performance. The fine-tuning techniques incorporated into the proposed models are summarized as follows:

  1. Number of Layers: Different numbers of intermediate layers and nodes have been tried in the proposed model. It has been observed that too few layers underfit, whereas too many increase model complexity because of the larger number of weights to be learned, take longer to converge, and degrade performance. Suitable values were set empirically to achieve performance improvement.

  2. Learning Rate: The learning rate is one of the most vital parameters and needs careful selection. It has been observed through experiments that too large a learning rate leads to quick but sub-optimal convergence of the optimization process, while too small a value causes the learning process to get stuck. An optimal value of the learning rate has been deduced analytically to obtain optimal performance.

  3. Batchsize: Batchsize is the number of training samples taken together to estimate the error gradient of the optimization algorithm. Experimentally, it has been noted that Batchsize affects the learning behavior and speed of the model. A suitable value of Batchsize is chosen empirically.

  4. BatchNormalization: Improved stability of the neural network has been achieved by incorporating BatchNormalization after every convolutional and fully connected layer. It reduces the internal covariate shift between layers by normalizing the input to a layer [50] while training a neural network.

  5. Regularization: For the given training dataset, the VGG16 and ResNet50 models showed a tendency to overfit and perform sub-optimally. Overfitting has been mitigated, and the generalization of the proposed model improved, by incorporating regularization. Two popular regularization methods, Dropout [51] and Early Stopping, have been implemented in this paper.

  6. Optimization Algorithms: Various optimization algorithms, viz. SGD, Adam, and RMSprop, have been analysed to assess their effectiveness in the classification task.
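To make the Early Stopping rule concrete, the following is a minimal sketch of patience-based stopping. It assumes the monitored quantity is the validation loss and that training halts once a given number of consecutive epochs pass without improvement; the function name and the loss values are invented for illustration and are not taken from the experiments.

```python
def early_stopping_epoch(val_losses, patience):
    """Return the 1-based epoch at which training stops, or None if it never stops.

    Training stops once `patience` consecutive epochs pass without the
    validation loss improving on the best value seen so far.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Toy validation-loss history (made-up numbers).
history = [0.90, 0.70, 0.60, 0.61, 0.62, 0.59, 0.60, 0.61, 0.63]
print(early_stopping_epoch(history, patience=3))  # 9
print(early_stopping_epoch(history, patience=5))  # None
```

A larger patience tolerates longer plateaus before stopping, at the cost of extra training epochs.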

2.2.3. Proposed model

Initially, the performance of the baseline VGG16 and ResNet50 models was analysed. It was observed that the validation learning curves did not converge to the training learning curves and showed random variations. The baseline models also performed poorly on the test data. Based on these observations, it was inferred that the models tend to overfit and need fine-tuning of Hyperparameters. To further improve the performance, the following modifications have been incorporated in the baseline models:

1. Weights of the convolution blocks of VGG16 and ResNet50 are re-trained so that the proposed model is more suitable and efficient for the specific classification task.

2. An additional convolution block consisting of Convolutional, BatchNormalization, MaxPooling, and Dropout layers is inserted before the fully connected layer in the VGG16 architecture. This block is primarily used to mitigate overfitting of the model (through BatchNormalization and Dropout) and also to assist in learning fine-order features (through the convolutional layers).

3. BatchNormalization and Dropout are incorporated in the fully connected block of VGG16. Similar changes are also made in ResNet50, in addition to the incorporation of two more Fully Connected layers.

The process flow architecture of the proposed model based on VGG16 and ResNet50 architecture is shown in Fig. 2.
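The modifications listed above for the VGG16-based model can be sketched in Keras roughly as follows. This is an illustrative sketch and not the authors' implementation: the number of filters, the dense layer width, and the single dropout rate in `MODEL1_CONFIG` are hypothetical placeholders, since the text does not state them. The learning rate and momentum are taken from Table 3, which lists both 0.8 and 0.7 as momentum for Model1. One-hot labels are assumed for the loss. TensorFlow is imported inside the function so the configuration can be inspected without it.

```python
# Hypothetical layer sizes; only input size, learning rate and momentum
# come from the paper (Section 2.1 and Table 3).
MODEL1_CONFIG = {
    "input_shape": (224, 224, 3),
    "extra_conv_filters": 256,   # assumption
    "dense_units": 256,          # assumption
    "dropout": 0.5,              # assumption within the 0.2-0.5 range of Table 3
    "learning_rate": 7e-5,
    "momentum": 0.8,
}


def build_model1(num_classes, config=None):
    """Build a VGG16-based classifier with an extra conv block (sketch)."""
    from tensorflow.keras import layers, models, optimizers
    from tensorflow.keras.applications import VGG16

    config = config or MODEL1_CONFIG
    base = VGG16(weights="imagenet", include_top=False,
                 input_shape=config["input_shape"])
    base.trainable = True  # re-train the pretrained convolution blocks

    model = models.Sequential([
        base,
        # Additional block: Conv -> BatchNormalization -> MaxPooling -> Dropout
        layers.Conv2D(config["extra_conv_filters"], 3,
                      padding="same", activation="relu"),
        layers.BatchNormalization(),
        layers.MaxPooling2D(pool_size=2),
        layers.Dropout(config["dropout"]),
        # Fully connected block with BatchNormalization and Dropout
        layers.Flatten(),
        layers.Dense(config["dense_units"], activation="relu"),
        layers.BatchNormalization(),
        layers.Dropout(config["dropout"]),
        layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer=optimizers.SGD(learning_rate=config["learning_rate"],
                                 momentum=config["momentum"]),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_model1(2)` or `build_model1(3)` would give the binary and 3-class variants; the first call downloads the ImageNet weights.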

2.2.4. Experimental parameters

The proposed deep learning model has been trained and evaluated using Keras [52] library on Google Colab. The Hyperparameter values have been tuned optimally in multiple iterations while training the model. Details of the final experimental parameters are tabulated in Table 3 .

Table 3.

Details of Hyperparameters (Left) and data augmentation (Right) used in the proposed COVID-19 detection model.

| Hyperparameters | Model1 | Model2 |
|---|---|---|
| Learning rate | 0.00007 | 0.00003 |
| Momentum | 0.8 | 0.8 |
| Batchsize | 32 | 16 |
| Epochs | 500 | 350 |
| Patience (Early Stopping) | 100 | 100 |
| Dropout | 0.2–0.5 | 0.5 |
| Optimizer | SGD | SGD |
| Momentum SGD | 0.7 | 0.8 |
| Learning rate decay | epoch/iteration | epoch/iteration |
| **Data Augmentation** | | |
| Re-scaling | 1/255 | 1/255 |
| Horizontal flip | Yes | Yes |
| Vertical flip | Yes | Yes |
| Rotation range | 45° | 45° |
| Width shift range | 20% | 20% |
| Height shift range | 20% | 20% |
| Shear range | 20% | 20% |
| Zoom range | 20% | 20% |
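The data augmentation settings of Table 3 could be expressed with the Keras `ImageDataGenerator` roughly as below. The mapping of the table's percentages to Keras arguments is an assumption rather than something the paper states. In particular, Keras interprets `shear_range` as a shear angle in degrees, so `0.2` is only one plausible reading of "20%". The helper `make_generators` is a hypothetical name, and applying only re-scaling to validation and test images is likewise an assumption.

```python
# Values mirror Table 3; how the percentages map to arguments is an assumption.
AUGMENTATION = {
    "rescale": 1.0 / 255,
    "horizontal_flip": True,
    "vertical_flip": True,
    "rotation_range": 45,        # degrees
    "width_shift_range": 0.2,    # fraction of image width
    "height_shift_range": 0.2,   # fraction of image height
    "shear_range": 0.2,          # Keras treats this as a shear angle in degrees
    "zoom_range": 0.2,           # zoom factor sampled from [0.8, 1.2]
}


def make_generators():
    """Return (training generator, evaluation generator)."""
    # Imported lazily so the settings can be inspected without TensorFlow.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    train_gen = ImageDataGenerator(**AUGMENTATION)
    # Validation and test images are only re-scaled, never augmented.
    eval_gen = ImageDataGenerator(rescale=AUGMENTATION["rescale"])
    return train_gen, eval_gen
```

Because the random transformations are drawn afresh for every batch, the network rarely sees exactly the same image twice across epochs.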

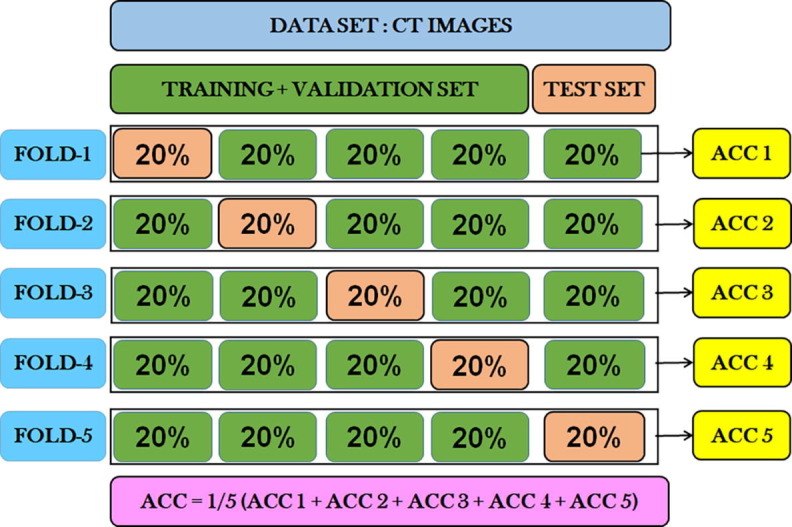

2.2.5. 5-fold cross-validation

Cross-validation has been used to estimate the performance and robustness of the proposed model on unseen data, with the aim of minimizing generalization error. In this study, 5-fold cross-validation has been used, where the full dataset is divided into five folds. In each experiment, four folds are used for training and the held-out fold is used for testing. Five experiments are performed, each using a different fold as the test set, to obtain five accuracies. The overall accuracy of the proposed model is the average of the accuracies from all five experiments. A stratified version of cross-validation has been implemented to ensure the balance of classes in each fold. The schematic representation of the proposed stratified 5-Fold cross-validation is shown in Fig. 3.
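A stratified split can be sketched in plain Python as follows. This is a minimal illustration of the idea, not the code used in the experiments: each class is shuffled and dealt round-robin into the folds, so every fold receives the same share of each class. The function name, the seed, and the label list (built from the per-class counts given in Section 2.1) are illustrative.

```python
import random
from collections import Counter, defaultdict


def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_indices, test_indices) for each of k stratified folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold gets an equal share of it.
        for position, index in enumerate(indices):
            folds[position % k].append(index)

    for i in range(k):
        test = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i
                       for idx in fold)
        yield train, test


labels = ["COVID"] * 400 + ["Normal"] * 400 + ["Pneumonia"] * 250
for fold_number, (train, test) in enumerate(stratified_kfold(labels), start=1):
    print(fold_number, len(train), len(test),
          dict(Counter(labels[i] for i in test)))
```

With these class counts, every test fold holds 210 images (80 COVID, 80 Normal, 50 Pneumonia) and the remaining 840 are used for training.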

Fig. 3.

Schematic representation of stratified 5-Fold cross-validation technique implemented in the proposed COVID-19 detection model.

3. Results analysis and discussions

The proposed models have been trained on Google Colab with the prepared dataset, using the Hyperparameters listed in Table 3. The models based on VGG16 and ResNet50 have been trained for a maximum of 500 and 350 epochs, respectively, combined with Early Stopping. Performance analysis is carried out using Accuracy, Precision, Recall (Sensitivity), Specificity, and F1-Score metrics. The values of these metrics have been recorded and analyzed for each experiment. The performance measures are calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall (Sensitivity)} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP, TN, FP, and FN are True Positives, True Negatives, False Positives, and False Negatives, respectively.
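The five metrics named above follow directly from the four counts, using their standard definitions. The sketch below is a minimal illustration; the function name and the example counts are assumptions chosen for demonstration (160 test images, one COVID-19 image missed) and are not figures reported by the paper.

```python
def binary_metrics(tp, tn, fp, fn):
    """Compute the standard classification metrics from confusion counts."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0          # sensitivity
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Illustrative counts: 80 positives with one miss, 80 negatives with no false alarm.
metrics = binary_metrics(tp=79, tn=80, fp=0, fn=1)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

For these counts the accuracy is 159/160 and the sensitivity is 79/80, which shows how a single misclassified image moves the percentages on a test fold of this size.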

Four different experiments have been undertaken to obtain the detailed analysis of the proposed models. Binary classification of COVID vs. Normal, and multiclass (3-class) classification of COVID vs. Normal vs. Pneumonia have been undertaken using two different proposed models (VGG16 and ResNet50). For each experiment, 5-Fold cross-validation has been carried out to evaluate the robustness and effectiveness of the model. Details of models and various experiments are tabulated in Table 4 .

Table 4.

Details of models and various experiments conducted in the proposed COVID-19 detection model.

| Sr.No. | Particulars | Details |
|---|---|---|
| (i) | Baseline Model | Original VGG16 model with changes in output layer; Original ResNet50 with changes in output layer |
| (ii) | Proposed Model | Model1 based on VGG16 Architecture (Refer Fig. 2(A)); Model2 based on ResNet50 Architecture (Refer Fig. 2(B)) |
| (iii) | Experiment-1 | Binary Classification (COVID vs. Normal) using Model1 |
| (iv) | Experiment-2 | Binary Classification (COVID vs. Normal) using Model2 |
| (v) | Experiment-3 | Multiclass Classification (COVID vs. Normal vs. Pneumonia) using Model1 |
| (vi) | Experiment-4 | Multiclass Classification (COVID vs. Normal vs. Pneumonia) using Model2 |

The details of performance measures of the experiments are presented in Table 5 . Moreover, an evaluation matrix for the binary classification of COVID-19 vs. Normal using proposed models (Experiment-1 and Experiment-2) is tabulated in Table 6 . Similarly, an evaluation matrix for multiclass classification of COVID-19 vs. Normal vs. Pneumonia (Experiment-3 and Experiment-4) is presented in Table 7 .

Table 5.

Performance measures Matrix of the binary classification (COVID-19 vs. Normal) and multiclass classification (COVID-19 vs. Normal vs. Pneumonia) experiments, based on VGG16 and ResNet50 architecture.

| Folds | Experiment-1 Test Loss | Experiment-1 Test Accuracy (%) | Experiment-1 Epochs | Experiment-2 Test Loss | Experiment-2 Test Accuracy (%) | Experiment-2 Epochs | Experiment-3 Test Loss | Experiment-3 Test Accuracy (%) | Experiment-3 Epochs | Experiment-4 Test Loss | Experiment-4 Test Accuracy (%) | Experiment-4 Epochs |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fold-1 | 0.031 | 99.37 | 500 | 0.011 | 100 | 350 | 0.38 | 82.38 | 435 | 0.505 | 78.09 | 331 |
| Fold-2 | 0.032 | 99.37 | 346 | 0.022 | 100 | 350 | 0.45 | 80.67 | 307 | 0.688 | 81.64 | 328 |
| Fold-3 | 0.04 | 97.5 | 500 | 0.02 | 100 | 350 | 0.407 | 82.85 | 490 | 0.186 | 93.33 | 350 |
| Fold-4 | 0.0001 | 100 | 339 | 0.007 | 100 | 350 | 0.259 | 90.95 | 313 | 0.211 | 93.33 | 350 |
| Fold-5 | 0.046 | 99.37 | 500 | 0.054 | 98.12 | 350 | 0.117 | 96.87 | 500 | 0.105 | 96.19 | 350 |
| Average Accuracy (%) | | 99.12 | | | 99.62 | | | 86.74 | | | 88.52 | |
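The average accuracies in the last row of Table 5 can be reproduced from the per-fold test accuracies. The short check below copies the fold values from the table; the variable names are illustrative.

```python
from statistics import mean

# Per-fold test accuracies (%) copied from Table 5.
fold_accuracy = {
    "Experiment-1": [99.37, 99.37, 97.5, 100.0, 99.37],
    "Experiment-2": [100.0, 100.0, 100.0, 100.0, 98.12],
    "Experiment-3": [82.38, 80.67, 82.85, 90.95, 96.87],
    "Experiment-4": [78.09, 81.64, 93.33, 93.33, 96.19],
}

averages = {name: round(mean(values), 2)
            for name, values in fold_accuracy.items()}
for name, value in averages.items():
    print(f"{name}: {value:.2f}%")
```

The spread across folds is much wider in the multiclass experiments (roughly 78% to 97%) than in the binary ones, so the averages for Experiment-3 and Experiment-4 should be read together with the per-fold values.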

Table 6.

Evaluation Matrix of binary classification, COVID-19 vs. Normal, using VGG16 based architecture (Experiment-1) and using ResNet50 based architecture (Experiment-2).

| Folds | Class | Experiment-1 Precision | Experiment-1 Sensitivity | Experiment-1 Specificity | Experiment-1 F1-score | Experiment-2 Precision | Experiment-2 Sensitivity | Experiment-2 Specificity | Experiment-2 F1-score |
|---|---|---|---|---|---|---|---|---|---|
| Fold-1 | COVID | 100 | 99 | 100 | 99 | 100 | 100 | 100 | 100 |
| | Normal | 99 | 100 | 98.75 | 99 | 100 | 100 | 100 | 100 |
| | Average | 99 | 99 | 99.37 | 99 | 100 | 100 | 100 | 100 |
| Fold-2 | COVID | 100 | 99 | 100 | 99 | 100 | 100 | 100 | 100 |
| | Normal | 99 | 100 | 98.75 | 99 | 100 | 100 | 100 | 100 |
| | Average | 99 | 99 | 99.37 | 99 | 100 | 100 | 100 | 100 |
| Fold-3 | COVID | 95 | 100 | 95 | 98 | 100 | 100 | 100 | 100 |
| | Normal | 100 | 95 | 100 | 97 | 100 | 100 | 100 | 100 |
| | Average | 98 | 97 | 97.5 | 97 | 100 | 100 | 100 | 100 |
| Fold-4 | COVID | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | Normal | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | Average | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Fold-5 | COVID | 99 | 100 | 98.75 | 99 | 96 | 100 | 96.25 | 98 |
| | Normal | 100 | 99 | 100 | 99 | 100 | 96 | 100 | 98 |
| | Average | 99 | 99 | 99.37 | 99 | 98 | 98 | 98.12 | 98 |

Table 7.

Evaluation Matrix of multiclass classification, COVID-19 vs. Normal vs. Pneumonia, using VGG16 based architecture (Experiment-3) and using ResNet50 based architecture (Experiment-4).

| Folds | Class | Experiment-3 Precision | Experiment-3 Sensitivity | Experiment-3 Specificity | Experiment-3 F1-score | Experiment-4 Precision | Experiment-4 Sensitivity | Experiment-4 Specificity | Experiment-4 F1-score |
|---|---|---|---|---|---|---|---|---|---|
| Fold-1 | COVID | 79 | 72 | 88.46 | 76 | 81 | 55 | 92.3 | 66 |
| | Normal | 99 | 100 | 99.23 | 99 | 100 | 100 | 100 | 100 |
| | Pneumonia | 62 | 70 | 86.87 | 66 | 53 | 80 | 77.5 | 63 |
| | Average | 80 | 81 | 91.52 | 80 | 78 | 78 | 89.93 | 76 |
| Fold-2 | COVID | 79 | 65 | 90 | 71 | 90 | 57 | 96.15 | 70 |
| | Normal | 98 | 100 | 98.42 | 99 | 100 | 100 | 100 | 100 |
| | Pneumonia | 60 | 74 | 84.07 | 66 | 58 | 90 | 78.98 | 70 |
| | Average | 79 | 80 | 90.83 | 79 | 82 | 82 | 91.71 | 80 |
| Fold-3 | COVID | 84 | 68 | 92.31 | 75 | 93 | 89 | 96.15 | 91 |
| | Normal | 98 | 100 | 98.46 | 99 | 100 | 99 | 100 | 99 |
| | Pneumonia | 62 | 80 | 85 | 70 | 84 | 92 | 94.37 | 88 |
| | Average | 81 | 83 | 91.92 | 81 | 92 | 93 | 96.84 | 93 |
| Fold-4 | COVID | 94 | 81 | 96.92 | 87 | 92 | 90 | 95.38 | 91 |
| | Normal | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | Pneumonia | 75 | 92 | 90.62 | 83 | 85 | 88 | 95 | 86 |
| | Average | 90 | 91 | 95.85 | 90 | 92 | 93 | 96.79 | 92 |
| Fold-5 | COVID | 99 | 94 | 99.23 | 96 | 95 | 95 | 96.92 | 95 |
| | Normal | 100 | 99 | 100 | 99 | 100 | 100 | 100 | 100 |
| | Pneumonia | 91 | 100 | 96.87 | 95 | 92 | 92 | 97.5 | 92 |
| | Average | 97 | 97 | 98.7 | 97 | 96 | 96 | 98.14 | 96 |

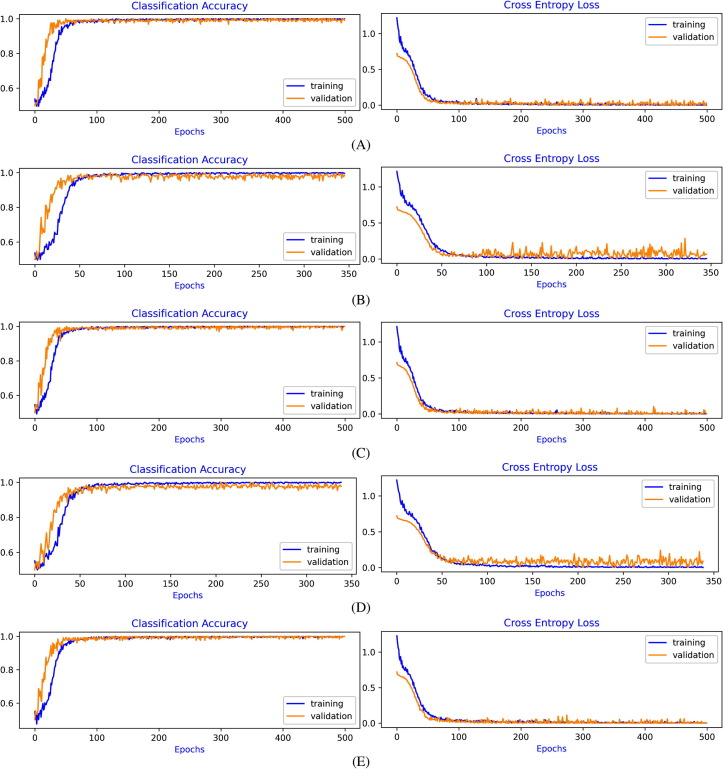

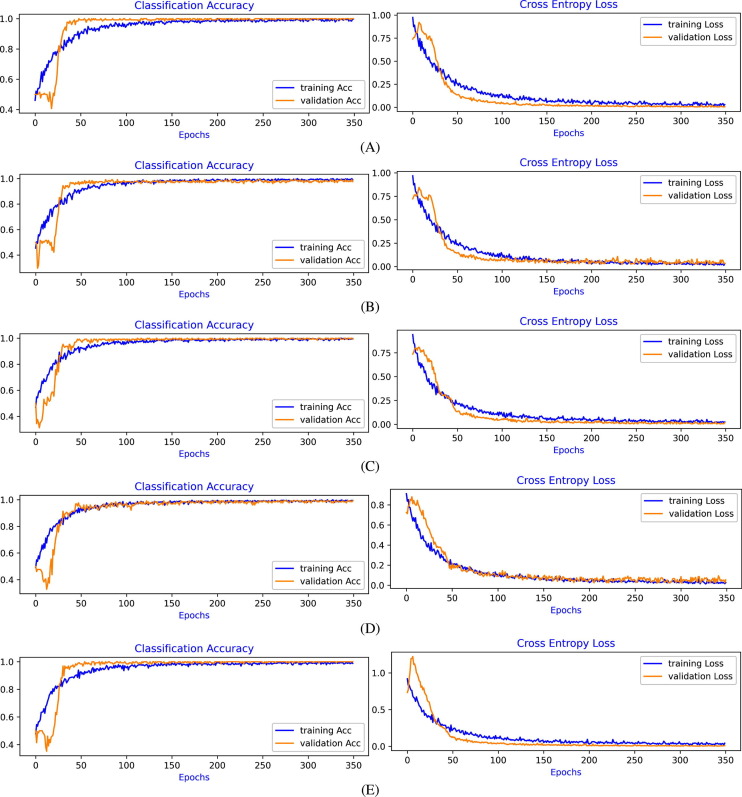

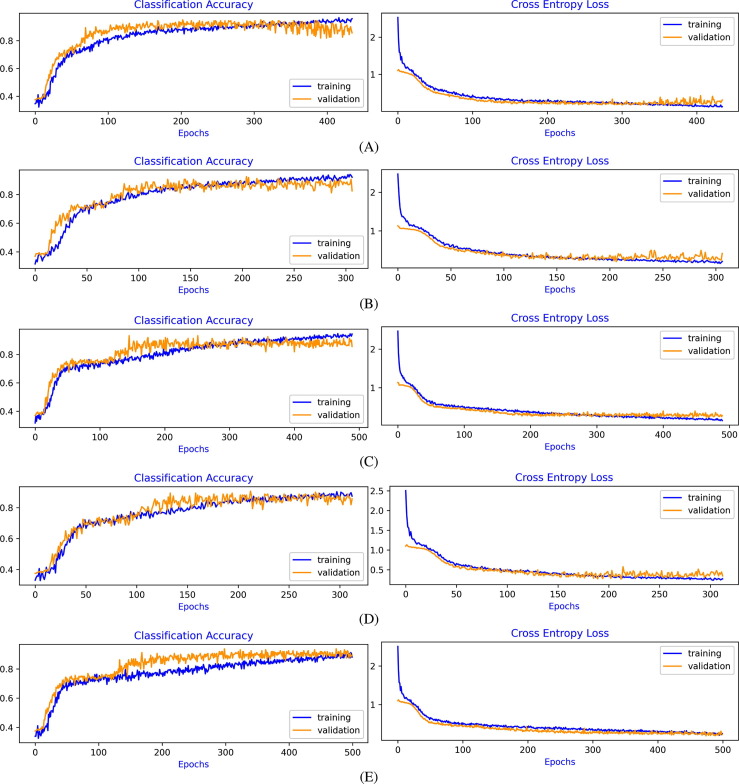

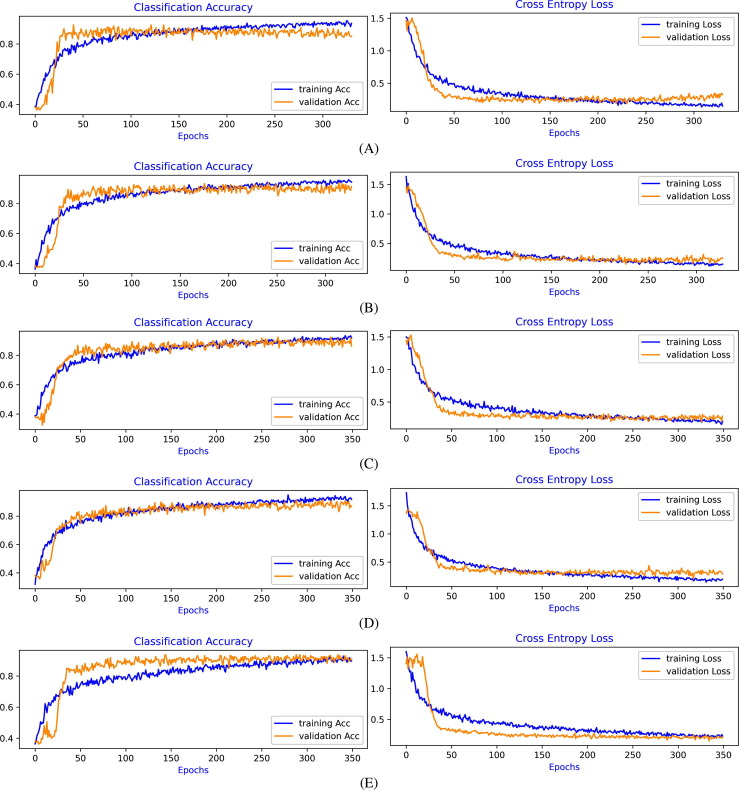

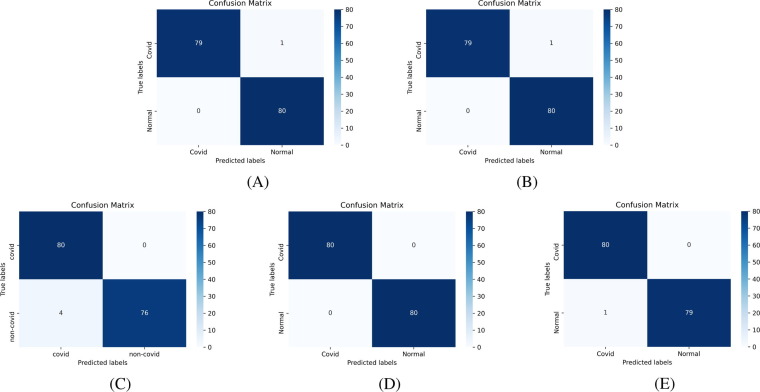

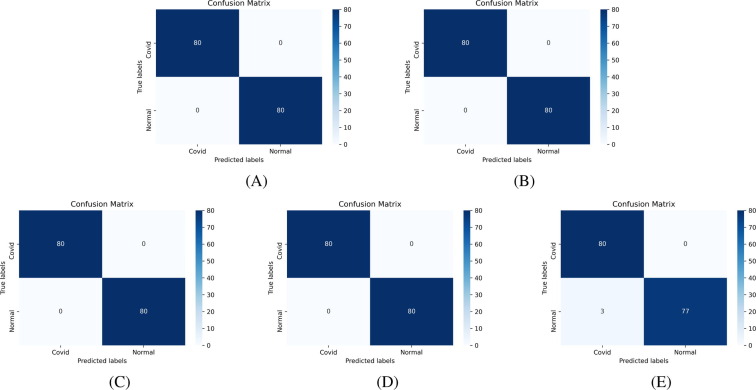

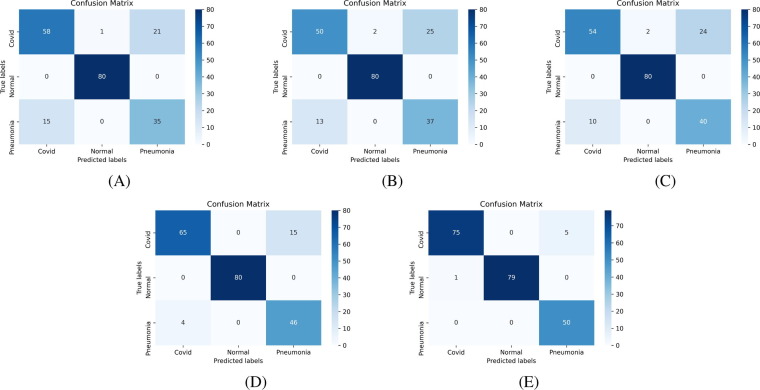

Training and Validation Learning Curves of Cross-Entropy Loss and Classification Accuracy have been also evaluated during the training process. Parameters of the model are optimized based on the learning curves of Cross-Entropy Loss, and the model has been evaluated based on the learning curves of Classification Accuracy. The validation curve indicates how well the model is learning. Overfitting and underfitting of the model are assessed, and based on these observations, fine-tuning of the Hyperparameters is carried out to achieve a Good-Fit of the model.

During the fine-tuning of the model, it is observed that the Stochastic Gradient Descent optimization algorithm, as compared to the other adaptive optimization algorithms, gives better control of Hyperparameters to improve the learning performance. We also observed that the model performance is highly sensitive to the Hyperparameters, particularly to learning rate, batch size, momentum, and thus careful fine-tuning is required. As compared to ResNet50 architecture, VGG16 architecture is more flexible as extra convolution blocks, including optimization techniques, can be incorporated more easily.

Learning curves of Cross-Entropy Loss and Classification Accuracy for Experiment-1 to Experiment-4, for each fold of the 5-fold cross-validation, are shown in Fig. 4, Fig. 5, Fig. 6, and Fig. 7, respectively. The learning curves show minimal fluctuation and good convergence between the training and validation curves, which indicates a Good-Fit of the model.

Fig. 4. Learning Curves of binary classification, COVID-19 vs. Normal, using VGG16 based architecture (Experiment-1) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

Fig. 5. Learning Curves of binary classification, COVID-19 vs. Normal, using ResNet50 based architecture (Experiment-2) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

Fig. 6. Learning Curves of multiclass classification, COVID-19 vs. Normal vs. Pneumonia, using VGG16 based architecture (Experiment-3) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

Fig. 7. Learning Curves of multiclass classification, COVID-19 vs. Normal vs. Pneumonia, using ResNet50 based architecture (Experiment-4) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

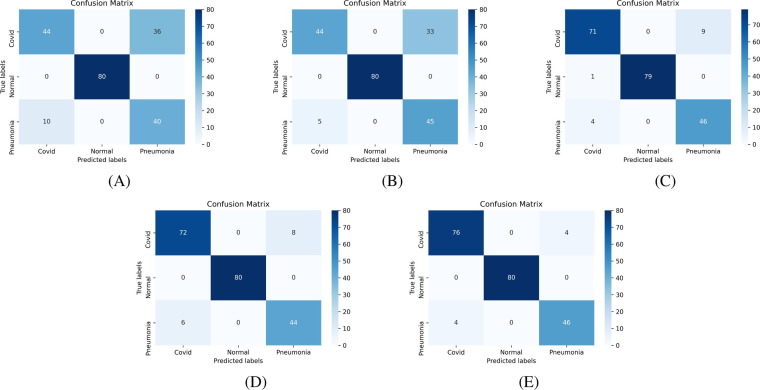

The confusion matrix [53] is an essential tool for analyzing model performance; it tabulates true labels against predicted labels. Confusion matrices of Experiment-1 to Experiment-4, for each fold, are shown in Fig. 8, Fig. 9, Fig. 10, and Fig. 11, respectively. The diagonal elements of the matrix give the number of correct classifications in each category. From the confusion matrix, the performance measures can be calculated for each class, giving a better understanding of the relations and interdependencies among the classes. It is observed that CT images of the Normal category are separated exceptionally well from COVID-19 and Pneumonia images. However, major confusion occurs between the COVID-19 and Pneumonia categories. This confusion is primarily due to the similar effects of the two diseases on humans.
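As a minimal sketch of how per-class measures follow from a confusion matrix, the snippet below computes precision, recall, and F1-score for each class together with the overall accuracy. The counts are hypothetical and are not taken from Fig. 8 to Fig. 11; the function names are likewise illustrative.

```python
def per_class_metrics(cm, labels):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(labels)
    metrics = {}
    for k, name in enumerate(labels):
        tp = cm[k][k]                                # diagonal: correct predictions
        fn = sum(cm[k]) - tp                         # rest of row k: missed samples
        fp = sum(cm[i][k] for i in range(n)) - tp    # rest of column k: false alarms
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[name] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


def accuracy(cm):
    """Overall accuracy: sum of the diagonal divided by the total sample count."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total


labels = ["COVID-19", "Normal", "Pneumonia"]
# Hypothetical counts for illustration only (rows: true class, columns: predicted class).
cm = [
    [40, 1, 9],
    [0, 50, 0],
    [8, 1, 41],
]

for name, m in per_class_metrics(cm, labels).items():
    print(name, {key: round(value, 3) for key, value in m.items()})
print("accuracy:", round(accuracy(cm), 3))
```

In this made-up example the off-diagonal mass sits almost entirely in the COVID-19/Pneumonia cells, which is the pattern described above.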

Fig. 8. Confusion Matrix of binary classification, COVID-19 vs. Normal, using VGG16 based architecture (Experiment-1) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

Fig. 9. Confusion Matrix of binary classification, COVID-19 vs. Normal, using ResNet50 based architecture (Experiment-2) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

Fig. 10. Confusion Matrix of multiclass classification, COVID-19 vs. Normal vs. Pneumonia, using VGG16 based architecture (Experiment-3) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

Fig. 11. Confusion Matrix of multiclass classification, COVID-19 vs. Normal vs. Pneumonia, using ResNet50 based architecture (Experiment-4) for: (A) Fold-1 dataset; (B) Fold-2 dataset; (C) Fold-3 dataset; (D) Fold-4 dataset; (E) Fold-5 dataset.

In the last year, several efforts have been made to develop deep learning based COVID-19 detection models. A direct comparison with the models proposed in the literature could not be carried out owing to the lack of a common training dataset. However, some comparisons have been made in terms of the proposed methodologies. The authors in [29] use the DarkCOVIDNet model and achieve an average classification accuracy of 87.02% using 5-fold cross-validation on X-ray images. The COVID-Net network architecture in [37] achieves a classification accuracy of 93.3%, whereas the ResNet50 and VGG16 based models in [35] achieve classification accuracies of 87.54% and 74.84%, respectively. It is pertinent to note that the datasets in [37], [35] are class-imbalanced due to the lack of adequate COVID-19 images and have been evaluated on a single fold only. Superior classification accuracy between COVID-19 and Pneumonia has been reported using ultrasound images in [38].

The authors in [40] attain a validation accuracy of 92.81% for binary classification (COVID-19 vs. Normal) and 96.83% for three-class classification (COVID-19 vs. Pneumonia vs. Normal) using a feature-based feed-forward neural network. The authors in [41], using a 10-fold cross-validation experiment for binary classification (Normal vs. COVID-19), achieve a sensitivity of 94.44%, a specificity of 93.63%, and an accuracy of 94.03%. The model proposed in [42] achieves an average F1-score of 97.04 and precision of 97.32%, 96.42%, 96.99%, and 97.38% on the COVID-19, pneumonia, tuberculosis, and healthy classes, respectively. The extractor-classifier combination in [43] achieves the best F1-score of 98.5% using the MobileNet architecture with an SVM classifier and a linear kernel. For the other dataset, the authors attain the best F1-score of 95.6% using DenseNet201 with a multi-layer perceptron (MLP). The U-Net-based architecture in [44] attains an average classification accuracy of 94.26% using 10-fold cross-validation on lung CT scan images. The model in [45], based on Multiple Kernels-Extreme Learning Machine and a neural network using chest CT images, achieves a classification accuracy of 98.36% for binary classification. The model proposed in [46] obtains a classification accuracy of 100% for binary classification (COVID vs. Non-COVID), 99.72% for three-class classification (COVID-19 vs. Normal vs. Pneumonia), and 94.44% for four-class classification (COVID-19 vs. Normal vs. Bacterial Pneumonia vs. Viral Pneumonia).

Based on the extensive experimentation and detailed performance analysis of the proposed models in this study, some important observations have been made, which are briefly presented as follows:

1. In Experiment-1, the model shows superior performance with a test accuracy of more than 97% in all the dataset folds. In all folds, the training and validation learning curves converge closely, showing a Good-Fit of the model.

2. In Experiment-2, the performance of the model is outstanding. It achieves a test accuracy of almost 100% in all folds, which shows the strong potential of CT images for developing COVID-19 detection technology. This performance is consistent across all the 5-Fold experiments. The training and validation learning curves converge very well without any substantial random variations.

3. In Experiment-3, the model achieves test accuracies ranging from 80% to 96%. This variation is mainly due to the diverse origin of the input images and the similarity of features in Pneumonia and COVID-19 images. However, in all the experiment folds, the training and validation learning curves converge very well. The model can be improved by adding more training images.

4. In Experiment-4, the Fold-5 experiment achieved the highest test accuracy of 97%, and the Fold-2 experiment achieved the lowest test accuracy of 78%, which shows a wide variation in test accuracy across folds. Comprehensive analysis of the confusion matrix reveals that the reduction in performance is primarily due to the confusion between the Pneumonia and COVID-19 classes. As both diseases are viral with similar symptoms and the number of training images is comparatively small, the model could not learn the essential higher-order features, which leads to lower test accuracies in some folds. The model achieved an average accuracy of 88.52%, which can be considered a promising step towards developing a deep learning-based COVID-19 detection model.

5. Stratified 5-Fold cross-validation plays a crucial role in assessing the effectiveness and robustness of the model. It averages out the bias caused by large variations in the test dataset, since the final accuracy is the mean of the accuracies from each fold. Many deep learning-based models proposed in the literature have been evaluated on a single fold only. Such an evaluation should be considered preliminary, as is evident from the large variations among the test results across the 5 folds of each experiment.
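The role of stratified 5-fold cross-validation discussed in the last observation can be sketched in plain Python as follows. This is a simplified illustration, not the splitting code used in this study: the class sizes, the per-fold accuracies, and the helper names `stratified_folds` and `mean_and_spread` are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Split sample indices into k folds that preserve the class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the samples of each class round-robin across the folds.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


def mean_and_spread(fold_accuracies):
    """Return the mean accuracy and the max-min spread across folds."""
    mean = sum(fold_accuracies) / len(fold_accuracies)
    return mean, max(fold_accuracies) - min(fold_accuracies)


# Hypothetical, deliberately imbalanced label list (not the dataset of this study).
labels = ["COVID-19"] * 50 + ["Normal"] * 50 + ["Pneumonia"] * 25
folds = stratified_folds(labels, k=5)

for i, test_idx in enumerate(folds, start=1):
    counts = defaultdict(int)
    for idx in test_idx:
        counts[labels[idx]] += 1
    print(f"Fold-{i} test set:", dict(counts))

# Hypothetical per-fold test accuracies, used only to show the averaging step.
mean_acc, spread = mean_and_spread([0.80, 0.90, 0.85, 0.95, 0.90])
print("mean accuracy:", round(mean_acc, 4), "spread:", round(spread, 4))
```

Each fold serves once as the test set while the remaining four are used for training, so a single unusually easy or hard split has limited influence on the reported mean.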

4. Conclusion and future scopes

A deep learning model based on the VGG16 and ResNet50 transfer learning architectures has been investigated in this study for the rapid and efficient detection of COVID-19 disease using CT scan images. Four different experiments have been undertaken to evaluate the proposed models for binary classification (COVID vs. Normal) and multiclass classification (COVID vs. Normal vs. Pneumonia). The robustness of the proposed models has been assessed through stratified 5-fold cross-validation. The proposed model performs exceptionally well in binary classification, with an average classification accuracy of more than 99% for both the VGG16 and ResNet50 based models. The model's performance degrades when Pneumonia images are added to make the task multiclass, though the results remain promising. With the limited available dataset, the proposed model achieves an average accuracy of 86.74% and 88.52% using the VGG16 and ResNet50 architectures as baseline, respectively, in the case of multiclass classification. This degradation is primarily due to the viral nature of COVID-19 and Pneumonia and their similar effects on humans. The analysis of the proposed model shows promising results, which would enable the development of AI-based automated solutions/systems that assist the radiologist in the efficient and accurate detection of COVID-19 disease. The proposed model can be further built upon or improved by research in the following areas:

1. The availability of a large number of good-quality training images will enable the learning of diverse and higher-order features and will reduce the generalization error, which would further accelerate research and development.

2. Various high-performing transfer learning-based models can be integrated with the proposed model to improve the model’s learning capabilities and performance.

3. Performance can be further improved by incorporating X-ray or ultrasound images along with CT scan images.

4. Pre-processing measures can be incorporated into the proposed model to learn the fine-order distinguishing features between Pneumonia and COVID-19 CT scan images to improve the overall performance.

5. A hybrid approach based on the integration of fuzzy logic and artificial neural networks can be studied to obtain a robust representation of partial truth/uncertainty in the classification.

6. A performance study of the proposed model can be undertaken by collecting continent-wise datasets to better understand the impact of regional variation.

CRediT authorship contribution statement

Narendra Kumar Mishra: Conceptualization, Methodology, Software, Validation, Writing - original draft, Writing - review & editing. Pushpendra Singh: Conceptualization, Methodology, Validation, Writing - original draft, Writing - review & editing. Shiv Dutt Joshi: Conceptualization, Methodology, Validation, Writing - original draft, Writing - review & editing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1. https://covid19.who.int/, last accessed on 09 Jan 21.

- 2. Rajkumar R.P. COVID-19 and mental health: a review of the existing literature. Asian J Psychiatry. 2020;52 doi: 10.1016/j.ajp.2020.102066.

- 3. Luo M., Guo L., Yu M., Jiang W., Wang H. The psychological and mental impact of coronavirus disease 2019 (COVID-19) on medical staff and general public – a systematic review and meta-analysis. Psychiatry Res. 2020;291 doi: 10.1016/j.psychres.2020.113190.

- 4. Transmission of corona virus by asymptomatic cases: URL: http://www.emro.who.int/health-topics/coronavirus/transmission-of-covid-19-by-asymptomatic-cases.html, last accessed on 19 Oct 20.

- 5. URL: https://www.fda.gov/consumers/consumer-updates/coronavirus-testing-basics, last accessed on 19 Oct 20.

- 6. Ai T., Yang Z., Hou H., Zhan C., Chen C., Lv W., Tao Q., Sun Z., Xia L. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. 2020;296(2):E32–E40. doi: 10.1148/radiol.2020200642.

- 7. Araujo-Filho J.A.B., Sawamura M.V.Y., Costa A.N., Cerri G.G., Nomura C.H. COVID-19 pneumonia: what is the role of imaging in diagnosis? J Brasileiro de Pneumol. 2020;46(2) doi: 10.36416/1806-3756/e20200114.

- 8. Coronavirus tests: URL: https://www.nature.com/articles/d41586-020-02140-8, last accessed on 19 Oct 20.

- 9. Castillo O., Melin P. Forecasting of COVID-19 time series for countries in the world based on a hybrid approach combining the fractal dimension and fuzzy logic. Chaos Solitons Fractals. 2020;140 doi: 10.1016/j.chaos.2020.110242.

- 10. Sun T., Wang Y. Modeling COVID-19 epidemic in Heilongjiang province, China. Chaos Solitons Fractals. 2020;138 doi: 10.1016/j.chaos.2020.109949.

- 11. Singh P, Gupta A. Generalized SIR (GSIR) epidemic model: an improved framework for the predictive monitoring of COVID-19 pandemic. ISA Trans 2021;15:S0019-0578(21)00099-9. https://doi.org/10.1016/j.isatra.2021.02.016.

- 12. Singhal A., Singh P., Lall B., Joshi S.D. Modeling and prediction of COVID-19 pandemic using Gaussian mixture model. Chaos Solitons Fractals. 2020;138:110023. doi: 10.1016/j.chaos.2020.110023.

- 13. URL: https://radiologyassistant.nl/chest/covid-19/covid19-imaging-findings, last accessed on 19 Oct 20.

- 14. Hani C., Trieu N.H., Saab I., Dangeard S., Bennani S., Chassagnon G., Revel M.P. COVID-19 pneumonia: a review of typical CT findings and differential diagnosis. Diagnost Intervent Imag. 2020;101(5):263–268. doi: 10.1016/j.diii.2020.03.014.

- 15. Fraiwan L., Hassanin O., Fraiwan M., Khassawneh B., Ibnian A.M., Alkhodari M. Automatic identification of respiratory diseases from stethoscopic lung sound signals using ensemble classifiers. Biocybern Biomed Eng. 2021;41(1):1–14. doi: 10.1016/j.bbe.2020.11.003.

- 16. Alfian G., Syafrudin M., Anshari M., Benes F., Atmaji F.T.D., Fahrurrozi I., Hidayatullah A.F., Rhee J. Blood glucose prediction model for type 1 diabetes based on artificial neural network with time-domain features. Biocybern Biomed Eng. 2020;40(4):1586–1599. doi: 10.1016/j.bbe.2020.10.004.

- 17. Daoud H., Bayoumi M.A. Efficient epileptic seizure prediction based on deep learning. IEEE Trans Biomed Circuits Syst. 2019;13(5):804–813. doi: 10.1109/TBCAS.2019.2929053.

- 18. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 19. Gehlot S., Gupta A., Gupta R. SDCT-AuxNet: DCT augmented stain deconvolutional CNN with auxiliary classifier for cancer diagnosis. Med Image Anal. 2020;61:1–15. doi: 10.1016/j.media.2020.101661.

- 20. Gupta A., Singh P., Karlekar M.A. Novel signal modeling approach for classification of seizure and seizure-free EEG signals. IEEE Trans Neural Syst Rehab Eng. 2018;26(5):925–935. doi: 10.1109/TNSRE.2018.2818123.

- 21. Mesleh A.M. Lung cancer detection using multi-layer neural networks with independent component analysis: a comparative study of training algorithms. Jordan J Biol Sci. 2017;10(4):239–249.

- 22. Convolutional Neural Network: URL: https://cs231n.github.io/convolutional-networks/, last accessed on 11 Oct 20.

- 23. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition; 2014, arXiv:1409.1556.

- 24. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. IEEE Conference on Computer Vision and Pattern Recognition Las Vegas NV. 2016:770–778. doi: 10.1109/CVPR.2016.90.

- 25. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. Rethinking the inception architecture for computer vision. IEEE Conference on Computer Vision and Pattern Recognition Las Vegas NV. 2016:2818–2826. doi: 10.1109/CVPR.2016.308.

- 26. Chollet F. Xception: deep learning with depthwise separable convolutions. IEEE Conference on Computer Vision and Pattern Recognition Honolulu HI. 2017:1800–1807. doi: 10.1109/CVPR.2017.195.

- 27. Szegedy C, Ioffe S, Vanhoucke V, Alemi A. Inception-v4, Inception–Resnet and the impact of residual connections on learning. Association for the Advancement of Artificial Intelligence Conference on Artificial Intelligence San Francisco CA USA AAAI Press 2017;4278–4284. https://doi.org/10.5555/3298023.3298188.

- 28. Huang G., Liu Z., Maaten L.V.D., Weinberger K.Q. Densely connected convolutional networks. IEEE Conference on Computer Vision and Pattern Recognition Honolulu HI USA. 2017:2261–2269. doi: 10.1109/CVPR.2017.243.

- 29. Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Acharya U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput Biol Med. 2020;121 doi: 10.1016/j.compbiomed.2020.103792.

- 30. Panwar H., Gupta P.K., Siddiqui M.K., Menendez R.M., Singh V. Application of deep learning for fast detection of COVID-19 in X-Rays using nCOVnet. Chaos Solitons Fractals. 2020;138 doi: 10.1016/j.chaos.2020.109944.

- 31. Rahimzadeh M, Attar A. A modified deep convolutional neural network for detecting COVID-19 and pneumonia from chest X-ray images based on the concatenation of Xception and ResNet50V2. Inf Med Unlocked 2020;19:100360. https://doi.org/10.1016/j.imu.2020.100360.

- 32. Luz E, Silva PL, Silva R, Moreira G. Towards an efficient deep learning model for covid-19 patterns detection in x-ray images; 2020, arXiv:2004.05717.

- 33. Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Rajendra Acharya U. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput Biol Med. 2020;121 doi: 10.1016/j.compbiomed.2020.103792.

- 34. Wang N, Liu H, Xu C. Deep learning for the detection of COVID-19 using transfer learning and model integration. IEEE 10th International Conference on Electronics Information and Emergency Communication Beijing China 2020;281–284. doi: 10.1109/ICEIEC49280.2020.9152329.

- 35. Asnaoui K.E., Chawki Y. Using X-ray images and deep learning for automated detection of coronavirus disease. J Biomol Struct Dyn. 2020:1–12. doi: 10.1080/07391102.2020.1767212.

- 36. Zhang H.T., Zhang J.S., Zhang H.H., Nan Y.D., Zhao Y., Fu E.Q., Xie Y.H., Liu W., Li W.P., Zhang H.J., Jiang H., Li C.M., Li Y.Y., Ma R.N., Dang S.K., Gao B.B., Zhang X.J., Zhang T. Automated detection and quantification of COVID-19 pneumonia: CT imaging analysis by a deep learning-based software. Eur J Nucl Med Mol Imag. 2020;47(11):2525–2532. doi: 10.1007/s00259-020-04953-1.

- 37. Wang L, Wong A. COVID-net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images; 2020, arXiv:2003.09871.

- 38. Horry M.J., Chakraborty S., Paul M., Ulhaq A., Pradhan B., Saha M., Shukla N. COVID-19 detection through transfer learning using multimodal imaging data. IEEE Access. 2020;8:149808–149824. doi: 10.1109/ACCESS.2020.3016780.

- 39. Varela-Santos S., Melin P. A new modular neural network approach with fuzzy response integration for lung disease classification based on multiple objective feature optimization in chest X-ray images. Expert Syst Appl. 2021;168(114361):0957–4174. doi: 10.1016/j.eswa.2020.114361.

- 40. Varela-Santos S., Melin P. A new approach for classifying coronavirus COVID-19 based on its manifestation on chest X-rays using texture features and neural networks. Inf Sci. 2021;545:403–414. doi: 10.1016/j.ins.2020.09.041.

- 41. Satapathy S.C., Zhu L.Y., Górriz J.M. A seven-layer convolutional neural network for chest CT based COVID-19 diagnosis using stochastic pooling. IEEE Sens J. 2020;1 doi: 10.1109/JSEN.2020.3025855.

- 42. Wang S.H., Nayak D.R., Guttery D.S., Zhang X., Zhang Y.D. COVID-19 classification by CCSHNet with deep fusion using transfer learning and discriminant correlation analysis. Inf Fusion. 2021;68:131–148. doi: 10.1016/j.inffus.2020.11.005.

- 43. Ohata EF, Bezerra GM, Chagas JVSD, Lira Neto AV, Albuquerque AB, Albuquerque VHCD, Reboucas Filho PP. Automatic detection of COVID-19 infection using chest X-ray images through transfer learning. IEEE/CAA J Automatica Sin 2021;8(1):239–248. https://doi.org/10.1109/JAS.2020.1003393.

- 44. Kalane P., Patil S., Patil B.P., Sharma D.P. Automatic detection of COVID-19 disease using U-net architecture based fully convolutional network. Biomed Signal Process Control. 2021:102518. doi: 10.1016/j.bspc.2021.102518.

- 45. Turkoglu M. COVID-19 detection system using chest CT images and multiple kernels-extreme learning machine based on deep neural network. IRBM J. 2021 doi: 10.1016/j.irbm.2021.01.004.

- 46. Elkorany A.S., Elsharkawy Z.F. COVIDetection-Net: a tailored COVID-19 detection from chest radiography images using deep learning. Optik (Stuttgart) J. 2021;231:166405. doi: 10.1016/j.ijleo.2021.166405.

- 47. Normal (Healthy) CT scan images: URL: https://www.kaggle.com/plameneduardo/sarscov2-ctscan-dataset, last accessed on 15 Sep 2020.

- 48. COVID-19 infected CT scan images: URL: https://www.sirm.org/, last accessed on 10 Sep 2020.

- 49. COVID-19 and Pneumonia infected CT scan images: URL: https://radiopaedia.org/cases, last accessed on 15 Sep 2020.

- 50. Ioffe S, Szegedy C. Batch Normalization: accelerating deep network training by reducing internal covariate shift. Proceedings of the 32nd International Conference on Machine Learning 2015;37:448–456.

- 51. Srivastava N., Hinton G., Krizhevsky A., Sutskever I., Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014;15:1929–1958.

- 52. Keras Library for software coding: URL: https://keras.io/, last accessed on 03 Oct 2020.

- 53. Confusion matrix: URL: https://en.wikipedia.org/wiki/Confusion_matrix, last accessed on 26 Aug 2020.