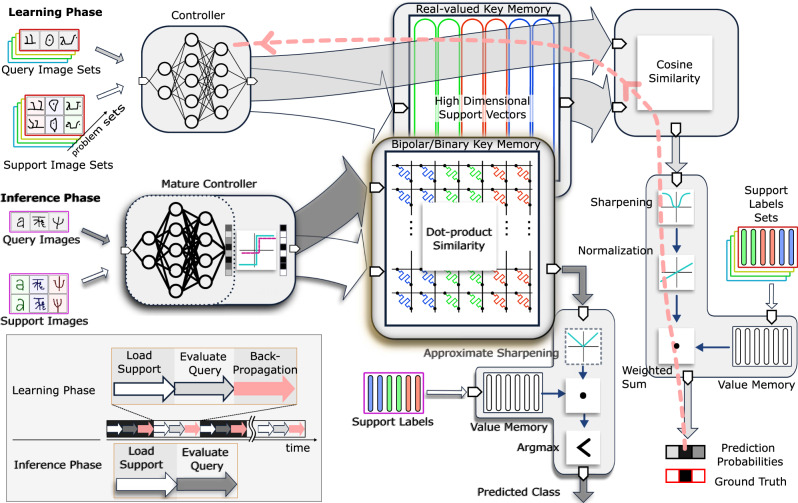

Fig. 2. Proposed robust HD MANN architecture.

In the learning phase of the proposed MANN, a CNN controller first propagates the images in the support set to generate HD support vector representations, which are stored in the real-valued key memory. The corresponding support labels are stored in the value memory. For evaluation, the controller propagates the query images to produce the HD query vectors. A cosine similarity module then compares each query vector with each of the support vectors stored in the real-valued key memory. The resulting similarity scores are passed through a sharpening function, normalized, and combined in a weighted sum with the value memory to produce prediction probabilities. The prediction probabilities are compared against the ground-truth labels to generate an error, which is backpropagated through the network to update the weights of the controller (see pink arrows). This episodic training process is repeated across batches of support and query images from different problem sets until the controller reaches maturity. In the inference phase, we use a hardware-friendly version of our architecture obtained by simplifying the HD vector representations and the similarity, normalization, and sharpening functions. The mature controller is employed along with an activation function that clips the real-valued vectors to obtain bipolar/binary vectors at the output of the controller. The resulting bipolar or binary support vectors are stored in the key memristive crossbar array (i.e., the bipolar/binary key memory). Similarly, when a query image is fed through the mature controller, its HD bipolar or binary representation, the query vector, is used to obtain similarity scores against the support vectors stored in the memristive crossbar array. Using in-memory computing, the bipolar/binary key memristive crossbar approximates the cosine similarities between the query and all the support vectors with constant-scaled dot products. After an approximate sharpening step, the results are weighted and summed by the support labels (in the value memory), and the index of the maximum response is output as the prediction.
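The two read-out paths described in the caption can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names, the exponential sharpening with parameter `beta`, the sign threshold used as the clipping activation, and the rectification used as the "approximate sharpening" are all assumptions chosen for illustration, and the memristive crossbar is replaced by an ordinary software dot product. The one fact the sketch relies on is that for bipolar vectors of dimension d, every vector has norm sqrt(d), so the cosine similarity equals the dot product divided by d.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity between two real-valued vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def to_bipolar(v):
    """Assumed clipping activation: sign threshold at zero."""
    return [1 if x >= 0 else -1 for x in v]


def predict_real(query, keys, labels, n_classes, beta=10.0):
    """Learning-phase read-out: cosine similarity, sharpening,
    normalization, then a weighted sum over the support labels."""
    sims = [cosine_similarity(query, k) for k in keys]
    sharp = [math.exp(beta * s) for s in sims]  # assumed sharpening function
    total = sum(sharp)
    probs = [0.0] * n_classes
    for w, lab in zip(sharp, labels):
        probs[lab] += w / total  # value memory holds integer class labels
    return probs


def predict_bipolar(query, bipolar_keys, labels, n_classes):
    """Inference-phase read-out on bipolar vectors: scaled dot products,
    an assumed rectifying sharpening, label-wise sum, then argmax."""
    q = to_bipolar(query)
    d = len(q)
    scores = [0.0] * n_classes
    for k, lab in zip(bipolar_keys, labels):
        sim = sum(x * y for x, y in zip(q, k)) / d  # equals cosine for bipolar vectors
        scores[lab] += max(sim, 0.0)  # hypothetical approximate sharpening
    return max(range(n_classes), key=lambda c: scores[c])


def make_episode(seed=0, d=512, n_classes=3, shots=2, noise=0.3):
    """Synthetic stand-in for controller outputs: noisy copies of random
    class prototypes. The query is drawn from class 1."""
    rng = random.Random(seed)
    protos = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n_classes)]
    keys, labels = [], []
    for c, p in enumerate(protos):
        for _ in range(shots):
            keys.append([x + noise * rng.gauss(0, 1) for x in p])
            labels.append(c)
    query = [x + noise * rng.gauss(0, 1) for x in protos[1]]
    return query, keys, labels


query, keys, labels = make_episode()
probs = predict_real(query, keys, labels, n_classes=3)
pred = predict_bipolar(query, [to_bipolar(k) for k in keys], labels, n_classes=3)
print(probs.index(max(probs)), pred)
```

In this toy episode the query is a noisy copy of the class-1 prototype, so both read-outs should select class 1. The sketch covers only the memory read-out; the CNN controller and the backpropagated error are omitted.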