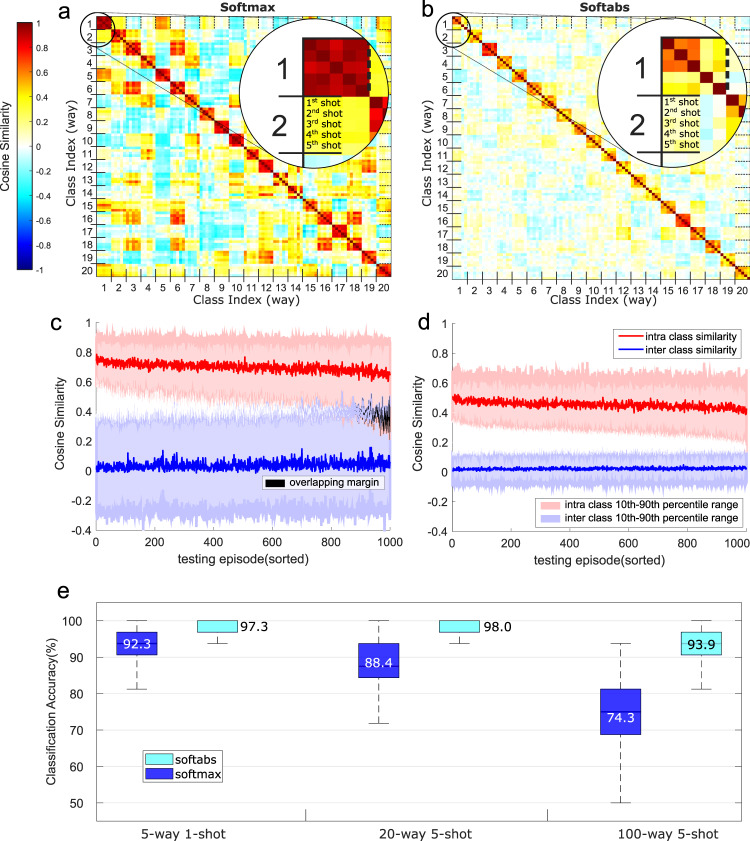

Fig. 3. The role of sharpening functions.

(a, b) Pairwise cosine similarity matrix of the support set from a single testing episode of a 20-way 5-shot problem, learned with the softmax (a) and the softabs (b) as the sharpening function. (c, d) Spread of intra-class and inter-class cosine similarity across 1000 testing episodes of the 20-way 5-shot problem with the softmax (c) and the softabs (d) as the sharpening function; the episodes are sorted by the ratio of intra-class to inter-class cosine similarity, from highest to lowest. With the softmax sharpening function, the margin between the 10th percentile of intra-class similarity and the 90th percentile of inter-class similarity is reduced, and it sometimes even becomes negative because the two distributions overlap. In contrast, the softabs function yields a larger margin (1.75× on average) without any overlap: the average margin is 0.1371 for the softabs, compared with 0.0781 for the softmax. (e) Box plot of classification accuracy over 1000 few-shot episodes, where each episode consists of a batch of 32 queries. The softabs sharpening function achieves higher overall accuracy and less variation across episodes for all few-shot problems. The average accuracy is indicated in each case.
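The margin described in the caption can be illustrated with a minimal sketch. It assumes the margin is the 10th percentile of intra-class cosine similarity minus the 90th percentile of inter-class cosine similarity over the support vectors of one episode, with self-similarities excluded. The function name `similarity_margin` and the toy vectors are hypothetical and are not taken from the original work.

```python
import numpy as np

def similarity_margin(vectors, labels):
    """Hypothetical helper: margin between the 10th percentile of intra-class
    and the 90th percentile of inter-class cosine similarity."""
    vectors = np.asarray(vectors, dtype=float)
    labels = np.asarray(labels)

    # Normalize rows so that the dot product equals the cosine similarity.
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    sim = unit @ unit.T

    # Boolean mask of pairs that share a class label.
    same = labels[:, None] == labels[None, :]
    # Keep each unordered pair once and drop the diagonal (assumption).
    upper = np.triu(np.ones_like(same, dtype=bool), k=1)

    intra = sim[same & upper]
    inter = sim[~same & upper]
    return np.percentile(intra, 10) - np.percentile(inter, 90)

# Toy episode: two well-separated classes give a positive margin.
separated = similarity_margin([[1, 0], [1, 0], [0, 1], [0, 1]], [0, 0, 1, 1])

# Same vectors with mixed labels: the distributions overlap and the margin is negative.
overlapping = similarity_margin([[1, 0], [1, 0], [0, 1], [0, 1]], [0, 1, 0, 1])
```

A negative value corresponds to the overlap case noted for the softmax. The reported averages are also consistent with the stated improvement, since 0.1371 / 0.0781 ≈ 1.755.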