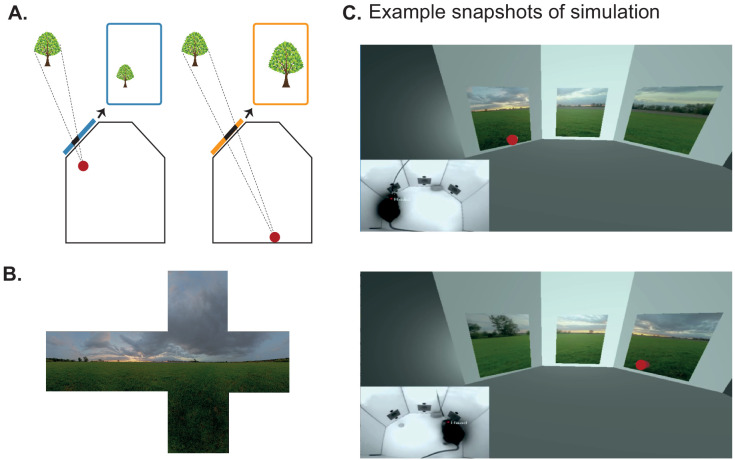

Figure 3. Using BonVision to generate an augmented reality environment.

(A) Illustration of how the image on a fixed display needs to adapt as an observer (red dot) moves around an environment. The displays simulate windows from a box into a virtual world outside. (B) The virtual scene (from: http://scmapdb.com/wad:skybox-skies) that was used offline to generate the example images and Figure 3—video 1. (C) Real-time simulation of scene rendering in augmented reality. We show two snapshots of the simulated scene rendering, which is also shown in Figure 3—video 1. In each case, the inset shows the actual video frame of a mouse exploring an arena that was used to determine the observer's viewpoint in the simulation. The mouse's head position was inferred (at a rate of 40 frames/s) by a network trained using DeepLabCut (Mathis et al., 2018). The top image shows an instance when the animal was on the left of the arena (head position indicated by the red dot in the main panel), and the lower image shows an instance when it was on the right of the arena.
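Panel A makes the point that a fixed display must redraw as the observer moves. A common way to achieve this is an off-axis (asymmetric-frustum) perspective projection, computed from the display's corner positions and the tracked eye position. The following is a minimal, generic Python sketch of that construction under stated assumptions. It is not BonVision's own implementation. The helper names, the corner convention (lower-left `pa`, lower-right `pb`, upper-left `pc`) and the OpenGL-style clip-space convention are all assumptions for illustration. In a set-up like panel C, the eye position `pe` would come from the inferred head position.

```python
import math

# Hypothetical helpers for illustration only; not part of BonVision's API.

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)

def matmul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def off_axis_projection(pa, pb, pc, pe, near, far):
    """World-to-clip matrix for a fixed planar display seen from eye pe.

    pa, pb, pc: lower-left, lower-right and upper-left display corners in
    world coordinates (assumed convention). The display edges map onto the
    edges of normalized device coordinates (+/-1), so the display acts
    like a window onto the virtual scene.
    """
    vr = normalize(sub(pb, pa))    # display 'right' axis
    vu = normalize(sub(pc, pa))    # display 'up' axis
    vn = normalize(cross(vr, vu))  # display normal, pointing toward the eye
    va, vb, vc = sub(pa, pe), sub(pb, pe), sub(pc, pe)
    d = -dot(va, vn)               # perpendicular eye-to-display distance
    s = near / d                   # scale display extents onto the near plane
    l, r = dot(vr, va) * s, dot(vr, vb) * s
    b, t = dot(vu, va) * s, dot(vu, vc) * s
    # Asymmetric perspective frustum (same form as OpenGL's glFrustum).
    P = [[2 * near / (r - l), 0, (r + l) / (r - l), 0],
         [0, 2 * near / (t - b), (t + b) / (t - b), 0],
         [0, 0, -(far + near) / (far - near), -2 * far * near / (far - near)],
         [0, 0, -1, 0]]
    # Rotate world axes into the display's basis...
    M = [[vr[0], vr[1], vr[2], 0],
         [vu[0], vu[1], vu[2], 0],
         [vn[0], vn[1], vn[2], 0],
         [0, 0, 0, 1]]
    # ...after translating so that the eye sits at the origin.
    T = [[1, 0, 0, -pe[0]],
         [0, 1, 0, -pe[1]],
         [0, 0, 1, -pe[2]],
         [0, 0, 0, 1]]
    return matmul(P, matmul(M, T))

def project(m, p):
    """Apply matrix m to 3D point p; return normalized device coordinates."""
    v = (p[0], p[1], p[2], 1.0)
    x, y, z, w = (sum(m[i][k] * v[k] for k in range(4)) for i in range(4))
    return (x / w, y / w, z / w)
```

Because the display corners stay pinned to the edges of normalized device coordinates wherever the eye is, the scene behind the display shows parallax as it would through a real window. For a 2 × 2 display in the z = 0 plane, a distant point at (0, 0, −10) appears at the display centre for an eye at (0, 0, 1). When the eye moves to x = 0.5, the point shifts toward the observer's side of the display. Each display in panel A would need its own matrix, recomputed every frame from the latest head-position estimate.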