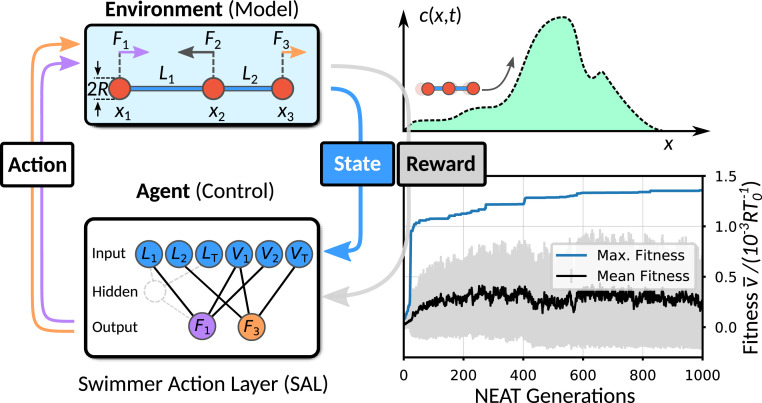

Fig. 1.

Schematic representation of the RL cycle for a three-bead swimmer moving in a viscous environment (Upper Left) and controlled by an ANN-based agent (Lower Left). Reward is maximized during training and is granted either for unidirectional locomotion (phase 1) or for chemotaxis (phase 2; Upper Right). (Lower Right) Typical NEAT training curves showing the maximum (blue), the mean (black), and the SD (gray) of the fitness (i.e., the cumulative reward) over successive NEAT generations, each comprising 200 neural networks, when learning unidirectional locomotion.
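
As a reading aid for the training curves, the per-generation statistics can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `fitness` and `generation_stats` are hypothetical, the toy rewards are invented for the example, and the use of the population (rather than sample) standard deviation is an assumption.

```python
from statistics import mean, pstdev


def fitness(rewards):
    """Fitness of one network: the cumulative reward over an episode."""
    return sum(rewards)


def generation_stats(population_rewards):
    """Return (max, mean, SD) of the fitness across one generation.

    population_rewards holds one reward sequence per network.
    Assumption: SD is the population standard deviation.
    """
    fitnesses = [fitness(r) for r in population_rewards]
    return max(fitnesses), mean(fitnesses), pstdev(fitnesses)


# Toy generation of 4 networks (the caption's generations hold 200 each).
toy_generation = [[1.0, 2.0], [0.0, 1.0], [2.0, 3.0], [1.0, 0.0]]
best, avg, sd = generation_stats(toy_generation)
```

Evaluating `generation_stats` once per generation and plotting the three values against the generation index would give curves of the kind shown in the Lower Right panel.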