Abstract

Deep learning (DL) approaches are part of the machine learning (ML) subfield concerned with the development of computational models to train artificial intelligence systems. DL models are characterized by automatically extracting high-level features from the input data to learn the relationship between matching datasets. Thus, their implementation offers an advantage over common ML methods, which often require the practitioner to have some domain knowledge of the input data to select the best latent representation. As a result of this advantage, DL has been successfully applied within the medical imaging field to address problems, such as disease classification and tumor segmentation, for which it is difficult or impossible to determine which image features are relevant. Therefore, taking into consideration the positive impact of DL on the medical imaging field, this article reviews the key concepts associated with its evolution and implementation. The sections of this review summarize the milestones related to the development of the DL field, followed by a description of the elements of deep neural networks and an overview of their application within the medical imaging field. Subsequently, the key steps necessary to implement a supervised DL application are defined, and associated limitations are discussed.

Index Terms: Classification, convolutional neural networks (CNNs), deep learning (DL), medical imaging, segmentation, synthesis

I. Introduction

Deep learning (DL) evolved from the machine learning (ML) subfield, which is part of the computer science branch known as artificial intelligence (AI). The goal of any AI system is to learn from its environment without being explicitly programmed to identify specific patterns in it. Thus, AI systems can be seen as rational agents that “act so as to achieve the best outcome or, when there is uncertainty, the best-expected outcome” [1]. ML is the AI subfield concerned with the development of approaches to train AI systems [2]. Specifically, ML models learn by exposing the algorithm to an input dataset that has a matching output dataset; the objective of the ML model is to learn the relationship between the input and output datasets. Some established ML approaches include decision tree learning [3], reinforcement learning [4], and Bayesian networks [5]. An essential characteristic of conventional ML models is that they require a feature engineering step, which reduces the dimensionality of the input data and selects the best latent representation of the data for the training process. This can be a time-consuming operation with potentially indeterminate outcomes, requiring the practitioner to have some domain knowledge of the data. DL approaches were designed to overcome this challenge by automatically extracting high-level features from the input data [6]. This advantage of DL over conventional ML dramatically simplifies the training process by eliminating the manual feature extraction step.

An area that has greatly benefited from this advantage is the medical imaging field, in which most of the available data are uncertain in nature, making it difficult, and sometimes impossible, to know which features are relevant to the problem at hand. Currently, most DL applications within the medical imaging field are narrow, that is, trained to solve very specific problems. Some of the most common applications include image classification, regression, segmentation, and synthesis. Applications of DL can be generally divided into two learning approaches: 1) supervised and 2) unsupervised [7]. In the supervised learning approach, there is a matched pair of data to train the model, for example, positron emission tomography (PET) brain images of patients with and without Alzheimer’s disease inputted into a DL model as two distinct classes for classification. Conversely, an unsupervised learning process is characterized by the absence of this constraint (no matching data are included) [8], allowing the model to determine which features within the dataset are important and how many distinct classes are present. While the latter approach may be advantageous for some applications, in the case of medical imaging data, releasing the constraint offered by matching data may cause the DL model to find patterns that do not necessarily correlate with the clinical interpretation of the data. As a result, most applications of DL models for medical imaging have utilized supervised learning or a hybrid combination of both; unsupervised learning techniques have also been combined with common ML methods. For that reason, in this review, we attempt to present an overview of DL and provide a guide on how to successfully implement DL techniques for medical imaging. To that end, Section II summarizes the elements of deep neural networks. Section III includes an overview of DL applications in medical imaging. Furthermore, the steps needed to train a neural network are included in Section IV, followed by a discussion of robustness (Section V) and limitations (Section VI).

II. Elements of Deep Neural Networks

A. What Is a Deep Neural Network?

Deep neural networks and DL have become a highly successful and popular research topic because of their excellent performance in many benchmark problems and applications [9], especially in the fields of control systems, natural language processing, information retrieval, computer vision, and image analysis [6], [10], [11]. Kohonen [12] gave a widely used definition in 1988 as follows:

“Artificial neural networks are massively parallel interconnected networks of simple (usually adaptive) elements and their hierarchical organizations which are intended to interact with the objects of the real world in the same way as biological nervous system do.”

1). Historical Development of Deep Neural Networks:

Neural networks have been studied for many years. In 1943, McCulloch and Pitts [13] put forward the famous M-P neuron model, which described artificial neurons with Boolean inputs and a binary output. In 1949, Hebb [14] introduced a learning rule that described how the connection between neurons was affected by neural activities, which could be used for updating the weights of neural networks. In 1958, Rosenblatt [15] applied the Hebb rule to the M-P model and proposed the perceptron, which performed well in solving logic conjunction, disjunction, and negation problems. However, in 1969, Minsky [16] pointed out that the single-layer perceptron could not solve the logic exclusive disjunction problem. The multilayer perceptron (MLP) could, but there were no learning rules for updating the weights of an MLP, which made research on neural networks sluggish for many years. Inspired by Hubel and Wiesel’s work [17]–[19], Fukushima [20] invented the neocognitron network in 1980 based on the previous work, the cognitron [21]. The neocognitron is a hierarchical, multilayer neural network that holds many similarities to modern convolutional neural networks (CNNs). In 1985, Rumelhart et al. [22] introduced the backpropagation algorithm, which solved the MLP’s training problem. In 1989, LeCun et al. [23] applied the backpropagation algorithm to train a multilayer neural network, named LeNet, to identify handwritten numerals. In 1998, LeCun [24] put forward LeNet-5, which presaged the coming of CNNs. In 2006, Hinton et al. [25] proposed the deep belief network (DBN), which gave a solution to the training of deep neural networks. In 2012, Krizhevsky et al. [26] created AlexNet and won the ImageNet competition (to perform visual object recognition from photographs). AlexNet was a historical breakthrough in neural network performance and capability. Since then, neural networks have developed rapidly in four main directions: 1) increasing the depth (number of layers) of neural networks, like VGGNet [27]; 2) reinforcing the convolutional layer’s function, like GoogLeNet [28]; 3) transferring tasks from classification to detection, like R-CNN [29], Fast R-CNN [30], and Faster R-CNN [31]; and 4) adding new functional modules, like FCN [32], UNet [33], and STNet [34].

2). Multilayer Perceptron:

The MLP is also called a feed-forward neural network (FNN) and is the basic model of deep neural networks. It defines a mapping from the input x to an output and learns the parameters θ so that the learned function f approximates the ground truth y as closely as possible. As shown in Fig. 1(b), the MLP is composed of multiple layers, including the input layer, hidden layers, and output layer. Each layer contains multiple neurons, the building blocks of deep neural networks. Neurons in adjacent layers are connected with each other, while those in the same layer are independent.

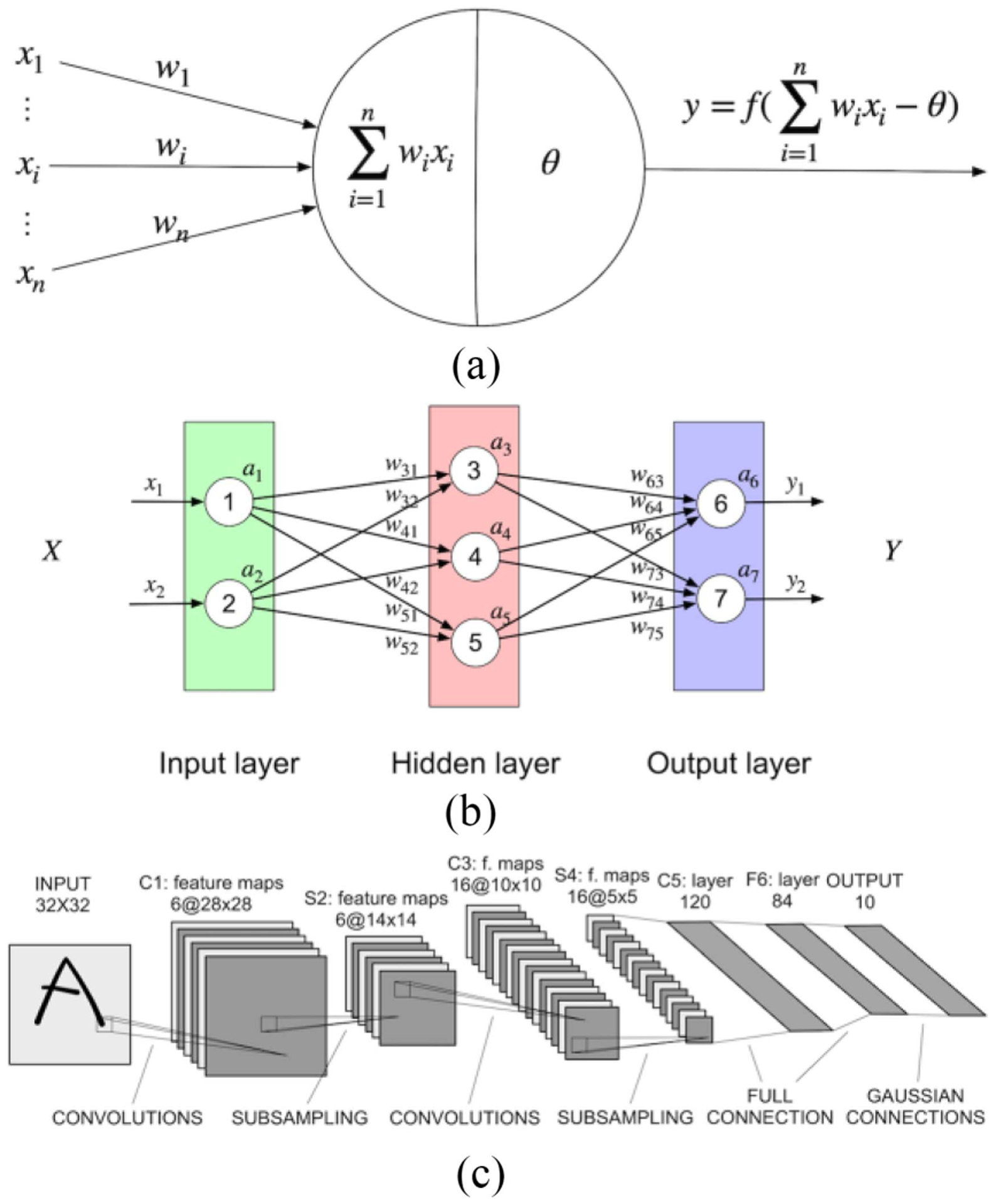

Fig. 1.

(a) Structure of the McCulloch and Pitts neuron model. $x_i$ stands for the ith input of the neuron and $w_i$ for the corresponding weight. θ is the threshold (bias) that determines whether the neuron is activated. (b) Structure of the MLP. (c) Structure of the LeNet-5 model, designed for character recognition.

The training of the MLP includes two stages, the forward propagation and the backpropagation.

Stage 1 (Forward Propagation):

For a single neuron, the M-P neural model is shown in Fig. 1(a). $x_i$ is the ith input of the neuron and $w_i$ is the corresponding weight of the ith input. θ is the threshold. f is the activation function, which decides whether the neuron is in an active or inactive state. In the M-P neural model, if f is defined as the step function, $f(z) = 1$ for $z > 0$ and $f(z) = 0$ otherwise, applied to $z = \sum_i w_i x_i - \theta$, then the neuron will be activated if the weighted sum of inputs is larger than the threshold (θ). Otherwise, it will remain silent.
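The thresholding behavior described above can be illustrated with a minimal Python sketch. The function name `mp_neuron` and the hand-picked weights and thresholds are illustrative assumptions, not values taken from the original M-P paper; they simply show how one such neuron can realize the conjunction, disjunction, and negation operations mentioned earlier.

```python
import numpy as np

def mp_neuron(x, w, theta):
    """M-P style neuron: outputs 1 when the weighted input sum exceeds theta."""
    return int(np.dot(w, x) > theta)

# Hypothetical weights and thresholds, chosen by hand for illustration only.
def logic_and(a, b):
    return mp_neuron([a, b], [1, 1], theta=1.5)

def logic_or(a, b):
    return mp_neuron([a, b], [1, 1], theta=0.5)

def logic_not(a):
    return mp_neuron([a], [-1], theta=-0.5)

# Truth tables over all Boolean input pairs (00, 01, 10, 11).
and_table = [logic_and(a, b) for a in (0, 1) for b in (0, 1)]
or_table = [logic_or(a, b) for a in (0, 1) for b in (0, 1)]
```

No single choice of two weights and one threshold reproduces the exclusive disjunction (XOR) truth table, which is the limitation of the single-layer perceptron noted in the historical overview.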

Therefore, the forward propagation of MLP can be presented as follows:

$$z_j^{l+1} = \sum_{i} w_{ij}^{l+1} a_i^{l} + b_j^{l+1} \tag{1}$$

$$a_j^{l+1} = f\left(z_j^{l+1}\right) \tag{2}$$

$a_i^{l}$ is the output of the ith neuron in the lth layer. $z_j^{l+1}$ is the jth neuron’s value in the (l + 1)th layer before being activated. $w_{ij}^{l+1}$ is the weight between the ith neuron in the lth layer and the jth neuron in the (l + 1)th layer. $b_j^{l+1}$ is the bias. f is the nonlinear activation function, like the Sigmoid, Tanh, and ReLU.
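As a hedged illustration of the forward propagation step, the following NumPy sketch computes the weighted sum plus bias for each layer and then applies the activation. The layer widths, the random initialization, and the choice of the sigmoid as f are assumptions made only for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, weights, biases, f=sigmoid):
    """Propagate an input vector through an MLP.

    weights[k] has shape (n_in, n_out): weights[k][i, j] links neuron i of one
    layer to neuron j of the next layer; biases[k] has shape (n_out,).
    Returns the pre-activation values and the outputs of every layer.
    """
    a = np.asarray(x, dtype=float)
    zs, activations = [], [a]
    for W, b in zip(weights, biases):
        z = a @ W + b   # weighted sum of the previous layer's outputs plus bias
        a = f(z)        # nonlinear activation
        zs.append(z)
        activations.append(a)
    return zs, activations

rng = np.random.default_rng(0)
sizes = [3, 4, 2]  # illustrative widths: input, hidden, output
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]
zs, activations = forward([0.5, -1.0, 2.0], weights, biases)
```

Keeping both the pre-activation values and the outputs of every layer is a deliberate choice here, because the backpropagation stage needs them to evaluate derivatives.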

Stage 2 (Backpropagation):

The backpropagation algorithm is designed for updating the weights of the MLP based on the loss obtained by comparing the MLP’s output with the ground truth. Lower loss and a better model can be achieved by properly tuning hyperparameters, which are typically determined manually prior to training. A growing area of the field focuses on the automation of hyperparameter optimization [35].

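Loss functions and optimization methods are highly flexible and important during the training process. How to choose a suitable loss function and optimization method is described in Section IV-C “network training and validation.” If the squared error is used for measuring the difference between the MLP’s output and the ground truth, the loss $E$ can be obtained through the following formula. $L$ is the number of layers in the MLP, $a_j^{L}$ is the jth neuron’s output in the final (Lth) layer, and $y_j$ is the corresponding ground truth value

$$E = \frac{1}{2} \sum_{j} \left(a_j^{L} - y_j\right)^2 \tag{3}$$

If gradient descent is chosen as the optimization method, then the weights can be updated as shown in the following formula. η is the learning rate, $w_{ij}^{l}$ is the weight between the ith neuron in the (l − 1)th layer and the jth neuron in the lth layer, and $z_j^{l}$ and $a_j^{l} = f(z_j^{l})$ are that neuron’s values before and after activation

$$w_{ij}^{l} \leftarrow w_{ij}^{l} - \eta \frac{\partial E}{\partial w_{ij}^{l}} \tag{4}$$

According to the chain rule, the update rule for the output layer can be computed as follows:

$$\frac{\partial E}{\partial w_{ij}^{L}} = \frac{\partial E}{\partial a_j^{L}} \frac{\partial a_j^{L}}{\partial z_j^{L}} \frac{\partial z_j^{L}}{\partial w_{ij}^{L}} = \left(a_j^{L} - y_j\right) f'\left(z_j^{L}\right) a_i^{L-1} \tag{5}$$

Then, the updated weights of the last layer can be computed in the following way:

$$w_{ij}^{L} \leftarrow w_{ij}^{L} - \eta \left(a_j^{L} - y_j\right) f'\left(z_j^{L}\right) a_i^{L-1} \tag{6}$$

However, for the hidden layer, the update rule is slightly different

$$\frac{\partial E}{\partial w_{ij}^{l}} = \frac{\partial E}{\partial z_j^{l}} \frac{\partial z_j^{l}}{\partial w_{ij}^{l}} = \frac{\partial E}{\partial z_j^{l}} a_i^{l-1} \tag{7}$$

If we define

$$\delta_j^{l} = \frac{\partial E}{\partial z_j^{l}} \tag{8}$$

Then

$$\delta_j^{l} = \sum_{k} \frac{\partial E}{\partial z_k^{l+1}} \frac{\partial z_k^{l+1}}{\partial a_j^{l}} \frac{\partial a_j^{l}}{\partial z_j^{l}} \tag{9}$$

Thus, the relationship between $\delta_j^{l}$ and $\delta_k^{l+1}$ can be represented as such

$$\delta_j^{l} = f'\left(z_j^{l}\right) \sum_{k} w_{jk}^{l+1} \delta_k^{l+1} \tag{10}$$

Finally, the update formula for the weights of the hidden layers is shown as follows:

$$w_{ij}^{l} \leftarrow w_{ij}^{l} - \eta\, \delta_j^{l}\, a_i^{l-1} \tag{11}$$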

In summary, the training loss will be computed after the forward propagation. Based on the loss, the parameters of each layer will be updated in the backpropagation according to certain rules.
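The two stages can be put together in a short NumPy sketch. It assumes a sigmoid activation, the squared-error loss with a conventional factor of one half, and plain gradient descent on a single sample; the function names (`backprop`, `sgd_step`) and the network sizes are illustrative choices rather than part of the original text.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def forward(x, weights, biases):
    a, zs, acts = x, [], [x]
    for W, b in zip(weights, biases):
        z = a @ W + b
        a = sigmoid(z)
        zs.append(z)
        acts.append(a)
    return zs, acts

def loss(x, y, weights, biases):
    _, acts = forward(x, weights, biases)
    return 0.5 * np.sum((acts[-1] - y) ** 2)

def backprop(x, y, weights, biases):
    """Gradients of the squared-error loss with respect to all weights and biases."""
    zs, acts = forward(x, weights, biases)
    grads_W = [None] * len(weights)
    grads_b = [None] * len(weights)
    # Output layer error term: (output - target) * f'(z)
    delta = (acts[-1] - y) * sigmoid_prime(zs[-1])
    for k in reversed(range(len(weights))):
        grads_W[k] = np.outer(acts[k], delta)  # dE/dw_ij = a_i * delta_j
        grads_b[k] = delta
        if k > 0:
            # Hidden layer error term: f'(z_j) * sum_k w_jk * delta_k
            delta = sigmoid_prime(zs[k - 1]) * (weights[k] @ delta)
    return grads_W, grads_b

def sgd_step(x, y, weights, biases, eta=0.01):
    gW, gb = backprop(x, y, weights, biases)
    new_w = [W - eta * g for W, g in zip(weights, gW)]
    new_b = [b - eta * g for b, g in zip(biases, gb)]
    return new_w, new_b

rng = np.random.default_rng(1)
sizes = [3, 4, 2]  # illustrative widths: input, hidden, output
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [rng.normal(size=n) for n in sizes[1:]]
x, y = np.array([0.2, -0.4, 0.9]), np.array([1.0, 0.0])
new_weights, new_biases = sgd_step(x, y, weights, biases)
```

A useful sanity check for any hand-written backpropagation routine is to compare one analytic gradient entry against a finite-difference estimate of the loss; the two should agree to several decimal places.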

B. Type of Deep Neural Network Layers

Fig. 1(c) shows the architecture of the LeNet-5 CNN, which was initially proposed for handwritten character recognition [24]. CNNs are composed of a sequence of layers, such as convolutional layers, pooling layers, fully connected layers, and activation layers. Data size is usually reduced, or downsampled, by pooling layers. Therefore, if the data size needs to be increased, transpose convolution layers or upsampling layers are needed. In addition to these basic types of layers, there are some other advanced layers, such as the normalization layer and the dropout layer, which are designed for specific purposes, such as facilitating training and avoiding overfitting. Additionally, operations can happen not only between adjacent layers but also between nonadjacent layers, through operations such as concatenation. Therefore, deep neural networks can be arbitrarily complicated. However, no matter how complex the network architecture might be, the individual layers are typically made up of common elements. We now describe common layers used in contemporary deep neural networks.

1). Convolutional Layer:

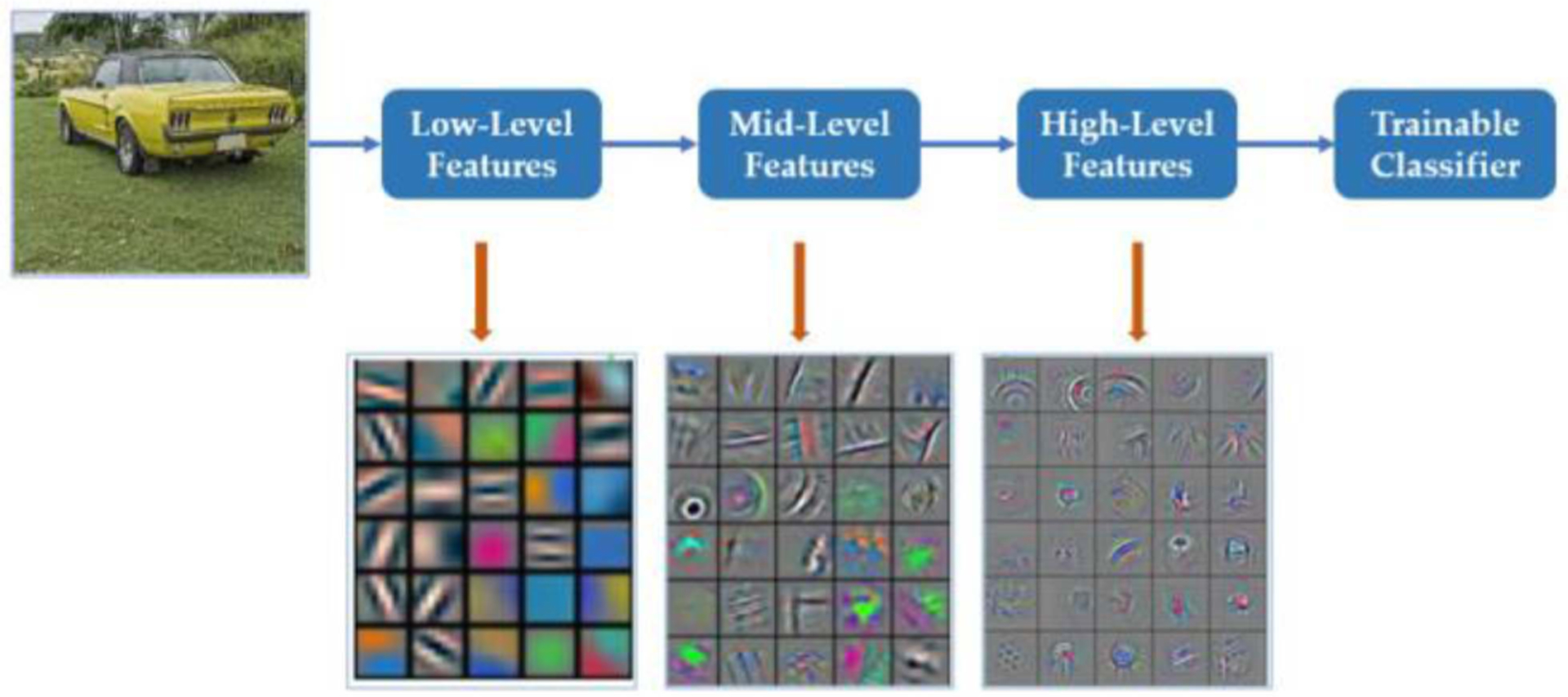

The convolutional layer is the key foundation of the CNN. The input of this layer can be the input images or feature maps. The parameters in this layer consist of learnable filters, also called kernels, whose values are learned during training. Each filter usually has the same depth as the input images or feature maps, but a much smaller height and width. The height and width (and depth, if applicable) of each kernel should be set manually in advance; 3 × 3 is the most commonly used shape. Note that cascaded small kernels have the same receptive field (i.e., the area in the input involved in the convolution calculation that produces a feature) as a single larger kernel but with fewer parameters. For example, two cascaded 3 × 3 kernels have only 18N parameters to be trained (N is a factor unrelated to the kernel size), but their receptive field is the same as that of one 5 × 5 kernel, which contains 25N parameters. A 1 × 1 filter is also possible and is equivalent to applying an affine transformation across the depth of the feature maps, which allows the exchange of information at different depths and increases nonlinearity. As Fig. 2(a) shows, in the forward pass, each filter moves from left to right first, then from top to bottom. The distance between each move is called the stride. At each position, the dot product between the filter and the corresponding area is computed, and these values compose one feature map. The visualization of the feature maps of various convolutional layers is shown in Fig. 3, as obtained from Meng et al. [36]. It can be seen that the shallow convolutional layers generally detect edge and color features, while deeper ones generally combine the features from the shallow ones to get more specific features, like the wheel of a car. However, with each convolution the image becomes smaller and boundary information is lost. To solve this problem, zero padding is introduced by adding zeros symmetrically to the left and right and to the top and bottom of the input.
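Then, the output shape of the convolutional layer can be computed by the following formula, in which $H_{in}$ and $H_{out}$ are the input and output sizes along one spatial dimension (the same formula applies to the other dimensions). The default value for dilation is typically 1

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel size} - 1) - 1}{\text{stride}} + 1 \right\rfloor \tag{12}$$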
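The sliding-window computation and the output-size rule can be sketched in NumPy as follows. The sketch assumes that the output size follows the floor formula used by common DL libraries, floor((n + 2p − d(k − 1) − 1)/s) + 1, and it implements only a naive single-channel convolution (technically a cross-correlation, as in most DL frameworks); the helper names are illustrative.

```python
import numpy as np

def conv_output_size(n_in, kernel, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis for the assumed floor formula."""
    return (n_in + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

def conv2d(image, kernel, stride=1, padding=0):
    """Naive single-channel 2-D convolution with symmetric zero padding."""
    image = np.pad(image, padding)  # zeros added on every side
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r * stride:r * stride + kh, c * stride:c * stride + kw]
            out[r, c] = np.sum(patch * kernel)  # dot product of filter and patch
    return out

image = np.ones((5, 5))
kernel = np.ones((3, 3))
no_pad = conv2d(image, kernel)                # output shrinks from 5 x 5 to 3 x 3
same_pad = conv2d(image, kernel, padding=1)   # zero padding keeps 5 x 5
```

With a 3 × 3 kernel and a padding of 1 the spatial size is preserved, which is why this combination is so common in practice.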

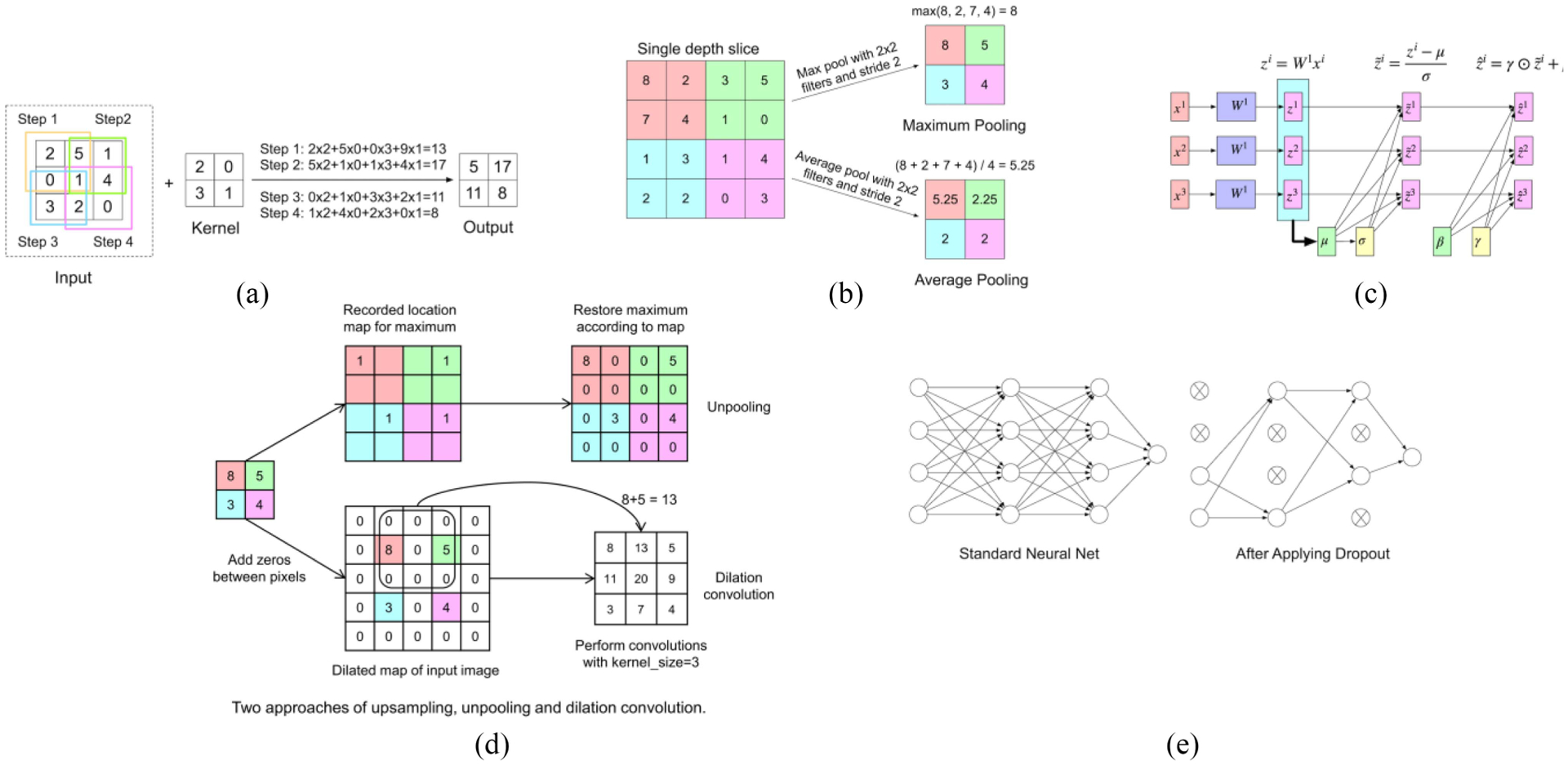

Fig. 2.

Graphical depiction of several layers from a CNN. (a) Convolutional layer. (b) Max-pooling and average-pooling layers for downsampling. (c) Pipeline of a batch normalization layer. (d) Unpooling and dilation convolution layers for upsampling. (e) Dropout.

Fig. 3.

Depiction of different model features reconstructed from different layers of a CNN. From F. Meng, X. Wang, F. Shao, D. Wang, and X. Hua, “Energy-Efficient Gabor Kernels in Neural Networks With Genetic Algorithm Training Method,” Electronics, vol. 8, no. 1, Art. no. 1, Jan. 2019, doi: 10.3390/electronics8010105. Meng et al. is an open-access article distributed under the terms of the Creative Commons CC BY license and no changes were made to this figure.

Three properties play an important role in the learning process of a CNN: sparse interactions, parameter sharing, and equivariant representations [37]. Together, they make CNNs computationally efficient. Sparse interactions are also called sparse connectivity: each neuron is connected to only a limited number of neighboring neurons rather than to all neurons in the adjacent layer, so fewer calculations are required to obtain the output. Parameter sharing refers to the fact that the same kernel weights are used at every location of the input, which keeps the number of parameters under control. Moreover, the parameter sharing property makes the convolutional layer equivariant to translation. Take object detection as an example: no matter where the object is, it can be detected and recognized because the kernel is the same for different parts of the whole image. Though these three properties are basic, they contribute significantly to improving the efficiency of a neural network.
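Parameter sharing and translation equivariance can be checked numerically with a small 1-D example. The sketch below is only an illustration: the signal, the three-tap kernel, and the shift are arbitrary assumptions, and `np.roll` wraps values around the border, so the comparison is restricted to the positions unaffected by the wrap-around.

```python
import numpy as np

rng = np.random.default_rng(0)
signal = rng.normal(size=12)
kernel = np.array([1.0, 0.0, -1.0])  # illustrative edge-like filter

def conv1d(x, k):
    # Sliding dot product without padding (cross-correlation).
    return np.correlate(x, k, mode="valid")

shift = 2
shifted = np.roll(signal, shift)

out = conv1d(signal, kernel)
out_shifted = conv1d(shifted, kernel)

# Away from the wrapped-around border, shifting the input shifts the output.
equivariant = np.allclose(out_shifted[shift:], out[:-shift])

# Parameter sharing: the kernel has 3 weights regardless of the input length,
# whereas a dense layer with the same input and output sizes needs one weight
# per input-output pair.
conv_params = kernel.size
dense_params = signal.size * out.size
```

Here the convolution uses 3 weights while the equivalent dense mapping would need 120, which illustrates both the sparse connectivity and the parameter sharing arguments.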

2). Pooling Layer:

Pooling layers are also widely used in CNNs. This layer is designed for compressing the feature map to simplify the network computation and get the main feature of each feature map. Thus, the size of the images becomes smaller after the pooling layer. Similar to the convolutional layer, the stride, padding, and dilation concepts exist in the pooling layer as well. The relationship between the input size and the output size of the pooling layer is the same as (12).

There are two main kinds of pooling methods: 1) max pooling [38] and 2) average pooling. As Fig. 2(b) shows, for the max-pooling method, the maximum value within each pooling window is kept, while for the average pooling method the average of the values within each window is computed and kept. In practice, neither of the two methods appears to have a consistent advantage over the other.
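Both pooling methods can be sketched in a few lines of NumPy. The example assumes non-overlapping windows (stride equal to the window size) and a single-channel feature map; the function name `pool2d` and the sample values are illustrative.

```python
import numpy as np

def pool2d(x, size=2, mode="max"):
    """Non-overlapping pooling with a size x size window (stride = size)."""
    h, w = x.shape
    x = x[: h - h % size, : w - w % size]  # drop rows/columns that do not fill a window
    windows = x.reshape(h // size, size, w // size, size)
    if mode == "max":
        return windows.max(axis=(1, 3))
    if mode == "average":
        return windows.mean(axis=(1, 3))
    raise ValueError(f"unknown mode: {mode}")

feature_map = np.array([[1, 3, 2, 4],
                        [5, 7, 6, 8],
                        [9, 2, 1, 0],
                        [3, 4, 5, 6]], dtype=float)

max_pooled = pool2d(feature_map, mode="max")
avg_pooled = pool2d(feature_map, mode="average")
```

For this 4 × 4 input, both outputs are 2 × 2: max pooling keeps the strongest activation of each window, while average pooling summarizes each window by its mean.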

3). Upsampling Layer:

In applications of segmentation [33] and synthesis [39], upsampling layers are deployed to expand compressed features into the details of the output images. One of the simplest ways to expand the image size is to use interpolation, including nearest, linear, bilinear, trilinear, or bicubic resampling. Another approach is to reverse the concept of max pooling [40]. During the unpooling procedure, the maximum of each pooling window is written into a larger zero-filled matrix at the location it originally occupied. Because this tracks the locations of the strongest activations, it can reconstruct detailed features of an object [41]. Another approach is the dilated convolution [42]. A dilation convolution layer with a dilation less than one adds zeros between input pixels and then performs the convolution. The advantage of dilation convolution is that it extends the receptive field without sacrificing resolution. The unpooling layer and dilation convolution layers are shown in Fig. 2(d).
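Two of these upsampling strategies, nearest-neighbor interpolation and max unpooling, can be sketched in NumPy. The sketch assumes a single-channel input whose sides are divisible by the window size; the function names and the sample feature map are illustrative.

```python
import numpy as np

def upsample_nearest(x, factor=2):
    """Nearest-neighbor interpolation: repeat every pixel along both axes."""
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)

def max_pool_with_indices(x, size=2):
    """Max pooling that also records where each maximum sat in its window."""
    h, w = x.shape  # assumed divisible by size
    windows = x.reshape(h // size, size, w // size, size).transpose(0, 2, 1, 3)
    flat = windows.reshape(h // size, w // size, size * size)
    return flat.max(axis=-1), flat.argmax(axis=-1)

def max_unpool(pooled, indices, size=2):
    """Write each maximum back to its recorded location in a zero-filled map."""
    ph, pw = pooled.shape
    out = np.zeros((ph * size, pw * size), dtype=pooled.dtype)
    rows, cols = np.indices((ph, pw))
    out[rows * size + indices // size, cols * size + indices % size] = pooled
    return out

feature_map = np.array([[1, 3, 2, 4],
                        [5, 7, 6, 8],
                        [9, 2, 1, 0],
                        [3, 4, 5, 6]], dtype=float)

pooled, indices = max_pool_with_indices(feature_map)
unpooled = max_unpool(pooled, indices)
enlarged = upsample_nearest(np.array([[1, 2], [3, 4]]))
```

The unpooled map has the original 4 × 4 size, but only the four maxima are restored; all other entries remain zero, which is why unpooling is usually followed by further convolutions.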

4). Activation Layer:

Activation functions are designed to introduce nonlinearity into neural networks so that the network can approximate the true mapping between the input and output as closely as possible. Without nonlinear activation functions, the neural network cannot solve the “exclusive or” (xor) problem. In recent years, more and more activation layers have been put forward.

For the sigmoid activation function, no matter what the input value is, the output is limited to the range from 0 to 1, which can be thought of as a probability and is helpful for interpreting the result. It is widely used for binary classification problems by comparing the output with a threshold, which is usually set to 0.5. An output larger than 0.5 can be labeled as one class, while an output smaller than 0.5 can be labeled as the other class. However, it also has some problems. The first is the vanishing gradient problem. According to the derivative of the sigmoid, the gradient is almost zero when the input is larger than 10 or smaller than −10. This means the weights of such neurons will not be updated during backpropagation. As a result, the neuron cannot learn from the data, and the neural network cannot be optimized. The second is the nonzero-centered problem. The output of the sigmoid function ranges from 0 to 1, which requires more time to converge because the inputs for the next layer are all positive, and thus the gradients for all the neurons are in the same direction. This could introduce undesirable fluctuations during training. Third, the sigmoid function can be computationally time consuming because exponentiation is involved.

The tanh function and sigmoid function are very similar. They are both smooth, and their derivatives are easy to compute. The tanh function also has the vanishing gradient problem and the exponentiation computation problem. However, the tanh function output ranges from −1 to 1, so the tanh function solves the nonzero-centered problem.

The ReLU activation function [43] is widely and successfully used in many applications. It is defined as $f(x) = \max(0, x)$. Compared with other activation functions, the ReLU function alleviates the vanishing gradient problem to some degree. This is because, in the positive interval, the derivative is always one and therefore never approaches zero. Moreover, it is much faster to compute since it only requires a comparison with zero. Finally, it can accelerate convergence significantly as well. However, some limitations still exist. For example, the output of the ReLU function is also nonzero-centered. In addition, there could be some dead neurons due to the zero gradient when the input is negative. Dead neurons are neurons that can never be activated, so their weights can never be updated. To deal with the dead neuron problem, some improvements have been suggested, such as the Leaky ReLU, ELU [44], and PReLU [45] functions, which allow a small nonzero gradient in the negative interval. However, there is no strong evidence that the Leaky ReLU and ELU functions will always work better than the ReLU function.
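The behavior of these activation functions and of their gradients can be compared with a minimal NumPy sketch. The negative-side slope `alpha=0.01` of the Leaky ReLU is a commonly used default and is an assumption of this example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh_grad(x):
    return 1.0 - np.tanh(x) ** 2

def relu(x):
    return np.maximum(0.0, x)

def relu_grad(x):
    return (x > 0).astype(float)

def leaky_relu(x, alpha=0.01):
    return np.where(x > 0, x, alpha * x)

def leaky_relu_grad(x, alpha=0.01):
    return np.where(x > 0, 1.0, alpha)

inputs = np.array([-10.0, -1.0, 0.5, 10.0])
gradients = {
    "sigmoid": sigmoid_grad(inputs),        # nearly zero at -10 and 10
    "tanh": tanh_grad(inputs),              # also saturates at both ends
    "relu": relu_grad(inputs),              # 0 for negative inputs, 1 otherwise
    "leaky_relu": leaky_relu_grad(inputs),  # small but nonzero for negative inputs
}
```

Inspecting `gradients` shows the saturation of the sigmoid and tanh at large magnitudes, the constant unit gradient of the ReLU for positive inputs, and the small negative-side gradient that lets the Leaky ReLU avoid dead neurons.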

5). Batch Normalization Layer:

Batch normalization [46] was introduced by Ioffe and Szegedy in 2015. It is usually placed between the convolutional layer and the activation layer and is used to normalize the output of the convolutional layer. The details are shown in Fig. 2(c). A batch of data, $x_1$, $x_2$, and $x_3$, are the inputs of the convolutional layer. $z_1$, $z_2$, and $z_3$ are the outputs of the convolutional layer. Based on these outputs, the mean μ and standard deviation σ can be computed through the following formulas, where m is the number of samples in the batch:

$$\mu = \frac{1}{m} \sum_{i=1}^{m} z_i \tag{13}$$

$$\sigma = \sqrt{\frac{1}{m} \sum_{i=1}^{m} \left(z_i - \mu\right)^2} \tag{14}$$

Then, the normalized output $\hat{z}_i$ can be computed as follows:

$$\hat{z}_i = \frac{z_i - \mu}{\sigma} \tag{15}$$

After (15) is applied, the data distribution becomes stable with the average equaling zero and standard deviation equaling one. However, such simple normalization for the input of a layer may affect the layer’s nonlinearity. For example, if sigmoid is chosen as the activation layer, this normalization will force its inputs to be in the linear region, which reduces the nonlinearity. Therefore, two other parameters, γ and β, are introduced and an additional transformation is added to (15) just as (16) shows. γ and β are learned by the network during training and are used to scale and shift the distribution of the data, so the nonlinearity of the network can be restored

$$\tilde{z}_i = \gamma \hat{z}_i + \beta \tag{16}$$

Since the input of the activation layer has been processed into a certain range, this can reduce the likelihood of neurons getting into a saturated state. For example, if the sigmoid activation function is used and the input of the activation layer has been transferred into the range from −10 to 10, then the derivative of the sigmoid can hardly become zero. As a result, the neurons can keep learning and update their weights. Additionally, batch normalization can also improve the training speed, performance, and stability of neural networks because it allows each layer of the neural network to learn a little more independently of other layers. As a result, it is easier for the neural network to learn and converge.
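The normalization and the learned scale-and-shift step can be sketched as a training-time forward pass in NumPy. The small constant `eps`, added to the variance to avoid division by zero, is an assumption of this sketch (it is common in practice), as are the batch size and feature count; the running statistics that libraries keep for inference are omitted.

```python
import numpy as np

def batch_norm(z, gamma, beta, eps=1e-5):
    """Normalize each feature over the batch axis, then scale and shift."""
    mu = z.mean(axis=0)                     # per-feature batch mean
    var = z.var(axis=0)                     # per-feature batch variance
    z_hat = (z - mu) / np.sqrt(var + eps)   # zero mean, (almost) unit std
    return gamma * z_hat + beta             # learnable scale and shift

rng = np.random.default_rng(0)
z = rng.normal(loc=10.0, scale=5.0, size=(64, 3))  # 64 samples, 3 features

normalized = batch_norm(z, gamma=np.ones(3), beta=np.zeros(3))
rescaled = batch_norm(z, gamma=np.full(3, 2.0), beta=np.full(3, 3.0))
```

With γ = 1 and β = 0 the output has approximately zero mean and unit standard deviation per feature; other values of γ and β move the distribution to whatever scale and offset the network learns to prefer.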

6). Dropout Layer:

The dropout layer [47] is designed to mitigate the overfitting problem. Overfitting occurs when the model performs very well on the training dataset but performs badly on the validation dataset. This problem is often caused by a training dataset that is small compared with the number of parameters of the model. Since the problem is related to the number of parameters, the dropout layer randomly ignores a fraction of the neurons in a single layer (for example, half) during training, as shown in Fig. 2(e). In this way, the dependency between neurons in adjacent layers is weakened. As a result, neurons are forced to function more independently instead of depending on other neurons.
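A minimal NumPy sketch of the dropout operation is given below. It uses the so-called inverted dropout variant, in which the surviving activations are rescaled during training so that no change is needed at inference; this rescaling is a common implementation choice and an assumption of this sketch, not something stated in the text above.

```python
import numpy as np

def dropout(a, rate=0.5, training=True, rng=None):
    """Randomly zero a fraction `rate` of the activations during training."""
    if not training or rate == 0.0:
        return a  # dropout is disabled at inference time
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(a.shape) >= rate  # True for neurons that stay active
    return a * keep / (1.0 - rate)      # rescale so the expected value is unchanged

activations = np.ones(10000)
dropped = dropout(activations, rate=0.5, rng=np.random.default_rng(0))
unchanged = dropout(activations, training=False)
```

Because a different random mask is drawn at every training step, each neuron has to remain useful without relying on any particular neighbor.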

7). Fully Connected Layer:

In CNNs, fully connected layers are commonly used as the last few layers. They map the features learned in the previous layers to the labeled sample space. The first fully connected layer can convert a multidimensional feature map into a 1-D vector through the convolution operation. During the conversion, the kernel has the same size as the feature map, which means the same height, the same width, and the same depth. An activation function usually follows the first fully connected layer, and its output is the input of another fully connected layer. Similar to neurons in the MLP, the neurons in the fully connected layer are connected to each neuron in the previous activation layer, while the neurons in the same layer are independent.

In other neural networks, like the AUTOMAP DL network used for medical image reconstruction [48], fully connected layers can be used at any layer. However, just as introduced above, neurons in the fully connected layer will be connected to those in the previous layer, which means a huge number of model parameters are needed. In practice, it is hard to train a network with so many parameters due to both computing hardware limitations and challenges with the convergence of extremely large models.
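The equivalence between a fully connected layer and a convolution whose kernel is as large as the feature map, as well as the resulting parameter count, can be checked with a short NumPy sketch. The sizes (a 16 × 5 × 5 feature map mapped to 120 outputs) are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(16, 5, 5))   # depth, height, width
kernels = rng.normal(size=(120, 16, 5, 5))  # one full-size kernel per output neuron
bias = np.zeros(120)

# View 1: convolution with kernels as large as the feature map (one position only).
as_conv = np.einsum("ochw,chw->o", kernels, feature_map) + bias

# View 2: flatten the feature map and multiply by a weight matrix.
as_dense = kernels.reshape(120, -1) @ feature_map.ravel() + bias

n_params = kernels.size + bias.size  # 120 * (16 * 5 * 5) weights + 120 biases
```

Even this small layer holds 48,120 parameters, which hints at why fully connected layers applied to full-resolution images quickly become difficult to train.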

8). Infinite Possibilities:

In addition to the single layers introduced above, some other operations can also be applied between nonadjacent layers, like addition, subtraction, multiplication, and concatenation. These operations combine the outputs of different layers and make the design of the model more flexible. DL network layers can consist of any programmable operation.
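Two of these operations, element-wise addition and concatenation, are sketched below in NumPy. The arrays stand in for feature maps produced by an earlier and a later layer; their shapes and the channels-first layout are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
early = rng.normal(size=(8, 16, 16))  # (channels, height, width) from an earlier layer
late = rng.normal(size=(8, 16, 16))   # feature maps from a later layer

# Addition requires identical shapes and leaves the shape unchanged.
added = early + late

# Concatenation stacks the feature maps along the channel axis.
concatenated = np.concatenate([early, late], axis=0)
```

Addition keeps the number of channels fixed, whereas concatenation doubles it here, so the layer that follows a concatenation must accept the larger depth.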

III. Overview of Deep Learning Applications in Medical Imaging

Artificial neural networks evolved from the field of computer vision and have been successfully applied to solve a wide range of problems within the medical imaging field. Specifically, the utilization of DL in this area is greatly motivated by the fact that these methods are driven by the data, and no previous knowledge of the data is required [49]. As a result of this attribute and the uncertain nature of most medical imaging data, DL has become an excellent alternative to solve common problems in the field. Classification, regression, segmentation, and image synthesis and denoising are among the most common DL applications employed to address medical imaging challenges, such as disease detection and prognosis, automatic tumor detection, and image reconstruction. Moreover, the DL model architecture depends on the specific application. In this section, we will attempt to summarize the current literature on various challenges in the medical imaging field and the DL models that have been used to address them.

A. Classification and Regression

Image classification and regression are DL applications that share many network architecture features but are used to solve distinct types of medical imaging problems. The objective of classification is to sort input images into two or more discrete classes, such as to detect the presence or absence of disease. Meanwhile, image regression is concerned with providing a single continuously valued output from an image input, such as a clinically relevant score. For both applications, the network is required to have an input layer, followed by a fixed number of hidden layers and an output layer. Conventionally, there is a decrease in the number of filters or nodes as we move deeper into the network. A DL model used for image classification could be transformed into a regression model by simply modifying the activation function of its final output layer. For binary classification and for multiclass, multilabel classification, a sigmoid activation layer is utilized, while a SoftMax activation is used for multiclass, single-label classifiers [50]. In the case of regression, a linear activation is required for continuous output, and a sigmoid one for values between 0 and 1 [50]. Moreover, DL networks can be utilized to extract high-level features from data that are later inputted into a common ML model, such as a support vector machine (SVM) or Bayesian network (discussed in Section V), to perform the classification or regression task.
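The effect of the final activation can be illustrated with a small NumPy sketch that applies the three output choices to the same vector of raw network outputs. The values in `logits` are arbitrary and purely illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

logits = np.array([2.0, -1.0, 0.5])  # raw outputs of the last layer

multilabel = sigmoid(logits)     # independent probabilities, one per label
single_label = softmax(logits)   # probabilities that sum to one across classes
regression = logits              # linear activation: unbounded continuous output
```

The sigmoid outputs need not sum to one, so several labels can be predicted at once, whereas the SoftMax output forms a single distribution from which one class is chosen.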

Currently, DL classifiers and PET data have been broadly used within the neuroimaging field for the early detection of Alzheimer’s disease (AD), specifically within the mild cognitive impairment (MCI) phase, and Parkinson’s disease (PD). Several studies have performed AD/MCI classification using a combination of common ML techniques with DL methods. Unsupervised DL networks, such as the stacked autoencoder (SAE) [51], [52], deep Boltzmann machine (DBM) [53], and DBN [54], were applied to extract highly nonlinear features or patterns from PET and magnetic resonance imaging (MRI) data that were then inputted into an SVM classifier. Other studies utilized variants of deep multitask learning networks for feature selection in AD/MCI diagnosis [55], [56]. Furthermore, CNNs have been used for AD/MCI detection. PET image patches were inputted into a 2-D CNN to explore the effect of multiscale data individually [57] and combined with multimodal data [58] on the early diagnosis of AD. A CNN was used to predict cognitive decline in MCI patients (development of AD) [59]. Moreover, 3-D patches from PET images proved to be more effective than MRI patches when using a 3-D CNN for AD/MCI diagnosis [60]. Another way of including 3-D information in a DL classifier is by using 2-D image slices in different directions; Liu et al. included 3-D information in a classifier by inputting PET image slices of the brain in the axial, sagittal, and coronal directions into a DL network composed of convolutional and recurrent neural layers [61]. Moreover, the feasibility of amyloid PET images for early detection of AD was studied by training a 2-D CNN using amyloid positive and negative cases and testing it on equivocal cases [62], and a 3-D CNN was applied to combined amyloid PET and structural MRI data [63]. Multimodal hippocampal region of interest (ROI) images were applied to a 3-D VGG variant network for AD/MCI classification [64]. Finally, PET and single-photon emission computed tomography (SPECT) data along with CNNs and a LASSO sparse DBN have been utilized to detect neurological disorders, such as Parkinson’s disease [65], [66] and brain abnormalities [67].

DL-based classifiers have also proven to be beneficial within the cardiac and oncological imaging fields. Within the cardiac imaging field, a DL model (composed of convolutional and fully connected layers) and SPECT myocardial perfusion imaging were used to predict the presence of obstructive coronary artery disease [68]. Additionally, a pretrained Inception-v3 network [69] was utilized to extract high-level features from polar maps that, following feature selection, were inputted to an SVM model to classify between cardiac sarcoidosis (CS) and non-CS [70]. Meanwhile, in the oncology field, PET data and CNNs were used to predict treatment response in esophageal cancer patients after neoadjuvant chemotherapy [71] and radio-chemotherapy [72] and in chemoradiotherapy-treated cervical cancer patients [73]. Additionally, a Bayesian network has been used to explore the biophysical relationships influencing tumor response and to predict local control in lung cancer using dosimetric, biological, and radiomics data [74]. For more information regarding DL methods for image radiomics, please see [75]. CNNs were also used to detect mediastinal lymph node metastasis [76] and define the T-stage of lung cancer [77]. An automatic classification system to detect metastasis in bones was created using artificial neural networks, and its performance was compared with eight other ML methods, such as SVM [78]. Furthermore, multimodal imaging data and a residual network (RN) were utilized to detect the molecular subtype of lower-grade gliomas in the brain [79].

DL-based regression models are commonly applied to predict risk factors or treatment prognosis but can also be used to improve image quality. Ithapu et al. [80] explored the feasibility of an AD biomarker obtained from a randomized denoising autoencoder (AE) to filter out low-risk subjects from the MCI population and consequently reduce the sample size and variance requirements for clinical trials testing possible treatments for AD. The network predicted a risk factor (a value between 0 and 1) indicating how close an MCI patient was to developing AD [80]. Another study successfully input gray and white matter volumetric maps and raw T1 MRI images into a 3-D CNN to predict age [81]. PET data from normal subjects and a variational AE were used to determine how far from normality a brain was by predicting an abnormality score; the model was trained to learn the brain structure of normal subjects [82]. Unenhanced computed tomography (CT) images were used to predict the maximum SUV of lymph nodes as a surrogate endpoint for malignancy by applying a 3-D CNN [83]. The 3-D CNN architecture has also been used to predict survival risk prior to treatment from PET/CT images of rectal cancer patients [84]. Finally, a proof-of-concept study showed that CNNs can be used to improve the timing resolution for time-of-flight PET utilizing a simple detector setup [85].

B. Segmentation and Image Synthesis

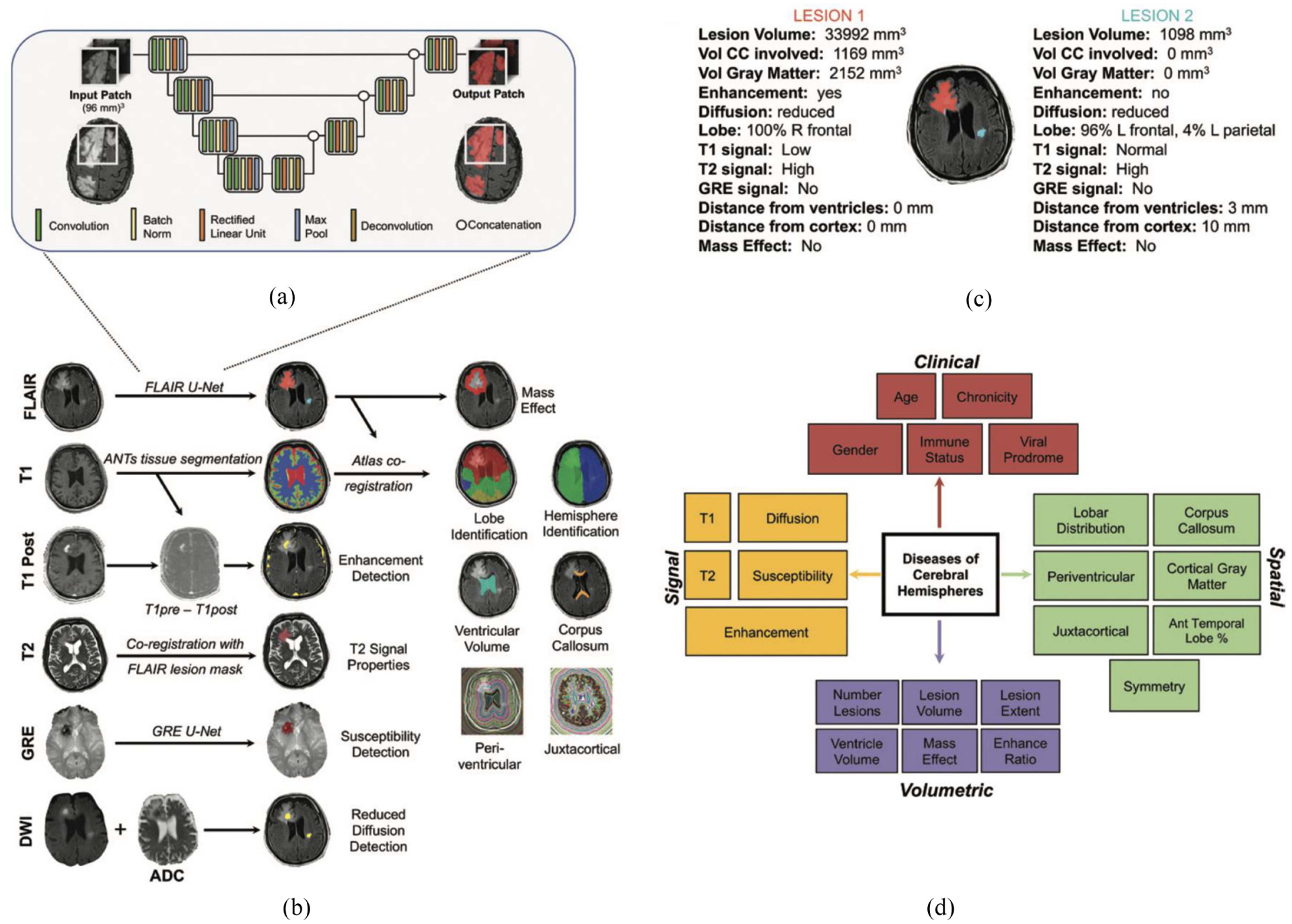

DL networks are currently implemented for medical image segmentation and synthesis. Image segmentation can be performed by assigning a class label to each individual pixel (semantic segmentation) or by delineating specific entities such as tumors and lesions (instance segmentation). The objective of image synthesis, in turn, is to obtain a desired or unavailable image from an available but distinct one. With regard to the network architecture, both applications may be performed by applying some variant of the typical encoder–decoder architecture, often realized as a “U-Net” [33]. In the U-Net architecture, the input image is downsampled in the encoder layers and then upsampled in the decoder layers, providing a continuous voxel-wise mapping from the input.

DL networks are commonly used to segment anatomical areas and detect tumors or lesions. Hu et al. utilized a 3-D CNN to conduct a semiautomated segmentation of the liver by learning subject-specific probability maps from CT images [86]. A generative adversarial network (GAN) was trained to segment white matter from brain PET images alone [87]. For tumor segmentation, a 3-D U-Net CNN was trained to automatically detect and segment brain lesions on PET images with a 0.88 and 0.99 specificity at the voxel level [88]. PET/CT image slices were input into a 2-D DL network for malignant gross tumor volume segmentation in the head and neck [89]. Meanwhile, Guo et al. [90] designed a 3-D DL network based on convolutional layers with dense connections for the same task. Zhao et al. [91] used a U-Net architecture to segment nasopharyngeal carcinoma tumors using PET/CT image slices. 3-D U-Nets have also been combined with a feature fusion module [92] and graph-cut-based co-segmentation [93] for lung tumor segmentation from PET/CT images. The output was a single tumor mask for the first method and two masks (one from each imaging modality) for the second one. Another study designed a variant 3-D U-Net architecture to segment lung tumors from PET/CT images, in which each input channel (an individual U-Net) shared complementary information in the decoder section with the other channel [94]. Likewise, a coarse U-Net and adversarial network have been used to segment extranodal natural killer/T cell lymphoma nasal cancer lesions [95], and the performance of a U-Net was compared with that of a W-Net [96] on segmenting multiple malignant bone lesions; both studies used PET/CT images [97]. Finally, Zhou and Chellappa applied a CNN combined with prior knowledge (roundness and relative position) to segment cervical tumors in PET images [38]. Regarding multimodal-based segmentation, Guo et al. 
explored the performance of three algorithmic architectures to integrate multimodal data when implemented as part of a segmentation system. MRI, PET, and CT images were used to train a CNN to segment lesions of soft tissue sarcomas, and the network showed performance superior to that of networks trained on single-modality images [98].

Some current DL-based image synthesis applications include PET attenuation correction and image reconstruction. DL methods have been utilized for attenuation correction of PET images acquired during simultaneous PET/MRI scans. Hwang et al. trained a convolutional AE (CAE), a U-Net, and a hybrid network of CAE and U-Net to synthesize a CT-derived brain μ-map from the maximum-likelihood reconstruction of activity and attenuation (MLAA) μ-map. The synthesized μ-map contained reduced crosstalk artifacts and was less noisy and more uniform, which could improve time-of-flight PET attenuation correction [99]. A similar variant of the U-Net was applied to synthesize whole-body CT μ-maps from MLAA-based simultaneous activity and attenuation maps, which identified bone structures more efficiently [100]. Liu et al. [101] designed a DL-based pipeline for MRI-based attenuation correction in PET/MRI. In this study, a CAE network was successfully trained to generate discrete-valued pseudo-CT brain scans from structural MRI images, and the generated pseudo-CT scans were applied to reconstruct PET images [101]. Likewise, a data-driven DL-based pipeline, known as “deepAC,” was created to perform PET attenuation correction without anatomical imaging by synthesizing a continuously valued pseudo-CT brain image from PET data [102]. Pseudo-CT brain images were also synthesized from structural MRI images using a DL adversarial semantic structure, which incorporates semantic structure learning and a GAN for PET attenuation correction, and its performance was compared with atlas-based approaches [103]. Atlas-based approaches for attenuation correction are likely to fail in the pediatric population because most of the available atlases are created using data from adult subjects [104]. For that reason, Ladefoged et al. 
[105] explored the feasibility of a modified version of a U-Net to synthesize attenuation maps from PET/MRI data in pediatric brain tumor patients. Additionally, a Dixon volumetric interpolated breath-hold examination (Dixon-VIBE) DL network (DIVIDE) that uses standard Dixon-VIBE images (in-phase, out-of-phase, and water 2-D Dixon slices) was trained to synthesize pseudo-CT maps for PET attenuation correction in the pelvis, introducing less bias than the gold standard CT-based approach [106]. Finally, a 3-D GAN with discriminative and cycle-consistency losses was proposed by Gong et al. to derive continuous attenuation correction maps from Dixon MRI images; the technique generated better pseudo-CT images than the segmentation and atlas methods, and its performance was comparable to that of a CNN-based one [107]. For a detailed overview of DL approaches for attenuation correction in PET, please refer to the following review article [108].

With regard to image reconstruction, AUTOMAP, a DL-based framework, was designed to reconstruct medical images from any modality using the raw data by mapping from the sensor domain to the image domain [48]. Similarly, DeepPET, a DL-based pipeline, was created to reconstruct quantitative PET images from sinogram data, producing less noisy and sharper images [109]. Furthermore, a stacked sparse AE was used for dynamic PET image reconstruction, and its performance was compared with that of conventional methods, such as maximum-likelihood expectation maximization (MLEM); it effectively smooths and suppresses noise and recovers more detail in edges and complex areas [110]. Another study utilized a CNN to synthesize patient-specific nuclear transmission data from T1 structural MRI data and evaluated the synthesized data on the reconstruction of both static and dynamic PET brain images [111]. DL networks have also been used to synthesize PET brain images from T1 MRI images [112] and vice versa [113], which may be useful when one of the scans is not available. Likewise, a CNN was used to predict 15O-water PET CBF images using single- and multi-delay arterial spin labeling and structural MRI images [114]. Additionally, a sketcher-refiner GAN with an adversarial loss function was trained to predict a PET-derived myelin content map from the MRI magnetization transfer ratio map and three measures derived from diffusion tensor imaging (DTI) [115]. Please refer to Reader et al. for a complete review of PET image reconstruction using DL approaches [116]. Finally, Shao et al. [117] utilized DL approaches to reconstruct SPECT images directly from projection data and the corresponding attenuation map. The proposed network utilized two fully connected layers for basic profile reconstruction followed by a 2-D convolutional layer in which the attenuation map was used to compensate and optimize the reconstructed image; this method proved to be robust to noise [117].

C. Image Denoising and Super-Resolution Tasks

DL methods are also used for image denoising and super-resolution applications. The objective of image denoising is to recover the ground-truth image from a noisy one. A residual encoder–decoder was first used to denoise CT images, where noise was added to the images to simulate a low-dose CT scan [39]. Shortly after, Shan et al. [118] introduced a conveying path-based convolutional encoder–decoder (CPCE) network for low-dose CT denoising using both real and simulated (added noise) data. The novelty of this approach lies in its transfer learning feature, in which a 3-D-CPCE network was initialized from a trained 2-D one, achieving better denoising results than training from scratch [118]. To better preserve structural and textural information, Yang et al. proposed a CT denoising method based on a GAN with Wasserstein distance and perceptual similarity (WGAN-VGG) [119]. The WGAN-VGG network solved the oversmoothing problem observed in other approaches, reduced noise, and improved lesion detection by increasing contrast when applied to simulated quarter-dose CT images [119]. Meanwhile, You et al. [120] implemented a 3-D GAN using a novel structurally sensitive loss function (SMGAN-3D) to also address the oversmoothing problem when denoising simulated quarter-dose CT images. The structurally sensitive loss function is a hybrid that integrates the best characteristics of mean- and feature-based methods, thus allowing noise and artifact suppression while preserving structure and texture [120]. Furthermore, noise2noise (N2N) methods have also been proposed to denoise low-dose CT images; N2N methods reduce noise using pairs of noisy images [121]. Hasan et al. [121] explored the potential collaboration between N2N generators for denoising of CT images by processing images from a phantom and showed that these collaborative generators outperformed the common N2N method. 
For denoising of single-channel CT images, an unsupervised learning approach known as REDAEP was introduced by Zhang et al., in which variable-augmented denoising AEs were used to train a higher-dimensional prior for the iterative reconstruction; this technique was tested using simulated and real data [122]. Finally, the use of a deep CNN has been explored to denoise low-dose CT perfusion raw data, the derived maps, or both, using digital brain perfusion phantoms and real images [123]. The results demonstrated that the network performs better when applied in the derived map domain [123]. Moreover, DL networks are used to denoise low-dose PET images to obtain high-quality scans that would otherwise be available only by applying a standard dose of radioactive tracer. Xiang et al. [124] input real low-dose PET (25% dose) and T1 MRI images into a deep auto-context CNN to predict standard-dose PET images. Similarly, Wang et al. [125] utilized locality adaptive multimodality GANs (LA-GANs) to synthesize high-quality PET images from low-dose PET and T1 MRI images alone or combined with DTI [126]. Meanwhile, Chen et al. [127] utilized simultaneously acquired MR images and simulated ultralow-dose PET images to synthesize full-dose amyloid PET images using an encoder–decoder CNN. Using computer-simulated data of the brain and lungs, Gong et al. [128] pretrained a residual deep neural network architecture to denoise PET images and fine-tuned it using real data; this method outperformed the traditional Gaussian filtering method.

Meanwhile, super-resolution applications are concerned with obtaining a high-resolution image from a low-resolution one. For example, Li et al. [129] applied a semi-coupled dictionary learning (SCDL) technique, an automatic feature learning method, to improve the resolution of 3-D high-resolution peripheral quantitative CT (HR-pQCT). The authors of this study applied a 2.5-D strategy to extract high- and low-resolution dictionaries from the coronal, sagittal, and axial directions separately and obtained superior performance compared with other methods, such as total variation regularization [129]. Additionally, an unsupervised super-resolution method based on dual GANs was introduced by Song et al. [130] to improve the resolution of PET images. This method eased the need for paired training inputs (low- and high-resolution images) by utilizing low-resolution PET and high-resolution MRI images, along with spatial coordinates, making the technique more practical because it is difficult to obtain ground-truth data in a clinical setting [130].

IV. Training Neural Networks

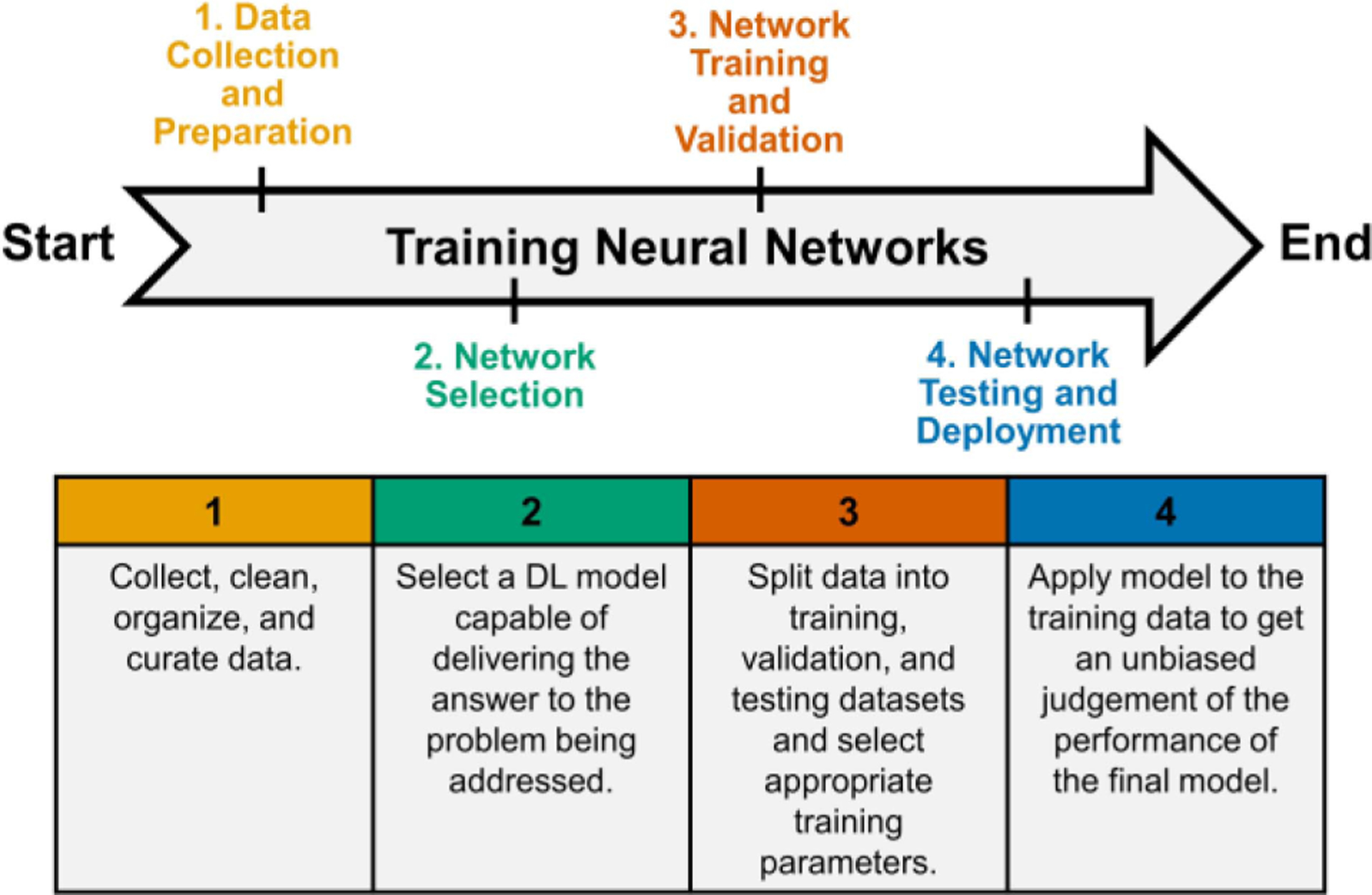

In this section, we define key steps necessary to implement a supervised DL application (Fig. 4). The steps as detailed below are data collection and preparation, network selection, network training and validation, and network testing and deployment.

Fig. 4.

Overview of steps necessary to implement a supervised DL application. The first step is concerned with data collection and preparation; the dataset should only contain true negative and true positive cases. The second step is to select a network architecture to perform the task at hand. The third and fourth steps include the training, validation, testing, and deployment of the final model.

A. Data Collection and Preparation

The data utilized when implementing a DL method can be acquired at the study site to address the research question at hand or obtained from a public medical imaging database. The objective of public medical imaging databases is to enhance the understanding of diseases by providing the scientific community with fair access to large quantities of high-quality imaging data. Examples of well-known public medical imaging databases are the Alzheimer’s Disease Neuroimaging Initiative (ADNI) [131] and the Cancer Imaging Archive (TCIA) [132]. The ADNI database provides MRI and PET images, genetics, cognitive tests, cerebrospinal fluid, and blood biomarkers with the goal of improving the characterization of Alzheimer’s disease [131]. Meanwhile, TCIA hosts multimodal images of cancer and allows scientists to publish new datasets after approval [132].

The data preparation step is often the most time-consuming part of the DL network training process. The purpose of this step is to clean, organize, and curate the data in a way that is useful for the task at hand and accessible for reproducibility and sharing purposes. The first step is to make sure the dataset contains only true negative and true positive cases, in other words, a good-quality ground truth. For example, when training a DL network for brain tumor detection, images containing other lesions, such as hematomas and infections, should not be included. Additionally, images should be normalized to alleviate any error due to acquisition with different scanners or protocols. The images should all be saved in the same format and have the same size, resolution, and pixel scale. The only differences between images should be those features related to the problem the DL network will be trained to solve. Methods to alleviate hidden bias introduced during the data acquisition process are discussed in Section V.
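
As a minimal sketch of the intensity normalization mentioned above, the following example rescales each image to zero mean and unit variance. It assumes images are held as nested lists of pixel values; the helper name `zscore_normalize` and the toy scans are hypothetical, and z-scoring is only one of several normalization schemes that could be used.

```python
from statistics import mean, pstdev

def zscore_normalize(image):
    """Rescale a 2-D image (list of rows) to zero mean and unit variance."""
    pixels = [p for row in image for p in row]
    mu = mean(pixels)
    sigma = pstdev(pixels)
    if sigma == 0:
        # A constant image carries no contrast; map it to all zeros.
        return [[0.0 for _ in row] for row in image]
    return [[(p - mu) / sigma for p in row] for row in image]

# Two toy "scans" showing the same pattern on different pixel scales,
# as might happen with different scanners or protocols.
scan_a = [[100, 200], [300, 400]]
scan_b = [[1.0, 2.0], [3.0, 4.0]]
norm_a = zscore_normalize(scan_a)
norm_b = zscore_normalize(scan_b)
```

After normalization, the two toy scans become numerically equivalent, so the remaining differences between images reflect content rather than acquisition scale.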

B. Network Selection

After data preparation, a DL model structured to deliver the answer to the problem being addressed should be selected. Currently, deep FNNs are the most common type of DL network applied to solve medical imaging problems. FNNs are characterized by information moving in only one direction (no feedback) and usually contain an input layer, one or more hidden layers, and an output layer. In addition, in FNNs, each unit of one layer is connected to every unit in the next one, and there is no connection between neurons in the same layer. In this section, we present the FNN model architectures commonly applied within the medical imaging field.

1). Feedforward Neural Network:

CNN: A CNN is a DL model capable of detecting spatial and temporal high-level features from input images. The key components of CNNs are convolutional layers, which convolve learned filters with the input images; the learned weights and biases assign importance to individual features and allow them to be differentiated from one another [133]. Examples of well-known CNN architectures are LeNet-5 [24], AlexNet [26], ZFNet [40], GoogLeNet/Inception [28], VGGNet [27], and U-Net [33].

RN: An RN is a DL model characterized by skip connections that link the output of earlier layers to the output of later layers [134]. Like CNNs, RNs can detect high-level features from images. For example, an RN could have a connection from the output of layer 1 to the input and output of layer 2. Examples of RN architectures are ResNet [134] and DenseNet [135].

AE: An AE is a neural network capable of performing dimensionality/feature reduction by learning/encoding features from a given dataset [136]. Examples of AE architectures are sparse [137] and variational [138] AEs.

GAN: A GAN is a DL model capable of synthesizing entirely new data by training a generative network and a discriminative network against each other [139]. The objective of the generative network is to create artificial data from the distribution space of the input dataset [139]. Meanwhile, the discriminative network attempts to discriminate between the artificial and real data [139]. As a result, the generative network is trained to increase the error rate of the discriminative one by creating more realistic data [139].

Restricted Boltzmann Machine (RBM): An RBM is a stochastic DL model that learns the probability distribution of the input dataset [140]. This type of network is characterized by having one visible layer and one hidden layer (there is no output layer); every unit in the visible layer is connected to every unit within the hidden layer, but there is no connection between units/nodes in the same layer [140]. Examples of RBM-based architectures are the deep belief network [141], which contains stacked RBMs, and the deep Boltzmann machine [142].
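
To make the convolution operation at the core of the CNNs described above more concrete, the following sketch slides a small filter over a toy image. It is an illustration under simplifying assumptions (no padding, stride 1, a single channel, and a hand-crafted filter rather than learned weights); the function name `conv2d_valid` is hypothetical. As in most DL frameworks, the operation shown is technically a cross-correlation, since the filter is not flipped.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output

# Hand-crafted vertical-edge filter; in a CNN these weights are learned.
edge_kernel = [[-1, 1]]
image = [[0, 0, 5, 5],
         [0, 0, 5, 5]]
feature_map = conv2d_valid(image, edge_kernel)
```

The resulting feature map is nonzero only where the intensity changes from one column to the next, which is the sense in which a filter responds to a specific feature.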

C. Network Training and Validation

1). Train, Validate, and Split:

Before training starts, the data need to be divided into three parts: the training dataset, the validation dataset, and the test dataset. In the training phase, only the training dataset and the validation dataset are needed. The test dataset should be used only after model training has finished. Ripley [143] defined the training set, validation set, and test set as follows:

“– Training set: A set of examples used for learning, that is to fit the parameters of the classifier.

– Validation set: A set of examples used to tune the parameters of a classifier, for example to choose the number of hidden units in a neural network.

– Test set: A set of examples used only to assess the performance of a fully specified classifier.”

The training dataset is used to fit the model; the model learns from these data directly. The validation dataset provides an unbiased evaluation of a model fit on the training dataset. This evaluation guides the tuning of hyperparameters, which are set manually before training, such as the depth of the model, the training batch size, the loss function, and the optimizer (the latter two are introduced in the following parts). An improved model should also perform better on the validation dataset, so the validation dataset affects the model indirectly. In short, both datasets are involved in training: the training dataset fits the model directly, whereas the validation dataset influences it indirectly through hyperparameter tuning.

Which strategies are best for splitting the dataset? A common rule of thumb is a training-to-validation ratio of 8:2 or 7:3. In practice, the appropriate ratio depends on the size of the whole dataset and the number of parameters of the model. If the dataset is very large compared with the number of model parameters, the exact proportion has little effect on the training result, because both the training and validation portions are large enough to train the model and to give an accurate evaluation of its performance. However, if the dataset is small, the split ratio should be considered carefully. A model with few hyperparameters is easy to validate and tune, so the size of the validation dataset can be reduced; conversely, if the model is complex and has many hyperparameters, the proportion of the validation dataset should be increased. In summary, the train–validation split depends on the volume of the dataset and the complexity of the chosen model.
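
The 8:2 rule of thumb can be sketched as follows. This example assumes that a separate test set has already been held out and that samples are identified by integer IDs; the function name `train_val_split`, the fixed random seed, and the ID list are illustrative choices.

```python
import random

def train_val_split(samples, val_fraction=0.2, seed=0):
    """Shuffle a copy of `samples` and split it into (train, validation)."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]

subject_ids = list(range(100))
train_ids, val_ids = train_val_split(subject_ids, val_fraction=0.2)
```

In medical imaging, it is usually advisable to split at the subject level, as assumed here, so that images from the same patient do not appear in both sets.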

In addition, class imbalance needs to be considered carefully during data splitting. For example, consider the situation where negative examples make up 90% of the database while positive ones make up only 10%. Under such circumstances, it is not suitable to use 0.5 as the decision threshold and accuracy as the indicator of a model’s performance, because a model that always predicts the negative class, regardless of the input, still achieves 90% accuracy.

Several simple methods have been proposed to solve this problem, including undersampling, oversampling, and threshold moving. Undersampling deletes some data from the majority class, while oversampling duplicates data from the minority class. However, both methods noticeably change the data distribution: undersampling wastes precious data, and oversampling can lead to overfitting. Compared with these two methods, moving the threshold is often a better choice. The new threshold should equal the ratio of minority-class examples to the total number of examples. The evaluation method should also be modified, for example by using receiver operating characteristic curve analysis.
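
The imbalance problem and the threshold-moving remedy can be illustrated with a short sketch. The 90/10 class ratio follows the example in the text; the predicted probabilities and the helper names `accuracy` and `predict` are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def predict(probabilities, threshold):
    """Label a case positive when its predicted probability reaches the threshold."""
    return [1 if p >= threshold else 0 for p in probabilities]

# 90 negative and 10 positive cases.
labels = [0] * 90 + [1] * 10

# A degenerate model that always predicts "negative" still scores 90% accuracy.
always_negative = [0] * len(labels)
baseline_accuracy = accuracy(labels, always_negative)

# Threshold moving: use the minority-class ratio instead of 0.5.
moved_threshold = sum(labels) / len(labels)

# Hypothetical predicted probabilities for three cases.
probabilities = [0.05, 0.30, 0.60]
default_calls = predict(probabilities, 0.5)
moved_calls = predict(probabilities, moved_threshold)
```

With the default threshold, the case with probability 0.30 is called negative; with the moved threshold of 0.1, it is flagged as positive.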

However, the methods mentioned above cannot provide an accurate estimate of model performance, since the validation results fluctuate significantly with different splits between the training dataset and the validation dataset [144]. Cross-validation is frequently used to solve this problem. Among the various cross-validation methods, k-fold cross-validation is the most commonly used. The first step is to choose the single parameter of this method, k, which is the number of groups into which the dataset is split. Kohavi [145] suggested that tenfold cross-validation is sufficient for model selection even if computation power allows using more folds. The second step is to divide the dataset into k groups. Then, k − 1 groups are selected as the training dataset and the remaining group as the validation dataset; there are k different such selections. Finally, the model is trained on each training dataset. As a result, k different models are obtained, whose performance can be assessed across the cross-validated runs; if necessary, the single best-performing model can be selected according to its performance on its corresponding validation dataset.
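
The k-fold procedure described above can be sketched as an index generator. This is a minimal illustration that assumes the data have already been shuffled; the function name `k_fold_indices` is hypothetical, and the model training step is omitted.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

folds = list(k_fold_indices(n_samples=10, k=5))
```

Each of the k pairs would be used to train and validate one model, and the k validation scores would then be summarized, for example by their mean.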

2). Loss Functions:

As described above, deep neural networks learn by means of a loss function. The goal of training is to minimize the loss as much as possible, so loss functions play an important role in training the model. Take the classification problem as an example. Early in training, when the predictions deviate strongly from the actual results, the loss is large. With the help of an optimization algorithm, the model improves and the loss goes down. Different loss functions have been designed to solve different problems. Broadly, most problems can be seen as either a regression problem or a classification problem. In practice, any programmable function can be used as a loss function. Here, we highlight the most commonly utilized approaches.

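In regression and image synthesis, the model outputs a continuous value. Three loss functions are commonly used for this kind of problem: 1) mean-square error (MSE); 2) mean absolute error (MAE); and 3) Huber loss [146]. If $m$ is the number of training samples, $y^{(i)}$ is the ground truth, and $f(x^{(i)})$ is the prediction of the model, the corresponding formula for each loss function is as follows.

- MSE:

$$L_{\mathrm{MSE}} = \frac{1}{m}\sum_{i=1}^{m}\left(y^{(i)} - f(x^{(i)})\right)^{2} \tag{17}$$

- MAE:

$$L_{\mathrm{MAE}} = \frac{1}{m}\sum_{i=1}^{m}\left|y^{(i)} - f(x^{(i)})\right| \tag{18}$$

- Huber loss:

$$L_{\delta} = \frac{1}{m}\sum_{i=1}^{m}\begin{cases}\frac{1}{2}\left(y^{(i)} - f(x^{(i)})\right)^{2}, & \left|y^{(i)} - f(x^{(i)})\right| \le \delta \\ \delta\left|y^{(i)} - f(x^{(i)})\right| - \frac{1}{2}\delta^{2}, & \text{otherwise}\end{cases} \tag{19}$$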

According to the above formulas, the derivative of the MSE is continuous, while that of the MAE is not. Additionally, the gradient of the MSE shrinks as the error decreases, which helps the model converge, whereas the gradient of the MAE keeps nearly the same magnitude regardless of the error. However, the MSE is more sensitive to outliers, while the MAE is more robust. This is because if $|y^{(i)} - f(x^{(i)})| > 1$, squaring makes the contribution to the MSE much larger than the corresponding contribution to the MAE. Therefore, neither loss has a definite advantage over the other; in practice, the choice depends on the application. For example, if sensitivity to outliers is important because they should be detected, then the MSE is the better choice.

However, under some circumstances, neither MSE nor MAE is suitable. For example, suppose the target of 90% of the training samples is 150 while that of the rest ranges from 0 to 30. The MAE will ignore the outliers and give the same prediction, 150, all the time. The MSE will be strongly affected by the outliers and, as a result, will perform poorly on most of the data. The Huber loss was proposed to combine the benefits of MSE and MAE. Here, δ is a hyperparameter that controls which errors are treated as outliers. According to the formula, normal data and outliers are penalized differently (quadratically and linearly, respectively), so the loss performs better in general as long as a suitable value for δ has been chosen.
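
The behavior of the three regression losses in the presence of an outlier can be checked with a short sketch. It assumes the standard textbook definitions of MSE, MAE, and Huber loss, with the Huber threshold δ passed as `delta`; the toy targets and predictions are made up for illustration.

```python
def mse(y_true, y_pred):
    """Mean-square error."""
    return sum((y - f) ** 2 for y, f in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(y - f) for y, f in zip(y_true, y_pred)) / len(y_true)

def huber(y_true, y_pred, delta=1.0):
    """Huber loss: quadratic for small errors, linear beyond `delta`."""
    total = 0.0
    for y, f in zip(y_true, y_pred):
        err = abs(y - f)
        if err <= delta:
            total += 0.5 * err ** 2
        else:
            total += delta * err - 0.5 * delta ** 2
    return total / len(y_true)

y_true = [1.0, 2.0, 3.0, 100.0]   # the last target is an outlier
y_pred = [1.0, 2.0, 3.0, 4.0]
loss_mse = mse(y_true, y_pred)
loss_mae = mae(y_true, y_pred)
loss_huber = huber(y_true, y_pred, delta=1.0)
```

The single outlier dominates the MSE because its error is squared, whereas the MAE and Huber losses grow only linearly with it.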

In classification and segmentation, the output of the model should be in a set of finite categorical values. The classification problem can be divided into a binary classification problem or a multiclass classification problem based on the number of categories. For example, when dealing with a single-digit recognition problem, the prediction of the model should be one of the numbers from 0 to 9. Since there are ten categories, it belongs to the multiclass classification problem. Binary cross-entropy is the recommended loss function for solving the binary classification problem, while multiclass cross-entropy loss is suggested for the multiclass classification problem.

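The following formula shows how the binary cross-entropy loss is computed, where $m$ is the number of training samples, $y^{(i)}$ is the ground truth for the sample $x^{(i)}$, and $p^{(i)}$ is the prediction of the model, that is, the probability that the input belongs to a certain class:

$$L_{\mathrm{BCE}} = -\frac{1}{m}\sum_{i=1}^{m}\left[y^{(i)}\log p^{(i)} + \left(1 - y^{(i)}\right)\log\left(1 - p^{(i)}\right)\right] \tag{20}$$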

For the multiclass cross-entropy loss, $m$ is again the number of training samples and $C$ is the number of categories. $y_j^{(i)}$ is the ground truth indicating whether the $i$th training sample belongs to the $j$th category, while $p_j^{(i)}$ is the prediction of the model, that is, how likely the $i$th training sample is to belong to the $j$th category:

$$L_{\mathrm{CE}} = -\frac{1}{m}\sum_{i=1}^{m}\sum_{j=1}^{C} y_j^{(i)}\log p_j^{(i)} \tag{21}$$
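
The two cross-entropy losses can be sketched as follows, assuming their standard definitions, with one-hot ground-truth vectors in the multiclass case. The small constant `eps`, which guards against taking the logarithm of zero, and the toy predictions are assumptions added for this illustration.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy; `p_pred` holds probabilities of class 1."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)   # keep log() finite
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)

def categorical_cross_entropy(y_onehot, p_pred, eps=1e-12):
    """Mean multiclass cross-entropy with one-hot targets."""
    total = 0.0
    for y_row, p_row in zip(y_onehot, p_pred):
        total += sum(y * math.log(max(p, eps)) for y, p in zip(y_row, p_row))
    return -total / len(y_onehot)

# Confident and correct predictions give a small loss ...
confident = binary_cross_entropy([1, 0], [0.9, 0.1])
# ... while confident but wrong predictions are penalized heavily.
wrong = binary_cross_entropy([1, 0], [0.1, 0.9])
# The same two samples written as a two-class problem (columns: class 0, class 1).
multiclass = categorical_cross_entropy([[0, 1], [1, 0]], [[0.1, 0.9], [0.9, 0.1]])
```

With two categories, the multiclass formula reduces to the binary one, which the last line illustrates.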

In addition to the basic loss functions introduced above, there are some other complex loss functions designed for some specific applications, like the perceptual loss function for style transfer [147]. Furthermore, custom loss functions can also be developed and used according to the specific problem.

3). Optimizers:

In the previous section, several loss functions were introduced. However, the loss is just a scalar measure of the discrepancy between the estimated output and the desired output. How does the model improve this estimate during the training process? This is where optimizers come in. They tie together the loss function and model parameters by updating the model in response to the output of the loss function. In other words, optimizers use the loss function to update the model parameters according to a certain rule. Gradient descent is the classic optimizer; however, other improved strategies have been proposed.

a). Gradient descent:

The rule for the parameter update is as follows:

$$\theta = \theta - \eta \nabla_{\theta} J(\theta) \tag{22}$$

Here, θ stands for the model’s parameters, η is the learning rate, and $\nabla_{\theta} J(\theta)$ is the gradient of the loss function $J$ with respect to the parameters θ. In gradient descent, the loss is computed with the whole dataset, so the computed gradient is accurate. However, it is hard to incorporate new data while the model is being updated. Moreover, it can be very time consuming if the dataset is very large.

Besides the dataset limitation of gradient descent, the learning rate is a hyperparameter, which should be given in advance. The learning rate has significant effects on the outcome of training, so the value should be chosen carefully. Fig. 5 shows the impact of the learning rate. If the learning rate is too large, which means the weights change too fast, it may be hard for the model to converge to the optimal value. However, if the learning rate is too low, which means the weights are updated very little at each step, it may take a long time to train the model, and the model is more likely to converge on a local minimum. In summary, the learning rate plays an important role in the gradient descent algorithm, and it should be chosen carefully.
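
The effect of the learning rate can be reproduced on a toy problem. The sketch below applies plain gradient descent to the one-parameter objective J(θ) = θ², chosen only because its gradient, 2θ, is easy to verify; the function name `gradient_descent`, the starting point, and the three learning rates are illustrative.

```python
def gradient_descent(lr, steps=50, theta=5.0):
    """Minimize J(theta) = theta**2, whose gradient is 2 * theta."""
    for _ in range(steps):
        grad = 2.0 * theta
        theta = theta - lr * grad
    return theta

too_small = gradient_descent(lr=0.001)   # barely moves from the start
reasonable = gradient_descent(lr=0.1)    # approaches the minimum at 0
too_large = gradient_descent(lr=1.1)     # overshoots and diverges
```

After 50 steps, only the intermediate learning rate ends close to the minimum: the smallest one has made little progress, and the largest one has moved farther away than where it started.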

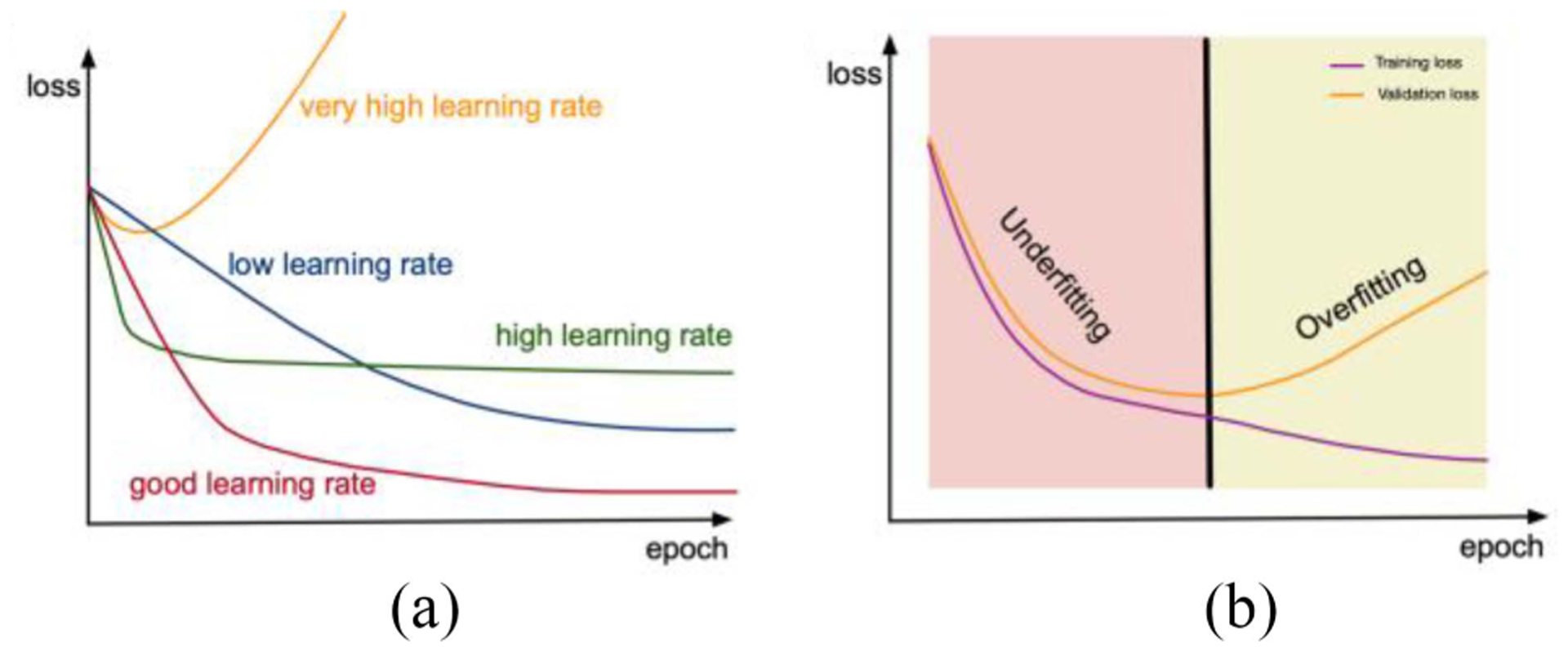

Fig. 5.

(a) Graphical depiction of training loss with varying learning rates. The optimal selection of learning rate can provide the lowest possible training loss. (b) Graphical depiction of training and validation loss. Overfitting can be observed when the validation loss increases while the training loss continues to decrease.

b). Stochastic gradient descent:

$$\theta = \theta - \eta \nabla_{\theta} J\left(\theta; x^{(i)}, y^{(i)}\right) \tag{23}$$

Here, $J$ is the loss function and $(x^{(i)}, y^{(i)})$ is a single training sample. Since it is time consuming to compute the gradient with the whole dataset, a lot of time is saved if only one sample is used for each update. This is what stochastic gradient descent does. In this way, it is much quicker to train the model. However, the loss might fluctuate significantly. Additionally, since the gradient is not accurate, the model may converge on a local minimum, which may reduce accuracy compared with gradient descent.

c). Mini-batch gradient descent:

$$\theta = \theta - \eta \nabla_{\theta} J\left(\theta; x^{(i:i+n)}, y^{(i:i+n)}\right) \tag{24}$$

Mini-batch gradient descent uses n samples to compute the gradient instead of only one. This reduces the variance of the parameter updates, and the final convergence is more stable as well.

However, this algorithm has two drawbacks. First, the mini-batch approach cannot guarantee optimal convergence. If the learning rate is too high, the loss will fluctuate around a minimum or even diverge. If the loss function is not convex, it is also easy to become stuck in a local minimum. Second, all parameters are updated with the same learning rate, which may not be reasonable. For features with lower frequency, the learning rate should be larger than for features with higher frequency. Moreover, the learning rate should become smaller as the number of training epochs increases.
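
The three variants differ only in how many samples enter each gradient computation, which the following sketch exposes through a `batch_size` argument: 1 corresponds to stochastic gradient descent, the dataset size to full gradient descent, and anything in between to mini-batch gradient descent. The one-parameter model y = w·x, the noise-free toy data, and the function name `minibatch_gd` are assumptions made for illustration.

```python
import random

def minibatch_gd(xs, ys, batch_size, lr=0.05, epochs=200, seed=0):
    """Fit y = w * x by minimizing the mean squared error over mini-batches."""
    rng = random.Random(seed)
    w = 0.0
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)                      # new sample order every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of mean((w*x - y)**2) with respect to w over the batch.
            grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad
    return w

xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 2.0, 4.0, 6.0]                      # generated with w = 2, no noise
w_sgd = minibatch_gd(xs, ys, batch_size=1)
w_mini = minibatch_gd(xs, ys, batch_size=2)
w_full = minibatch_gd(xs, ys, batch_size=len(xs))
```

On this noise-free problem all three settings recover w ≈ 2; with noisy data, smaller batches would produce noisier updates.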

d). Momentum [148]:

To solve the aforementioned drawback, the concept of momentum has been introduced

$$v_t = \gamma v_{t-1} + \eta \nabla_{\theta} J(\theta) \tag{25}$$

$$\theta = \theta - v_t \tag{26}$$

$\gamma v_{t-1}$ is the momentum term, and $\gamma$ is often set to 0.9. With this term, updates grow in dimensions where the gradient keeps the same direction and shrink in dimensions where the gradient direction keeps changing, which can speed up convergence and reduce oscillation. However, momentum is not smart enough to slow the update rate before the loss becomes larger.
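A minimal sketch of the momentum update follows, compared against plain gradient descent with the same learning rate. The toy objective J(θ) = θ², the starting point, and the function names `sgd_momentum` and `plain_gd` are assumptions made for illustration only.

```python
def sgd_momentum(grad_fn, theta, lr=0.01, gamma=0.9, steps=300):
    """Gradient descent with a momentum term gamma * v_{t-1}."""
    v = 0.0
    for _ in range(steps):
        v = gamma * v + lr * grad_fn(theta)  # velocity accumulates past gradients
        theta = theta - v                    # move along the velocity
    return theta


def plain_gd(grad_fn, theta, lr=0.01, steps=300):
    """Reference: gradient descent without momentum."""
    for _ in range(steps):
        theta = theta - lr * grad_fn(theta)
    return theta


def grad(theta):
    return 2.0 * theta  # gradient of the toy objective J(theta) = theta**2


print("momentum:", abs(sgd_momentum(grad, 5.0)))
print("plain GD:", abs(plain_gd(grad, 5.0)))
```

On this toy problem, the gradient keeps the same sign for many consecutive steps, so the accumulated velocity brings the parameter closer to the minimum than plain gradient descent does in the same number of steps.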

e). Nesterov accelerated gradient [149]:

To make momentum smarter, the Nesterov accelerated gradient has been proposed

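$$v_t = \gamma v_{t-1} + \eta \nabla_{\theta} J(\theta - \gamma v_{t-1}) \tag{27}$$

$$\theta = \theta - v_t \tag{28}$$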

The approximate future position $\theta - \gamma v_{t-1}$ is used to compute the gradient instead of the current position $\theta$.
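The look-ahead step can be sketched as follows. The only change relative to classical momentum is the point at which the gradient is evaluated. The function name `nesterov` and the toy objective J(θ) = θ² are illustrative assumptions.

```python
def nesterov(grad_fn, theta, lr=0.01, gamma=0.9, steps=300):
    """Nesterov accelerated gradient: evaluate the gradient at the look-ahead point."""
    v = 0.0
    for _ in range(steps):
        lookahead = theta - gamma * v            # approximate future position
        v = gamma * v + lr * grad_fn(lookahead)  # gradient taken at the look-ahead
        theta = theta - v
    return theta


def grad(theta):
    return 2.0 * theta  # gradient of the toy objective J(theta) = theta**2


print(abs(nesterov(grad, 5.0)))
```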

f). Adagrad [150]:

Adagrad aims at solving the second drawback of a mini-batch gradient algorithm, a constant learning rate

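$$g_{t,i} = \nabla_{\theta} J(\theta_{t,i}) \tag{29}$$

$$\theta_{t+1,i} = \theta_{t,i} - \frac{\eta}{\sqrt{G_{t,ii} + \varepsilon}}\, g_{t,i} \tag{30}$$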

$g_{t,i}$ is the gradient of $\theta_i$ at time $t$, and it is the same as $\nabla_{\theta} J(\theta_{t,i})$. $G_t$ here is a diagonal matrix where each diagonal element $G_{t,ii}$ is the sum of the squares of the gradients with respect to $\theta_i$ up to time step $t$, while $\varepsilon$ is a smoothing term that avoids division by zero. $\eta$ is the initial learning rate, which is often set to 0.01. According to the formula, the learning rate is low for those parameters that have been updated frequently. However, the denominator is an accumulated sum, which keeps growing as training goes on. As a result, the learning rate eventually becomes very close to zero, which effectively ends training prematurely. To solve this problem, RMSprop and Adadelta have been proposed.
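The per-parameter behavior can be sketched in a few lines. The example below is an illustration only: the two-parameter objective J(θ) = θ₀² + 10θ₁², the function name `adagrad`, and the returned history of effective learning rates are assumptions introduced here.

```python
import math


def adagrad(grad_fn, theta, lr=0.01, eps=1e-8, steps=100):
    """Adagrad with one accumulated squared-gradient sum per parameter."""
    theta = list(theta)
    G = [0.0] * len(theta)  # diagonal entries G_{t,ii}
    history = []            # effective learning rate of each parameter per step
    for _ in range(steps):
        g = grad_fn(theta)
        for i in range(len(theta)):
            G[i] += g[i] ** 2
            theta[i] -= lr / math.sqrt(G[i] + eps) * g[i]
        history.append([lr / math.sqrt(G_ii + eps) for G_ii in G])
    return theta, history


def grad(theta):
    # Gradient of the toy objective J = theta_0**2 + 10 * theta_1**2.
    return [2.0 * theta[0], 20.0 * theta[1]]


theta, history = adagrad(grad, [1.0, 1.0])
print("effective rates, first step:", history[0])
print("effective rates, last step: ", history[-1])
```

The parameter with the larger gradients receives the smaller effective learning rate, and both rates only shrink over time, which is the vanishing learning rate described above.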

g). RMSprop:

The very small learning rate is caused by the accumulated sum, so this problem can be solved by replacing the accumulated sum with a decaying average of the squared gradients. Hinton proposed the following update rule:

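$$E[g^2]_t = 0.9\, E[g^2]_{t-1} + 0.1\, g_t^2 \tag{31}$$

$$\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{E[g^2]_t + \varepsilon}}\, g_t \tag{32}$$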

η is recommended to be set as 0.001.
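A minimal sketch of this rule is given below, again on the toy objective J(θ) = θ². The function name `rmsprop` and the decision to return the running average alongside the parameter are assumptions made for illustration.

```python
import math


def rmsprop(grad_fn, theta, lr=0.001, decay=0.9, eps=1e-8, steps=2000):
    """RMSprop: scale each step by a decaying average of squared gradients."""
    avg_sq = 0.0
    for _ in range(steps):
        g = grad_fn(theta)
        avg_sq = decay * avg_sq + (1.0 - decay) * g * g  # decaying average, not a sum
        theta -= lr / math.sqrt(avg_sq + eps) * g
    return theta, avg_sq


def grad(theta):
    return 2.0 * theta  # gradient of the toy objective J(theta) = theta**2


theta, avg_sq = rmsprop(grad, 1.0)
print("theta:", theta, "running average of g^2:", avg_sq)
```

Unlike the accumulated sum in Adagrad, the running average stays bounded by the largest squared gradient seen so far, so the effective learning rate does not decay to zero.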

h). Adadelta [151]:

Adadelta is very similar to RMSprop in the first phase

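$$E[g^2]_t = \gamma E[g^2]_{t-1} + (1 - \gamma)\, g_t^2 \tag{33}$$

$$\mathrm{RMS}[g]_t = \sqrt{E[g^2]_t + \varepsilon} \tag{34}$$

$$\Delta\theta_t = -\frac{\eta}{\mathrm{RMS}[g]_t}\, g_t \tag{35}$$

However, $\eta$ can be eliminated by applying a similar idea to the parameter updates, which means the initial learning rate is no longer needed

$$E[\Delta\theta^2]_t = \gamma E[\Delta\theta^2]_{t-1} + (1 - \gamma)\, \Delta\theta_t^2 \tag{36}$$

$$\mathrm{RMS}[\Delta\theta]_t = \sqrt{E[\Delta\theta^2]_t + \varepsilon} \tag{37}$$

As a result, the final form of the rule should be

$$\Delta\theta_t = -\frac{\mathrm{RMS}[\Delta\theta]_{t-1}}{\mathrm{RMS}[g]_t}\, g_t \tag{38}$$

$$\theta_{t+1} = \theta_t + \Delta\theta_t \tag{39}$$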
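The final rule can be sketched as follows. Note that the function takes no learning rate argument. The function name `adadelta`, the default values of `rho` and `eps`, and the toy objective J(θ) = θ² are assumptions made for illustration.

```python
import math


def adadelta(grad_fn, theta, rho=0.9, eps=1e-6, steps=500):
    """Adadelta: the step size is a ratio of two running RMS values."""
    avg_sq_grad = 0.0  # decaying average of squared gradients
    avg_sq_step = 0.0  # decaying average of squared parameter updates
    for _ in range(steps):
        g = grad_fn(theta)
        avg_sq_grad = rho * avg_sq_grad + (1.0 - rho) * g * g
        # RMS of previous updates divided by RMS of gradients replaces eta.
        step = -math.sqrt(avg_sq_step + eps) / math.sqrt(avg_sq_grad + eps) * g
        avg_sq_step = rho * avg_sq_step + (1.0 - rho) * step * step
        theta += step
    return theta


def grad(theta):
    return 2.0 * theta  # gradient of the toy objective J(theta) = theta**2


print(adadelta(grad, 1.0, steps=10))
```

The first updates are small because the running average of squared updates starts at zero, so the initial step size is governed by the smoothing term alone.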

i). Adam:

Adaptive moment estimation (Adam) is another method that computes an adaptive learning rate for each parameter [152]. Combining the ideas from momentum and Adadelta, it defines the decaying averages of past gradients and past squared gradients, $m_t$ and $v_t$, respectively, as follows:

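$$m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \tag{40}$$

$$v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \tag{41}$$

However, since $m_t$ and $v_t$ are initialized as zero vectors, they tend to be biased toward zero at the beginning of training. This can also happen when $\beta_1$ and $\beta_2$ are close to one. To avoid this problem, the following transformation is applied

$$\hat{m}_t = \frac{m_t}{1 - \beta_1^t} \tag{42}$$

$$\hat{v}_t = \frac{v_t}{1 - \beta_2^t} \tag{43}$$

Then, the update rule is as follows:

$$\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{\hat{v}_t} + \varepsilon}\, \hat{m}_t \tag{44}$$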

$\beta_1$, $\beta_2$, and $\varepsilon$ are recommended to be set as 0.9, 0.999, and $10^{-8}$, respectively.
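A minimal scalar sketch of Adam with bias correction is shown below. The function name `adam`, the toy objective J(θ) = θ², and the learning rate used in the usage line are illustrative assumptions, while the default values of `beta1`, `beta2`, and `eps` follow the recommendations above.

```python
import math


def adam(grad_fn, theta, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Adam: bias-corrected decaying averages of gradients and squared gradients."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1.0 - beta1) * g      # first moment estimate
        v = beta2 * v + (1.0 - beta2) * g * g  # second moment estimate
        m_hat = m / (1.0 - beta1 ** t)         # bias correction
        v_hat = v / (1.0 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


def grad(theta):
    return 2.0 * theta  # gradient of the toy objective J(theta) = theta**2


# After a single step the parameter has moved by approximately lr.
print(adam(grad, 1.0, lr=0.1, steps=1))
```

Without the bias correction, the first update would be much smaller than the learning rate, because both moment estimates start at zero; with it, the first step has a magnitude close to the learning rate.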

In summary, the classic optimizer is gradient descent; however, its computation involves the whole dataset. This is too time consuming, so stochastic gradient descent was proposed. It is very fast and needs only one sample to update the parameters, but the loss fluctuates significantly when using it. Therefore, mini-batch gradient descent was proposed. Still, two problems remained: 1) fluctuation around local minima and 2) a constant learning rate. To solve the fluctuation problem, the concept of momentum was proposed and then improved with the Nesterov accelerated gradient. Adaptive learning rate optimizers, such as Adagrad, have been proposed as well. RMSprop and Adadelta address Adagrad's problem of a vanishing learning rate. Finally, Adam combines concepts from momentum and Adadelta.

There is no single answer to the question of which optimizer is best; the choice relies heavily on the data and the problem. If the input data are sparse, the adaptive learning-rate methods are more likely to give the best results. Adaptive learning-rate optimizers, such as RMSprop, Adadelta, and Adam, are very similar and often do well in similar circumstances. Kingma and Ba [152] showed that bias correction helps Adam slightly outperform RMSprop toward the end of optimization as gradients become sparser. In general, both mini-batch SGD and Adam are widely used, perform well, and are reasonable first choices.

4). Overfitting:

During the training process, several problems can occur. The most common one is overfitting. Overfitting occurs when the model fits very well on training data, but it does not generalize and perform well on the validation data. Several approaches can be applied to reduce the likelihood of overfitting in training DL models.

Getting More Training Data: If the training dataset is small and the model is complex enough, the model may simply memorize the training data. After more data are added, however, the model is forced to learn the underlying pattern of the training dataset instead of memorizing it. This is usually effective, but in practice it is sometimes hard to obtain more data. Data augmentation, discussed in Section V, is also a strategy to reduce overfitting.

Regularization: In addition to enlarging the training dataset, adding a regularizer term to the loss function, such as the l1-norm or l2-norm of the parameters, can be useful. This term becomes larger as the number of nonzero parameters or their magnitudes increase. As a result, the loss becomes larger, which penalizes overly complex models. The regularizer term therefore prevents the model from being too complex and helps avoid the overfitting problem.
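As a minimal sketch of this idea, the penalty can be added to an already computed data loss as shown below. The function names `l1_regularized_loss` and `l2_regularized_loss` and the weighting factor `lam` are hypothetical and chosen for illustration only.

```python
def l1_regularized_loss(data_loss, weights, lam=0.01):
    """Data loss plus an l1 penalty: lam times the sum of absolute weights."""
    return data_loss + lam * sum(abs(w) for w in weights)


def l2_regularized_loss(data_loss, weights, lam=0.01):
    """Data loss plus an l2 penalty: lam times the sum of squared weights."""
    return data_loss + lam * sum(w * w for w in weights)


weights = [3.0, -4.0]
print(l1_regularized_loss(1.0, weights, lam=0.1))
print(l2_regularized_loss(1.0, weights, lam=0.1))
```

A larger `lam` puts more emphasis on keeping the weights small relative to fitting the training data, so it is usually tuned on the validation dataset.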

Early Stopping: Usually, if the training loss continues to decrease while the validation loss begins to increase, this is indicative of overfitting. Therefore, the training process should be stopped once the validation loss begins to increase. In this way, the generalization of the model can be maintained. Whether this method works or not depends on both the data and the model.
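One common way to implement this rule is to tolerate a fixed number of epochs without improvement before stopping. The sketch below assumes the validation losses are available as a list; the function name `early_stopping_epoch` and the `patience` parameter are illustrative assumptions rather than part of the original text.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch with the best validation loss seen
    before `patience` consecutive epochs pass without improvement."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training; keep the weights from best_epoch
    return best_epoch


# Hypothetical validation losses recorded after each epoch.
val_losses = [1.0, 0.8, 0.7, 0.75, 0.9, 0.6]
print(early_stopping_epoch(val_losses, patience=2))
```

A small patience value stops training sooner but may miss a later improvement, as the last entry of the hypothetical list illustrates.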

Adding Specific Layers: As described in Section II, the batch normalization layer and the dropout layer can help ease the overfitting problem. According to the batch normalization algorithm, the parameters μ and σ are computed with the batch data instead of the whole dataset. This introduces some uncertainty, which can be helpful in avoiding overfitting. A dropout layer can also deal with this problem. Since some of the neurons in each layer are randomly chosen to be ignored during training, the model effectively becomes simpler, which can help alleviate overfitting.

D. Network Testing and Deployment

Once the training of the model is finished, a test dataset is needed. The test dataset is designed to give an unbiased judgment of the final model for a certain task. In contrast to the validation dataset, the testing data must never affect the model's training or tuning, which means the model remains unchanged after testing. There are some key factors concerning the test dataset. First, there should not be any overlap among the training, validation, and test datasets. This means the cases used for training and validation should not appear in the test dataset; otherwise, the assessment of the model's generalization is biased. Additionally, test cases should cover not only a representative range of patient data, as included in the training or validation dataset, but also some edge cases that have not been seen by the model before but may exist in the real world. Where possible, the same type of input data from other sources (different institutions) or systems (different vendors) should be tested to evaluate the model's generalizability. Results from the test data can be used to estimate real-world model performance through indicators such as MSE and accuracy. These indicators show the performance of a model and provide a way to compare the performances of different models. Once the model meets the minimum requirements for the specific application, it can be deployed. The model parameters and structure remain unchanged. It is key that the data used for training, validation, and testing are representative of the data encountered during deployment.
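One way to enforce the no-overlap requirement, sketched here under the assumption that every image carries a patient identifier, is to split at the patient level rather than at the image level. The function name `split_by_patient`, the split fractions, and the identifier format are hypothetical.

```python
import random


def split_by_patient(patient_ids, fractions=(0.7, 0.15, 0.15), seed=0):
    """Assign each patient to exactly one of the train/validation/test subsets."""
    unique = sorted(set(patient_ids))     # one entry per patient
    random.Random(seed).shuffle(unique)   # reproducible shuffle
    n_train = round(fractions[0] * len(unique))
    n_val = round(fractions[1] * len(unique))
    train = set(unique[:n_train])
    val = set(unique[n_train:n_train + n_val])
    test = set(unique[n_train + n_val:])  # remaining patients
    return train, val, test


# Hypothetical dataset: 20 patients with three images each.
image_patient_ids = [f"patient_{i:02d}" for i in range(20)] * 3
train, val, test = split_by_patient(image_patient_ids)
print(len(train), len(val), len(test))
```

Splitting by patient keeps all images of one patient in the same subset, so the test result is not inflated by images that are nearly identical to training images.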

V. Robustness

Advanced AI technology will deliver huge improvements to the healthcare industry only if AI-related products meet ethical and legal requirements. According to the recent discussion paper Proposed Regulatory Framework for Modifications to AI/ML-Based Software as a Medical Device [153] presented by the FDA and the Code of Conduct for Data-Driven Health and Care Technology [154] presented by the National Health Service of the United Kingdom (NHS), transparency and accountability are always valued. Is the trained model robust enough for various clinical situations? How can we prove it? This is a new challenge beyond simple training and validation processes.

A. Data Selection

DL is also called a data-driven technology; thus, dataset selection is one of the key parts of the DL pipeline. As data preparation and data splitting have been introduced above, the discussion here concentrates on avoiding structural bias, which is not only an ethical concern but also related to performance after commissioning. A difference between the distribution of the training dataset and the distribution of the data encountered during deployment in the real world can lead to a performance drop. Even when the distributions are the same, subsampled datasets can cause instability. Additionally, imaging protocols can also be treated as a source of bias. The popular methods designed to diminish bias from datasets are as follows.

Larger dataset population.

Data augmentation.

Manual division of datasets.

Human investigation.

Dating back to the early days of learning theory, Valiant [155] established the fundamental framework called probably approximately correct (PAC) learning. It was shown statistically that a larger training dataset leads to a higher probability of lower error. Hence, extending the training dataset is generally a reliable way to improve accuracy for DL applications. However, huge amounts of well-annotated data, or even unlabeled medical data, may be too expensive or time consuming to acquire.

B. Data Augmentation

Given limited data samples, it is intuitive to think of amplifying them into a larger dataset. This method is called data augmentation. In the medical imaging field, images often maintain one or several spatial invariance properties, including shift, shear, zoom, reflection, and rotation (i.e., the spatial transformation does not change the diagnosis), so researchers may choose to implement those transformations manually to augment datasets. While some specific applications may violate some of these invariances, data augmentation can generally increase the dataset population as well as inject domain knowledge (spatial invariance properties) into DL models. According to a review of data augmentation presented by Shorten and Khoshgoftaar [156], those basic augmentation techniques can improve accuracy in traditional classification challenges. However, some techniques may not be suitable for medical images. Random cropping or image fusion could remove crucial diagnostic details. In medical image classification tasks, Hussain et al. [157] studied the influence of different augmentation techniques and showed that they improved task accuracy. It is also mentioned that adding noise (Gaussian noise and jittering) and Gaussian blurring are options to simulate perturbations and real-world artifacts encountered in imaging protocols.
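A few of the transformations mentioned above can be sketched without any imaging library by treating an image as a nested list of pixel intensities. The function names `flip_horizontal`, `rotate90`, and `add_gaussian_noise`, as well as the noise level, are illustrative assumptions; practical pipelines would typically rely on an array or imaging library instead.

```python
import random


def flip_horizontal(image):
    """Reflect the image left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def add_gaussian_noise(image, sigma=0.01, seed=0):
    """Add zero-mean Gaussian noise to every pixel."""
    rng = random.Random(seed)
    return [[pixel + rng.gauss(0.0, sigma) for pixel in row] for row in image]


image = [[1.0, 2.0],
         [3.0, 4.0]]  # toy 2 x 2 "image"

augmented = [image, flip_horizontal(image), rotate90(image), add_gaussian_noise(image)]
for sample in augmented:
    print(sample)
```

Whether a given transformation preserves the label depends on the application; for example, a left-right reflection may not be appropriate when laterality matters for the diagnosis.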

As previously described, dataset division concerns how to divide the collected data into three subsets for training, validation, and testing. If the validation loss is significantly higher than the training loss, we encounter overfitting, indicating that the model learns too much from the training dataset at the sacrifice of generalization. From the dataset division view, one possible reason is imbalanced division. Perhaps some details or features emerge only in the validation dataset. Alternatively, the populations of the categories may be biased, such that one category of data (one label in classification or one feature in synthesis) makes up most of the dataset and crowds out the other categories during training. In this case, data from minor categories should be amplified to restore the balance among categories. After training, the same thing could happen during testing. Balanced dataset division may reduce the training loss, but it can be an appropriate way to maintain generalization for model deployment.
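One simple way to amplify minor categories is random oversampling, in which samples from the smaller classes are duplicated until every class is as large as the largest one. This is only one possible technique and is not prescribed by the text above; the function name `oversample_minority` and the toy labels are hypothetical.

```python
import random
from collections import Counter


def oversample_minority(samples, labels, seed=0):
    """Duplicate randomly chosen minority-class samples until classes are balanced."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())  # size of the largest class
    out_samples, out_labels = list(samples), list(labels)
    for label, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == label]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))  # sample with replacement
            out_labels.append(label)
    return out_samples, out_labels


# Hypothetical imbalanced dataset: eight cases of class 0, two of class 1.
samples = [f"case_{i}" for i in range(10)]
labels = [0] * 8 + [1] * 2
balanced_samples, balanced_labels = oversample_minority(samples, labels)
print(Counter(balanced_labels))
```

In this sketch, the duplication is meant to be applied to the training subset only, so that duplicated cases do not leak into the validation or test subsets.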

C. Ensemble Learning Methods