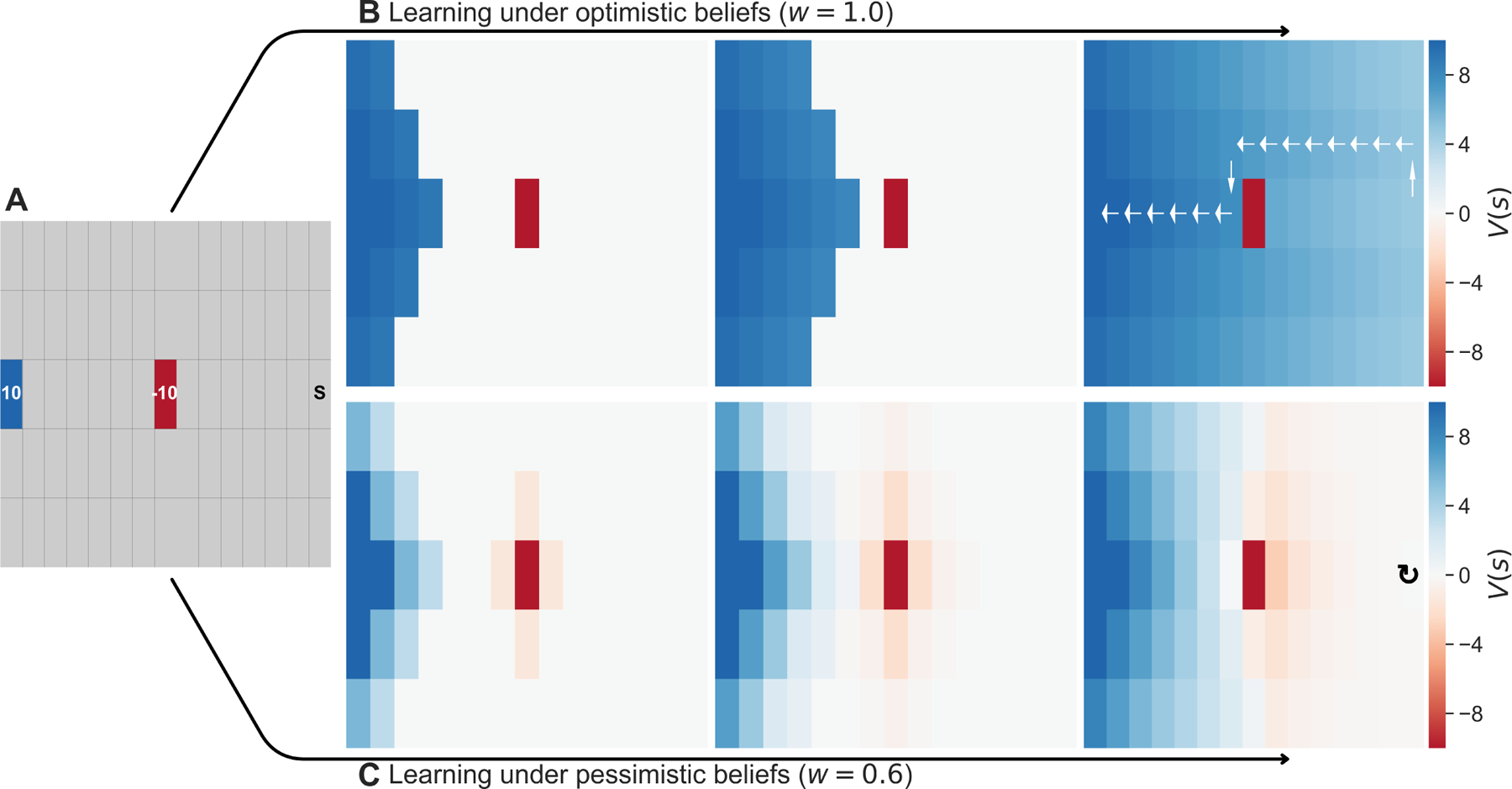

Figure 4:

(A) A simple deterministic gridworld with two terminal states: one rewarding (blue) and one aversive (red). (B, C) The development of value expectancies over three steps of learning for two levels of pessimism, w. States are colored by their learned value, and arrows show an optimal trajectory. (B) For an optimistic agent (w = 1), every state other than the aversive one takes on positive value with learning. (C) For a pessimistic agent (w = 0.6), negative value spreads from the aversive state to the states that precede it. Because the agent avoids these states, it learns that reward is unobtainable and develops anergic symptoms (i.e., it forgoes action). (Parameters: γ = 0.95.)
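The caption does not state the update rule, so the sketch below is only an illustration of how pessimism can make negative value spread. It assumes a backup in which each next state is valued by a w-weighted mix of its best and worst action values, U(s) = w·max_a Q(s, a) + (1 − w)·min_a Q(s, a), with Q(s, a) = r(s') + γ·U(s') and U = 0 at terminals, so that w = 1 reduces to ordinary value iteration. The layout strings, the ±1 terminal rewards, the rule that walking off the edge leaves the agent in place, and the function name `pessimistic_values` are all hypothetical choices, not details from the figure.

```python
import numpy as np

# Hypothetical encoding: '.' = ordinary state, 'B' = rewarding terminal (+1),
# 'R' = aversive terminal (-1). Symbols and reward sizes are assumptions.
REWARDS = {"B": 1.0, "R": -1.0}
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def pessimistic_values(layout, w, gamma=0.95, n_sweeps=500):
    """Synchronous value iteration with an ASSUMED pessimistic backup.

    Q(s, a) = r(s') + gamma * U(s')  (U = 0 at terminals)
    U(s)    = w * max_a Q(s, a) + (1 - w) * min_a Q(s, a)
    Returns U for every cell, with NaN marking terminal cells.
    """
    rows, cols = len(layout), len(layout[0])
    U = np.zeros((rows, cols))
    for _ in range(n_sweeps):
        new_U = np.zeros_like(U)
        for r in range(rows):
            for c in range(cols):
                if layout[r][c] in REWARDS:
                    continue  # terminal cells carry no future value
                q = []
                for dr, dc in MOVES:
                    nr, nc = r + dr, c + dc
                    if not (0 <= nr < rows and 0 <= nc < cols):
                        nr, nc = r, c  # assumed: hitting the edge leaves the agent in place
                    cell = layout[nr][nc]
                    if cell in REWARDS:
                        q.append(REWARDS[cell])  # entering a terminal ends the episode
                    else:
                        q.append(gamma * U[nr, nc])  # no reward for ordinary moves
                # Mix the best and worst action values with pessimism weight w.
                new_U[r, c] = w * max(q) + (1 - w) * min(q)
        U = new_U  # synchronous update: every cell used last sweep's values
    out = U.copy()
    for r in range(rows):
        for c in range(cols):
            if layout[r][c] in REWARDS:
                out[r, c] = np.nan
    return out


if __name__ == "__main__":
    corridor = ["R...B"]  # hypothetical one-row gridworld
    print(np.round(pessimistic_values(corridor, w=1.0), 3))
    # With w = 0.6 the state next to R converges to a negative value.
    print(np.round(pessimistic_values(corridor, w=0.6), 3))
```

Passing `n_sweeps=3` gives a rough analogue of the three learning steps in panels B and C, while the default runs close to convergence. Because max and min are both non-expansions, the assumed backup remains a γ-contraction for any w in [0, 1], so the sweeps settle on a unique fixed point.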