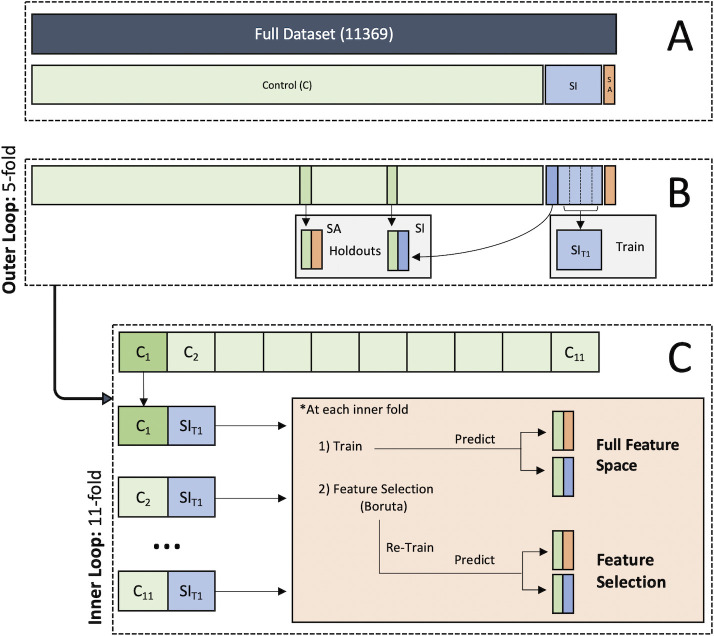

Fig 1. Nested cross-fold validation data partitioning scheme.

A. First, data were partitioned by class label. B. Next, the outer loop split the suicidal ideation (SI) class into five folds, setting aside one fold and a randomly subsampled set of controls as a holdout test set for each iteration. The group of individuals endorsing concomitant suicidal ideation and attempt (SA), along with another randomly subsampled set of controls, was also set aside as a holdout test set. C. The inner loop then divided the remaining control sample into 11 folds (NControl/NSI), each of which was combined with the SI training set to create a class-balanced dataset for training. These balanced training sets circumvent common class-imbalance issues in machine learning, while also mitigating the sampling bias induced by techniques such as down-sampling. The reported performance for each model therefore spanned all 55 combinations of outer folds (5) and inner folds (11).
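The partitioning scheme in panels A to C can be sketched in code. The following is an illustrative reconstruction under stated assumptions, not the authors' implementation: the function name `nested_partitions`, the dictionary keys, the size of each subsampled control test set (matched here to the size of the SI or SA test group), and the toy sample sizes are all hypothetical choices made so that the example yields 5 outer and 11 inner folds.

```python
import numpy as np

def nested_partitions(si_idx, sa_idx, control_idx, n_outer=5, seed=0):
    """Hypothetical sketch of the nested partitioning described in Fig 1."""
    rng = np.random.default_rng(seed)
    # B. Outer loop: split the SI class into n_outer folds.
    outer_folds = np.array_split(rng.permutation(si_idx), n_outer)
    splits = []
    for k, si_test in enumerate(outer_folds):
        si_train = np.concatenate(
            [fold for j, fold in enumerate(outer_folds) if j != k]
        )
        # Randomly subsample controls for the two holdout test sets.
        # Assumption: each control subsample matches its case group in size.
        controls = rng.permutation(control_idx)
        n_a, n_b = len(si_test), len(sa_idx)
        ctrl_for_si_test = controls[:n_a]
        ctrl_for_sa_test = controls[n_a:n_a + n_b]
        remaining = controls[n_a + n_b:]
        # C. Inner loop: divide the remaining controls into folds of roughly
        # the SI training-set size, giving class-balanced training sets.
        n_inner = max(1, len(remaining) // len(si_train))
        for m, ctrl_train in enumerate(np.array_split(remaining, n_inner)):
            splits.append({
                "outer": k,
                "inner": m,
                "train_si": si_train,
                "train_control": ctrl_train,
                "test_si": (si_test, ctrl_for_si_test),
                "test_sa": (sa_idx, ctrl_for_sa_test),
            })
    return splits

# Toy index sets (made-up sizes, chosen so the inner loop has 11 folds).
si = np.arange(0, 100)            # 100 SI participants
sa = np.arange(100, 120)          # 20 SA participants
controls = np.arange(120, 1040)   # 920 controls
splits = nested_partitions(si, sa, controls)
print(len(splits))                # 55 = 5 outer folds x 11 inner folds
```

Every control in the remaining pool is used in exactly one inner fold, which is how this design avoids discarding controls the way a single down-sampling step would.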