Abstract

Machine learning methods are widely used in autism spectrum disorder (ASD) diagnosis. Due to the lack of labelled ASD data, multisite data are often pooled together to expand the sample size. However, the heterogeneity that exists among different sites degrades the performance of machine learning models. Herein, three-way decision theory is introduced into unsupervised domain adaptation for the first time and applied to optimize the pseudolabels of the target domain/site from functional magnetic resonance imaging (fMRI) features related to ASD patients. The experimental results using multisite fMRI data show that our method not only narrows the gap of the sample distribution among domains but is also superior to the state-of-the-art domain adaptation methods in ASD recognition. Specifically, the proposed method improves the ASD recognition accuracy on all six tasks compared with the existing methods, reaching 70.80%, 75.41%, 69.91%, 72.13%, 71.01% and 68.85%, respectively.

Keywords: autism spectrum disorder, machine learning, three-way decision, domain adaptation

1. Introduction

Autism spectrum disorder (ASD) is a common neurodevelopmental disease originating in infancy [1,2,3,4,5,6]. According to a recent study, one in 45 children in the world has autism, and the number of affected children has increased by 78% in the last decade [7]. Some symptoms of ASD even appear in young children by the age of two years [8]. Therefore, the early diagnosis of and intervention in ASD have received great attention in recent years [9,10]. Researchers have applied machine learning methods to identify biomarkers from resting-state functional magnetic resonance imaging (rs-fMRI) data to assist in diagnosing ASD [11,12,13].

Machine learning methods have demonstrated their effectiveness under the assumption that sufficient training data and test data are drawn from the same distribution [14,15]. However, this assumption is rarely satisfied in practical applications, so a model trained on one dataset often generalizes poorly when applied to a new dataset. First, clinical neuroimaging datasets are often small because acquisition is expensive and labelling is time-consuming. Therefore, multisite rs-fMRI data are often combined to expand the dataset in some research, such as ASD diagnosis, which leads to the second problem: samples from different scanners or acquisition protocols do not follow the same distribution in most cases [16,17].

The fMRI samples from different sites have also been named domains in the machine learning research community. In addition to the distribution difference of the training set (source domain) and the test set (target domain), the scarcity of labelled samples is another challenge to ASD recognition. Previous studies have investigated domain adaptation approaches to overcome site-to-site transfer [18]. Many studies have successfully applied domain adaptation to object recognition [19], activity recognition [20], speech recognition [21], text classification [22] and autism recognition [7]. The main goal of domain adaptation is to reduce the difference in the data distribution between the source domain and the target domain and then train a robust classifier for the target domain by reusing the labelled data in the source domain.

At present, the research on domain adaptation mainly focuses on three methods, namely, instance adaptation, feature adaptation and classifier adaptation. Specifically, the instance-based domain adaptation method reuses samples from the source domain according to a certain weighting rule. Instance adaptation has achieved good results by eliminating cross domain differences [23]. However, this method needs to satisfy two strict assumptions: (1) the source domain and the target domain follow the same conditional distribution, and (2) some data in the source domain can be reused in the target domain by reweighting. The classifier-based domain adaptation method transfers knowledge from the source domain to the target domain by sharing parameters between the source domain and target domain [24,25]. Classifier transfer has performed well with labelled samples. However, regarding ASD diagnosis from fMRI, the data distributions of different sites are different, and reliable labelled data are difficult to obtain.

Therefore, applying instance-based or classifier-based domain adaptation is relatively difficult. In contrast, the feature-based domain adaptation method can learn the subspace geometrical structure [26,27,28,29] or perform distribution alignment [30,31,32]. This method aligns the marginal or conditional distributions of different domains in a principled dimensionality reduction procedure. Our work employs the feature-based domain adaptation method to eliminate the divergence of the data distribution.

Currently, most feature-based domain adaptation research is devoted to the adaptation of the marginal distribution, conditional distribution, or both. For example, Long et al. [26] found that the marginal distribution and conditional distribution between domains are different in the real world, and better performance can be achieved if the two distributions are adapted simultaneously. Subsequently, some studies based on joint distribution adaptation have been proposed successively [33,34,35], and these works have greatly contributed to the development of domain adaptation.

It is worth noting that in order to obtain the pseudolabels of the target domain data, the traditional methods usually directly apply the classifier trained in the source domain to the prediction of the target domain data. However, these pseudolabels may contain errors due to the possible domain mismatch. Here, we propose a robust method using a three-way decision model derived from triangular fuzzy similarity. The proposed model roughly classifies the samples in the target domain into three regions, i.e., the positive region, the negative region and the boundary region. Then, the label propagation algorithm is used to optimize the labels and make secondary decisions on the boundary region samples. The experiments demonstrate that our method can effectively improve the classification performance for automated ASD diagnosis.

The contributions of this paper are as follows:

A three-way decision model based on triangular fuzzy similarity is proposed to reduce the cost loss of target domain data prediction. To the best of the authors' knowledge, this is the first work to combine the three-way decision model and the distribution adaptation method to reduce the distribution differences between domains. The proposed method extends the application of machine learning in the field of decision making.

Our method utilizes the label information from the source domain and the structural information from the target domain at the same time, which not only reduces the distribution differences between domains but also further improves the recognition ability of the target domain data.

Comprehensive experiments on the Autism Brain Imaging Data Exchange (ABIDE) dataset prove that our method is better than several state-of-the-art methods.

The remainder of this paper is organized as follows. Section 2 reviews the related work concisely. In Section 3, we elucidate the foundation of the proposed method. Our proposed method is illustrated in detail in Section 4. Then, the results and discussion are presented in Section 5 and Section 6, respectively. Finally, the paper is concluded in Section 7.

2. Related Work

It has been a lasting challenge to build maps between different domains in the field of machine learning. Domain adaptation has become a hot research topic in disease diagnosis with machine learning. In this paper, we proposed transfer learning based on distribution adaptation and three-way decisions. To elaborate the proposed method, we will introduce the related work from the following three aspects in this section.

2.1. Distribution Adaptation

Distribution adaptation is one of the most commonly used methods in domain adaptation. It seeks a feature space transformation and eliminates data distribution differences between the source and target domains by explicitly minimizing a predefined distance in this feature space. According to the nature of the data distribution, distribution adaptation can be divided into three categories: marginal distribution adaptation, conditional distribution adaptation and joint distribution adaptation.

Pan et al. [36] first proposed a transfer component analysis (TCA) method based on marginal distribution adaptation, which used the maximum mean discrepancy (MMD) to measure the distance between domains and achieve feature dimensionality reduction. The method assumes that there is a mapping so that the marginal distribution of the mapped source domain and target domain is similar in the new space. The disadvantage of TCA is that the algorithm only focuses on reducing the cross-domain marginal distribution difference without considering reducing the conditional distribution difference. Long et al. [37] proposed a transfer joint matching (TJM) method, which mainly combines source domain sample selection and distribution adaptation to further eliminate cross domain distribution differences.

Recently, in the work based on conditional distribution adaptation, Wang et al. [38] proposed a stratified transfer learning method (STL). Its main idea is to reduce the spatial dimension in the reproducing kernel Hilbert space (RKHS) by using the intraclass similarity so as to eliminate the distribution differences. However, in the real world, differences may exist in both the marginal distributions and the conditional distributions. Adjusting only one of the distributions is insufficient to bridge domain differences. In order to solve this problem, Long et al. [26] proposed the joint distribution adaptation (JDA) method. The goal of JDA is to jointly adjust the marginal distribution and the conditional distribution using a principled dimensionality reduction process, and the representation in this common feature space reduces the domain differences significantly. Other work extended JDA by adding structural consistency [29], domain invariant clustering [30] and label propagation [31].

To provide supervised information for the target domain, JDA methods applied source domain classifiers in the target domain and took the classifier outputs as the pseudolabels of the target domain data. However, due to the different data distributions of domains, the direct use of these inaccurate pseudolabels will result in the degradation of the final model’s performance.

Considering the domain gap in both labels and samples, three-way decisions provide a novel way to transmit the label information between domains and reuse the intrinsic structural information of the target domain data to further improve the performance of the model in the domain adaptation process.

2.2. Three-Way Decisions

As an effective extension of traditional rough sets, three-way decision [39] (3WD) theory has been widely applied to address uncertain, inaccurate and fuzzy problems, such as medical diagnosis [40], image processing [41], emotion analysis [42], etc. In simple terms, 3WD divides the universe of discourse into three disjoint parts, i.e., the positive region (Pos), the negative region (Neg), and the boundary region (Bnd), through a pair of upper and lower approximations. Acceptance and rejection decisions are made for the objects in Pos and Neg, respectively, whereas the decision for the objects in Bnd is delayed.

Strictly speaking, the current 3WD research can be divided according to whether it is based on decision-theoretic rough sets (DTRSs) [43]. For example, Zhang et al. [44] proposed a 3WD model for interval-valued DTRSs and gave a new decision cost function. Liu et al. [45] introduced intuitionistic fuzzy language DTRSs and 3WD models to obtain fuzzy information in uncertain languages. Agbodah [46] focused on the study of the DTRS loss function aggregation method in group decision making and utilized it to construct a 3WD model.

In addition, scholars have also conducted in-depth explorations on 3WD outside the DTRS framework. For example, Liang et al. [47] integrated the risk preference of decision makers into the decision-making process and proposed a 3WD model based on the TODIM (an acronym in Portuguese for interactive multicriteria decision making) method. Qian et al. [48] investigated three-way formal concept lattices of objects (properties) based on 3WD. Yang et al. [49] presented a 3WD model oriented to multigranularity space to adapt 3WD to intuitionistic fuzzy decisions.

From a broad perspective, 3WD can be classified as static or dynamic. Static 3WD includes related research based on the DTRS framework and fusion of other theories. Dynamic 3WD mainly addresses the problem of constantly changing data in time series and space, and its typical representative is the sequential 3WD model [50]. For example, Yang et al. [51] proposed a three-way calculation method for dynamic mixed data based on time and space. Zhang et al. [52] systematically investigated a new sequential 3WD model to balance autoencoder classification and reduce its misclassification cost. Liu et al. [53] combined 3WD and granular computing to construct a dynamic three-way recommendation model to reduce decision-making costs.

3WD theory has been widely used in many areas, such as emerging three-way formal concept analysis [54], three-way conflict analysis [55], three-way granular computing [56], three-way classification [57], three-way recommendation [58], and three-way clustering [59]. This paper will combine the idea of 3WD to improve the performance of heterogeneous ASD data diagnosis by reducing the difference in the data distributions between the source domain and target domain.

2.3. Application of Machine Learning in Identification of ASD Patients

In recent years, magnetic resonance imaging (MRI) has been widely used in clinical practice [60,61]. The commonly used MRI can be divided into structural MRI (sMRI) and functional MRI (fMRI). As fMRI can measure the hemodynamic changes caused by the activity of brain neurons, it has been widely used in the research of brain dysfunction diseases. For example, Li et al. [62] proposed a 4D deep learning model for ASD recognition that can utilize both temporal and spatial information of fMRI data. In the work of Riaz et al. [63], they proposed an end-to-end deep learning method called DeepfMRI for accurately identifying patients with Attention Deficit Hyperactivity Disorder (ADHD) and achieved an accuracy rate of 73.1% on open datasets. To study the relationship between mild cognitive impairment (MCI) and Small Vessel Disease (SVD), Diciotti et al. [64] applied the Stroop test to the rs-fMRI data of 67 MCI subjects and found that regional homogeneity of rs-fMRI is significantly correlated with measurements of the cognitive deficits.

As ASD is a neurodevelopmental disorder, its early diagnosis is very important to improve the quality of life of patients. In recent years, researchers have attempted to extract biomarkers representing ASD from fMRI data using machine learning methods, so as to provide an auxiliary diagnosis for clinicians. For example, Lu et al. [65] proposed a multi-kernel-based subspace clustering algorithm for identifying ASD patients, which still has a good clustering effect on high-dimensional network datasets. Leming et al. [66] trained a convolutional neural network and applied it to ASD recognition, and their experiments showed that deep learning models that distinguish ASD patients from normal controls focus broadly on temporal and cerebellar connections. However, the small size of the fMRI datasets limited the generalization of the above research works [67].

To solve this problem, the Autism Brain Imaging Data Exchange, an international collaborative project, has collected data from over 1000 subjects and made the whole database publicly available. Based on the ABIDE database, many advanced machine learning models have been proposed for the identification of ASD patients. For example, Eslami et al. [68] used autoencoder and single-layer perceptron to diagnose ASD and proposed a deep learning framework called ASD-DiagNet, which achieved classification accuracy of 70.3%. Bi et al. [69] used randomized support vector machine (SVM) clusters to distinguish ASD patients from normal controls and identified a number of abnormal brain regions that contribute to ASD. Mladen et al. [70] selected 368 ASD patients and 449 normal controls from ABIDE database, and then used the Fisher score as the feature selection method to quantitatively analyze 817 subjects and obtained classification accuracy of 85.06%.

3. Preliminaries

We start with the definition of the problem and the terms and introduce the notation we will use below. The source domain data, denoted as $X_s \in \mathbb{R}^{d \times n_s}$, are drawn from distribution $P(X_s)$, and the target domain data, denoted as $X_t \in \mathbb{R}^{d \times n_t}$, are drawn from distribution $P(X_t)$, where $d$ is the dimension of the data instance and $n_s$ and $n_t$ are the number of samples in the source and target domains, respectively.

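Assume a labelled source domain $\mathcal{D}_s = \{(x_i, y_i)\}_{i=1}^{n_s}$ and an unlabelled target domain $\mathcal{D}_t = \{x_j\}_{j=n_s+1}^{n_s+n_t}$. We assume that their feature space and label space are the same, i.e., $\mathcal{X}_s = \mathcal{X}_t$ and $\mathcal{Y}_s = \mathcal{Y}_t$, but their marginal distribution and conditional distribution are different, i.e., $P(X_s) \neq P(X_t)$ and $P(Y_s \mid X_s) \neq P(Y_t \mid X_t)$.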

Domain adaptation methods often seek to reduce the distribution differences across domains by explicitly adapting both the marginal and conditional distributions between domains. To be specific, domain adaptation seeks to minimize the distance (Equation (1)):

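$$D(\mathcal{D}_s, \mathcal{D}_t) \approx D\big(P(X_s), P(X_t)\big) + D\big(P(Y_s \mid X_s), P(Y_t \mid X_t)\big) \tag{1}$$

where $D(P(X_s), P(X_t))$ and $D(P(Y_s \mid X_s), P(Y_t \mid X_t))$ are the marginal distribution distance and conditional distribution distance between domains, respectively.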

There are many metrics that can be used to estimate the distance between distributions, such as the Kullback–Leibler (KL) divergence. However, most of these distance metrics are based on parameters, and it is difficult to calculate the distance. Therefore, Borgwardt et al. [71] proposed a nonparametric distance metric MMD using a kernel learning method to measure the distance between two distributions in RKHS. The definition of the MMD is as follows:

Definition 1.

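Given two random variables $X_s$ and $X_t$, their MMD squared distance is calculated as follows (Equation (2)):

$$\mathrm{MMD}^2(X_s, X_t) = \left\| \frac{1}{n_s} \sum_{i=1}^{n_s} \phi(x_i) - \frac{1}{n_t} \sum_{j=n_s+1}^{n_s+n_t} \phi(x_j) \right\|_{\mathcal{H}}^2 \tag{2}$$

where $\mathcal{H}$ is a universal RKHS [72], and $\phi: \mathcal{X} \rightarrow \mathcal{H}$.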
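As a minimal illustration of Definition 1, the sketch below estimates the squared MMD in the simplest case, where the feature map is assumed to be the identity (a linear kernel), so the distance reduces to the squared gap between the two sample means. The function name `mmd2_linear` and the synthetic data are hypothetical and only serve the example.

```python
import numpy as np

def mmd2_linear(Xs: np.ndarray, Xt: np.ndarray) -> float:
    """Squared MMD under the identity feature map (linear kernel).

    Xs has shape (d, n_s) and Xt has shape (d, n_t); columns are samples.
    """
    diff = Xs.mean(axis=1) - Xt.mean(axis=1)  # gap between sample means
    return float(diff @ diff)

# Synthetic data (an assumption for illustration, not ABIDE features).
rng = np.random.default_rng(0)
Xs = rng.normal(0.0, 1.0, size=(5, 200))
Xt_same = rng.normal(0.0, 1.0, size=(5, 200))   # same distribution as Xs
Xt_shift = rng.normal(1.0, 1.0, size=(5, 200))  # mean-shifted distribution

print(mmd2_linear(Xs, Xt_same), mmd2_linear(Xs, Xt_shift))
```

With a nonlinear kernel, the same quantity would be computed from kernel evaluations rather than explicit means; the linear case is used here only because it makes the definition easy to check.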

Next, we introduce the concepts of triangular fuzzy numbers and three-way decisions.

Definition 2.

[73]. Let be a triangular fuzzy number, where and denote the upper bound and lower bound of , respectively, and is the median of . If is satisfied, then is called a normal triangular fuzzy number. For any two triangular fuzzy numbers and , the distance between them is as follows (Equation (3)):

(3)

In addition, the basic operations between and are as follows (Equation (4)):

| (4) |

Definition 3.

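[74]. Let $U$ be the universe of discourse and $X \subseteq U$. If thresholds $0 \le \beta < \alpha \le 1$ exist, then the positive region, negative region and boundary region of $X$ are defined with thresholds $(\alpha, \beta)$ (Equation (5)):

$$\begin{aligned} \mathrm{POS}_{(\alpha,\beta)}(X) &= \{x \in U \mid P(X \mid [x]) \ge \alpha\}, \\ \mathrm{NEG}_{(\alpha,\beta)}(X) &= \{x \in U \mid P(X \mid [x]) \le \beta\}, \\ \mathrm{BND}_{(\alpha,\beta)}(X) &= \{x \in U \mid \beta < P(X \mid [x]) < \alpha\} \end{aligned} \tag{5}$$

where $[x]$ is the equivalence class containing $x$, and $P(X \mid [x])$ is the conditional probability.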
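The partition in Definition 3 can be sketched in a few lines, assuming the usual three-way rule: an object is accepted when its conditional probability is at least the upper threshold, rejected when it is at most the lower threshold, and deferred otherwise. The function name, the object identifiers and the probability values are hypothetical.

```python
from typing import Dict, List, Tuple

def three_way_regions(
    prob: Dict[str, float], alpha: float, beta: float
) -> Tuple[List[str], List[str], List[str]]:
    """Split objects into positive, negative and boundary regions.

    prob maps each object to its conditional probability of belonging
    to the target concept; alpha and beta are the two thresholds.
    """
    if not 0.0 <= beta < alpha <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= beta < alpha <= 1")
    pos = [x for x, p in prob.items() if p >= alpha]        # accept
    neg = [x for x, p in prob.items() if p <= beta]         # reject
    bnd = [x for x, p in prob.items() if beta < p < alpha]  # defer
    return pos, neg, bnd

# Hypothetical probabilities for four objects.
prob = {"x1": 0.9, "x2": 0.5, "x3": 0.1, "x4": 0.7}
pos, neg, bnd = three_way_regions(prob, alpha=0.7, beta=0.3)
print(pos, neg, bnd)
```

The three lists are disjoint and together cover every object, which is the property the later sections rely on when only the boundary objects are passed to a second decision step.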

4. Methods

4.1. Joint Distribution Adaptation

Domain adaptation seeks an invariant feature expression for the source domain and the target domain in a low-dimensional ($k < d$) space. Let $A \in \mathbb{R}^{d \times k}$ be the linear transformation matrix and $Z_s = A^\top X_s$ and $Z_t = A^\top X_t$ be the projected variables from the source and target data, respectively. We use the nonparametric metric MMD, which computes the distance between the sample means of the source and target data in the $k$-dimensional embeddings, to estimate the difference between distributions. Specifically, according to Equation (2), $D(P(X_s), P(X_t))$ can be expressed as (Equation (6)):

$$D\big(P(X_s), P(X_t)\big) = \left\| \frac{1}{n_s} \sum_{i=1}^{n_s} A^\top x_i - \frac{1}{n_t} \sum_{j=n_s+1}^{n_s+n_t} A^\top x_j \right\|^2 \tag{6}$$

By further using the matrix transformation rule and regularization and then minimizing the marginal distribution distance, Equation (6) can be formalized as follows (Equation (7)):

$$\min_{A} \ \operatorname{tr}\left(A^\top X M_0 X^\top A\right) + \lambda \|A\|_F^2 \tag{7}$$

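where $X = [X_s, X_t]$ represents the input matrix containing $X_s$ and $X_t$. In addition, following [26], $M_0$ is the MMD matrix and can be constructed as follows (Equation (8)):

$$(M_0)_{ij} = \begin{cases} \dfrac{1}{n_s^2}, & x_i, x_j \in \mathcal{D}_s \\[2mm] \dfrac{1}{n_t^2}, & x_i, x_j \in \mathcal{D}_t \\[2mm] -\dfrac{1}{n_s n_t}, & \text{otherwise} \end{cases} \tag{8}$$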
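The role of the MMD matrix can be checked numerically. The sketch below assumes the standard construction from [26], in which an entry is $1/n_s^2$ for two source samples, $1/n_t^2$ for two target samples and $-1/(n_s n_t)$ for a mixed pair, and verifies that the trace form equals the squared distance between the projected sample means. The function name, the random projection and the synthetic data are hypothetical.

```python
import numpy as np

def marginal_mmd_matrix(n_s: int, n_t: int) -> np.ndarray:
    """MMD matrix for the marginal distribution, built as an outer product."""
    e = np.concatenate([np.full(n_s, 1.0 / n_s), np.full(n_t, -1.0 / n_t)])
    return np.outer(e, e)

rng = np.random.default_rng(0)
d, k, n_s, n_t = 6, 2, 8, 5
Xs = rng.normal(size=(d, n_s))           # synthetic source samples (columns)
Xt = rng.normal(loc=0.5, size=(d, n_t))  # synthetic target samples (columns)
X = np.hstack([Xs, Xt])
A = rng.normal(size=(d, k))              # arbitrary projection, for the check only

M0 = marginal_mmd_matrix(n_s, n_t)
trace_form = np.trace(A.T @ X @ M0 @ X.T @ A)

# Direct form: squared distance between the projected sample means.
mean_gap = A.T @ Xs.mean(axis=1) - A.T @ Xt.mean(axis=1)
direct_form = float(mean_gap @ mean_gap)

print(np.isclose(trace_form, direct_form))
```

Writing the matrix as an outer product makes it clear that it is positive semidefinite and that each of its rows sums to zero.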

However, the label information of the domain data is not considered, so the adapted features lack sufficient discriminability; therefore, it is insufficient to adapt the marginal distribution only. To solve this problem, we next adjust the conditional distribution between domains.

Since no label information is available in the target domain, we cannot directly estimate the conditional distribution of the target domain. Here, based on the concept of sufficient statistics, we can replace $P(Y_s \mid X_s)$ and $P(Y_t \mid X_t)$ with the class conditional distributions $P(X_s \mid Y_s)$ and $P(X_t \mid Y_t)$, respectively. However, obtaining target domain label information through source domain data while reducing the distribution difference between domains is a challenging problem in unsupervised domain adaptation. In Section 4.2, we introduce how to obtain the label information of the target domain data so as to obtain the above class conditional distribution. With this label information, we can match the class conditional distributions of the two domains. Similar to the calculation of the marginal distribution, we use the modified MMD formula to estimate the conditional distribution distance between domains. $D(P(Y_s \mid X_s), P(Y_t \mid X_t))$ can be represented as (Equation (9)):

$$D\big(P(Y_s \mid X_s), P(Y_t \mid X_t)\big) = \sum_{c=1}^{C} \left\| \frac{1}{n_s^{(c)}} \sum_{x_i \in X_s^{(c)}} A^\top x_i - \frac{1}{n_t^{(c)}} \sum_{x_j \in X_t^{(c)}} A^\top x_j \right\|^2 \tag{9}$$

where $c \in \{1, \ldots, C\}$ is the class label, and $X_s^{(c)}$ and $X_t^{(c)}$ are samples belonging to class $c$ in the source domain and target domain, respectively. $n_s^{(c)}$ and $n_t^{(c)}$ are the number of samples belonging to class $c$ in the source domain and target domain, respectively.

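Similar to the marginal distribution, we formalize Equation (9) as Equation (10) by using matrix transformation rules and regularization:

$$\min_{A} \ \sum_{c=1}^{C} \operatorname{tr}\left(A^\top X M_c X^\top A\right) + \lambda \|A\|_F^2 \tag{10}$$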

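where the MMD matrices $M_c$ containing class labels are constructed as follows (Equation (11)):

$$(M_c)_{ij} = \begin{cases} \dfrac{1}{\big(n_s^{(c)}\big)^2}, & x_i, x_j \in X_s^{(c)} \\[2mm] \dfrac{1}{\big(n_t^{(c)}\big)^2}, & x_i, x_j \in X_t^{(c)} \\[2mm] -\dfrac{1}{n_s^{(c)} n_t^{(c)}}, & x_i \in X_s^{(c)}, x_j \in X_t^{(c)} \ \text{or} \ x_i \in X_t^{(c)}, x_j \in X_s^{(c)} \\[2mm] 0, & \text{otherwise} \end{cases} \tag{11}$$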
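A class-wise MMD matrix can be sketched in the same way as the marginal one, assuming the construction used in [26]: only samples of class c receive nonzero weights, with source samples weighted by the inverse class count in the source domain and target samples by the negative inverse class count under the current pseudolabels. The function name and the toy labels are hypothetical.

```python
import numpy as np

def class_mmd_matrix(y_s: np.ndarray, y_t_pseudo: np.ndarray, c: int) -> np.ndarray:
    """MMD matrix restricted to class c (target labels are pseudolabels)."""
    y_s = np.asarray(y_s)
    y_t_pseudo = np.asarray(y_t_pseudo)
    n_s = len(y_s)
    e = np.zeros(n_s + len(y_t_pseudo))
    src = np.flatnonzero(y_s == c)         # source samples of class c
    tgt = np.flatnonzero(y_t_pseudo == c)  # target samples pseudolabelled c
    if src.size:
        e[src] = 1.0 / src.size
    if tgt.size:
        e[n_s + tgt] = -1.0 / tgt.size
    return np.outer(e, e)

# Toy labels: five source samples and three pseudolabelled target samples.
y_s = np.array([0, 0, 1, 1, 1])
y_t_pseudo = np.array([0, 1, 1])
M1 = class_mmd_matrix(y_s, y_t_pseudo, c=1)
print(M1.shape)
```

Because the target entries depend on pseudolabels, this matrix has to be rebuilt whenever the pseudolabels are updated.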

In order to reduce both the marginal distribution and conditional distribution differences between domains, we incorporate Equations (7) and (10) into one objective function (Equation (12)):

$$\min_{A} \ \sum_{c=0}^{C} \operatorname{tr}\left(A^\top X M_c X^\top A\right) + \lambda \|A\|_F^2 \quad \text{s.t.} \quad A^\top X H X^\top A = I \tag{12}$$

where the first term considers both the adaptive marginal distribution and conditional distribution, and the second term is the regularization term. $\|\cdot\|_F$ is the Frobenius norm, and $\lambda$ is the regularization parameter. As noted in [29], adding the constraint $A^\top X H X^\top A = I$ in Function (12) preserves the inner properties of the original data, which introduces an additional data discrimination ability into the learned model. In addition, in Function (12), $X = [X_s, X_t]$ represents the input matrix containing $X_s$ and $X_t$; $I$ denotes the identity matrix; and $H = I - \frac{1}{n}\mathbf{1}$ is the centering matrix, where $n = n_s + n_t$ and $\mathbf{1}$ is the $n \times n$ matrix of ones.

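To obtain the transformation matrix $A$, we form the Lagrangian of Function (12), which is written as (Equation (13)):

$$L = \operatorname{tr}\left(A^\top \left(X \sum_{c=0}^{C} M_c X^\top + \lambda I\right) A\right) + \operatorname{tr}\left(\left(I - A^\top X H X^\top A\right) \Phi\right) \tag{13}$$

where $\Phi$ is the Lagrange multiplier. Setting $\partial L / \partial A = 0$, the original optimization problem is transformed into the following eigen-decomposition problem (Equation (14)):

$$\left(X \sum_{c=0}^{C} M_c X^\top + \lambda I\right) A = X H X^\top A \Phi \tag{14}$$

The transformation matrix $A$ is the solution to Equation (14) and thus builds the bridge between the source and target domains in the new expression $Z = A^\top X$.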
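One way to solve this kind of generalized eigenproblem with NumPy alone is sketched below. It assumes the problem has the form "(X M Xᵀ + λI) A = X H Xᵀ A Φ" with a symmetric positive semidefinite matrix M, and it keeps the k eigenvectors with the smallest generalized eigenvalues, which is the usual choice for a minimization of this type; the selection rule, the function name `solve_projection` and the synthetic data are assumptions for illustration rather than the authors' implementation.

```python
import numpy as np

def solve_projection(X: np.ndarray, M: np.ndarray, lam: float, k: int) -> np.ndarray:
    """Return A (d x k) solving lhs @ A = rhs @ A @ Phi for the k smallest Phi.

    X is (d, n) with samples as columns; M is the (n, n) summed MMD matrix.
    """
    d, n = X.shape
    H = np.eye(n) - np.ones((n, n)) / n  # centering matrix
    lhs = X @ M @ X.T + lam * np.eye(d)  # positive definite for lam > 0
    rhs = X @ H @ X.T                    # scatter of the centred data
    # Reduce to a symmetric eigenproblem through a Cholesky factor of lhs.
    L = np.linalg.cholesky(lhs)
    L_inv = np.linalg.inv(L)
    S = L_inv @ rhs @ L_inv.T
    S = (S + S.T) / 2.0                  # remove round-off asymmetry
    mu, U = np.linalg.eigh(S)            # mu = 1 / Phi, ascending order
    top = np.argsort(mu)[::-1][:k]       # largest mu <=> smallest Phi
    V = L_inv.T @ U[:, top]
    return V / np.sqrt(mu[top])          # scale so that A^T rhs A = I

rng = np.random.default_rng(0)
d, n_s, n_t, k = 6, 20, 15, 3
X = np.hstack([rng.normal(size=(d, n_s)), rng.normal(loc=0.5, size=(d, n_t))])
e = np.concatenate([np.full(n_s, 1.0 / n_s), np.full(n_t, -1.0 / n_t)])
M = np.outer(e, e)                       # marginal MMD matrix only
A = solve_projection(X, M, lam=1.0, k=k)

n = n_s + n_t
H = np.eye(n) - np.ones((n, n)) / n
print(np.allclose(A.T @ X @ H @ X.T @ A, np.eye(k), atol=1e-6))
```

The Cholesky reduction is a design choice that keeps the example free of extra dependencies; a dedicated generalized eigensolver would serve the same purpose.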

4.2. Three-Way Decision Model Based on Triangular Fuzzy Similarity

In practice, the conditional distribution cannot be obtained directly because there is no label information in the target domain. In order to solve this problem, we first give the concept of the degree of information difference and apply it to the construction of triangular fuzzy numbers and the calculation of the corresponding triangular fuzzy similarity. Then, according to the degree of association of the triangular fuzzy similarity between objects in the target domain, the target domain is divided into positive regions, negative regions and boundary regions with structural information.

For the convenience of the description, suppose that both the domain of discourse U and attribute set A are nonempty finite sets and that is an object in U, is an attribute in A, where .

4.2.1. Information Difference Degree and Triangular Fuzzy Similarity

Definition 4.

Let be the domain of discourse, be the set of attributes, and the value of object under attribute be . When , the degree of information difference of object is as follows (Equation (15)):

(15)

Remark 1.

- (1)

The greater the value of is, the greater the degree of information difference of object under and . When object has the same description for and , the argument of the log function will have a denominator of 0, i.e., . In this case, since , we can obtain that the final degree of information deviation is independent of the value of . For the reasonableness of the calculation, let .

- (2)

For the convenience of the representation, we obtain the information difference matrix of object , which can be expressed as follows (Equation (16)):

(16)

where represents the degree of information difference of object under attributes and .

Theorem 1.

According to definition 4, we have the following conclusions:

- (1)

Boundedness:.

- (2)

Monotonicity: The degree of information difference of about and increases monotonically as the difference increases.

- (3)

Symmetry:.

Proof.

Properties (2) and (3) are easily proven by Definition 4.

- (1)

According to Definition 4, and . When the description of under and appears in two extreme cases, namely, or , we can obtain , and the information difference reaches the maximum at this time, . □

Definition 5.

Let U be the domain of discourse, and let the triangular fuzzy number of under attribute set A be , where , , and denotes the number of information difference values. Then, the degree of triangular fuzzy similarity between and is as follows (Equation (17)):

(17)

Theorem 2.

The degree of triangular fuzzy similarity satisfies the following properties:

- (1)

.

- (2)

if, andifandorand.

Proof.

According to Definition 5, (1) obviously holds.

- (2)

Since , when , i.e., , ,, we have , so . Similarly, since and , . When , . In this case, we can obtain and or and . □

4.2.2. Construction of the 3WD Model

Definition 6.

Let U be the universe and A be the set of attributes. The triangular fuzzy similarity between any object and in U is . If there is a threshold , then the -level classes of with respect to are defined as follows (Equation (18)):

(18)

where and are triangular fuzzy similarity classes of the positive and negative fields, respectively. Specifically, the objects in have the smallest degree of information difference and the largest triangular fuzzy similarity on the -level, while the objects in are the opposite.

Suppose is a given goal concept and is the set of states, which represents object x in the -level similarity domain or x in the -level negative similarity domain . is the set of actions, where means acceptance, means delay, and means rejection. According to reference [75], the losses caused by actions taken in different states are shown in Table 1.

Table 1.

Cost function matrix.

| Action | Cost Function | |

|---|---|---|

When the object , , and represent the loss of acceptance, delay and rejection decisions, respectively. Analogously, , and represent the corresponding decision loss cost when . Without any loss of generality, when , we assume that the correct acceptance cost is less than the delay decision cost and less than the corresponding wrong acceptance cost, namely . Similarly, when misclassified, we have . Therefore, the expected losses of object x under the above three decision actions are as follows (Equation (19)):

| (19) |

where and are the probabilities that object x belongs to a similar state of the -level positive or negative domain. By introducing Bayesian minimum risk decision theory, we have (Equation (20)):

| (20) |

Furthermore, from Equations (19) and (20), we can obtain (Equation (21)):

| (21) |

where (Equation (22))

| (22) |
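To make the link between the cost function and the decision thresholds concrete, the sketch below assumes that the thresholds take the standard decision-theoretic rough set form obtained from Bayesian minimum-risk decisions; the source does not show the formulas here, so this form is an assumption. The field names of `Losses` and the numeric costs are hypothetical: the first letter is the action (P accept, B delay, N reject) and the second letter is the true state (P inside the concept, N outside it).

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class Losses:
    """Hypothetical loss values for each (action, state) pair."""
    pp: float  # accept, object belongs to the concept
    bp: float  # delay, object belongs to the concept
    np_: float # reject, object belongs to the concept
    pn: float  # accept, object does not belong to the concept
    bn: float  # delay, object does not belong to the concept
    nn: float  # reject, object does not belong to the concept

def thresholds(l: Losses) -> Tuple[float, float]:
    """Standard DTRS thresholds (alpha, beta) derived from the losses."""
    alpha = (l.pn - l.bn) / ((l.pn - l.bn) + (l.bp - l.pp))
    beta = (l.bn - l.nn) / ((l.bn - l.nn) + (l.np_ - l.bp))
    return alpha, beta

def decide(p: float, alpha: float, beta: float) -> str:
    """Three-way decision for an object with membership probability p."""
    if p >= alpha:
        return "accept"
    if p <= beta:
        return "reject"
    return "delay"

# Illustrative costs satisfying pp <= bp < np_ and nn <= bn < pn.
losses = Losses(pp=0.0, bp=2.0, np_=6.0, pn=8.0, bn=2.0, nn=0.0)
alpha, beta = thresholds(losses)
print(alpha, beta, [decide(p, alpha, beta) for p in (0.8, 0.5, 0.2)])
```

Raising the cost of a wrong acceptance pushes the upper threshold up, so fewer objects are accepted outright and more are deferred to the boundary region.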

In Algorithm 1, we first measure the degree of information difference for each object according to any two attributes in the target domain (line 2). On this basis, the triangular fuzzy similarity of each object can be calculated (line 3). It is worth noting that we can obtain triangular fuzzy similarity at different levels by adjusting the threshold parameter . Furthermore, the triangular fuzzy similarity is regarded as the cost loss of different classification decisions, and the final decision is implemented by comparing with the decision thresholds and (line 4).

In addition, the higher the value of is, the greater the triangular fuzzy similarity between objects is. On the one hand, since , by changing the parameter , we can obtain the triangular fuzzy similarity of objects in the target domain at different levels. On the other hand, the values of and will directly affect the values of threshold and . The impact of the thresholds on the final result is visualized in detail in Section 6.1.

| Algorithm 1 Three-way decision model based on the triangular fuzzy similarity |

| Input: target domain data , threshold , and . |

| Output: positive region object set , negative region object set , boundary region object set . |

| 1: BEGIN |

| 2: Calculate the degree of information difference of each object in the target domain under any two attributes according to Equation (15). |

| 3: Calculate the triangular fuzzy similarity between any two objects in the target domain using Equation (17). |

| 4: According to Equation (21), divide the target domain into three domains. |

| 5: END |

4.3. Adaptation Via Iterative Refinement

In this section, we integrate the methods presented in Section 4.1 and Section 4.2 and finally realize unsupervised domain adaptation to the conditional distribution of cross-domain data by introducing the label propagation algorithm. Specifically, we first obtain the initial pseudolabel of the target domain according to joint distribution adaptation, then obtain the set of boundary objects of the target domain according to the three-way decision model proposed in Section 4.2 and treat these objects as the objects to be classified. Once the above and are obtained, we effectively obtain a semisupervised setting for the target domain data. Following [29], we use the label propagation algorithm to discriminate the boundary objects in the target domain and update . Algorithm 2 summarizes our proposed method. In Algorithm 2, only the marginal distribution is adapted in the initial stage, whereas the subsequent iterations consider both the marginal distribution and the conditional distribution. In addition, the accuracy of the labels in the target domain is gradually improved as the cross-domain distribution differences decrease. In the following experiments, we will show that the proposed method converges to the optimal solution in a finite number of iterations and further prove the effectiveness of the proposed method.

| Algorithm 2 Our Proposed Model |

| Input: source domain data , target domain data , labels of source domain data, threshold , and |

| Output: as labels of target domain data |

| 1: BEGIN |

| 2: Initialize as Null |

| 3: while not converged do |

| 4: (1) Distribution adaptation in Equation (14) and let and |

| 5: (2) Assign using classifiers trained by |

| 6: (3) Obtain in Algorithm 1 |

| 7: (4) Execute label propagation algorithm |

| 8: end while |

| 9: |

| 10: END |
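The label propagation step for the boundary objects can be illustrated with a generic graph-based sketch. It is not necessarily the variant used in [29]: it assumes a Gaussian affinity between projected samples, clamps the labels of the objects already decided (positive and negative regions) and lets the undecided boundary objects, marked with -1, inherit labels from their neighbours. The function name, the `gamma` parameter and the toy points are hypothetical.

```python
import numpy as np

def propagate_labels(Z: np.ndarray, labels: np.ndarray, n_classes: int,
                     gamma: float = 1.0, n_iter: int = 100) -> np.ndarray:
    """Assign labels to boundary objects (label -1) by graph propagation.

    Z has shape (n, k) with samples as rows; labels holds class indices
    for decided objects and -1 for boundary objects.
    """
    sq_dist = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-gamma * sq_dist)           # Gaussian affinity
    np.fill_diagonal(W, 0.0)
    T = W / W.sum(axis=1, keepdims=True)   # row-normalised transition matrix

    known = np.flatnonzero(labels >= 0)
    unknown = np.flatnonzero(labels < 0)
    F = np.zeros((len(labels), n_classes))
    F[known, labels[known]] = 1.0          # one-hot scores for decided objects
    clamp = F[known].copy()

    for _ in range(n_iter):
        F = T @ F                          # spread scores along the graph
        F[known] = clamp                   # keep decided labels fixed

    out = labels.copy()
    out[unknown] = F[unknown].argmax(axis=1)
    return out

# Toy example: two well-separated groups, one decided object in each.
Z = np.array([[0.0, 0.0], [0.2, 0.1], [0.1, 0.3],
              [3.0, 3.0], [3.1, 2.8], [2.9, 3.2]])
labels = np.array([0, -1, -1, 1, -1, -1])
print(propagate_labels(Z, labels, n_classes=2))
```

In the iterative scheme of Algorithm 2, the labels returned here would replace the pseudolabels of the boundary objects before the next round of distribution adaptation.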

5. Experiments

5.1. Materials

5.1.1. Data Acquisition

In order to verify the effectiveness of our proposed method and compare it with the existing research, our experimental data were obtained from the publicly accessible ABIDE dataset. ABIDE is a multisite platform that has aggregated functional and structural brain imaging data collected from 17 different laboratories around the world, including 539 ASD patients and 573 neurotypical controls. All subjects had corresponding resting-state fMRI images and phenotypic information such as age and gender. More details on the data collection, exclusion criteria, and scan parameters are available on the ABIDE website, namely, http://fcon_1000.projects.nitrc.org/indi/abide/ (accessed on 8 October 2020). As the number of samples is limited and varies across sites, we use the data from three different sites, namely NYU, UM and USM, each with more than 50 subjects and using different fMRI protocols. Specifically, there were 343 subjects, including 159 ASD patients and 184 neurotypical controls. Detailed demographic information of the subjects is listed in Table 2. In Table 2, m ± std and M/F are short for mean ± standard deviation and male/female, respectively. At each site, we used the two-sample t-test to evaluate the differences in age between the two groups, and no significant difference was observed between the control group and the ASD group, i.e., p = 0.42 (NYU), p = 0.31 (USM), p = 0.34 (UM). Since the subjects across different sites follow different distributions, it is necessary to perform domain adaptation. In the experiments, we use A→B to denote the knowledge transfer from source domain A to target domain B. We construct a total of six tasks: NYU→USM, NYU→UM, USM→NYU, USM→UM, UM→NYU, and UM→USM.
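The six transfer tasks are simply the ordered pairs of distinct sites, which can be enumerated as in the short sketch below; the variable names are hypothetical.

```python
from itertools import permutations

sites = ["NYU", "USM", "UM"]

# Every ordered (source, target) pair of distinct sites is one task.
tasks = [f"{src}→{tgt}" for src, tgt in permutations(sites, 2)]
print(tasks)
```

Each task would use the first site as the labelled source domain and the second as the unlabelled target domain.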

Table 2.

Demographic information of the studied subjects from three imaging sites in the ABIDE database. The age values are denoted as the mean ± standard deviation. M/F: male/female.

| Site | ASD: Age (m ± std) | ASD: Gender (M/F) | Normal Control: Age (m ± std) | Normal Control: Gender (M/F) |
|---|---|---|---|---|
| NYU | 14.92 ± 7.04 | 64/9 | 15.75 ± 6.23 | 70/36 |
| USM | 24.59 ± 8.46 | 38/0 | 22.33 ± 7.69 | 23/0 |
| UM | 13.85 ± 2.29 | 39/9 | 15.03 ± 3.64 | 49/16 |

5.1.2. Data Pre-Processing

To ensure replicability, the rs-fMRI data used in this research were provided by the Preprocessed Connectome Project initiative and preprocessed using the Data Processing Assistant for Resting-State fMRI (DPARSF) software [76]. The image preprocessing steps were as follows: (1) removing the first 10 time points; (2) slice timing correction; (3) head motion realignment; (4) image standardization by normalizing the functional images to the echo planar imaging (EPI) template; (5) spatial smoothing; (6) removing the linear trend; (7) temporal filtering; and (8) removing covariates. Subsequently, the brain was divided into 90 regions of interest (ROIs) based on the Automatic Anatomical Labelling (AAL) [77] atlas, and the average time series of each ROI was extracted. Then, for each subject, we obtained a 90 × 90 symmetric functional connectivity matrix, where each element represents the Pearson correlation coefficient between a pair of ROIs. Finally, we converted the upper triangle of this matrix (excluding the diagonal) into a 4005-dimensional (90 × 89/2) feature vector to represent each subject.
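The last two steps, building the correlation matrix and flattening its upper triangle, can be sketched as follows. This is a minimal pure-Python illustration, not the DPARSF pipeline; the function names, the random time series, and the choice of 120 time points are assumptions made only for the example.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


def connectivity_features(roi_series):
    """Flatten the strict upper triangle of the ROI-by-ROI correlation matrix.

    roi_series holds one time series (list of floats) per ROI.
    """
    n_roi = len(roi_series)
    return [
        pearson(roi_series[i], roi_series[j])
        for i in range(n_roi)
        for j in range(i + 1, n_roi)
    ]


# Hypothetical input: 90 ROIs with 120 random time points each.
random.seed(0)
series = [[random.gauss(0.0, 1.0) for _ in range(120)] for _ in range(90)]
features = connectivity_features(series)
print(len(features))  # 90 * 89 / 2 = 4005
```

Because the matrix is symmetric with a unit diagonal, the strict upper triangle carries all of its information, which is why 4005 values suffice per subject.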

5.2. Competing Methods

We compared the performance of our method with the following state-of-the-art machine learning models, including one baseline method and three representation-based methods.

Baseline: In this study, we use a support vector machine (SVM) as the base classifier, which is widely used in the field of neuroimaging [11]. Specifically, we designate the data of one site as the source domain, train an SVM model directly on its original features, and then use the data from another site as the target domain to test the trained classifier. For the SVM classifier, we applied a linear kernel and selected the margin penalty by grid search over {2⁻⁵, 2⁻⁴, …, 2⁴, 2⁵} via cross-validation.

Transfer component analysis (TCA) [36]: This is a general feature transformation method that reduces the difference in the marginal distribution between domains by learning transfer components in a reproducing kernel Hilbert space (RKHS).

Joint distribution adaptation (JDA) [30]: The JDA approach reduces the differences in both the marginal distribution and the conditional distribution between domains.

Domain adaptation with label and structural consistency (DALSC) [33]: DALSC is an unsupervised domain adaptation method that uses the structural information of the target domain to improve the performance of the model while adjusting the marginal distribution and conditional distribution between domains.
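The domain adaptation methods above all reduce some measure of distribution difference between domains. One common measure of marginal difference is the empirical maximum mean discrepancy (MMD) [71]. The sketch below uses a linear kernel, for which the squared MMD reduces to the squared distance between the two domain means. It is an illustration only: TCA works with a kernelized form and additionally learns a projection, neither of which is shown, and the function names and toy samples are hypothetical.

```python
def mean_vector(samples):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(samples)
    dim = len(samples[0])
    return [sum(sample[d] for sample in samples) / n for d in range(dim)]


def linear_mmd2(source, target):
    """Squared empirical MMD with a linear kernel.

    With k(x, y) = <x, y>, the squared MMD equals the squared Euclidean
    distance between the source mean and the target mean.
    """
    mu_s = mean_vector(source)
    mu_t = mean_vector(target)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))


# Toy two-dimensional samples standing in for two sites.
source = [[0.0, 1.0], [2.0, 3.0]]
target = [[1.0, 2.0], [3.0, 6.0]]

shifted = linear_mmd2(source, target)  # means differ, so the value is positive
same = linear_mmd2(source, source)     # identical samples give zero
print(shifted, same)
```

A value of zero here only says that the means coincide; richer kernels are needed to detect differences in higher-order statistics.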

5.3. Experimental Setup

In this work, we use 5-fold cross-validation to evaluate the performance of each method. For our method, we set , is searched in {0.5, 0.55, …, 0.85, 0.9}, is searched in {0.55, 0.6, …, 0.9, 0.95}, and . In addition, to evaluate the classification performance, we calculated the numbers of true positives (TPs), false positives (FPs), true negatives (TNs), and false negatives (FNs) by comparing the predicted labels with the gold-standard labels. Then, six evaluation metrics on the test data are utilized: classification accuracy (ACC), sensitivity (SEN), specificity (SPE), balanced accuracy (BAC), positive predictive value (PPV) and negative predictive value (NPV). These metrics are computed as in Equation (23):

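$$
\begin{aligned}
\mathrm{ACC} &= \frac{TP + TN}{TP + FP + TN + FN}, & \mathrm{SEN} &= \frac{TP}{TP + FN}, & \mathrm{SPE} &= \frac{TN}{TN + FP},\\
\mathrm{BAC} &= \frac{\mathrm{SEN} + \mathrm{SPE}}{2}, & \mathrm{PPV} &= \frac{TP}{TP + FP}, & \mathrm{NPV} &= \frac{TN}{TN + FN}.
\end{aligned}
\tag{23}
$$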

For these metrics, higher values indicate better classification performance.
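As a quick check of how the six metrics relate to the four confusion-matrix counts, the following sketch computes them using their standard definitions. The function name and the example counts are hypothetical and chosen only for illustration.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute ACC, SEN, SPE, BAC, PPV and NPV from confusion-matrix counts."""
    sen = tp / (tp + fn)  # sensitivity: fraction of true positives recovered
    spe = tn / (tn + fp)  # specificity: fraction of true negatives recovered
    return {
        "ACC": (tp + tn) / (tp + fp + tn + fn),
        "SEN": sen,
        "SPE": spe,
        "BAC": (sen + spe) / 2,
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
    }


# Hypothetical counts for a target site with 61 subjects.
metrics = classification_metrics(tp=21, fp=13, tn=25, fn=2)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

BAC is the mean of SEN and SPE, so it is less affected than ACC when the two classes have very different sizes.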

5.4. Results on ABIDE with Multisite fMRI Data

In this section, we present the experimental results of the proposed method and the competing methods on the six tasks. Note that the data from each site can be used as the source domain, while the data from the other sites can be used as the target domain. For the three domain adaptation methods (i.e., TCA, JDA, and DALSC) and our proposed method, an unsupervised adaptation setup is adopted, in which no label information from the target domain is used in the prediction process. The classification results of the various methods are shown in Table 3. From Table 3, we can make the following three observations.

Table 3.

Performance of five different methods in ASD classification on the multisite ABIDE database. The number in bold indicates the best result achieved under a certain metric.

| Task | Method | ACC (%) | SEN (%) | SPE (%) | BAC (%) | PPV (%) | NPV (%) |

|---|---|---|---|---|---|---|---|

| NYU→UM | Baseline | 54.87 | 49.23 | 62.50 | 55.87 | 64.00 | 47.62 |
| | TCA | 62.83 | 58.46 | 68.75 | 63.61 | 71.69 | 55.00 |
| | JDA | 64.50 | 66.67 | 61.64 | 64.16 | 69.57 | 58.44 |
| | DALSC | 64.60 | 56.92 | 75.00 | 65.96 | 75.51 | 56.25 |
| | Ours | 70.80 | 72.31 | 68.75 | 70.53 | 75.81 | 64.71 |
| NYU→USM | Baseline | 67.21 | 78.26 | 60.53 | 69.39 | 54.55 | 82.14 |
| | TCA | 68.85 | 82.61 | 60.53 | 71.57 | 55.88 | 85.19 |
| | JDA | 70.49 | 86.96 | 60.53 | 73.74 | 57.14 | 88.46 |
| | DALSC | 72.13 | 73.91 | 71.05 | 72.48 | 60.71 | 81.81 |
| | Ours | 75.41 | 91.30 | 65.79 | 78.55 | 61.76 | 92.59 |
| USM→UM | Baseline | 57.52 | 35.38 | 87.50 | 61.44 | 79.31 | 50.00 |
| | TCA | 58.41 | 38.46 | 85.42 | 61.94 | 78.13 | 50.62 |
| | JDA | 61.06 | 61.54 | 60.42 | 60.98 | 67.80 | 53.70 |
| | DALSC | 64.60 | 73.85 | 52.08 | 62.96 | 67.61 | 59.52 |
| | Ours | 69.91 | 76.92 | 60.42 | 68.67 | 72.46 | 65.91 |
| USM→NYU | Baseline | 53.25 | 35.42 | 76.71 | 56.06 | 66.67 | 47.46 |
| | TCA | 57.39 | 40.63 | 79.45 | 60.04 | 72.22 | 50.43 |
| | JDA | 60.36 | 64.58 | 54.79 | 59.69 | 65.26 | 54.05 |
| | DALSC | 63.91 | 65.63 | 61.64 | 63.63 | 69.23 | 57.69 |
| | Ours | 72.13 | 78.26 | 68.42 | 73.34 | 60.00 | 83.87 |
| UM→NYU | Baseline | 58.58 | 83.33 | 26.03 | 54.68 | 59.70 | 54.29 |
| | TCA | 61.54 | 82.29 | 34.25 | 58.27 | 62.20 | 59.50 |
| | JDA | 63.31 | 82.29 | 38.35 | 60.32 | 63.71 | 62.22 |
| | DALSC | 64.49 | 92.70 | 27.39 | 60.05 | 62.68 | 74.07 |
| | Ours | 71.01 | 90.63 | 45.21 | 67.92 | 68.50 | 78.57 |
| UM→USM | Baseline | 54.09 | 78.26 | 39.47 | 58.87 | 43.90 | 75.00 |
| | TCA | 60.66 | 73.91 | 52.63 | 63.27 | 48.57 | 76.92 |
| | JDA | 60.66 | 78.26 | 50.00 | 64.13 | 48.65 | 79.17 |
| | DALSC | 57.38 | 73.91 | 47.37 | 60.64 | 45.95 | 75.00 |
| | Ours | 68.85 | 82.61 | 60.53 | 71.57 | 55.88 | 85.19 |

First, in terms of accuracy, the domain adaptation methods based on feature representation are better than directly using the SVM classifier to predict the target domain.

Second, among the competing domain adaptation methods, TCA has the lowest accuracy in five of the six tasks (the exception is UM→USM, where DALSC is the lowest), which is likely because it only considers the marginal distribution.

Finally, the classification accuracy of the proposed method is higher than that of the existing domain adaptation methods (TCA, JDA and DALSC) in all six tasks, and the method also performs well on SEN, SPE, BAC and the other metrics.

6. Discussion

In this section, we first analyze the influence of the parameters in the proposed method on the algorithm performance and then compare the proposed method with other state-of-the-art methods.

6.1. Parameter Analysis

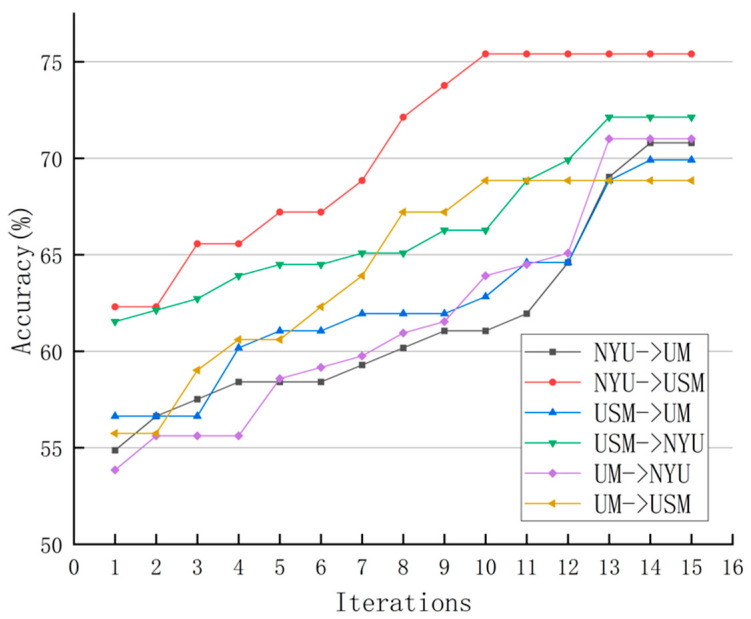

We first analyze the impact of the number of iterations on the performance of the proposed method. As mentioned in Section 4.3, for domain adaptation, we solve the proposed model iteratively. In order to evaluate its convergence, Figure 1 shows the change in algorithm accuracy as the number of iterations increases on the six tasks. It can be seen from Figure 1 that the classification accuracy of each task is gradually improved with the increase in the number of iterations. This indicates that our model learned an invariant data distribution among domains/sites after multiple iterations. The figure shows that the accuracy rate converges in 10–15 iterations.

Figure 1.

Classification accuracy versus the number of iterations on six domain pairs.

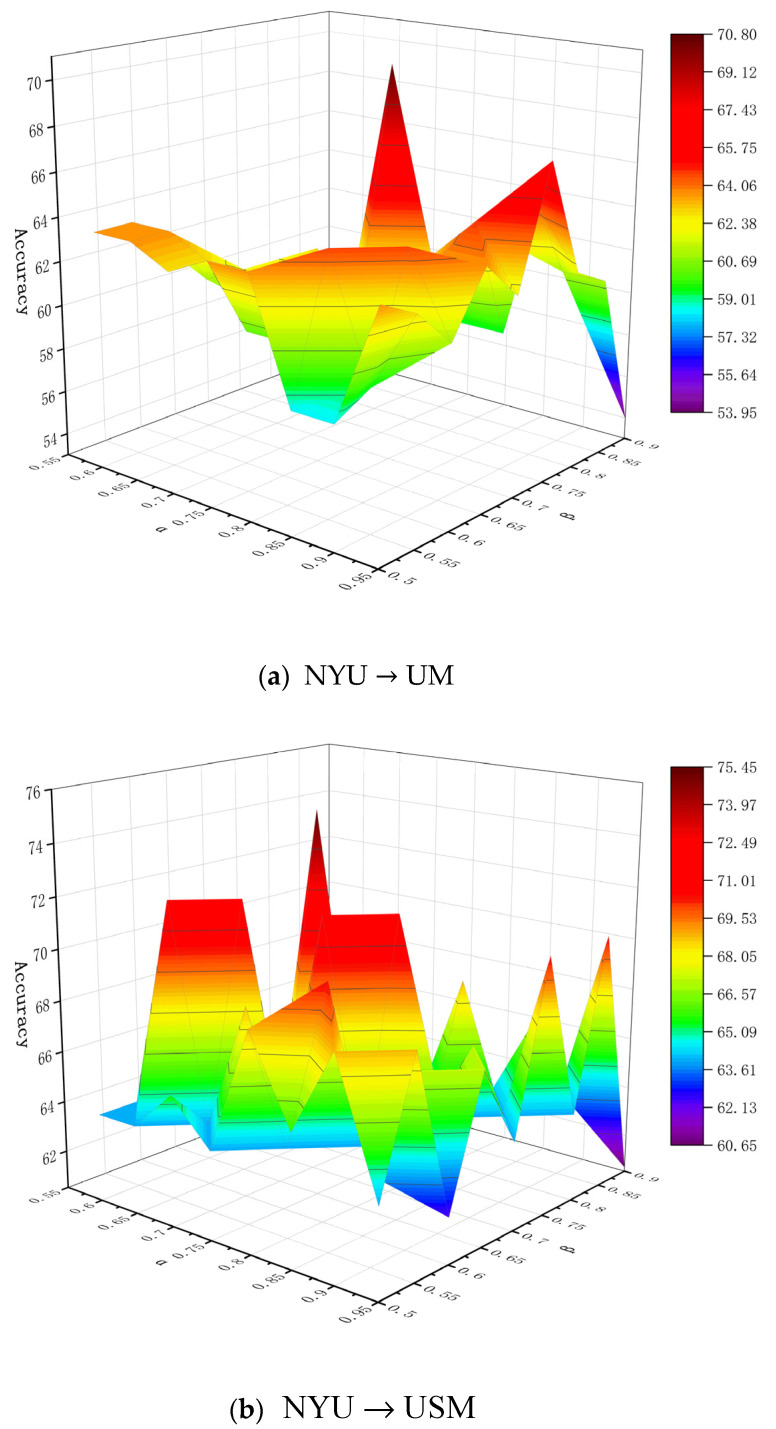

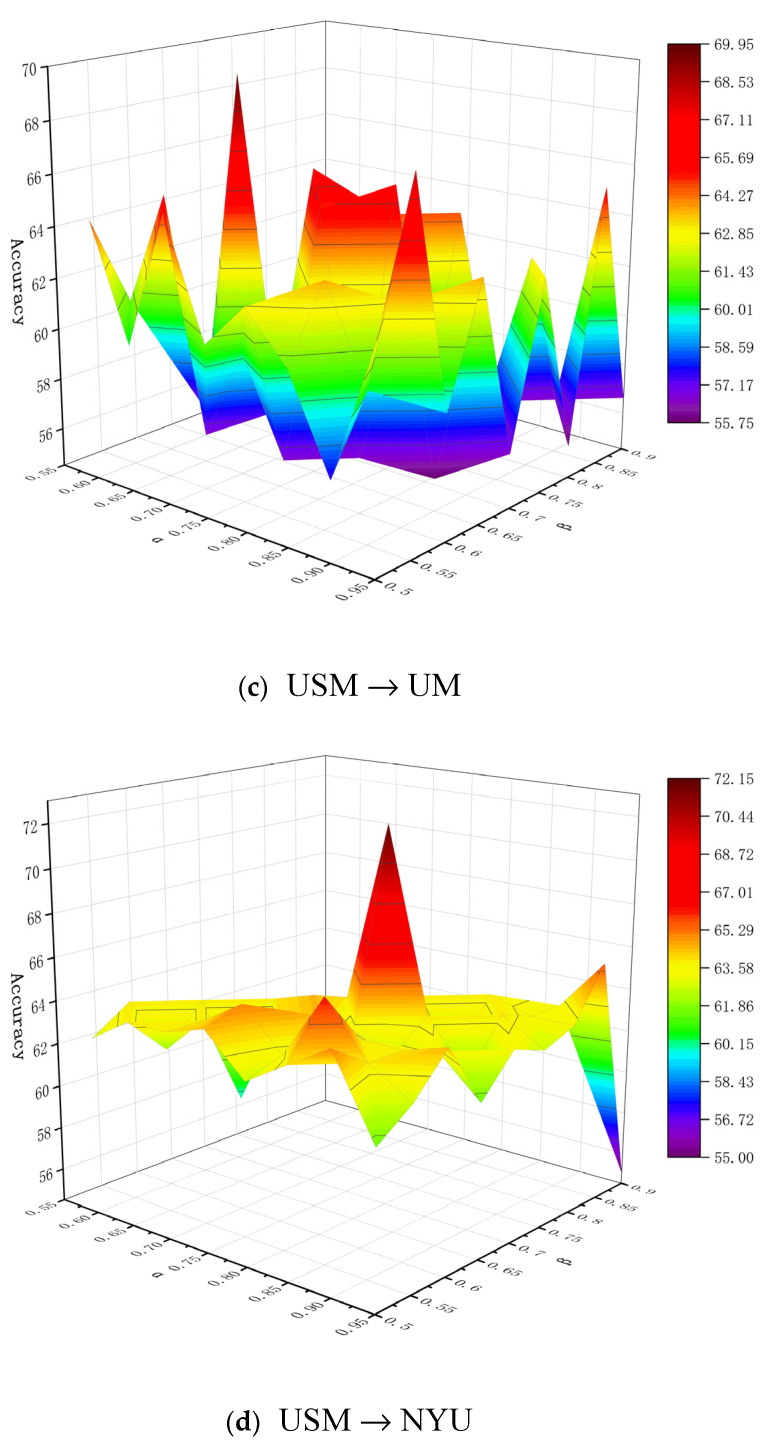

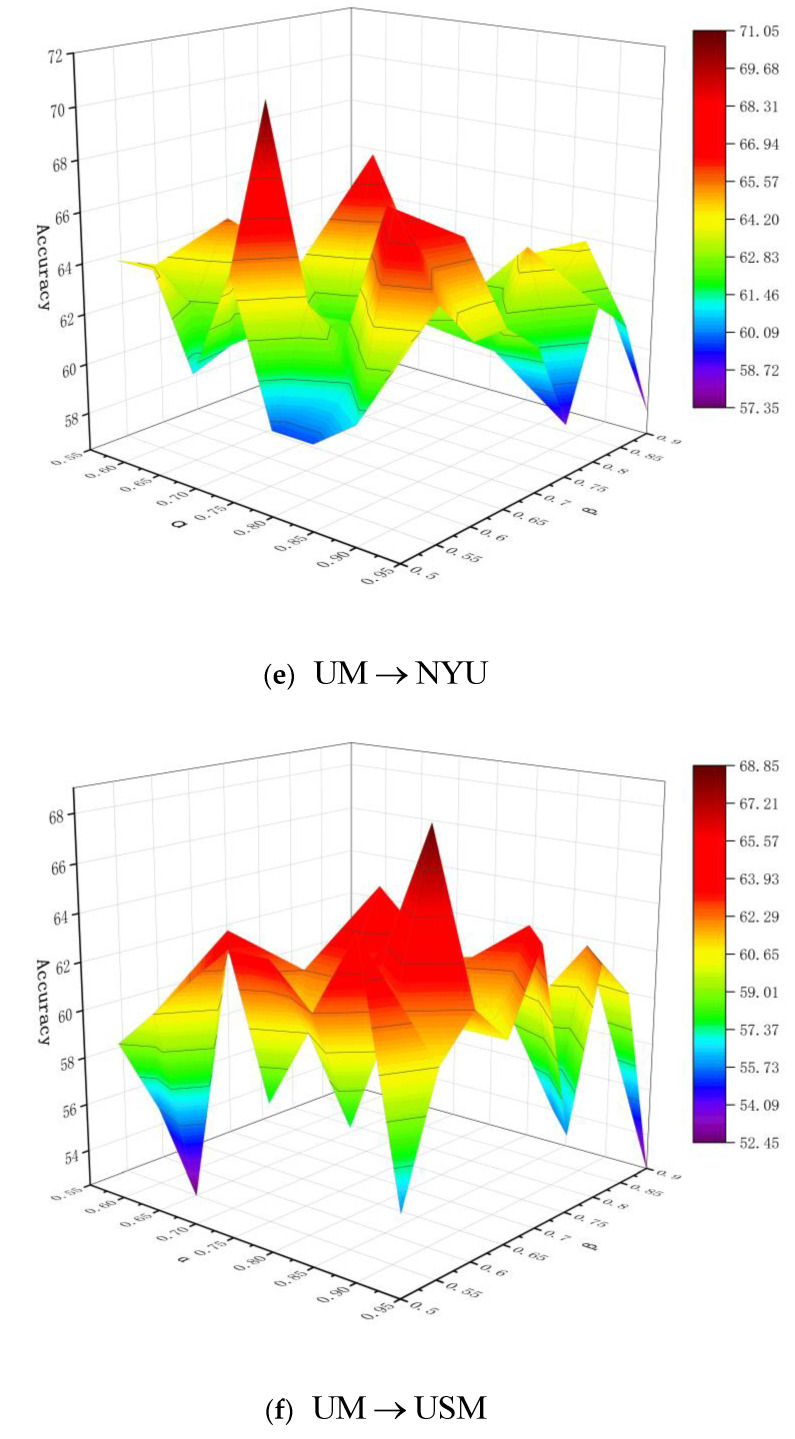

In addition, the two threshold parameters involved in the experiment represent different decision risk cost levels, and slight differences in them may induce different decision results. Without loss of generality, in order to obtain more suitable parameters, we analyze the influence of different threshold values on the performance of the proposed method. Specifically, we conducted comparison experiments at different threshold levels on the six tasks, and the final results are shown in Figure 2. The figures show that the accuracy of the algorithm changes as the thresholds change, and although the degree of fluctuation differs across threshold settings, the accuracy eventually converges. It can be seen from Figure 2 that the optimal threshold pairs for the six tasks NYU→UM, NYU→USM, USM→UM, USM→NYU, UM→NYU and UM→USM are (0.8, 0.7), (0.75, 0.65), (0.7, 0.6), (0.8, 0.7), (0.75, 0.55) and (0.9, 0.6), respectively. Furthermore, it can be observed from Figure 2 that when the lower threshold is small and the upper threshold is large, the classification accuracy of the six tasks is relatively low. This is because such a setting results in more samples from the target domain being divided into the boundary region. More boundary objects increase the uncertain information when implementing the label propagation algorithm, which leads to a decline in classification performance.

Figure 2.

Classification accuracies with respect to different values of the two threshold parameters on six domain pairs: (a) NYU→UM; (b) NYU→USM; (c) USM→UM; (d) USM→NYU; (e) UM→NYU; (f) UM→USM.
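The effect of the thresholds on the size of the boundary region can be illustrated with the generic three-way decision rule from probabilistic rough sets [39]: accept when a score reaches the upper threshold, reject when it falls to the lower threshold, and defer otherwise. The sketch below is an assumption-laden illustration, not the paper's Algorithm 1 (which relies on triangular fuzzy similarity); the scores and the names `three_way_partition`, `upper` and `lower` are hypothetical.

```python
def three_way_partition(scores, upper, lower):
    """Split sample indices into positive, boundary and negative regions."""
    if not lower < upper:
        raise ValueError("the lower threshold must be below the upper threshold")
    regions = {"positive": [], "boundary": [], "negative": []}
    for index, score in enumerate(scores):
        if score >= upper:
            regions["positive"].append(index)   # confident acceptance
        elif score <= lower:
            regions["negative"].append(index)   # confident rejection
        else:
            regions["boundary"].append(index)   # deferred decision
    return regions


# Hypothetical confidence scores for six target-domain samples.
scores = [0.95, 0.85, 0.72, 0.66, 0.58, 0.40]

narrow = three_way_partition(scores, upper=0.75, lower=0.65)
wide = three_way_partition(scores, upper=0.90, lower=0.55)
print(len(narrow["boundary"]), len(wide["boundary"]))
```

Widening the gap between the two thresholds moves samples out of the positive and negative regions and into the boundary region, so more labels must be resolved by the subsequent label propagation step.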

6.2. Comparison with State-of-the-Art Methods

To further verify the effectiveness of our proposed method, we also compare it with six other advanced methods (including deep learning methods) using the rs-fMRI data in the ABIDE database. Since only a few research papers have reported their average classification results across different sites, we only list the classification results on the NYU site in Table 4. In addition, we list the details of each method in Table 4, including the classifier and the type of feature. It is worth noting that the studies in [14,17] selected a proportion of the samples from each site as the training set and then used the trained deep learning model to predict the NYU site directly.

Table 4.

Comparison with state-of-the-art methods for ASD identification using rs-fMRI ABIDE data on the NYU site. HOA: Harvard Oxford Atlas. GMR: grey matter ROIs. AAL: automatic anatomical labelling. CC200: Craddock 200. sGCN: siamese graph convolutional neural network. FCA: functional connectivity analysis. DAE: denoising autoencoder. DANN: deep attention neural networks.

| Method | Feature Type | Feature Dimension | Classifier | ACC (%) |

|---|---|---|---|---|

| sGCN + Hinge Loss [14] | HOA | 111 × 111 | K-Nearest Neighbor (KNN) | 60.50 |

| sGCN + Global Loss [14] | HOA | 111 × 111 | KNN | 63.50 |

| sGCN + Constrained Variance Loss [14] | HOA | 111 × 111 | KNN | 68.00 |

| FCA [17] | GMR | 7266 × 7266 | t-test | 63.00 |

| DAE [16] | CC200 Atlas | 19,900 | Softmax Regression | 66.00 |

| DANN [78] | AAL | 6670 | Deep neural network | 70.90 |

| Ours | AAL | 4005 | SVM | 72.13/71.01 |

As Table 4 shows, the proposed method achieves classification accuracies of 72.13% and 71.01% in the two tasks with NYU as the target domain, which is better than the models proposed in the other research papers. In terms of feature type and feature dimension, this paper uses the AAL atlas to divide the brain into regions and obtains the original feature vector with the smallest dimension. In addition, although sGCN, DAE and DANN are deep learning methods, our proposed method still achieves better classification performance. There may be two reasons for this. (1) Training a robust deep learning model usually requires a large number of samples. However, for multisite ASD recognition, although the data from each site can be fused together to generate a larger dataset, these samples are still insufficient to train a reliable deep neural network. (2) Overfitting usually occurs when a deep neural network processes noisy data. In fact, fMRI data usually contain a large amount of noise, which limits the generalization ability of the trained neural network.

7. Conclusions

In this paper, we propose a novel domain adaptation method for ASD identification with rs-fMRI data. Specifically, we introduce a three-way decision model based on triangular fuzzy similarity and divide the objects in the target domain with coarse granularity. Then, a label propagation algorithm is used to make secondary decisions on boundary region objects so as to improve the performance of ASD diagnosis based on cross-site rs-fMRI data. We conduct extensive experiments on the ABIDE dataset based on multisite data to verify the convergence and robustness of the proposed algorithm. Compared with several state-of-the-art methods, the experimental results show that the proposed method has better classification performance.

Although the classification results of our proposed method for cross-site ASD diagnosis are significantly improved compared with the existing domain adaptation methods based on feature distribution, the following technical problems need to be considered in the future. First, although the proposed method can alleviate data heterogeneity between the source and target domains, the input fMRI features are still unfiltered original high-dimensional features, which may contain redundant features that reduce the performance of the model. Therefore, in the future, we will study how to combine feature selection with our method for ASD diagnosis. Second, in this paper, we only take the functional connectivity matrix of the rs-fMRI data as the feature representation of each subject without considering the network topology information. In future research, we will consider the fusion of functional brain network topology data to provide more valuable discriminant information for ASD diagnosis. Finally, in order to obtain more valuable structured information of the target domain, we will consider combining multigranularity rough sets to further improve the model performance.

Acknowledgments

The authors would like to thank all individuals who participated in the initial experiments from which raw data were collected.

Author Contributions

C.S. initiated the research and wrote the paper. C.S. and X.X. performed the experiments; J.Z. supervised the research work and provided helpful suggestions. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the General Program (61977010), Key Program (61731003) of Nature Science Foundation of China and the Beijing Normal University Interdisciplinary Research Foundation for the First-Year Doctoral Candidates (BNUXKJC1925).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Khan N.A., Waheeb S.A., Riaz A., Shang X. A Three-Stage Teacher, Student Neural Networks and Sequential Feed Forward Selection-Based Feature Selection Approach for the Classification of Autism Spectrum Disorder. Brain Sci. 2020;10:754. doi: 10.3390/brainsci10100754. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kong Y., Gao J., Xu Y., Pan Y., Wang J., Liu J. Classification of autism spectrum disorder by combining brain connectivity and deep neural network classifier. Neurocomputing. 2019;324:63–68. doi: 10.1016/j.neucom.2018.04.080. [DOI] [Google Scholar]

- 3.Zecavati N., Spence S.J. Neurometabolic disorders and dysfunction in autism spectrum disorders. Curr. Neurol. Neurosci. 2009;9:129–136. doi: 10.1007/s11910-009-0021-x. [DOI] [PubMed] [Google Scholar]

- 4.Amaral D.G., Schumann C.M., Nordahl C.W. Neuroanatomy of autism. Trends Neurosci. 2008;31:137–145. doi: 10.1016/j.tins.2007.12.005. [DOI] [PubMed] [Google Scholar]

- 5.Khundrakpam B.S., Lewis J.D., Kostopoulos P., Carbonell F., Evans A.C. Cortical Thickness Abnormalities in Autism Spectrum Disorders Through Late Childhood, Adolescence, and Adulthood: A Large-Scale MRI Study. Cereb. Cortex. 2017;27:1721–1731. doi: 10.1093/cercor/bhx038. [DOI] [PubMed] [Google Scholar]

- 6.Zablotsky B., Black L.I., Maenner M.J., Schieve L.A., Blumberg S.J. Estimated Prevalence of Autism and Other Developmental Disabilities Following Questionnaire Changes in the 2014 National Health Interview Survey. Natl. Health Stat. Rep. 2015;87:1–20. [PubMed] [Google Scholar]

- 7.Wang M., Zhang D., Huang J., Yap P., Shen D., Liu M. Identifying Autism Spectrum Disorder With Multi-Site fMRI via Low-Rank Domain Adaptation. IEEE Trans. Med. Imaging. 2020;39:644–655. doi: 10.1109/TMI.2019.2933160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Fernell E., Eriksson M.A., Gillberg C. Early diagnosis of autism and impact on prognosis: A narrative review. Clin. Epidemiol. 2013;5:33–43. doi: 10.2147/CLEP.S41714. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Mwangi B., Ebmeier K.P., Matthews K., Steele J.D. Multi-centre diagnostic classification of individual structural neuroimaging scans from patients with major depressive disorder. Brain J. Neurol. 2012;135:1508–1521. doi: 10.1093/brain/aws084. [DOI] [PubMed] [Google Scholar]

- 10.Plitt M., Barnes K.A., Martin A. Functional connectivity classification of autism identifies highly predictive brain features but falls short of biomarker standards. NeuroImage Clin. 2015;7:359–366. doi: 10.1016/j.nicl.2014.12.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Shi C., Zhang J., Wu X. An fMRI Feature Selection Method Based on a Minimum Spanning Tree for Identifying Patients with Autism. Symmetry. 2020;12:1995. doi: 10.3390/sym12121995. [DOI] [Google Scholar]

- 12.Van den Heuvel M.P., Hulshoff Pol H.E. Exploring the brain network: A review on resting-state fMRI functional connectivity. Eur. Neuropsychopharmacol. 2010;20:519–534. doi: 10.1016/j.euroneuro.2010.03.008. [DOI] [PubMed] [Google Scholar]

- 13.Song J., Yoon N., Jang S., Lee G., Kim B. Neuroimaging-Based Deep Learning in Autism Spectrum Disorder and Attention-Deficit/Hyperactivity Disorder. J. Child Adolesc. Psychiatry. 2020;31:97–104. doi: 10.5765/jkacap.200021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Ktena S.I., Parisot S., Ferrante E., Rajchl M., Lee M., Glocker B., Rueckert D. Metric learning with spectral graph convolutions on brain connectivity networks. Neuroimage. 2018;169:431–442. doi: 10.1016/j.neuroimage.2017.12.052. [DOI] [PubMed] [Google Scholar]

- 15.Abraham A., Milham M.P., Di Martino A., Craddock R.C., Samaras D., Thirion B., Varoquaux G. Deriving reproducible biomarkers from multi-site resting-state data: An Autism-based example. Neuroimage. 2017;147:736–745. doi: 10.1016/j.neuroimage.2016.10.045. [DOI] [PubMed] [Google Scholar]

- 16.Heinsfeld A.S., Franco A.R., Craddock R.C., Buchweitz A., Meneguzzi F. Identification of autism spectrum disorder using deep learning and the ABIDE dataset. NeuroImage Clin. 2017;17:16–23. doi: 10.1016/j.nicl.2017.08.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Nielsen J.A., Zielinski B.A., Fletcher P.T., Alexander A.L., Lange N., Bigler E.D., Lainhart J.E., Anderson J.S. Multisite functional connectivity MRI classification of autism: ABIDE results. Front. Hum. Neurosci. 2013;7 doi: 10.3389/fnhum.2013.00599. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Dai W., Yang Q., Xue G.R., Yu Y. Boosting for transfer learning; Proceedings of the 24th International Conference on Machine Learning; Corvalis, OR, USA. 20–24 June 2007; pp. 193–200. [Google Scholar]

- 19.Ren C.X., Dai D.Q., Huang K.K., Lai Z.R. Transfer learning of structured representation for face recognition. IEEE Trans. Image Process. 2014;23:5440–5454. doi: 10.1109/TIP.2014.2365725. [DOI] [PubMed] [Google Scholar]

- 20.Chen Y., Wang J., Huang M., Yu H. Cross-position activity recognition with stratified transfer learning. Perv. Mob. Comput. 2019;57:1–13. doi: 10.1016/j.pmcj.2019.04.004. [DOI] [Google Scholar]

- 21.Yi J., Tao J., Wen Z., Bai Y. Language-adversarial transfer learning for low-resource speech recognition. IEEE-ACM Trans. Audio Speech Lang. 2019;27:621–630. doi: 10.1109/TASLP.2018.2889606. [DOI] [Google Scholar]

- 22.Do C.B., Ng A.Y. Transfer learning for text classification. Adv. Neural Inf. Process. Syst. 2005;18:299–306. [Google Scholar]

- 23.Xu Y., Pan S.J., Xiong H., Wu Q., Luo R., Min H., Song H. A Unified Framework for Metric Transfer Learning. IEEE Trans. Knowl. Data Eng. 2017;29:1158–1171. doi: 10.1109/TKDE.2017.2669193. [DOI] [Google Scholar]

- 24.Duan L., Tsang I.W., Xu D., Chua T.S. Domain adaptation from multiple sources via auxiliary classifiers; Proceedings of the 26th Annual International Conference on Machine Learning; Montreal, QC, Canada. 14–18 June 2009; pp. 289–296. [Google Scholar]

- 25.Long M., Wang J., Ding G., Pan S.J., Yu P.S. Adaptation Regularization: A General Framework for Transfer Learning. IEEE Trans. Knowl. Data Eng. 2014;26:1076–1089. doi: 10.1109/TKDE.2013.111. [DOI] [Google Scholar]

- 26.Fernando B., Habrard A., Sebban M., Tuytelaars T. Unsupervised visual domain adaptation using subspace alignment; Proceedings of the IEEE International Conference on Computer Vision; Sydney, Australia. 1–8 December 2013; pp. 2960–2967. [Google Scholar]

- 27.Gong B., Shi Y., Sha F., Grauman K. Geodesic flow kernel for unsupervised domain adaptation; Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition; Washington, DC, USA. 16–21 June 2012; pp. 2066–2073. [Google Scholar]

- 28.Sun B., Feng J., Saenko K. Return of frustratingly easy domain adaptation; Proceedings of the AAAI Conference on Artificial Intelligence; Phoenix, AR, USA. 12–17 February 2016. [Google Scholar]

- 29.Wang J., Chen Y., Yu H., Huang M., Yang Q. Easy transfer learning by exploiting intra-domain structures; Proceedings of the IEEE International Conference on Multimedia and Expo; Shanghai, China. 8–12 July 2019; pp. 1210–1215. [Google Scholar]

- 30.Long M., Wang J., Ding G., Sun J., Yu P.S. Transfer feature learning with joint distribution adaptation; Proceedings of the IEEE International Conference on Computer Vision; Sydney, Australia. 1–8 December 2013; pp. 2200–2207. [Google Scholar]

- 31.Wang J., Chen Y., Hao S., Feng W., Shen Z. Balanced distribution adaptation for transfer learning; Proceedings of the 2017 IEEE International Conference on Data Mining; New Orleans, LA, USA. 18–21 November 2017; pp. 1129–1134. [Google Scholar]

- 32.Zhang J., Li W., Ogunbona P. Joint geometrical and statistical alignment for visual domain adaptation; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 1859–1867. [Google Scholar]

- 33.Hou C., Tsai Y.H., Yeh Y., Wang Y.F. Unsupervised Domain Adaptation with Label and Structural Consistency. IEEE T Image Process. 2016;25:5552–5562. doi: 10.1109/TIP.2016.2609820. [DOI] [PubMed] [Google Scholar]

- 34.Tahmoresnezhad J., Hashemi S. Visual domain adaptation via transfer feature learning. Knowl. Inf. Syst. 2017;50:586–605. doi: 10.1007/s10115-016-0944-x. [DOI] [Google Scholar]

- 35.Zhang Y., Deng B., Jia K., Zhang L. Label propagation with augmented anchors: A simple semi-supervised learning baseline for unsupervised domain adaptation; Proceedings of the European Conference on Computer Vision; Glasgow, UK. 23–28 August 2020; pp. 781–797. [Google Scholar]

- 36.Pan S.J., Tsang I.W., Kwok J.T., Yang Q. Domain Adaptation via Transfer Component Analysis. IEEE Trans. Neural Netw. 2011;22:199–210. doi: 10.1109/TNN.2010.2091281. [DOI] [PubMed] [Google Scholar]

- 37.Long M., Wang J., Ding G., Sun J., Yu P.S. Transfer joint matching for unsupervised domain adaptation; Proceedings of the IEEE conference on computer vision and pattern recognition; Columbus, OH, USA. 23–28 June 2014; pp. 1410–1417. [Google Scholar]

- 38.Wang J., Chen Y., Hu L., Peng X., Philip S.Y. Stratified transfer learning for cross-domain activity recognition; Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications; Athens, Greece. 19–23 March 2018; pp. 1–10. [Google Scholar]

- 39.Yao Y. Three-way decisions with probabilistic rough sets. Inf. Sci. 2010;180:341–353. doi: 10.1016/j.ins.2009.09.021. [DOI] [Google Scholar]

- 40.Chu X., Sun B., Huang Q., Zhang Y. Preference degree-based multi-granularity sequential three-way group conflict decisions approach to the integration of TCM and Western medicine. Comput. Ind. Eng. 2020;143 doi: 10.1016/j.cie.2020.106393. [DOI] [Google Scholar]

- 41.Almasvandi Z., Vahidinia A., Heshmati A., Zangeneh M.M., Goicoechea H.C., Jalalvand A.R. Coupling of digital image processing and three-way calibration to assist a paper-based sensor for determination of nitrite in food samples. RSC Adv. 2020;10:14422–14430. doi: 10.1039/C9RA10918H. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Ren F., Wang L. Sentiment analysis of text based on three-way decisions. J. Intell. Fuzzy Syst. 2017;33:245–254. doi: 10.3233/JIFS-161522. [DOI] [Google Scholar]

- 43.Yao Y. International Conference on Rough Sets and Knowledge Technology. Springer; Berlin/Heidelberg, Germany: May 14–16, 2007. Decision-theoretic rough set models; pp. 1–12. [Google Scholar]

- 44.Zhang H., Yang S. Three-way group decisions with interval-valued decision-theoretic rough sets based on aggregating inclusion measures. Int. J. Approx. Reason. 2019;110:31–45. doi: 10.1016/j.ijar.2019.03.011. [DOI] [Google Scholar]

- 45.Liu P., Yang H. Three-way decisions with intuitionistic uncertain linguistic decision-theoretic rough sets based on generalized Maclaurin symmetric mean operators. Int. J. Fuzzy Syst. 2020;22:653–667. doi: 10.1007/s40815-019-00718-7. [DOI] [Google Scholar]

- 46.Agbodah K. The determination of three-way decisions with decision-theoretic rough sets considering the loss function evaluated by multiple experts. Granul. Comput. 2019;4:285–297. doi: 10.1007/s41066-018-0099-0. [DOI] [Google Scholar]

- 47.Liang D., Wang M., Xu Z., Liu D. Risk appetite dual hesitant fuzzy three-way decisions with TODIM. Inf. Sci. 2020;507:585–605. doi: 10.1016/j.ins.2018.12.017. [DOI] [Google Scholar]

- 48.Qian T., Wei L., Qi J. A theoretical study on the object (property) oriented concept lattices based on three-way decisions. Soft Comput. 2019;23:9477–9489. doi: 10.1007/s00500-019-03799-6. [DOI] [Google Scholar]

- 49.Yang C., Zhang Q., Zhao F. Hierarchical Three-Way Decisions with Intuitionistic Fuzzy Numbers in Multi-Granularity Spaces. IEEE Access. 2019;7:24362–24375. doi: 10.1109/ACCESS.2019.2900536. [DOI] [Google Scholar]

- 50.Yao Y., Deng X. Sequential three-way decisions with probabilistic rough sets; Proceedings of the IEEE 10th International Conference on Cognitive Informatics and Cognitive Computing; Banff, AB, Canada. 18–20 August 2011; pp. 120–125. [Google Scholar]

- 51.Yang X., Li T., Liu D., Fujita H. A temporal-spatial composite sequential approach of three-way granular computing. Inf. Sci. 2019;486:171–189. doi: 10.1016/j.ins.2019.02.048. [DOI] [Google Scholar]

- 52.Zhang L., Li H., Zhou X., Huang B. Sequential three-way decision based on multi-granular autoencoder features. Inf. Sci. 2020;507:630–643. doi: 10.1016/j.ins.2019.03.061. [DOI] [Google Scholar]

- 53.Liu D., Ye X. A matrix factorization based dynamic granularity recommendation with three-way decisions. Knowl. Based Syst. 2020;191 doi: 10.1016/j.knosys.2019.105243. [DOI] [Google Scholar]

- 54.Yao Y. Three-way granular computing, rough sets, and formal concept analysis. Int. J. Approx. Reason. 2020;116:106–125. doi: 10.1016/j.ijar.2019.11.002. [DOI] [Google Scholar]

- 55.Lang G., Luo J., Yao Y. Three-way conflict analysis: A unification of models based on rough sets and formal concept analysis. Knowl. Based Syst. 2020;194 doi: 10.1016/j.knosys.2020.105556. [DOI] [Google Scholar]

- 56.Xin X., Song J., Peng W. Intuitionistic Fuzzy Three-Way Decision Model Based on the Three-Way Granular Computing Method. Symmetry. 2020;12:1068. doi: 10.3390/sym12071068. [DOI] [Google Scholar]

- 57.Yue X.D., Chen Y.F., Miao D.Q., Fujita H. Fuzzy neighborhood covering for three-way classification. Inf. Sci. 2020;507:795–808. doi: 10.1016/j.ins.2018.07.065. [DOI] [Google Scholar]

- 58.Ma Y.Y., Zhang H.R., Xu Y.Y., Min F., Gao L. Three-way recommendation integrating global and local information. J. Eng. 2018;16:1397–1401. doi: 10.1049/joe.2018.8300. [DOI] [Google Scholar]

- 59.Yu H., Wang X., Wang G., Zeng X. An active three-way clustering method via low-rank matrices for multi-view data. Inf. Sci. 2020;507:823–839. doi: 10.1016/j.ins.2018.03.009. [DOI] [Google Scholar]

- 60.Taneja S.S. Re: Variability of the Positive Predictive Value of PI-RADS for Prostate MRI across 26 Centers: Experience of the Society of Abdominal Radiology Prostate Cancer Disease-Focused Panel. J. Urol. 2020;204:1380–1381. doi: 10.1097/JU.0000000000001283. [DOI] [PubMed] [Google Scholar]

- 61.Salama S., Khan M., Shanechi A., Levy M., Izbudak I. MRI differences between MOG antibody disease and AQP4 NMOSD. Mult. Scler. 2020;26:1854–1865. doi: 10.1177/1352458519893093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Li W., Lin X., Chen X. Detecting Alzheimer’s disease Based on 4D fMRI: An exploration under deep learning framework. Neurocomputing. 2020;388:280–287. doi: 10.1016/j.neucom.2020.01.053. [DOI] [Google Scholar]

- 63.Riaz A., Asad M., Alonso E., Slabaugh G. DeepFMRI: End-to-end deep learning for functional connectivity and classification of ADHD using fMRI. J. Neurosci. Meth. 2020;335 doi: 10.1016/j.jneumeth.2019.108506. [DOI] [PubMed] [Google Scholar]

- 64.Diciotti S., Orsolini S., Salvadori E., Giorgio A., Toschi N., Ciulli S., Ginestroni A., Poggesi A., De Stefano N., Pantoni L., et al. Resting state fMRI regional homogeneity correlates with cognition measures in subcortical vascular cognitive impairment. J. Neurol. Sci. 2017;373:1–6. doi: 10.1016/j.jns.2016.12.003. [DOI] [PubMed] [Google Scholar]

- 65.Lu H., Liu S., Wei H., Tu J. Multi-kernel fuzzy clustering based on auto-encoder for fMRI functional network. Exp. Syst. Appl. 2020;159 doi: 10.1016/j.eswa.2020.113513. [DOI] [Google Scholar]

- 66.Leming M., Górriz J.M., Suckling J. Ensemble Deep Learning on Large, Mixed-Site fMRI Datasets in Autism and Other Tasks. Int. J. Neural Syst. 2020;30 doi: 10.1142/S0129065720500124. [DOI] [PubMed] [Google Scholar]

- 67.Benabdallah F.Z., Maliani A.D.E., Lotfi D., Hassouni M.E. Analysis of the Over-Connectivity in Autistic Brains Using the Maximum Spanning Tree: Application on the Multi-Site and Heterogeneous ABIDE Dataset; Proceedings of the 2020 8th International Conference on Wireless Networks and Mobile Communications (WINCOM); Reims, France. 27–29 October 2020; pp. 1–7. [Google Scholar]

- 68.Eslami T., Mirjalili V., Fong A., Laird A.R., Saeed F. ASD-DiagNet: A Hybrid Learning Approach for Detection of Autism Spectrum Disorder Using fMRI Data. Front. Neuroinf. 2019;13 doi: 10.3389/fninf.2019.00070. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Bi X., Wang Y., Shu Q., Sun Q., Xu Q. Classification of Autism Spectrum Disorder Using Random Support Vector Machine Cluster. Front. Genet. 2018;9 doi: 10.3389/fgene.2018.00018.

- 70.Rakić M., Cabezas M., Kushibar K., Oliver A., Lladó X. Improving the detection of autism spectrum disorder by combining structural and functional MRI information. NeuroImage Clin. 2020;25 doi: 10.1016/j.nicl.2020.102181.

- 71.Borgwardt K.M., Gretton A., Rasch M.J., Kriegel H., Schölkopf B., Smola A.J. Integrating structured biological data by Kernel Maximum Mean Discrepancy. Bioinformatics. 2006;22:e49–e57. doi: 10.1093/bioinformatics/btl242.

- 72.Steinwart I. On the influence of the kernel on the consistency of support vector machines. J. Mach. Learn. Res. 2001;2:67–93.

- 73.Xu Z.S. Study on method for triangular fuzzy number-based multi-attribute decision making with preference information on alternatives. Syst. Eng. Electron. 2002;24:9–12.

- 74.Yao Y. The superiority of three-way decisions in probabilistic rough set models. Inf. Sci. 2011;181:1080–1096. doi: 10.1016/j.ins.2010.11.019.

- 75.Yao Y. An outline of a theory of three-way decisions; Proceedings of the International Conference on Rough Sets and Current Trends in Computing; Chengdu, China. 17–20 August 2012; pp. 1–17.

- 76.Chao-Gan Y., Yu-Feng Z. DPARSF: A MATLAB Toolbox for “Pipeline” Data Analysis of Resting-State fMRI. Front. Syst. Neurosci. 2010;4 doi: 10.3389/fnsys.2010.00013.

- 77.Tzourio-Mazoyer N., Landeau B., Papathanassiou D., Crivello F., Etard O., Delcroix N., Mazoyer B., Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- 78.Niu K., Guo J., Pan Y., Gao X., Peng X., Li N., Li H. Multichannel deep attention neural networks for the classification of autism spectrum disorder using neuroimaging and personal characteristic data. Complexity. 2020 doi: 10.1155/2020/1357853.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.