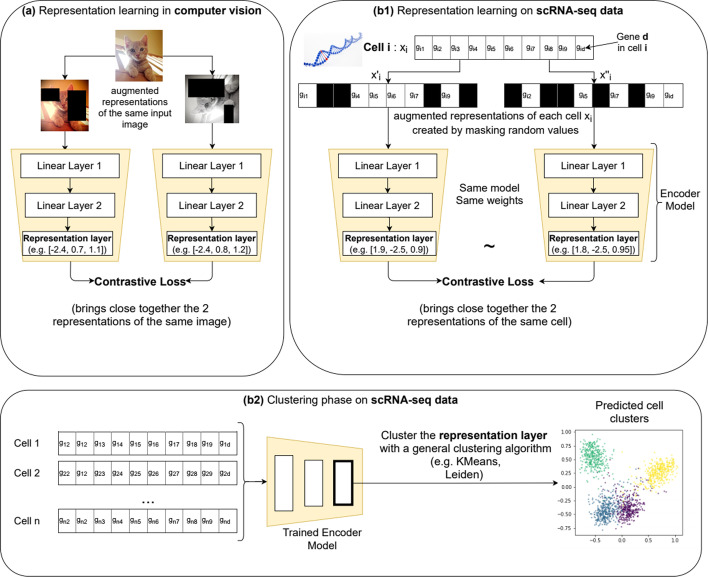

Fig. 1.

Method overview. The method is inspired by contrastive learning techniques proposed for image analysis (a). For each image, an embedding (i.e. the value of the representation layer) is learned by applying a contrastive loss to the representations of two copies of the same image, each modified with strong transformations such as multiple cropping, pixel noise, rotations, and translations. This embedding can then be analyzed with a general-purpose clustering algorithm to produce a cluster assignment for each image. A similar process was proposed for scRNA-seq data (b1, b2). First, a representation learning phase (b1) produces an embedding for each cell (e.g. the vector [2.3, −3.1, 0.2] is the embedding of the depicted Cell i). After the network is trained, all generated cell embeddings are clustered with a general-purpose clustering algorithm such as KMeans or Leiden (b2). Representation learning starts from two strongly augmented copies of the input data, created by masking an arbitrary fraction (e.g. 80%) of the input genes, denoted [g1, …, gd]. The network is trained with an unsupervised contrastive loss that guides the model to map similar views to neighboring representations and dissimilar views to non-neighboring representations.
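The representation learning phase (b1) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The caption does not name the exact loss, so an NT-Xent-style contrastive loss is assumed here. The helper names `mask_genes` and `nt_xent_loss`, the temperature value, the choice to mask genes independently per cell, and the random linear map `w` standing in for the trained network are all hypothetical.

```python
import numpy as np

def mask_genes(x, mask_fraction=0.8, rng=None):
    """Zero out a random fraction of genes in each cell (rows: cells, columns: genes).

    Assumption: genes are masked independently per cell.
    """
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(x.shape) >= mask_fraction
    return x * keep

def nt_xent_loss(z1, z2, temperature=0.5):
    """NT-Xent-style contrastive loss (assumed; the caption does not name the loss).

    z1[i] and z2[i] are embeddings of two views of the same cell (positive pair);
    every other embedding in the batch acts as a negative.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)
    # Cosine similarity via L2-normalised embeddings; epsilon guards against zero vectors.
    z = z / (np.linalg.norm(z, axis=1, keepdims=True) + 1e-12)
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)  # a view is never compared with itself
    # Index of the positive partner for each of the 2n rows.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    log_prob = sim - np.log(np.exp(sim).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * n), pos].mean()

# Toy usage: 8 cells, 50 genes, 3-dimensional embeddings.
rng = np.random.default_rng(0)
x = rng.poisson(2.0, size=(8, 50)).astype(float)   # synthetic count matrix
w = rng.normal(size=(50, 3))                       # hypothetical stand-in for the encoder
view1 = mask_genes(x, 0.8, rng)
view2 = mask_genes(x, 0.8, rng)
loss = nt_xent_loss(view1 @ w, view2 @ w)
```

In an actual training loop the loss would be minimised with respect to the encoder parameters, and the resulting embeddings would then be passed to a clustering algorithm such as KMeans or Leiden, as in stage (b2).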