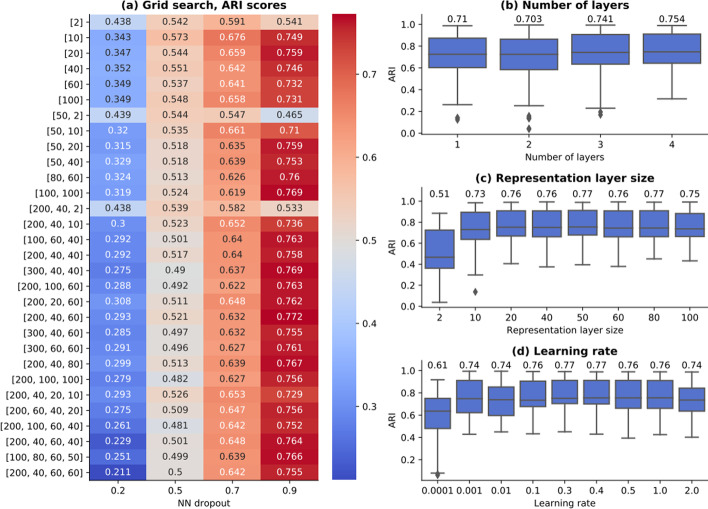

Fig. 11.

Network architecture search. A set of 30 neural network architectures consisting of 1–4 stacked linear layers was trained on all real datasets (a). The labels indicate the network layer sizes (e.g., [60, 20] is a network composed of 2 linear layers of sizes 60 and 20, the latter also being the cell embedding size). All values in a–d are ARI scores from 3 runs of each experiment. Network dropout is the only parameter with a significant impact on model performance. The number of layers (b) influences the model output only marginally. Similar results were obtained for the embedding size (c), as long as it is large enough (> 10 values). Learning rates from 0.0001 to 2 were explored (d), and the results indicate that the optimal value is 0.4. The annotated values represent the mean across all underlying experiments.
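
As a rough illustration of how scores like those summarised in this figure could be computed, the following pure-Python sketch implements the standard adjusted Rand index (ARI) and averages it over three runs. The function names and the toy labels are hypothetical and are not taken from the study, which may well have relied on a library implementation instead.

```python
from collections import Counter
from math import comb
from statistics import mean


def adjusted_rand_index(labels_true, labels_pred):
    """Standard ARI between two flat clusterings of the same items."""
    if len(labels_true) != len(labels_pred):
        raise ValueError("label sequences must have the same length")
    n = len(labels_true)
    if n < 2:
        return 1.0  # no pairs to compare; treat as perfect agreement

    # Pair counts from the contingency table and from its margins.
    contingency = Counter(zip(labels_true, labels_pred))
    sum_cells = sum(comb(c, 2) for c in contingency.values())
    sum_rows = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_cols = sum(comb(c, 2) for c in Counter(labels_pred).values())

    expected = sum_rows * sum_cols / comb(n, 2)  # agreement expected by chance
    max_index = (sum_rows + sum_cols) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. a single cluster on both sides
    return (sum_cells - expected) / (max_index - expected)


def mean_ari(labels_true, runs):
    """Mean ARI over several runs (hypothetical helper for the annotated values)."""
    return mean(adjusted_rand_index(labels_true, pred) for pred in runs)


# Toy data (assumed for illustration only): 4 cells, 3 clustering runs.
truth = [0, 0, 1, 1]
runs = [
    [1, 1, 0, 0],  # same partition with permuted labels
    [0, 0, 1, 2],  # second cluster split in two
    [0, 1, 0, 1],  # partition unrelated to the ground truth
]
print(mean_ari(truth, runs))
```

Because the ARI is invariant to label permutation and corrected for chance, the first run scores 1 while the third scores below 0, so the mean reflects both the quality and the stability of the clustering across runs.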