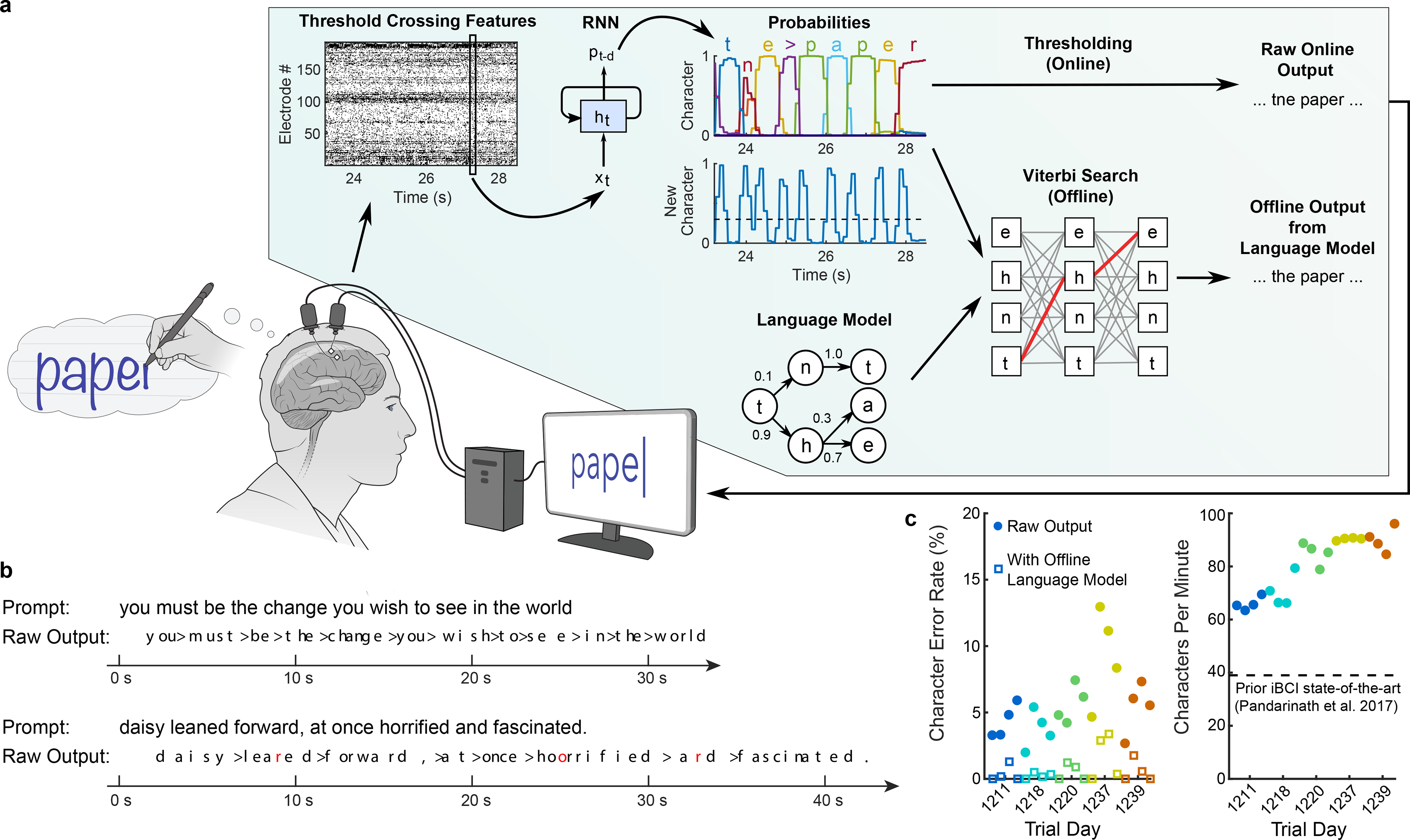

Figure 2. Neural decoding of attempted handwriting in real time.

a, Diagram of the decoding algorithm. First, the neural activity (multiunit threshold crossings) on each electrode was temporally binned (20 ms bins) and smoothed. Then, a recurrent neural network (RNN) converted this neural population time series ($x_t$) into a probability time series ($p_{t-d}$) describing the likelihood of each character and the probability of any new character beginning. The RNN had a one-second output delay ($d$), giving it time to observe each character fully before deciding its identity. Finally, the character probabilities were thresholded to produce “Raw Online Output” for real-time use: when the ‘new character’ probability crossed a threshold at time $t$, the most likely character at time $t + 0.3$ s was emitted and shown on the screen. In an offline retrospective analysis, the character probabilities were combined with a large-vocabulary language model (a custom 50,000-word bigram model) to decode the most likely text that the participant wrote. b, Two real-time example trials, demonstrating the RNN’s ability to decode readily understandable text on sentences it was never trained on. Errors are highlighted in red and spaces are denoted with “>”. c, Error rates (edit distances) and typing speeds for five days, with four blocks of 7–10 sentences each (each block is indicated with a single circle and colored according to the trial day). The speed is more than double that of the next fastest intracortical BCI (ref. 7).
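
The thresholding rule in panel a can be illustrated with a short sketch. This is not the authors' implementation: the function name `emit_characters`, the threshold value, and the choice to treat a "crossing" as an upward crossing between consecutive bins are assumptions made for illustration. Only the 20 ms bin width and the 0.3 s look-ahead come from the caption. Indices refer to bins of the RNN's output time series.

```python
BIN_S = 0.020        # 20 ms bins (from the caption)
LOOKAHEAD_S = 0.3    # read the character at t + 0.3 s (from the caption)
THRESHOLD = 0.3      # hypothetical value; the caption does not state it


def emit_characters(char_probs, new_char_prob, alphabet,
                    threshold=THRESHOLD, bin_s=BIN_S, lookahead_s=LOOKAHEAD_S):
    """Return (bin_index, character) pairs, one per upward threshold crossing.

    char_probs:    one list of per-character probabilities per time bin
    new_char_prob: one 'new character' probability per time bin
    alphabet:      characters in the same order as the columns of char_probs
    """
    lookahead = round(lookahead_s / bin_s)  # 0.3 s / 20 ms = 15 bins
    emitted = []
    for t in range(1, len(new_char_prob)):
        # Assumed definition of a crossing: below threshold in the previous
        # bin, at or above it in the current bin.
        crossed = new_char_prob[t - 1] < threshold <= new_char_prob[t]
        if not crossed:
            continue
        t_read = t + lookahead
        if t_read >= len(char_probs):
            break  # not enough future bins yet to read the character
        row = char_probs[t_read]
        best = max(range(len(row)), key=row.__getitem__)  # argmax
        emitted.append((t, alphabet[best]))
    return emitted
```

Reading the character 0.3 s after the crossing, rather than at the crossing itself, means the emitted label comes from a bin where the character probabilities have had more time to settle.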