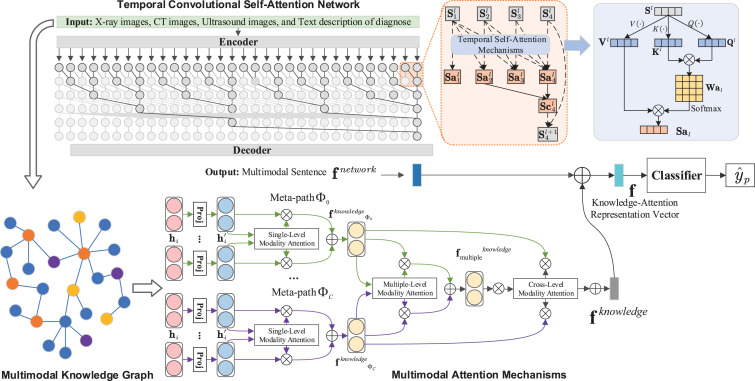

Fig. 4. The proposed multi-modal knowledge graph attention embedding model. Given a multimodal knowledge graph, we propose multimodal attention mechanisms with three parts: ➀ single-level modality attention, which produces the single-level attention results; ➁ multiple-level modality attention, which produces the multiple-level embedding; and ➂ a cross-level modality attention mechanism, which fuses the information from the single-level and multiple-level modality attentions into a fused embedding matrix. We also propose the Temporal Convolutional Self-Attention Network (TCSAN), which processes the input multimodal data to obtain the multimodal sentence vectors. From these we obtain the knowledge-based attention feature vector. Finally, a classifier (ResNet-34 [70] in this paper) predicts the labels.
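The caption does not specify how the cross-level mechanism (➂) fuses the two attention outputs. The following is a minimal sketch under stated assumptions, not the authors' implementation. It uses generic scaled dot-product cross-attention in which each single-level row attends over the multiple-level rows. The following choices are all hypothetical: a shared embedding width, multiple-level rows serving as both keys and values, and fusion by residual sum. The function name `cross_level_attention` is illustrative only.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_level_attention(single: Matrix, multi: Matrix) -> Matrix:
    """Hypothetical cross-level fusion: single-level rows attend over multi-level rows.

    Assumptions (not from the paper): both inputs share embedding width d,
    multi-level rows act as both keys and values, and fusion is a residual sum.
    """
    d = len(single[0])
    scale = 1.0 / math.sqrt(d)
    fused: Matrix = []
    for q in single:
        # Scaled dot-product score of this query against every multi-level key.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in multi]
        weights = softmax(scores)
        # Attention-weighted sum of the multi-level rows (the "values").
        context = [sum(w * v[j] for w, v in zip(weights, multi)) for j in range(d)]
        # Residual fusion keeps single-level information alongside the context.
        fused.append([qi + ci for qi, ci in zip(q, context)])
    return fused


if __name__ == "__main__":
    single = [[1.0, 0.0], [0.0, 1.0]]
    multi = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    for row in cross_level_attention(single, multi):
        print(row)
```

With a single multiple-level row, the softmax weight is exactly 1, so each fused row is simply the query plus that row. This makes a convenient sanity check. A learned model would normally add query, key, and value projections before the dot product. They are omitted here to keep the sketch dependency-free.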