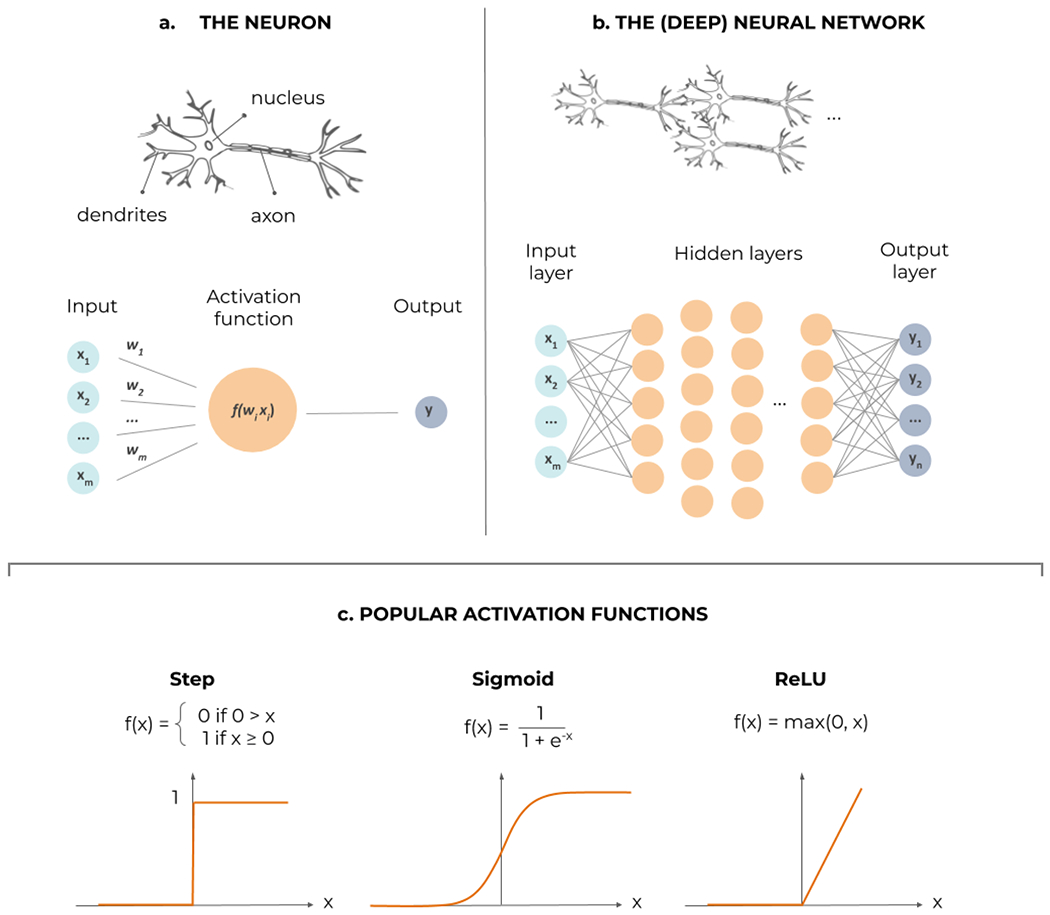

Figure 5.

Artificial neural networks in a nutshell. (a) The formal neuron, which processes several dendritic inputs through a nonlinear activation function f to produce its axonal output. (b) Neurons can be interconnected in a feed-forward way, into successive layers; as soon as a nonlinear ‘hidden’ layer is inserted between the inputs and outputs, the network can in principle approximate any continuous function, provided it has enough hidden neurons; a suitable activation function is chosen for the output layer to achieve either regression or classification. (c) Examples of nonlinear activation functions in the hidden layers: the step function, of biological inspiration; the sigmoid, its continuous and differentiable surrogate; and the rectified linear unit (ReLU), which eases the training of deep networks.
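The three panels can be illustrated with a minimal Python sketch. It is not taken from the figure itself: the function names, the weighted-sum form of the neuron, and the hand-picked XOR weights are all assumptions made for illustration. It shows a formal neuron, the three activation functions, and a feed-forward pass through one hidden layer.

```python
import math

# (c) Activation functions named in the caption.
def step(z):
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def identity(z):
    # Linear output, as typically used for regression.
    return z

# (a) Formal neuron: weighted sum of the inputs plus a bias, passed through f.
# The weighted-sum form is the usual convention and is assumed here.
def neuron(inputs, weights, bias, f):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return f(z)

# (b) A layer is a list of neurons sharing the same inputs.
def layer(inputs, weight_rows, biases, f):
    return [neuron(inputs, w, b, f) for w, b in zip(weight_rows, biases)]

def forward(x, hidden_w, hidden_b, out_w, out_b, hidden_f=relu, out_f=identity):
    hidden = layer(x, hidden_w, hidden_b, hidden_f)
    return layer(hidden, out_w, out_b, out_f)

# Hypothetical hand-picked weights (not trained) that make a network with
# two ReLU hidden neurons compute XOR, which no purely linear model can do.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUT_W = [[1.0, -2.0]]
OUT_B = [0.0]

if __name__ == "__main__":
    for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
        print(x, forward(x, HIDDEN_W, HIDDEN_B, OUT_W, OUT_B))
```

Replacing `out_f=identity` with `sigmoid` would turn the same network into a binary classifier whose output can be read as a probability, which is one way to interpret the caption's remark about choosing the output activation for regression or classification.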