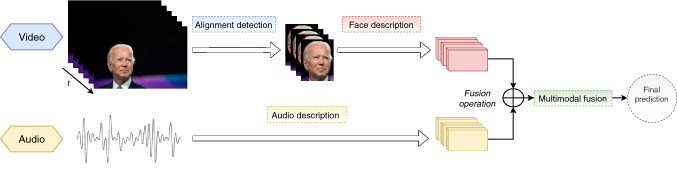

Fig. 2.

A schematic illustration of the method used. For the visual modality (video), facial regions of interest are extracted and passed through a visual mapping representation scheme; the resulting representations are then temporally fused into a common space. Audio descriptions are also generated. The two modalities are then combined by a multimodal fusion operation to predict the target class label (emotion) of the test sample.
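
The data flow in the schematic can be illustrated with a minimal sketch. The caption does not specify the fusion operators or the classifier, so everything concrete here is an assumption made for illustration: temporal fusion is taken to be mean pooling over per-frame feature vectors, multimodal fusion is taken to be concatenation, and a nearest-centroid rule stands in for the classifier. The function names (`temporal_fuse`, `multimodal_fuse`, `predict_emotion`) and the toy feature values are hypothetical.

```python
from statistics import fmean


def temporal_fuse(frame_features):
    """Pool per-frame visual feature vectors into one clip-level vector.

    Assumption: mean pooling over time; the caption does not name the operator.
    """
    return [fmean(dim) for dim in zip(*frame_features)]


def multimodal_fuse(visual_vec, audio_vec):
    """Combine the two modalities.

    Assumption: feature-level fusion by simple concatenation.
    """
    return list(visual_vec) + list(audio_vec)


def predict_emotion(fused_vec, class_centroids):
    """Return the label whose centroid is closest to the fused vector.

    Assumption: a nearest-centroid rule stands in for the actual classifier.
    """
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(class_centroids, key=lambda label: sq_dist(fused_vec, class_centroids[label]))


# Toy inputs (made up): two video frames with 2-D visual features,
# one 1-D audio descriptor, and two emotion centroids in the fused 3-D space.
frames = [[0.0, 1.0], [2.0, 3.0]]
audio = [0.5]
centroids = {"happy": [1.0, 2.0, 0.5], "sad": [5.0, 5.0, 5.0]}

fused = multimodal_fuse(temporal_fuse(frames), audio)
label = predict_emotion(fused, centroids)
print(fused, label)
```

Concatenation keeps the sketch short; a decision-level scheme, in which each modality is classified separately and the scores are merged, would fit the same schematic equally well.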