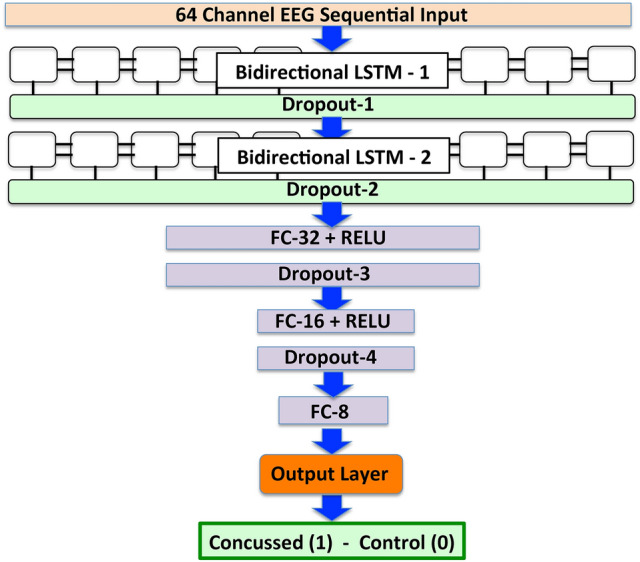

Figure 1.

The architecture of our first-generation ConcNet, a recurrent neural network comprising two LSTM layers. The 64-channel EEG input is fed through a sequential input layer to the first recurrent layer, which has 100 bi-directional LSTM units. Its output is passed to a dropout layer, which regularizes the network to reduce overfitting, and then to the second LSTM layer, also with 100 bi-directional LSTM units. The output of the second LSTM layer passes through another dropout layer, followed by two fully connected layers and two dropout layers organized as shown. The two fully connected layers have 32 and 16 nodes, respectively, and use Rectified Linear Unit (ReLU) activation functions. The results are then fed to a third fully connected layer with 8 nodes. This layer computes the weighted sum of its inputs and passes the output to the final output layer, which uses a logistic function to assign the input raw90-rsEEG data a score (). The input is classified as concussed or control on the basis of this score. (Flow chart created with PowerPoint v14.5.6).
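The layer stack described in the caption can be tabulated as a minimal sketch, which may help when checking output widths and trainable-parameter counts. Several details are assumptions rather than statements from the text: that "100 bi-directional LSTM units" means 100 units per direction (so each recurrent layer outputs 200 values), that the LSTMs are standard (no peephole connections), that the second LSTM layer passes only its final state onward, and that the fully connected and dropout layers alternate. The names `Layer`, `bilstm_params`, `dense_params` and `concnet_layers` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str        # descriptive label, hypothetical
    out_width: int   # number of values the layer emits per time step or sample
    params: int      # trainable parameters


def bilstm_params(in_width: int, units: int) -> int:
    """Parameters of a standard bidirectional LSTM (assumed: no peepholes)."""
    # Each direction has 4 gates; each gate has input weights,
    # recurrent weights and a bias vector.
    one_direction = 4 * (units * (in_width + units) + units)
    return 2 * one_direction


def dense_params(in_width: int, nodes: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_width * nodes + nodes


def concnet_layers(n_channels: int = 64, lstm_units: int = 100) -> list:
    """Tabulate the stack from the caption under the stated assumptions."""
    layers = []
    width = n_channels
    # Two bidirectional LSTM layers, each followed by dropout.
    for i in (1, 2):
        layers.append(Layer(f"bilstm_{i}", 2 * lstm_units,
                            bilstm_params(width, lstm_units)))
        width = 2 * lstm_units  # assumed: forward and backward outputs concatenated
        layers.append(Layer(f"dropout_{i}", width, 0))
    # Two ReLU fully connected layers (32 and 16 nodes), each followed
    # by dropout (assumed ordering; the figure defines the actual one).
    for i, nodes in enumerate((32, 16), start=3):
        layers.append(Layer(f"dense_relu_{nodes}", nodes,
                            dense_params(width, nodes)))
        width = nodes
        layers.append(Layer(f"dropout_{i}", width, 0))
    # Third fully connected layer: plain weighted sum, 8 nodes.
    layers.append(Layer("dense_linear_8", 8, dense_params(width, 8)))
    width = 8
    # Output layer: single logistic unit producing the score.
    layers.append(Layer("output_logistic", 1, dense_params(width, 1)))
    return layers


if __name__ == "__main__":
    for layer in concnet_layers():
        print(f"{layer.name:18s} out={layer.out_width:4d} params={layer.params}")
```

Under these assumptions the two recurrent layers hold almost all of the trainable parameters; if the 100 units are instead split across the two directions, calling `concnet_layers(lstm_units=50)` gives the corresponding counts.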