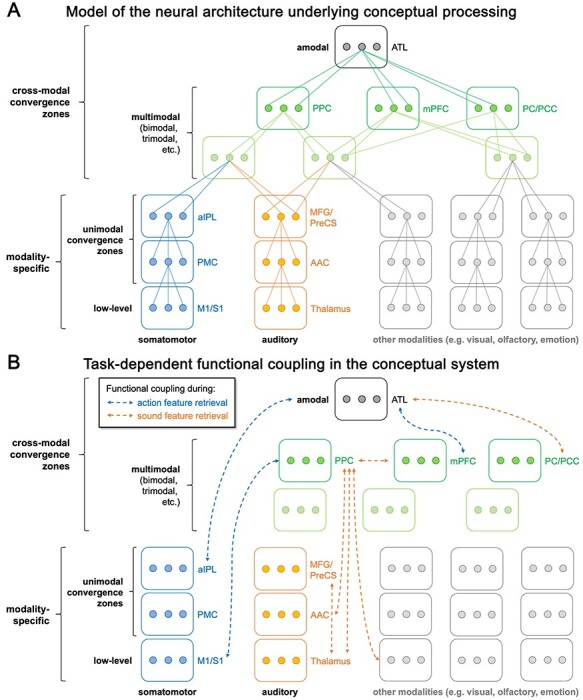

Figure 8.

(A) A novel model of the neural architecture underlying conceptual processing. Low-level modality-specific representations converge onto more abstract modality-specific representations in unimodal convergence zones. Multimodal convergence zones integrate information across modalities while retaining modality-specific information. Finally, amodal areas abstract away completely from modality-specific content. Boxes represent brain regions; connected dots represent individual representational units that converge onto a more abstract representation at a higher level. (B) Task-dependent functional coupling during action and sound feature retrieval. Functional coupling in the conceptual system is extensive and flexible. Modality-specific regions come into play selectively, when the knowledge they represent is task-relevant. The multimodal posterior parietal cortex (PPC) dynamically adapts its connectivity profile to the task-relevant modality-specific nodes. The amodal anterior temporal lobe (ATL) interacts mainly with other high-level cross-modal convergence zones in a task-dependent fashion.