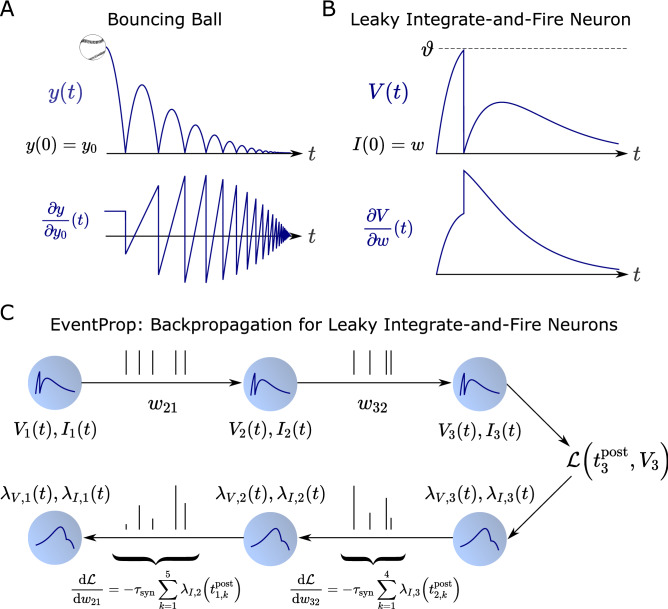

Figure 1.

We derive the precise analogue to backpropagation for spiking neural networks by applying the adjoint method together with the jump conditions for partial derivatives at state discontinuities, yielding exact gradients with respect to loss functions based on membrane potentials or spike times. (A, B) Dynamical systems with parameter-dependent discontinuous state transitions typically have discontinuous partial derivatives of state variables with respect to system parameters [4], as is the case for the two examples shown here. Both examples use an instantaneous state transition to model dynamics that occur on short timescales, namely inelastic reflection and the neuronal spike mechanism. We denote quantities evaluated before and after a given transition by − and +. In A, a bouncing ball is released from an initial height and falls under gravitational acceleration g. It is inelastically reflected as soon as it reaches the ground, causing the partial derivative of its height with respect to the initial height to jump (see first methods subsection). In B, a leaky integrate-and-fire neuron described by the system given in Table 1, with given initial conditions, resets its membrane potential when it reaches the threshold, causing the partial derivative of the membrane potential to jump (see methods for the full derivation). (C) Applying the adjoint method with partial derivative jumps to a network of leaky integrate-and-fire neurons (Table 1) yields the adjoint system (Table 2) that backpropagates errors in time. EventProp (Algorithm 1) returns the gradient of a loss function with respect to synaptic weights by computing this adjoint system. The forward pass computes the state variables V(t), I(t) and stores the spike times and each firing neuron's synaptic current.
EventProp then performs the backward pass by computing the adjoint system backwards in time using event-based error backpropagation and gradient accumulation: each time a spike was transferred across a given synaptic weight in the forward pass, EventProp backpropagates the error signal represented by the adjoint variables of the post-synaptic (target) neuron's membrane potential and synaptic current, and updates the corresponding component of the gradient by accumulating the adjoint variable of the synaptic current, finally yielding the sums given in the figure.
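
The derivative jump described for panel A can be checked numerically. The following is a minimal sketch, not the authors' code: it assumes the ball is released from rest, uses a hypothetical restitution coefficient `RESTITUTION = 0.8` and `G = 9.81`, and estimates the partial derivative of the height with respect to the initial height by central finite differences. The names `height` and `dheight_dy0` are illustrative only.

```python
import math

G = 9.81            # gravitational acceleration (assumed value)
RESTITUTION = 0.8   # hypothetical restitution coefficient, an assumption of this sketch


def height(t, y0, g=G, e=RESTITUTION):
    """Height of a ball released from rest at y0; valid up to the second bounce."""
    t_hit = math.sqrt(2.0 * y0 / g)      # time of first impact, where the height reaches 0
    if t < t_hit:
        return y0 - 0.5 * g * t * t      # free fall before the bounce
    v_after = e * g * t_hit              # upward speed right after the inelastic reflection
    dt = t - t_hit
    return v_after * dt - 0.5 * g * dt * dt


def dheight_dy0(t, y0, h=1e-6):
    """Central finite-difference estimate of the partial derivative dy/dy0 at fixed t."""
    return (height(t, y0 + h) - height(t, y0 - h)) / (2.0 * h)


if __name__ == "__main__":
    y0 = 1.0
    t_hit = math.sqrt(2.0 * y0 / G)
    eps = 1e-4  # small offset so both perturbed trajectories lie on the same side of the bounce
    print("just before bounce:", dheight_dy0(t_hit - eps, y0))
    print("just after bounce: ", dheight_dy0(t_hit + eps, y0))
```

Under these assumptions, the estimate just before the bounce should come out close to 1 and the estimate just after close to `-RESTITUTION`: the partial derivative is discontinuous even though the height itself is continuous across the reflection. The offset `eps` must stay much larger than the shift in impact time caused by the perturbation `h`, otherwise the finite difference straddles the discontinuity.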