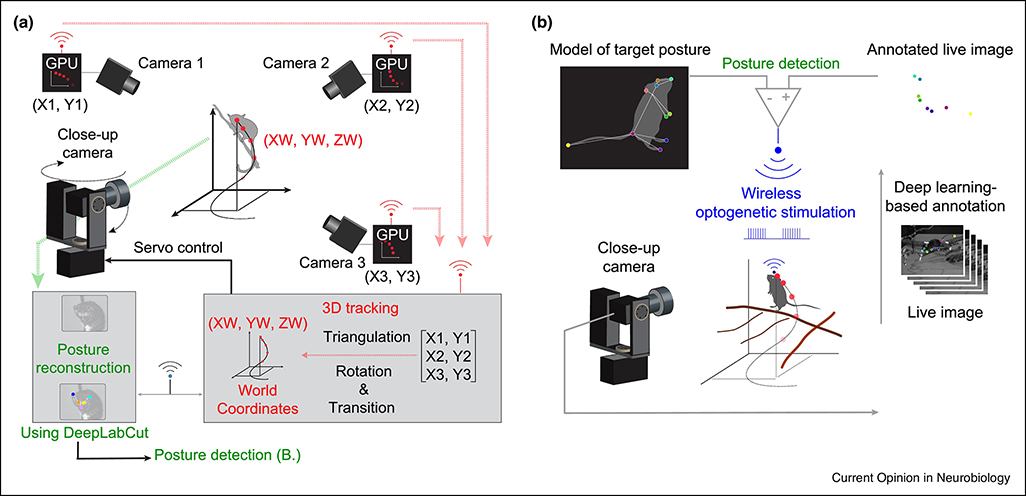

Figure 2. Pose tracking in naturalistic 3D environments and real-time reinforcement of automatically detected behaviors.

(A) Schematic of the EthoLoop components and their spatial arrangement. Multiple infrared cameras (cameras 1–3) with dedicated GPUs process images from different viewing angles. The identities and positions of the detected markers are transmitted wirelessly to a central host computer for 3D reconstruction (triangulation, followed by rotation and translation into real-world 3D coordinates). The 3D coordinates are then forwarded to control the position and focus of a mounted close-up camera. (B) Images from the close-up system are either saved for offline analysis or processed on the fly to trigger optogenetic activation of the animal’s reward system. The close-up camera provides high-resolution live images of the tracked animal, and the body parts are classified in real time using a pre-trained deep-learning network (DeepLabCut). If the annotated live image matches a geometric model of the posture, a behavioral event is detected. This detection wirelessly triggers optogenetic stimulation through a portable, battery-powered stimulator. Content adapted from [64], with permission.
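
The posture-matching step in (B) can be illustrated with a minimal sketch. It assumes each body part arrives as an (x, y, likelihood) triple, as pose-estimation networks commonly report, and it tests a single joint angle against an allowed range. The keypoint names (`nose`, `neck`, `tail_base`), the likelihood threshold, the angle range, and the function names are all hypothetical choices for illustration; they are not the geometric model used in [64].

```python
import math
from typing import Dict, Tuple

# Hypothetical keypoint format: (x, y, likelihood) in image coordinates.
Keypoint = Tuple[float, float, float]


def joint_angle(a: Keypoint, b: Keypoint, c: Keypoint) -> float:
    """Return the angle in degrees at vertex b formed by the points a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(v1[0], v1[1])
    n2 = math.hypot(v2[0], v2[1])
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("coincident keypoints, angle undefined")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def posture_matches(
    keypoints: Dict[str, Keypoint],
    min_likelihood: float = 0.9,                      # assumed threshold
    angle_range: Tuple[float, float] = (150.0, 180.0),  # assumed range
) -> bool:
    """Return True if the nose-neck-tail_base angle lies in angle_range.

    Frames with a missing or low-confidence keypoint never match, so an
    uncertain detection cannot trigger a stimulation event.
    """
    needed = ("nose", "neck", "tail_base")
    for name in needed:
        if name not in keypoints or keypoints[name][2] < min_likelihood:
            return False
    try:
        angle = joint_angle(*(keypoints[name] for name in needed))
    except ValueError:
        return False
    return angle_range[0] <= angle <= angle_range[1]


# Example frame: an elongated body with all keypoints on one line.
frame = {
    "nose": (0.0, 0.0, 0.99),
    "neck": (10.0, 0.0, 0.98),
    "tail_base": (30.0, 0.0, 0.97),
}
if posture_matches(frame):
    print("behavioral event detected")
```

In a closed-loop setting, a check like this would run once per frame, and a positive result would be the point at which a trigger is sent to the stimulator. Rejecting low-likelihood frames trades some missed events for fewer false triggers.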