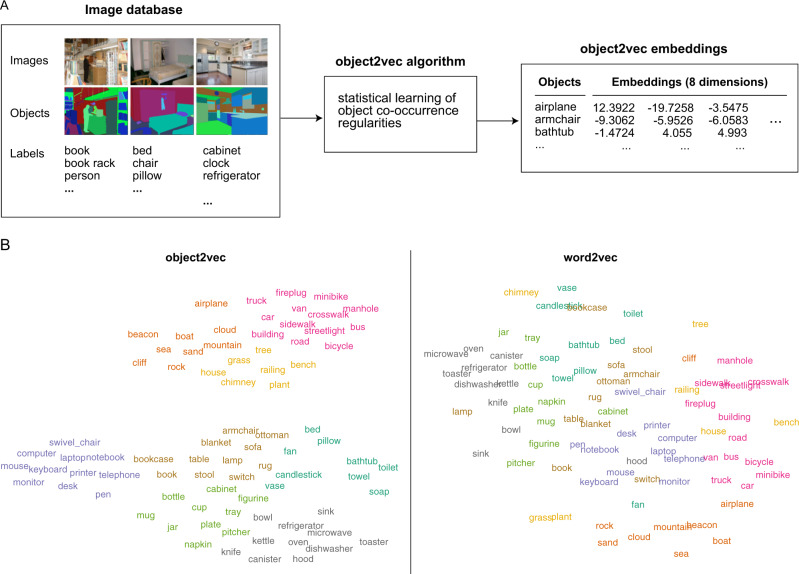

Fig. 1. Statistical model of visual object context.

A Object context was modeled using the ADE20K data set²⁷, which contains 22,210 images in which every pixel is associated with an object label provided by an expert human annotator. An adaptation of the word2vec machine-learning algorithm for distributional semantics (which we call object2vec) was applied to this corpus of image annotations to model the statistical regularities of object-label co-occurrence in a large sample of real-world scenes. Using this approach, we generated 8-dimensional object2vec representations for the object labels in the ADE20K data set. These representations capture the co-occurrence statistics of objects in the visual environment, so that objects that occur in similar scene contexts have similar object2vec representations. B The panel on the left shows a two-dimensional visualization of the object2vec representations for the 81 object categories in the fMRI experiment. This visualization shows that the object2vec representations contain information about the scene contexts in which objects are typically encountered (e.g., indoor vs. outdoor, urban vs. natural, kitchen vs. office). The panel on the right shows a similar plot for the language-based word2vec representations of the same object categories. These plots were created using t-distributed stochastic neighbor embedding (t-SNE). The colors reflect cluster assignments from k-means clustering and are included for illustration purposes only. K-means clustering was performed on the t-SNE embeddings for object2vec, and the same cluster assignments were applied to the word2vec embeddings in the right panel for comparison. Source data are provided as a Source Data file.
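The core idea in panel A, that objects sharing scene contexts end up with similar vectors, can be illustrated with a small sketch. Note that this is not object2vec: instead of training word2vec embeddings, it swaps in a different, count-based distributional-semantics technique, positive pointwise mutual information (PPMI) vectors, and it treats every image as a single context window. The toy scene lists and the function names (`cooccurrence_counts`, `ppmi_vectors`, `cosine`) are hypothetical, and no reduction to 8 dimensions is performed.

```python
import math
from collections import Counter
from itertools import combinations

# Hypothetical toy annotations: each inner list is the set of object labels
# present in one image (loosely mimicking ADE20K-style scene annotations).
toy_scenes = [
    ["stove", "sink", "refrigerator", "cabinet"],
    ["stove", "sink", "cabinet", "window"],
    ["refrigerator", "sink", "cabinet"],
    ["desk", "computer", "chair", "window"],
    ["desk", "computer", "lamp", "chair"],
    ["computer", "chair", "lamp"],
]


def cooccurrence_counts(scenes):
    """Count label pairs that appear in the same image.

    The whole image is treated as the context window (an assumption of this
    sketch), and duplicate labels within an image are counted once.
    """
    pair_counts = Counter()
    label_counts = Counter()  # row sums of the symmetric co-occurrence matrix
    for scene in scenes:
        labels = sorted(set(scene))
        for a, b in combinations(labels, 2):
            pair_counts[(a, b)] += 1
            pair_counts[(b, a)] += 1
        for a in labels:
            label_counts[a] += len(labels) - 1
    return pair_counts, label_counts


def ppmi_vectors(scenes):
    """Return (vocab, {label: PPMI vector indexed by vocab})."""
    pair_counts, label_counts = cooccurrence_counts(scenes)
    vocab = sorted(label_counts)
    total = sum(pair_counts.values())
    vectors = {}
    for a in vocab:
        row = []
        for b in vocab:
            n_ab = pair_counts.get((a, b), 0)
            if n_ab == 0:
                row.append(0.0)  # never co-occur: PPMI is 0 by definition
                continue
            # PMI = log(P(a,b) / (P(a) P(b))), clipped at 0 to give PPMI
            pmi = math.log(n_ab * total / (label_counts[a] * label_counts[b]))
            row.append(max(pmi, 0.0))
        vectors[a] = row
    return vocab, vectors


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    vocab, vectors = ppmi_vectors(toy_scenes)
    for other in ("refrigerator", "desk"):
        sim = cosine(vectors["stove"], vectors[other])
        print(f"stove vs {other}: {sim:.2f}")
```

On these toy scenes, "stove" ends up closer to "refrigerator" than to "desk" because the two kitchen objects share sink and cabinet contexts, which mirrors the property described for the object2vec representations. A trained embedding such as word2vec would additionally compress this information into a low-dimensional space.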