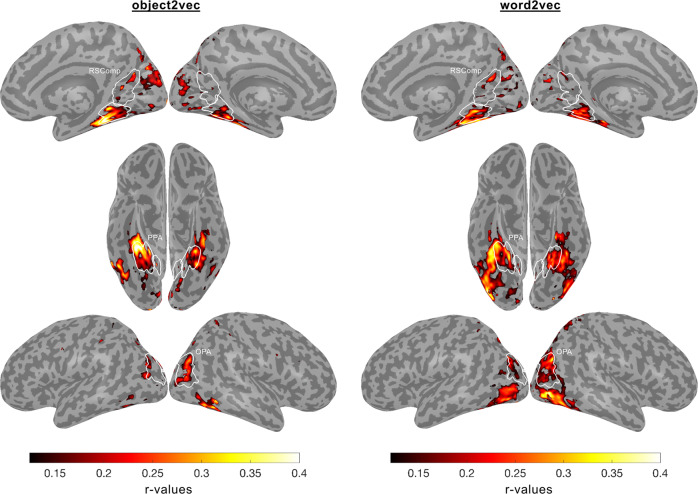

Fig. 6. Encoding models of visual and linguistic context predict fMRI responses in distinct but overlapping regions of high-level visual cortex.

Voxel-wise encoding models were estimated and assessed using the 9-fold cross-validation procedure described in Fig. 3. These analyses were performed for all voxels with split-half reliability scores greater than or equal to r = 0.1841, which corresponds to a one-sided, uncorrected p-value of 0.05 (see split-half reliability mask in Supplementary Fig. 8). Encoding model accuracy scores are plotted for voxels that show significant effects (p < 0.05, FDR-corrected, one-sided permutation test). The left panel shows prediction accuracy for the image-based object2vec encoding model, and the right panel shows prediction accuracy for the language-based word2vec encoding model. There is a cluster of high prediction accuracy for the object2vec model overlapping with the anterior portion of the PPA, mostly in the right hemisphere. There are also several other smaller clusters of significant prediction accuracy throughout high-level visual cortex, including clusters in the OPA and RSComp. The word2vec model produced a cluster of high prediction accuracy that partially overlapped with the significant voxels for the object2vec model in the right PPA but extended into a more lateral portion of ventral temporal cortex as well as the lateral occipital cortex. ROI parcels are shown for scene-selective ROIs. PPA parahippocampal place area, OPA occipital place area, RSComp retrosplenial complex.
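The voxel selection and significance steps in this caption can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes the permutation p-value is the proportion of null accuracy scores at or above the observed score (with a +1 correction) and that FDR correction uses the Benjamini-Hochberg step-up procedure; the caption specifies neither detail. All function names and toy values are hypothetical.

```python
# Sketch of the thresholding pipeline described in the caption.
# Assumptions (not stated in the source): Benjamini-Hochberg FDR and a
# +1-corrected permutation p-value. Function names are hypothetical.

RELIABILITY_THRESHOLD = 0.1841  # split-half reliability cutoff from the caption


def reliability_mask(reliability, threshold=RELIABILITY_THRESHOLD):
    """Return indices of voxels whose split-half reliability is >= threshold."""
    return [i for i, r in enumerate(reliability) if r >= threshold]


def permutation_p_value(observed, null_scores):
    """One-sided p-value: share of null scores >= observed, with +1 correction."""
    exceed = sum(1 for s in null_scores if s >= observed)
    return (exceed + 1) / (len(null_scores) + 1)


def benjamini_hochberg(p_values, alpha=0.05):
    """Return booleans marking which p-values survive BH FDR control at alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank k with p_(k) <= (k / m) * alpha.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            cutoff_rank = rank
    significant = [False] * m
    for idx in order[:cutoff_rank]:
        significant[idx] = True
    return significant


# Toy example with made-up numbers: four voxels, three pass the reliability mask.
reliability = [0.05, 0.1841, 0.30, 0.50]
kept = reliability_mask(reliability)

# Hypothetical per-voxel p-values for the kept voxels.
p_values = [0.01, 0.02, 0.5]
plotted = [v for v, sig in zip(kept, benjamini_hochberg(p_values)) if sig]
```

Only the voxels in `plotted` would have their encoding model accuracy scores displayed on a map like the one in this figure; the rest are either masked out for low reliability or fail the corrected significance test.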