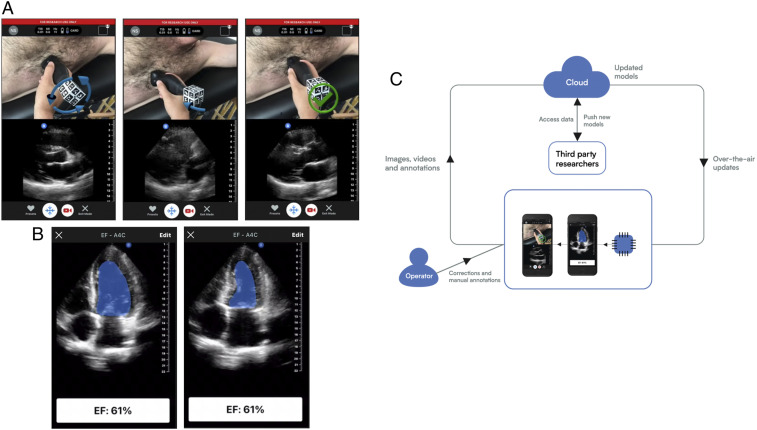

Fig. 5.

Forward-looking developments. (A) An assistance deep-learning model guides the user through a split screen. The top shows the camera image overlaid with augmented reality arrows, and the bottom shows the B-mode ultrasound image. Both frames update in real time. The screens show a cardiac scan in which the arrows over the probe indicate one of three things. In the left panel, a counterclockwise rotation is needed. In the middle panel, the end of the probe should be tilted toward the subject's feet. In the right panel, no correction is needed. (B) An interpretation deep-learning model runs on a captured movie of the beating heart and automates segmentation of the left ventricle (LV). This segmentation is used to calculate the ejection fraction (EF), defined as one minus the ratio of the LV blood volume at (Left) end systole to the LV blood volume at (Right) end diastole. Each volume is calculated by disc integration of the cross-sectional area, a technique known as Simpson's method (53). (C) A virtuous cycle in which ultrasound data are collected from devices in the field along with annotations, as one might do in a research clinical study. These data are transmitted to a central location for analysis and model training. The annotations may be created de novo or may be corrections to suggestions from a previous algorithm. The same data link used to retrieve data may also be used to deploy updated or new models. The cycle is further strengthened by acquisition assistance, which guides a user to the correct acquisition, and by assistance with the initial interpretation. As data accumulate in the cloud, models improve by training on the correction data.
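The EF calculation in panel B can be made concrete with a short sketch. It assumes the single-plane form of Simpson's method of discs. In that form, the segmented LV is sliced into N equal-height discs along its long axis of length L, and each disc is treated as a circle of diameter d_i, so V = (π/4) Σ d_i² (L/N). The function names and the uniform-diameter inputs below are hypothetical and for illustration only. They are not the model or pipeline described in the figure. The commonly used biplane variant replaces d_i² with the product of two orthogonal diameters.

```python
import math
from typing import Sequence


def volume_method_of_discs(diameters: Sequence[float], long_axis_length: float) -> float:
    """Approximate LV volume with the single-plane method of discs (assumed form).

    The ventricle is split into len(diameters) equal-height discs along the
    long axis. Each disc is assumed to have a circular cross section with the
    given diameter, so V = sum(pi/4 * d_i**2) * (L / N).
    Lengths in cm yield a volume in cm^3 (= mL).
    """
    if not diameters:
        raise ValueError("need at least one disc diameter")
    if long_axis_length <= 0:
        raise ValueError("long-axis length must be positive")
    disc_height = long_axis_length / len(diameters)
    # Sum the cross-sectional areas, then multiply by the common disc height.
    return sum(math.pi / 4.0 * d * d for d in diameters) * disc_height


def ejection_fraction(esv: float, edv: float) -> float:
    """EF = 1 - ESV / EDV, equivalently (EDV - ESV) / EDV."""
    if edv <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 1.0 - esv / edv


if __name__ == "__main__":
    # Hypothetical, idealized cylinder-shaped ventricle with 20 discs and an 8 cm long axis.
    edv = volume_method_of_discs([4.0] * 20, 8.0)  # end-diastolic volume
    esv = volume_method_of_discs([2.0] * 20, 8.0)  # end-systolic volume
    print(f"EDV={edv:.1f} mL, ESV={esv:.1f} mL, EF={ejection_fraction(esv, edv):.2f}")
```

In this idealized example, halving every disc diameter reduces each cross-sectional area to one quarter. End-systolic volume is therefore a quarter of end-diastolic volume, and EF = 1 − 1/4 = 0.75. Real segmentations yield a different diameter for each disc.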