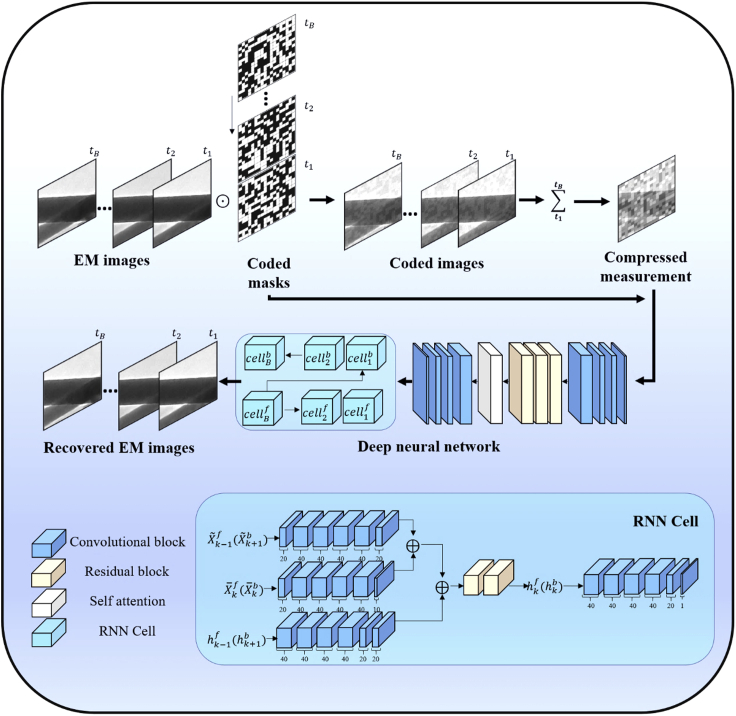

Figure 1.

Workflow of the TCS-DL for big data EM

The TCS-DL consists of two parts: an encoder that compresses B frames of EM images into a single compressed measurement using B random binary masks (upper part), and a decoder that uses a deep neural network to reconstruct the EM images (middle part). The masks used in the encoder and the compressed measurement are first fed into a CNN to generate the first video frame. A forward RNN then generates the subsequent frames. Finally, a backward RNN refines the frames in reverse order to output the final EM images.
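
The encoder step can be illustrated with a minimal NumPy sketch. The caption does not spell out the measurement model, so the sketch assumes the formulation commonly used in snapshot compressive imaging: each frame is multiplied element-wise by its own binary mask and the B masked frames are summed into one measurement. The function name `encode`, the frame size, and the independent 0/1 masks with probability 0.5 are illustrative assumptions, not details taken from the figure.

```python
import numpy as np


def encode(frames, masks):
    """Collapse B frames into one measurement.

    Assumed model (not stated in the caption): multiply each frame
    element-wise by its own mask, then sum over the frame axis.
    """
    frames = np.asarray(frames, dtype=np.float64)
    masks = np.asarray(masks, dtype=np.float64)
    if frames.shape != masks.shape:
        raise ValueError("frames and masks must share shape (B, H, W)")
    return (frames * masks).sum(axis=0)


# Hypothetical sizes: B = 8 frames of 64 x 64 pixels with values in [0, 1).
rng = np.random.default_rng(0)
B, H, W = 8, 64, 64
frames = rng.random((B, H, W))

# One random binary mask per frame (entries 0 or 1, assumed equally likely).
masks = rng.integers(0, 2, size=(B, H, W))

# A single H x W array that stands in for all B frames.
measurement = encode(frames, masks)
```

Under this assumption the decoder receives only `measurement` and `masks`, which is why the caption describes both as inputs to the CNN that produces the first frame.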