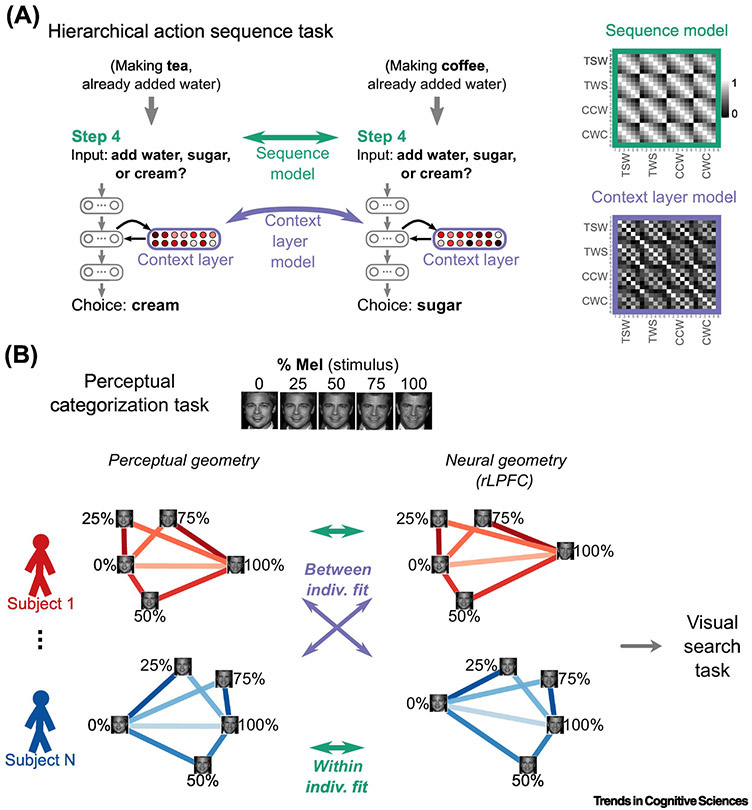

Figure 2.

Diagrams of two reviewed RSA methods. A, Mapping internal representations of an artificial neural network (ANN) to brain activity with RSA [82]. An ANN was trained to perform a hierarchical action sequence task, in which the action at any given point in the sequence depended on previously chosen actions (e.g., each ingredient could be added only once; cream could be added only to coffee). After training, the ANN simulated every step of every sequence (depicted: the fourth step of two different sequences), and the resulting activation patterns (reddish nodes) within the context layer were extracted; the similarity structure of these patterns served as the Context Layer Model (right). A competing model (Sequence Model), which contained only position-in-sequence information (i.e., no information about previous choices), was built by taking the distance (absolute difference) between the positions of each pair of steps (green arrow). B, RSA “fingerprinting” [114]. Individuals first performed a famous-face classification task, in which each exemplar face, linearly morphed between two famous faces (e.g., Brad Pitt and Mel Gibson), had to be classified as one or the other (Brad or Mel). Each individual's categorizations were expressed in similarity-matrix form (depicted here as 2-dimensional perceptual geometries) and then used as models to explain (green and purple arrows) the neural similarity matrices (neural geometries) of every subject. Idiosyncratic brain–behavior relationships were identified in brain regions (i.e., rLPFC) where the within-subject models (green arrows) fit better on average than the between-subject models (purple arrows). The neural geometries were then used to predict the patterns of interference in a separate attentional search task that used the same stimulus set (grey arrow).
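To make the two analyses in the caption concrete, the following is a minimal Python/NumPy sketch on synthetic data. It is not the pipeline used in [82] or [114]: all activations and geometries are random stand-ins, and the helper names (`rankdata`, `upper`, `rsa_fit`, `euclidean_rdm`) are hypothetical. It assumes correlation distance for activation-pattern dissimilarity matrices, Euclidean distance for the 2-dimensional perceptual geometries, and Spearman correlation between matrix upper triangles as the model-fit measure. These are common RSA choices, but not necessarily the ones the cited studies used.

```python
import numpy as np


def rankdata(x):
    """Rank values 1..n, giving tied values their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x))
    ranks[order] = np.arange(1, len(x) + 1)
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    mean_rank = np.bincount(inverse, weights=ranks) / counts
    return mean_rank[inverse]


def upper(rdm):
    """Vectorize the off-diagonal upper triangle of a square dissimilarity matrix."""
    i, j = np.triu_indices(rdm.shape[0], k=1)
    return rdm[i, j]


def rsa_fit(model_rdm, neural_rdm):
    """Model fit as the Spearman correlation (Pearson on ranks) of the upper triangles."""
    return np.corrcoef(rankdata(upper(model_rdm)), rankdata(upper(neural_rdm)))[0, 1]


def euclidean_rdm(points):
    """Pairwise Euclidean distances between rows of `points`."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


rng = np.random.default_rng(0)

# --- Panel A (hypothetical: 2 sequences x 4 steps = 8 conditions) ---
# Sequence Model: absolute difference in position-in-sequence for every pair of steps.
positions = np.array([1, 2, 3, 4, 1, 2, 3, 4])
sequence_rdm = np.abs(positions[:, None] - positions[None, :]).astype(float)

# Context Layer Model: correlation distance between simulated context-layer patterns.
context_patterns = rng.normal(size=(8, 20))
context_rdm = 1.0 - np.corrcoef(context_patterns)

# Synthetic "brain" patterns, built here as a noisy copy of the context layer.
neural_patterns = context_patterns + 0.3 * rng.normal(size=context_patterns.shape)
neural_rdm = 1.0 - np.corrcoef(neural_patterns)

print(f"Context Layer Model fit: {rsa_fit(context_rdm, neural_rdm):.2f}")
print(f"Sequence Model fit:      {rsa_fit(sequence_rdm, neural_rdm):.2f}")

# --- Panel B: fingerprinting with idiosyncratic 2-D perceptual geometries ---
n_subjects, n_stimuli = 5, 8
perceptual = [rng.normal(size=(n_stimuli, 2)) for _ in range(n_subjects)]
behavior_rdms = [euclidean_rdm(p) for p in perceptual]
neural_rdms = [euclidean_rdm(p + 0.1 * rng.normal(size=p.shape)) for p in perceptual]

# fit[i, j]: subject i's behavioral model explaining subject j's neural geometry.
fit = np.array([[rsa_fit(behavior_rdms[i], neural_rdms[j])
                 for j in range(n_subjects)] for i in range(n_subjects)])
within = np.mean(np.diag(fit))                              # green arrows
between = np.mean(fit[~np.eye(n_subjects, dtype=bool)])     # purple arrows
print(f"within-subject fit: {within:.2f}, between-subject fit: {between:.2f}")
```

In this toy setup, a region carries idiosyncratic brain–behavior structure when `within` exceeds `between`. Spearman rather than Pearson correlation is used because it only assumes a monotonic relation between model and neural dissimilarities. The average-rank tie handling matters for the Sequence Model, whose dissimilarities take only the values 0 to 3.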