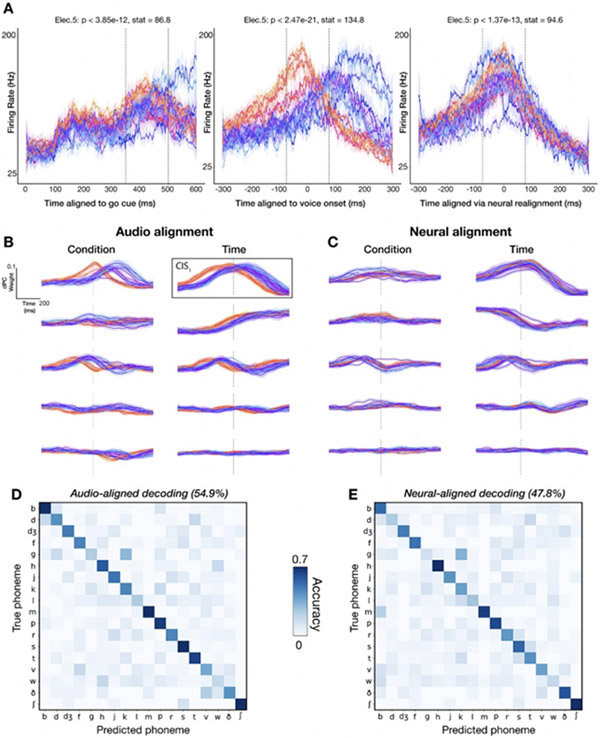

Figure 4: Audio-based phoneme onset alignments cause spurious neural variance across phonemes.

(A) Firing rate (20 ms bins) of an example electrode across 18 phoneme classes, plotted under three alignment strategies (left to right: the same utterances’ data aligned to the go cue, to voice onset, and to the “neural onset” we introduce). Each trace is one phoneme, and shading denotes standard errors. Plosives are shaded with warm colors to illustrate how voice onset alignment systematically biases the alignment of certain phonemes.

(B-C) dPCs for phoneme-dependent and phoneme-independent factorizations of neural ensemble firing rates in a 1500 ms window. For each marginalization, the top five dPC projections (sorted by variance explained) are shown under the audio and neural alignment approaches. (B) dPC projections aligned to voice onset (vertical dotted lines). Plosives (warm colors) have a temporal profile similar to that of the other phonemes (cool colors), apart from a temporal offset. This is a warning that voice onset alignment may artificially introduce differences between phonemes’ trial-averaged activity. To compensate, we realigned the data to a neural (rather than audio) anchor: for each phoneme, the peak time of the trial-averaged largest condition-invariant component (CIS1, outlined in black) was used to determine a “neural onset” for realignment. (C) dPC projections recomputed from these CIS1-realigned neural data. Vertical dotted lines show the estimated CIS1 peaks.

(D) Decoder confusion matrix for predicting the first phoneme of each word from a 500 ms window centered on voice onset. (E) Confusion matrix for classifying the same phoneme utterances, now using neurally realigned data.
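
The neural realignment described for panels B-C can be illustrated with a minimal sketch on synthetic data. It rests on several assumptions that are not from the source: the function names `cis1_peak_bins` and `realign_to_peaks` are hypothetical, the "neural onset" is taken to be the CIS1 peak bin itself (the caption says only that the peak was used to determine it), and realignment is applied to trial-averaged rates rather than to single trials.

```python
import numpy as np

def cis1_peak_bins(cis1):
    """Peak bin of the trial-averaged CIS1 projection for each phoneme.

    cis1: array of shape (n_phonemes, n_bins).
    """
    return np.argmax(cis1, axis=1)

def realign_to_peaks(rates, peak_bins, half_width):
    """Cut a window of 2*half_width + 1 bins centered on each phoneme's peak.

    rates: array of shape (n_phonemes, n_channels, n_bins).
    Bins that fall outside the recorded range are left as NaN.
    """
    n_phonemes, n_channels, n_bins = rates.shape
    out = np.full((n_phonemes, n_channels, 2 * half_width + 1), np.nan)
    for p, peak in enumerate(peak_bins):
        lo, hi = peak - half_width, peak + half_width + 1
        src_lo, src_hi = max(lo, 0), min(hi, n_bins)
        out[p, :, src_lo - lo:src_hi - lo] = rates[p, :, src_lo:src_hi]
    return out

# Synthetic example (assumed values): two phonemes whose CIS1-like bumps
# peak at different latencies relative to voice onset, in 20 ms bins.
times = np.arange(-740, 760, 20)               # 75 bins spanning 1500 ms
centers = np.array([times[30], times[45]])     # per-phoneme peak times (ms)
cis1 = np.exp(-((times[None, :] - centers[:, None]) / 100.0) ** 2)
rates = cis1[:, None, :]                       # one toy channel per phoneme

peaks = cis1_peak_bins(cis1)
aligned = realign_to_peaks(rates, peaks, half_width=10)
```

After realignment, both phonemes' bumps sit at the center bin of the output window, so the latency offset that voice onset alignment would leave between them no longer appears as a between-phoneme difference.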