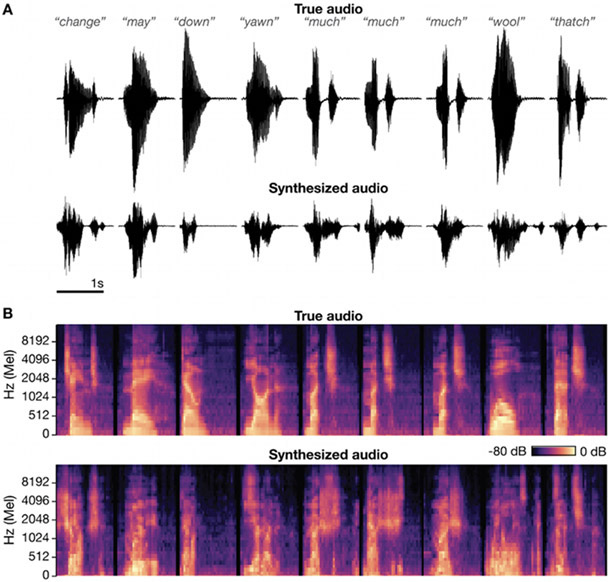

Fig. 6: Speech synthesis using ‘brain-to-speech’ unit selection.

(A) Audio waveforms for the actual words spoken by participant T5 (top) and the synthesized audio reconstructed from neural data (bottom). (B) Corresponding acoustic spectrograms. For these 9 good examples, the correlation coefficient between true and synthesized audio, averaged across all 40 Mel-frequency bins, was 0.696.
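One plausible way to compute a bin-averaged correlation of the kind reported in the caption is sketched below. This is an illustration under stated assumptions, not necessarily the authors' procedure. It assumes the true and synthesized audio have already been converted to time-aligned Mel spectrograms of identical shape (frames by bins), computes a Pearson correlation over time within each Mel bin, and then averages across bins. The function name `mean_mel_correlation` and the array layout are hypothetical.

```python
import numpy as np


def mean_mel_correlation(true_mel, synth_mel):
    """Average per-bin Pearson correlation between two Mel spectrograms.

    Both inputs are assumed (hypothetically) to have shape
    (n_frames, n_mel_bins), e.g. n_mel_bins = 40, and to be time-aligned.
    """
    true_mel = np.asarray(true_mel, dtype=float)
    synth_mel = np.asarray(synth_mel, dtype=float)
    if true_mel.shape != synth_mel.shape:
        raise ValueError("spectrograms must have the same shape")

    # Remove each bin's mean over time (axis 0 indexes frames).
    t = true_mel - true_mel.mean(axis=0)
    s = synth_mel - synth_mel.mean(axis=0)

    # Pearson correlation per Mel bin: covariance / product of norms.
    num = (t * s).sum(axis=0)
    den = np.sqrt((t ** 2).sum(axis=0) * (s ** 2).sum(axis=0))
    if np.any(den == 0):
        raise ValueError("a Mel bin is constant over time; correlation undefined")

    per_bin_r = num / den
    # Average the per-bin coefficients across all Mel bins.
    return float(per_bin_r.mean())
```

Calling `mean_mel_correlation(true_mel, synth_mel)` on two arrays of shape `(n_frames, 40)` returns a single scalar. Correlating within each bin before averaging keeps high-energy bins from dominating the score, although other conventions (for example, correlating the flattened spectrograms) would give different values.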