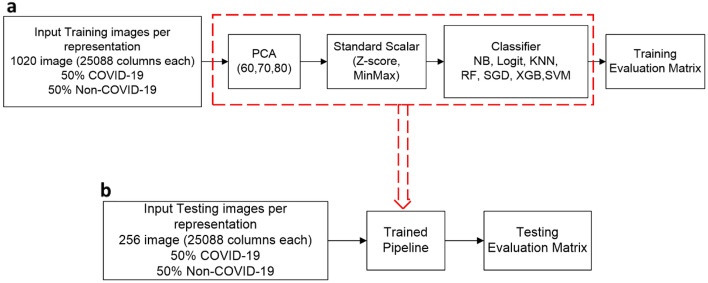

Figure 3.

Training and testing pipelines for the shallow machine learning models used in our study. (a) Training pipeline: For each representation, the training images are passed through the pre-trained VGG19 model to extract features automatically. Each representation has 1020 training images, of which 50% are labeled COVID-positive and the remaining 50% negative. Each extracted feature vector has 25,088 elements, which a principal component analysis (PCA) step reduces to 60, 70, or 80 features. The reduced features are then normalized using either a Z-score or a MinMax scaler and used to train seven classifiers: Naïve Bayes (NB), Logistic Regression (Logit), K-nearest neighbours (KNN), Random Forest (RF), Stochastic Gradient Descent (SGD), Extreme Gradient Boosting (XGB), and Support Vector Machine (SVM). We measure and record the training evaluation results to choose the best classifiers. (b) Testing pipeline: Once training is complete, we score the testing data against each trained pipeline, which comprises the best PCA, scaler, and classifier parameters. The testing data has 256 images per representation, split equally between COVID-positive and negative cases. We measure and record the testing evaluation results to estimate the generalization error of each pipeline.
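
The structure of the two pipelines can be illustrated with a minimal scikit-learn sketch. It is not the study's implementation: the VGG19 extraction step is replaced by synthetic Gaussian features of width 512 (rather than 25,088) so that the example runs quickly, only three of the seven classifiers are included, and accuracy stands in for the evaluation metrics. The names `build_pipeline`, `make_synthetic_features`, `SCALERS`, and `CLASSIFIERS` are hypothetical helpers introduced for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical lookup tables: the two scalers named in the caption and
# three of the seven classifiers (the others are omitted for brevity).
SCALERS = {"zscore": StandardScaler, "minmax": MinMaxScaler}
CLASSIFIERS = {
    "NB": GaussianNB,
    "Logit": lambda: LogisticRegression(max_iter=1000),
    "KNN": KNeighborsClassifier,
}


def build_pipeline(n_components, scaler_name, classifier):
    """PCA reduction, then normalization, then a classifier, in caption order."""
    return Pipeline([
        ("pca", PCA(n_components=n_components, random_state=0)),
        ("scaler", SCALERS[scaler_name]()),
        ("clf", classifier),
    ])


def make_synthetic_features(n_samples, n_features, rng):
    """Balanced synthetic stand-in for pre-extracted CNN features (assumption)."""
    y = np.repeat([0, 1], n_samples // 2)
    X = rng.normal(size=(n_samples, n_features))
    X[y == 1, :20] += 1.0  # shift a few columns so the classes are separable
    return X, y


rng = np.random.default_rng(0)
# Sample counts follow the caption; the feature width is reduced for speed.
X_train, y_train = make_synthetic_features(1020, 512, rng)
X_test, y_test = make_synthetic_features(256, 512, rng)

results = {}
for clf_name, make_clf in CLASSIFIERS.items():
    for n_components in (60, 70, 80):
        for scaler_name in SCALERS:
            pipe = build_pipeline(n_components, scaler_name, make_clf())
            pipe.fit(X_train, y_train)                 # (a) training pipeline
            train_acc = pipe.score(X_train, y_train)
            test_acc = pipe.score(X_test, y_test)      # (b) testing pipeline
            results[(clf_name, n_components, scaler_name)] = (train_acc, test_acc)

for config, (train_acc, test_acc) in sorted(results.items()):
    print(config, f"train={train_acc:.3f}", f"test={test_acc:.3f}")
```

Wrapping the PCA, scaler, and classifier in a single `Pipeline` means the PCA projection and scaling statistics are fitted on the training data only and then reused unchanged when scoring the testing data, which matches the separation between panels (a) and (b).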