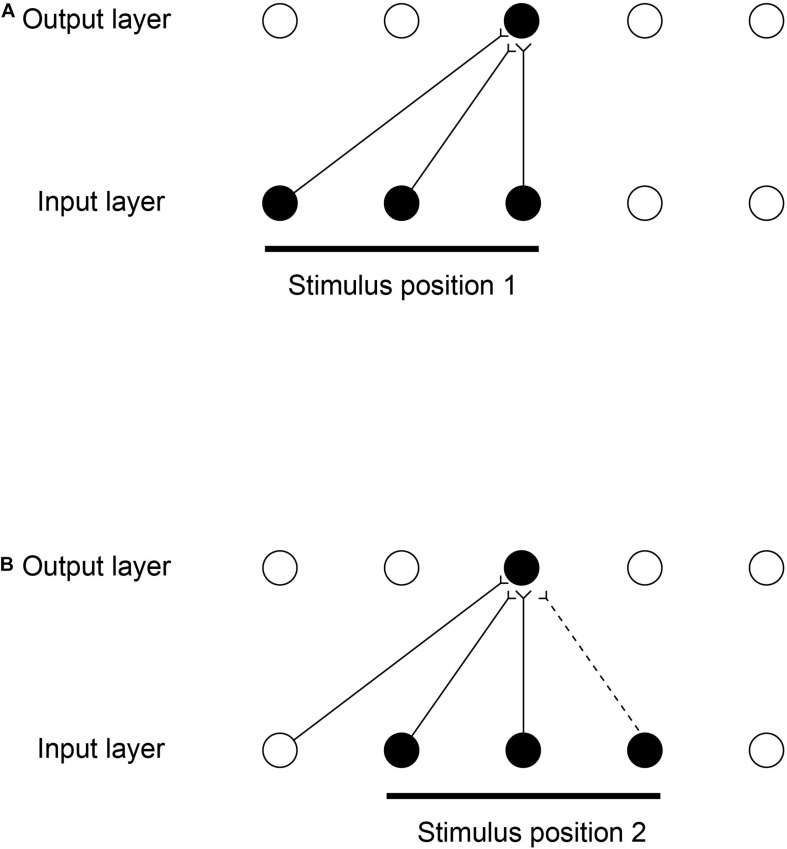

FIGURE 8.

Continuous spatial transformation learning of transform-invariant visual representations of objects. The figure illustrates how continuous spatial transformation (CT) learning would operate in a network with forward synaptic connections from an input layer of neurons to an output layer. Initially, the forward synaptic connection weights are set to random values. (A) A stimulus is presented to the network in position 1. Activation from the active (shaded black) input neurons is transmitted through the initially random forward connections to the neurons in the output layer. The output neuron shaded black wins the competition in the output layer. The synaptic weights from the active input neurons to the active output neuron are then strengthened using an associative synaptic learning rule. (B) The situation after the stimulus has been shifted by a small amount to a new, partially overlapping position 2. Because some of the input neurons that are now active were also active when the stimulus was in position 1, their previously strengthened synaptic afferents drive the same output neuron to win the competition. The rightmost input neuron shown in black, which is activated by the stimulus in position 2 but was inactive when the stimulus was in position 1, now has its synaptic connection to the active output neuron strengthened (denoted by the dashed line). Thus the same output neuron has learned to respond to two input patterns whose vector elements overlap. The process can be continued for subsequent shifts, provided that each new shift activates a sufficient proportion of input neurons whose connections to that output neuron have already been strengthened to drive the same output neuron. (After Stringer et al., 2006).
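The mechanism in the caption can be sketched as a small simulation. This is a minimal illustration under stated assumptions, not the model used by Stringer et al.: it assumes a one-dimensional input layer, a stimulus that is a contiguous block of binary-active input neurons, hard winner-take-all competition in the output layer, and the associative (Hebbian) rule Δw = α·y·x with no weight normalization or other stabilisation. The function names `stimulus`, `present` and `train_ct` and all parameter values are hypothetical.

```python
import random


def stimulus(position, width, n_inputs):
    """Binary input pattern: `width` adjacent input neurons active from `position` (assumed stimulus shape)."""
    return [1.0 if position <= i < position + width else 0.0 for i in range(n_inputs)]


def present(weights, x, alpha):
    """One CT learning step: competition in the output layer, then an associative update."""
    # Activation of each output neuron is the weighted sum of the active inputs.
    activations = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in weights]
    # Hard winner-take-all (an assumption): the most strongly driven neuron fires (y = 1).
    winner = max(range(len(weights)), key=lambda k: activations[k])
    # Hebbian rule dw_j = alpha * y * x_j; y = 0 for losers, so only the winner learns.
    weights[winner] = [w_j + alpha * x_j for w_j, x_j in zip(weights[winner], x)]
    return winner


def train_ct(n_inputs=20, n_outputs=5, width=5, shift=1, alpha=1.0, seed=0):
    """Sweep the stimulus across the input layer in small shifts; return the winner at each position."""
    rng = random.Random(seed)
    # Small random initial forward weights, as in the caption's starting state.
    weights = [[rng.uniform(0.0, 0.1) for _ in range(n_inputs)] for _ in range(n_outputs)]
    winners = []
    for pos in range(0, n_inputs - width + 1, shift):
        winners.append(present(weights, stimulus(pos, width, n_inputs), alpha))
    return winners


winners = train_ct()
print(winners)
print(len(set(winners)) == 1)  # → True: one output neuron wins at every position
```

With these parameters each shift of one position keeps four of the five active inputs, whose weights to the first winner have already grown by α = 1, so that neuron's activation (at least 4) always exceeds any other neuron's (below 0.5 from the random weights alone). Setting `shift` to `width` or more removes the overlap, so nothing ties successive positions to the same output neuron and the winner is then decided only by the random initial weights.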