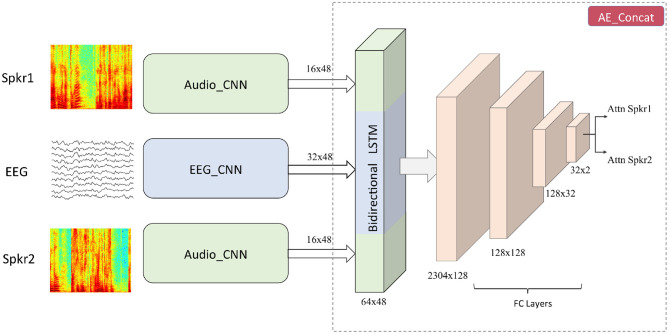

Figure 1. The architecture of the proposed joint CNN-LSTM model. The input to the audio stream is the spectrogram of the speech signals, and the input to the EEG stream is a downsampled version of the EEG signals. The number of Audio_CNNs depends on the number of speakers present in the auditory scene (two here). Speech and EEG embeddings are created from the outputs of the Audio_CNNs and the EEG_CNN, concatenated, and passed to a BLSTM layer followed by FC layers.
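
The concatenation step in the caption can be illustrated with a minimal sketch. It only shows how the width of the joint feature vector grows with the number of speakers, since there is one Audio_CNN per speaker and a single EEG_CNN. The function name `concat_embeddings` and all embedding sizes are hypothetical and not taken from the paper, and the sketch assumes each embedding is a flat feature vector for a single time step.

```python
from typing import List, Sequence


def concat_embeddings(speech_embs: Sequence[Sequence[float]],
                      eeg_emb: Sequence[float]) -> List[float]:
    """Join one speech embedding per speaker with the EEG embedding."""
    joint: List[float] = []
    for emb in speech_embs:   # one Audio_CNN output per speaker
        joint.extend(emb)
    joint.extend(eeg_emb)     # single EEG_CNN output
    return joint


# Hypothetical sizes, chosen only for illustration (not from the paper).
AUDIO_EMB_DIM = 4
EEG_EMB_DIM = 3
NUM_SPEAKERS = 2              # the two-speaker scene shown in the figure

speech_embs = [[0.0] * AUDIO_EMB_DIM for _ in range(NUM_SPEAKERS)]
eeg_emb = [1.0] * EEG_EMB_DIM

joint = concat_embeddings(speech_embs, eeg_emb)
# Width of the vector that would be fed to the BLSTM at this time step:
# NUM_SPEAKERS * AUDIO_EMB_DIM + EEG_EMB_DIM
print(len(joint))
```

Under these assumptions, adding a third speaker would add one more Audio_CNN and widen the joint vector by `AUDIO_EMB_DIM`, so the input size of the BLSTM layer depends on the number of speakers.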