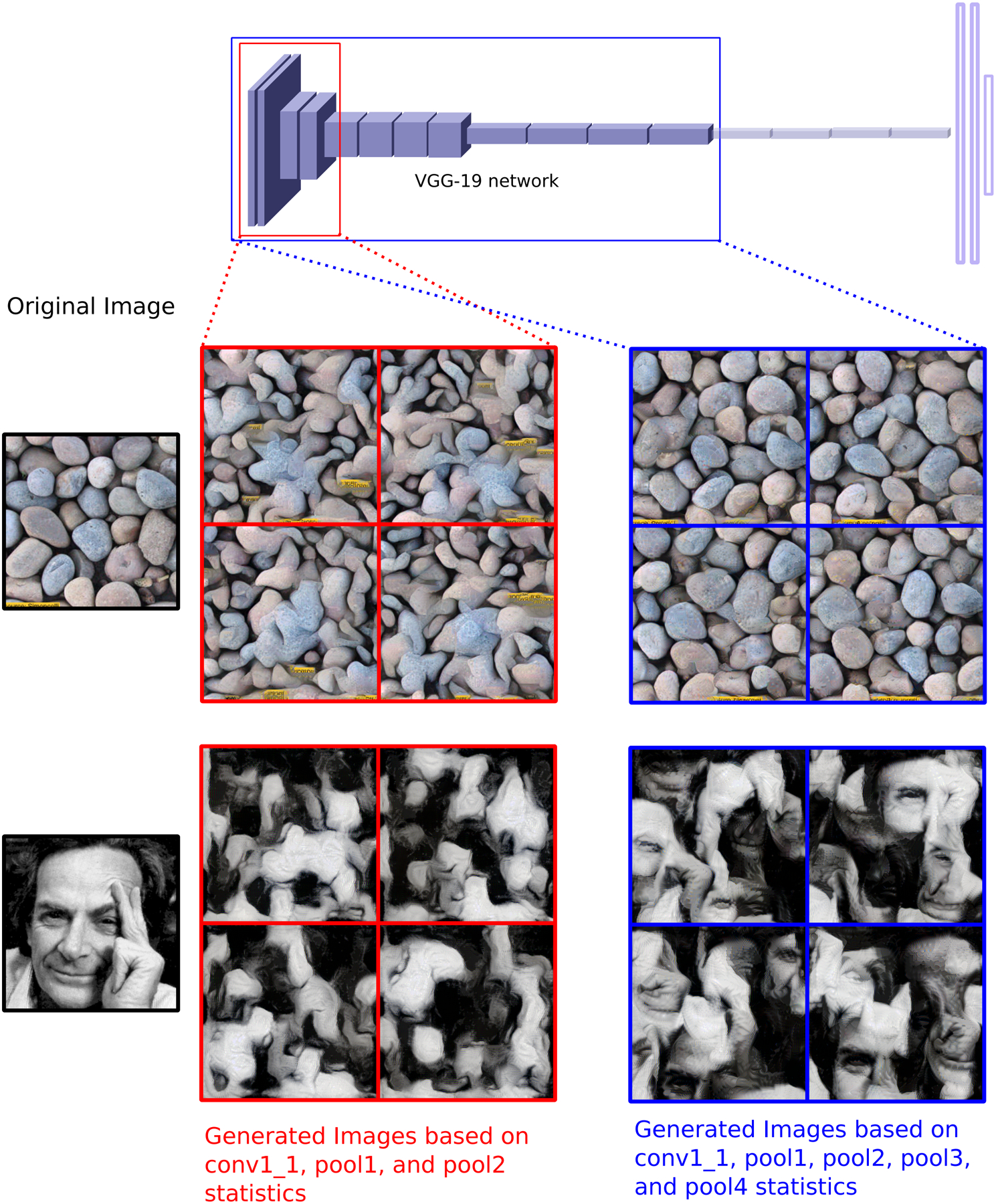

Figure 1: Texture Synthesis based on Deep Convolutional Neural Networks.

The activations of different layers of a DNN trained for object recognition can be used to capture texture statistics beyond second order (Gatys et al., 2015). Texture synthesis is performed by numerically optimizing the pixel values of an image until its statistics match those of a reference image (Original Image, enclosed in black). The statistics can be computed from activation values at different stages of the DNN. Images enclosed in red are synthesized using only activations from the first and second pooling stages of the DNN, whereas images enclosed in blue include the third and fourth pooling stages in their statistics. For the inhomogeneous images (bottom row), texture generation places local features at scrambled locations: the activation statistics being matched are averaged over space, so they do not constrain where in the image each feature appears.
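
The effect of spatial averaging can be illustrated with a minimal sketch. It assumes the statistic is a Gram matrix of channel activations, as in Gatys et al. (2015), and uses random arrays as a stand-in for the activations of one DNN layer. The names `gram_matrix` and `texture_loss` are hypothetical and are not taken from the original work. Note that the sketch scrambles activation positions directly, which is a simplification of what happens when image content is rearranged.

```python
import numpy as np


def gram_matrix(features):
    """Spatially averaged channel correlations of a (C, H, W) activation array."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)   # one row per channel
    return flat @ flat.T / (h * w)      # average over all spatial positions


def texture_loss(feats_a, feats_b):
    """Sum of squared Gram-matrix differences over a list of layers."""
    return sum(
        float(np.sum((gram_matrix(a) - gram_matrix(b)) ** 2))
        for a, b in zip(feats_a, feats_b)
    )


rng = np.random.default_rng(0)

# Stand-in for one layer's activations (assumption: 4 channels on an 8x8 grid).
acts = rng.standard_normal((4, 8, 8))

# Move every spatial position to a random new location, identically in all channels.
perm = rng.permutation(8 * 8)
scrambled = acts.reshape(4, -1)[:, perm].reshape(4, 8, 8)

# The arrangement differs, but the spatially averaged statistics agree.
print(np.array_equal(acts, scrambled))
print(np.allclose(gram_matrix(acts), gram_matrix(scrambled)))
print(texture_loss([acts], [scrambled]))
```

In an actual synthesis loop, a loss of this kind would be minimized with respect to the pixel values of the generated image, with the activations recomputed by the DNN at each step. The sketch only shows why such a loss cannot distinguish a layout from its scrambled counterpart.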