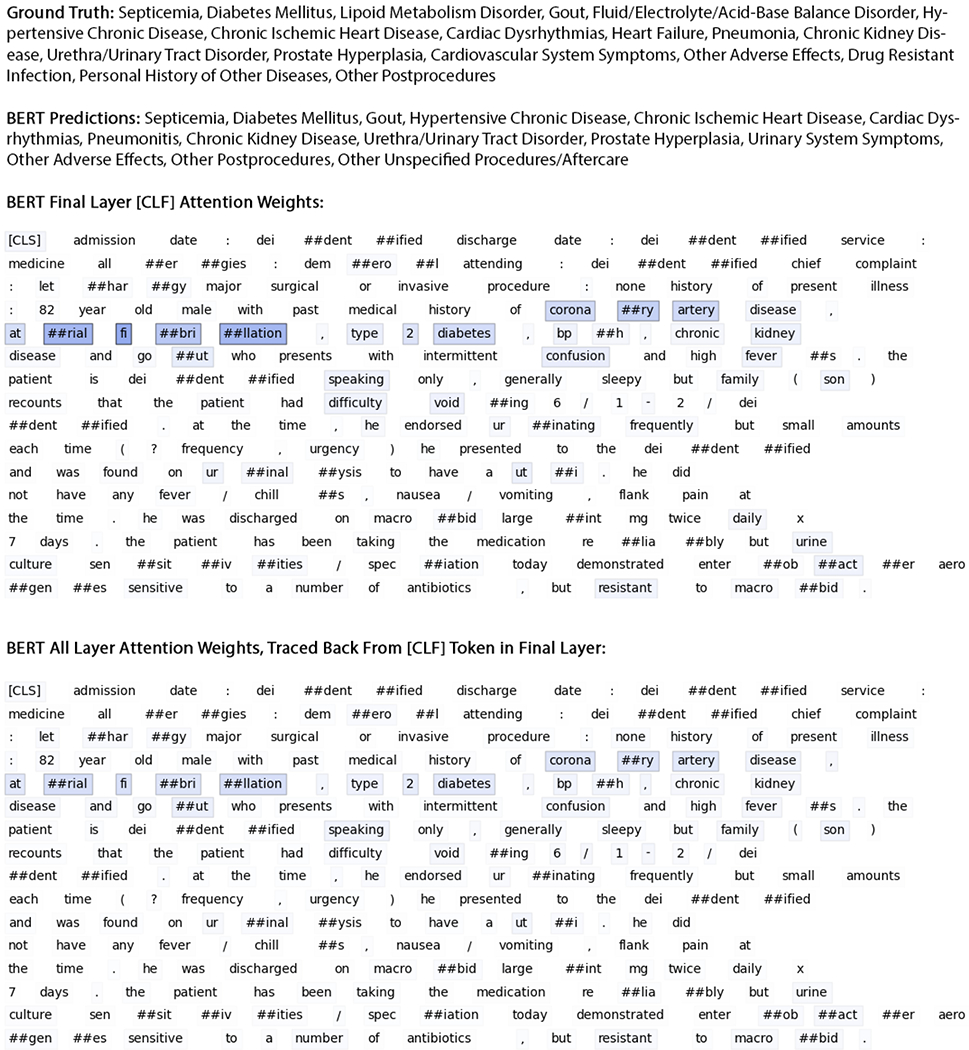

Fig. A2:

Attention weights and predictions on an example document from the MIMIC-III dataset for the diagnostic category task. In the top, using our fine-tuned BlueBERT model (first 510 WordPiece tokens only), we visualize the attention weights from the very final layer that are associated with the [CLS] token used for classification; these weights represent the most important subword tokens after they have already incorporated contextual information from other subword tokens based off the 12 self-attention layers of the main BERT model. In the bottom, we start from the attention weights from the very final layer that are associated with the [CLS] token and multiply these attention weights through all 12 self-attention layers of the BERT model; these weights represent the most important subword tokens accounting for all the inter-word relationships captured during pretraining and fine-tuning. For this visualization, we sum the attention weights across all attention heads.