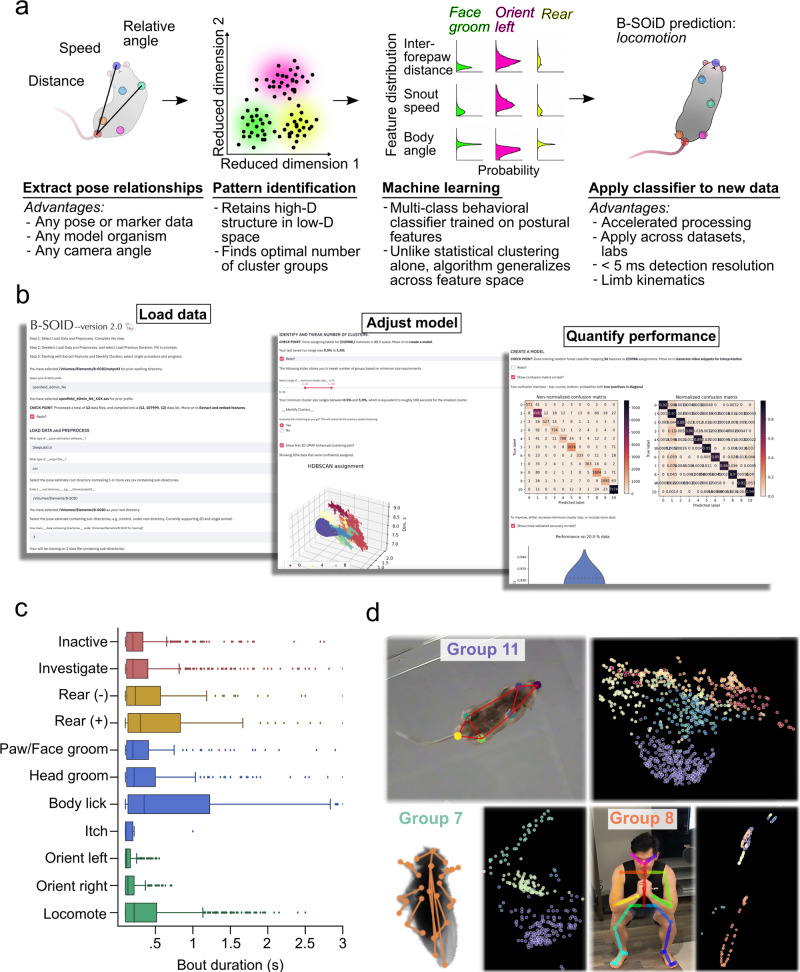

Fig. 1. Summary of B-SOiD process, GUI, and performance in mice and other animals.

a After extracting the pose relationships that define behaviors, B-SOiD applies a non-linear transformation (UMAP) to embed the high-dimensional postural time-series data in a low-dimensional space and then identifies clusters (HDBSCAN). The clustered spatiotemporal features are used as inputs to train a random forest classifier. Once trained, this classifier can quickly predict behavioral categories in any related dataset, segmenting it into the same groupings. b Screenshots from the B-SOiD app GUI, which is freely available for download. Examples of the simple-language workflow, from loading data, to improving the model, to quantifying performance, are shown. c Bout durations for each of the identified behaviors throughout an hour-long session, n = 1 animal. Data are presented as mean values ± SEM. d Snapshots of the behavioral state space aligned to a freely moving mouse (DeepLabCut), a fruit fly (SLEAP), and the first author, filmed with a cell phone camera (OpenPose). Informed consent to publish the images was obtained. The color of each group number corresponds to the colored distribution within UMAP space. Source data are provided as a Source Data file for (c).