Summary

Objectives: To analyze the content of publications within the medical NLP domain in 2020.

Methods: Automatic and manual preselection of publications to be reviewed, and selection of the best NLP papers of the year. Analysis of the important issues.

Results: Three best papers have been selected in 2020. We also propose an analysis of the content of the NLP publications in 2020, all topics included.

Conclusion: The two main issues addressed in 2020 are the investigation of COVID-related questions and the further adaptation and use of transformer models. Besides, the trends from the past years continue, such as the diversification of languages processed and the use of information from social networks.

Keywords: Natural language processing; semi-automatic selection of publication; topics, issues, 2020

1 Introduction

Natural Language Processing (NLP) aims at providing methods, tools and resources designed to mine textual and narrative documents, and to make it possible to access the information they convey [ 1 ]. While human languages are complex (as an example, becoming fluent in a human language requires many years of learning), the importance of using NLP approaches to mine documents produced by humans has long been pointed out [ 2 ]. In this synopsis, we first present the selection process applied this year and then we analyze the content of some publications. More particularly, we focus on several important issues such as the robustness of the methods, the reproducibility of the results, as well as the originality of the research questions addressed in 2020.

2 The Selection Process

In order to identify all papers published during the year 2020 in the field of NLP, we queried two databases: MEDLINE 1 , specifically dedicated to the biomedical domain, and the Association for Computational Linguistics (ACL) anthology 2 , a database that brings together the major NLP conferences (ACL, International Conference on Computational Linguistics (COLING), Empirical Methods in Natural Language Processing (EMNLP), International Conference on Language Resources and Evaluation (LREC), Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), etc.) and journals. We included the second database because some NLP studies concerning the biomedical domain are published in conferences and journals that are not indexed by PubMed.

We applied the basic query we defined last year on MEDLINE:

(English[LA] AND journal article[PT] AND 2020[DP] AND (medical OR clinical OR natural) AND “language processing”)

This query retrieves all journal papers published in English in 2020 that have an abstract and contain one of the sequences “clinical language processing”, “medical language processing”, or “natural language processing”. As of January 9th, 2020, we collected 739 entries. We applied a similar query on the ACL anthology database and collected 27 entries. In order to process those 766 papers, we automatically scored them. Indeed, not all candidate papers are specifically related to the NLP domain, despite the use of one of the three sequences from the query. For instance, they can be related to other sections of the IMIA Yearbook (Public Health and Epidemiology Informatics, Decision Support, Knowledge Representation and Management, etc.) without addressing major issues for the NLP section. Hence, in order to compute a global score for each publication, we applied three sets of rules that we defined in 2018 while identifying best papers for a previous edition.
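As an illustration, the boolean query above can be assembled programmatically and paired with a local check for the three target sequences. This is only a minimal sketch: the function names `build_medline_query` and `matches_target_sequence` are hypothetical, straight quotes replace the typographic ones, and nothing here contacts MEDLINE or reproduces the tooling actually used for the selection.

```python
# Minimal sketch (hypothetical helper names, not the authors' tooling).

QUALIFIERS = ("medical", "clinical", "natural")


def build_medline_query(year: int) -> str:
    """Assemble the boolean query string for a given publication year."""
    filters = ["English[LA]", "journal article[PT]", f"{year}[DP]"]
    qualifier_clause = "(" + " OR ".join(QUALIFIERS) + ")"
    phrase = '"language processing"'
    return "(" + " AND ".join(filters + [qualifier_clause, phrase]) + ")"


def matches_target_sequence(text: str) -> bool:
    """Check whether a title or abstract contains one of the three sequences."""
    lowered = text.lower()
    return any(f"{q} language processing" in lowered for q in QUALIFIERS)


if __name__ == "__main__":
    print(build_medline_query(2020))
    print(matches_target_sequence("A clinical language processing pipeline"))
```

Keeping the year as a parameter makes it easy to rerun the same query for another edition of the selection.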

The first set of rules is based upon the name of the journal (both full name and concepts found in the name):

the positive score is assigned to the main journals in which the biomedical NLP research is usually published by the NLP community (Biomedical informatics insights, International Journal of Medical Informatics, Journal of the American Medical Informatics Association, Journal of Biomedical Informatics, BMC Bioinformatics);

the negative score is assigned to journals that are not specifically related to NLP but to other domains, such as cognitive studies and communication disorders (Neuroscience, Human brain mapping, Operative neurosurgery, Speech therapy, etc.). We also dismiss survey papers and papers published in the IMIA Yearbook. We manually defined this set of journals in order to rule out those false positives.

The second set of rules relies on concepts found in both the title and abstract of papers:

the positive score is assigned to concepts typically involved in papers related to NLP. Those concepts may be related to objectives, resources, and tools (such as natural language processing, NLP, named entity recognition, NER, part of speech, POS, tagged words, semantic, syntax, biomedical entity, meanings, electronic health record, EHR, reports, notes, clinical text, text corpus, free text, unstructured text, tweets, PubMed, Social Media, MedDRA, UMLS, annotated data, Metamap );

the negative score is assigned to concepts that are usually involved in studies related to disorders involving anatomical parts or language abilities (such as word processing, language production, language comprehension, voice quality, left posterior superior temporal gyrus, pSTG, posterior superior temporal sulcus, pSTS, inferior fronto-occipital fasciculus, IFOF, dorsolateral prefrontal cortex, cortex, language lateralization, chemical fragment, fragment chemistry, brain structures, verbal intelligence, cerebral, positive mismatch responses, pMMRs, prelingual, postlingual, cochlear, aphasia, SAPS, cortical, language function, infants ).

The third set of rules is also applied on titles and abstracts, and covers the concepts describing the methodology used in papers:

the positive score is assigned to papers using classical NLP methods or evaluation metrics (such as annotation tool, text-mining, rule-based, regular expression, lexicon, CRF, recall, precision, F1-score, F-measure, accuracy, Inter-annotator agreement, Kappa, classify/classifier, detect, extract, extraction, predict, predicting, text simplification, lexical simplification );

the negative score is assigned to papers that merely claim to use NLP methods, as signaled by sequences like using natural language processing, using NLP, perform a Natural Language Processing analysis . Such papers are downgraded because their NLP component is usually limited to the use of existing, ready-to-use NLP tools: their main contribution lies in the analysis of tool results rather than in improvements made to NLP methods.
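The three rule sets above can be sketched as a simple keyword-based scorer. The term lists below are short excerpts of the ones quoted in the text. The unit weights, the substring matching, and the linear rescaling clamped to the interval from 0.05 to 1 are assumptions made for illustration only, since the actual weights and the combination formula are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleSet:
    positive: tuple
    negative: tuple
    weight: float = 1.0  # hypothetical weight, not from the paper


# Excerpts of the term lists quoted in the text (lowercased).
JOURNAL_RULES = RuleSet(
    positive=("journal of biomedical informatics", "bmc bioinformatics"),
    negative=("neuroscience", "human brain mapping", "speech therapy"),
)
CONCEPT_RULES = RuleSet(
    positive=("natural language processing", "named entity recognition",
              "electronic health record", "clinical text", "umls"),
    negative=("aphasia", "cochlear", "cortex", "language comprehension"),
)
METHOD_RULES = RuleSet(
    positive=("rule-based", "regular expression", "f1-score",
              "precision", "recall", "classifier"),
    negative=("using natural language processing", "using nlp"),
)


def count_hits(text: str, terms: tuple) -> int:
    """Count how many terms occur in the text (case-insensitive substring match)."""
    lowered = text.lower()
    return sum(1 for term in terms if term in lowered)


def raw_score(rules: RuleSet, text: str) -> float:
    """Positive hits minus negative hits, scaled by the rule-set weight."""
    return rules.weight * (count_hits(text, rules.positive)
                           - count_hits(text, rules.negative))


def global_score(journal: str, title: str, abstract: str) -> float:
    """Combine the three rule sets and clamp the result to [0.05, 1]."""
    body = f"{title} {abstract}"
    total = (raw_score(JOURNAL_RULES, journal)
             + raw_score(CONCEPT_RULES, body)
             + raw_score(METHOD_RULES, body))
    scaled = 0.5 + total / 10.0  # hypothetical rescaling
    return max(0.05, min(1.0, scaled))
```

With this sketch, a paper from an NLP-oriented journal that reports precision and recall on clinical text is pushed toward 1, whereas a neuroimaging paper that only mentions "using natural language processing" is pushed toward the lower bound.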

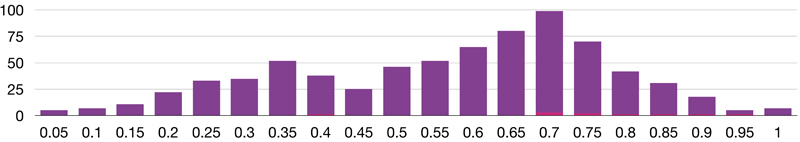

For each of the 766 candidate papers, the final score ranged from 0.05 to 1 ( Figure 1 ). In this figure, the violet bars indicate the total number of papers for each computed grade, while the pink bars indicate the papers we kept in the top-15 best papers’ list. This score has been used as a meta element during the manual selection of the top-15 papers. Indeed, the section editors did not fully rely on the scores but only used them as additional information. Hence, both section editors independently browsed the abstracts, keywords and automatic scores, and assigned a Yes / Maybe / No score to each paper. All papers having at least one Yes or Maybe score were kept for the next step of the selection. At this stage, 143 candidate papers remained (i.e., a subset of 18.4% of the whole dataset). We then performed an adjudication process in order to choose the final 15 candidates to be reviewed by external reviewers. We paid attention to the topics addressed by the researchers and to their geographic origin so as to provide enough diversity. As a result, out of the fifteen papers, four come from the USA, three from China, and one from each of the following countries: Finland, Germany, Italy, Peru, Spain, Turkey, and the United Kingdom. This is the first time we have selected a paper from South America among our top-15 best paper candidates.

Fig. 1. Distribution of papers according to the filter scores (violet bars indicate the total number of papers, pink bars indicate papers kept in the top-15 best papers).
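The manual screening step described above, in which a paper moves on to adjudication as soon as at least one of the two section editors votes Yes or Maybe, can be expressed as a small filter. This is a sketch under stated assumptions: the `Vote` enumeration, the function names, and the paper identifiers in the usage example are hypothetical.

```python
from enum import Enum


class Vote(Enum):
    YES = "Yes"
    MAYBE = "Maybe"
    NO = "No"


def keep_for_adjudication(vote_a: Vote, vote_b: Vote) -> bool:
    """A paper is kept when at least one editor voted Yes or Maybe."""
    return any(vote in (Vote.YES, Vote.MAYBE) for vote in (vote_a, vote_b))


def screen(votes: dict) -> list:
    """Return the identifiers of the papers kept for the adjudication step."""
    return [paper_id for paper_id, (a, b) in votes.items()
            if keep_for_adjudication(a, b)]


if __name__ == "__main__":
    # Hypothetical paper identifiers, for illustration only.
    example = {
        "paper-001": (Vote.YES, Vote.NO),
        "paper-002": (Vote.NO, Vote.NO),
        "paper-003": (Vote.MAYBE, Vote.MAYBE),
    }
    print(screen(example))
```

Only papers rejected by both editors are dropped at this stage, which keeps the screening permissive and leaves the harder decisions to adjudication.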

In the next sections, we present the main issues and approaches addressed in the preselected publications.

3 Current Trends in Biomedical NLP

For a few years, we have observed the increasing use of transformer models based on word embeddings. Those models are useful to capture the context of words. When they adequately cover the domain (e.g., clinical domain) or the properties of a given corpus (e.g., clinical texts), they yield higher performance than approaches that do not use such models. Several transformer models have been released in recent years: currently, the best known is BERT, a multilingual generic model provided by Google [ 3 ].

Based on this model, several other models were produced: (i) either specific to a domain such as BioBERT [ 4 ] for the biomedical domain; or (ii) specific to a language, such as BERTje [ 5 ] for Dutch, FlauBERT [ 6 ] and CamemBERT [ 7 ] for French, GottBERT [ 8 ] for German, AlBERTo [ 9 ] and UmBERTo 3 for Italian, or BETO [ 10 ] for Spanish; or (iii) specific to a given task such as MRCBert [ 11 ] for summarization or SQuADBERT 4 for question-answering, trained on the Stanford Question-Answering Dataset [ 12 ]. Conversely, almost all previous methods have disappeared, owing to their relative age: Word2vec [ 13 ], GloVe [ 14 ], and ELMo [ 15 ]. New approaches are emerging, with an increasing number of parameters in the new models, especially the Generative Pretrained Transformer (GPT) series provided by OpenAI: GPT-2 5 [ 16 ] for text generation, and GPT-3 [ 17 ] for all NLP tasks (using up to 175 billion parameters).

As a consequence, there is a harmful race to be the first to produce such resources, which pushes authors to rapidly publish a paper in the first available conference or workshop, or on the arXiv repository, in order to be cited. This also implies that such papers will most likely never be identified as best paper candidates for the NLP chapter of the IMIA Yearbook, unless they are indexed in PubMed or published in an NLP conference that we, co-editors of the NLP section, usually follow.

Nevertheless, an opposite trend has also been observed, with authors pursuing green research that avoids models which are not environmentally friendly (expensive in terms of hardware, running time, and CO 2 footprint). This direction has been investigated by Poerner et al. [ 18 ], who proposed the GreenBioBERT model. It is produced by using Word2vec to train a model on a new target domain (namely, on the COVID-19 issue) and then aligning the resulting vectors with those of the existing BioBERT model.

In terms of architecture, the most commonly used one for NLP tasks is currently a bidirectional LSTM, which captures both left and right contexts, followed by a final Conditional Random Field (CRF) layer that refines the outputs (BiLSTM-CRF). Those methods achieve excellent results but need substantial computational resources to train new models, currently only available in huge data centers, which implies a large CO 2 footprint. The main issue is how to use such models on new domains, on new languages, or for new tasks. Instead of training new models, alternative solutions exist, such as fine-tuning of existing models, domain adaptation, or transfer learning, as done by Jin et al. [ 19 ] to predict clinical trial results. Nevertheless, such models remain expensive.

3.1 Languages Addressed

Regarding the languages processed, work on texts written in English represents the largest part of publications. Indeed, a significant number of corpora, datasets and resources are available in English. Yet, we observe an increasing number of publications dedicated to other languages and a greater variety of languages: Arabic [ 20 ], Chinese [ 21 , 22 , 23 , 24 , 25 , 26 ], Croatian [ 27 ], Finnish [ 28 , 29 ], French [ 30 , 31 ], German [ 32 , 33 , 34 ], Hebrew [ 35 ], Italian [ 36 , 37 , 38 ], Japanese [ 39 , 40 ], Korean [ 41 , 42 ], Norwegian [ 43 ], Portuguese [ 44 ], Spanish [ 45 , 46 , 47 , 48 ], Swedish [ 49 ], and Turkish [ 28 ]. Overall, we believe that the trend observed in previous years is continuing, and we expect it to develop further in the future.

3.2 COVID-19

A lot of work has been done on the COVID-19 issue. In order to help such work, several papers present COVID-19 resources, such as the COVID-19 Open Research Dataset (CORD-19) produced by Wang et al. [ 50 ] and available through the Kaggle 6 platform. Besides, several works are anchored in hospitals and propose to predict conditions and events related to COVID, such as prediction of admission of patients with COVID in an intensive care unit [ 51 ], detection of COVID cases from radiological text reports [ 46 ], prognosis prediction for patients with chronic obstructive pulmonary disease [ 45 ] or for patients with hypertension [ 31 ].

In addition to clinical and hospital information, researchers investigate scientific literature looking for instance for drug repurposing recommendations [ 52 ], and for temporal evolution of research work on COVID-19 [ 53 ]. Besides, the first systematic reviews related to COVID are proposed [ 54 ], including the focus on research needs [ 55 ].

Researchers also investigate social networks, focusing on analyzing public opinion and emotions on the COVID pandemic in Twitter posts [ 56 , 57 ], monitoring illicit sales of COVID medication on Twitter and Instagram [ 58 ], and observing COVID symptoms and disease histories collected from a large population on Reddit, which may provide more reliable insights [ 59 ]. Another important issue is that, in the current situation, the surveillance of emerging epidemiological events has again become very important, as around 60% of all outbreaks are detected using informal sources, which motivated online epidemiological surveillance [ 32 ].

3.3 Neurological and Psychiatric Disorders

We observed an interest in neurological and psychiatric disorders, with work mainly arising from the clinical context: detection of duration of untreated psychosis [ 38 ], analysis of language in patients with aphasia [ 60 ], Alzheimer's disease [ 61 ], and autism spectrum disorder [ 62 ], generation of artificial mental health records and their evaluation [ 63 ], detection and prediction of suicide in mental illness [ 64 , 65 , 66 ], automatic detection of agitation and related symptoms among hospitalized patients [ 67 ], analysis of COVID impact on people with epilepsy [ 36 ], prediction of care cost in mental health setting [ 68 ], and, more generally, the use of artificial intelligence in mental health and its biases [ 69 ].

3.4 Place of Patients

The place of patients in the healthcare context is increasing and several publications place patients at the center of their investigations. Hence, in addition to the patient-centered healthcare process, we can also mention work focusing on patient outcome [ 70 ], studying public opinion on use of free-text data in electronic medical records for research [ 71 ], studying patient feedback on the quality of care [ 72 ], analyzing the ways the patients describe their pain [ 73 ], measuring the quality of patient-doctor communication [ 74 ], analyzing the developmental crisis episodes that occur during early adulthood in social media [ 75 ], and analyzing patient experience in order to define some guidelines [ 76 ].

3.5 Social Networks

As noted above, social networks continue to provide important information on several issues: public opinion and emotions on the COVID pandemic in Twitter posts [ 56 , 57 ], surveillance of illicit sales of COVID medication on Twitter and Instagram [ 58 ], observation of COVID symptoms and disease histories collected from a large population on Reddit [ 59 ], surveillance of emerging epidemiological events [ 32 ], analysis of HIV-related tweets and of their relation to the HIV incidence [ 77 ], analysis of drug use on Twitter [ 78 ], and analysis of developmental crisis episodes during early adulthood in social media [ 75 ].

We assume that the investigation of social networks will go further in the future, as these networks provide independent and massive information on various events.

4 Conclusion

The NLP publications in 2020 have been heavily marked by the health situation, which motivated an increasing number of works related to the COVID-19 pandemic. The authors addressed all types of content (clinical texts, clinical trials, scientific papers, social media, etc.) so as to mine information related to this major issue (adverse drug reactions, usefulness of existing treatments, psychological impact of the pandemic, etc.).

From a scientific perspective, we observe an increasing use of transformer models based on word embeddings. In continuation of the trend already observed in previous years, we notice that the variety of languages processed is also increasing. This observation is also related to the use of multilingual transformer models, among which BERT is the most used since it can process more than one hundred languages. In addition, several authors adapted those multilingual models to their data (specific domain for a given language), which also increases the number of publications related to new languages. An opposite trend also appeared, with papers focusing on green research, especially proposing methods for processing new domains or new data that use existing transformer models without any new training steps for these complex models.

In the coming years, we hope that environmentally friendly solutions will be preferred to the production of new transformer models which still need more and more computing resources. In addition, we also urge the NLP community to go back to a qualitative analysis of their outputs, rather than a basic and harmful race to present numerical gains over other similar studies. The positive impact of NLP research on clinical issues should be highlighted rather than the search for better results computed by evaluation metrics.

Table 1. Best paper selection of articles for the IMIA Yearbook of Medical Informatics 2021 in the section ‘Natural Language Processing’. The articles are listed in alphabetical order of the first author's surname.

| Section Natural Language Processing |
|---|
| ▪ Ive J, Viani N, Kam J, Yin L, Verma S, Puntis S, Cardinal R, Roberts A, Stewart R, Velupillai S. Generation and evaluation of artificial mental health records for Natural Language Processing. NPJ Digit Med 2020;3:69. |
| ▪ Jin Q, Tan C, Chen M, Liu X, Huang S. Predicting clinical trial results by implicit evidence integration. Proc of Empirical Methods in NLP; 2020. |
| ▪ Poerner N, Waltinger U, Schütze H. Inexpensive domain adaptation of pre-trained language models: case studies on biomedical NER and Covid-19 QA. Proc of Empirical Methods in NLP; 2020. |


Content Summaries of Best Papers for the Natural Language Processing Section of the 2021 IMIA Yearbook.

Jin Q, Tan C, Chen M, Liu X, Huang S

Predicting clinical trial results by implicit evidence integration

Proc of Empirical Methods in NLP; 2020

The clinical trial result prediction (CTRP) task is based on medical literature containing PICO elements (how the Intervention group compares with the Comparison group in terms of the measured Outcomes in the studied Population). The authors proposed EBM-Net, a transformer model that uses unstructured sentences as implicit evidence together with a fine-tuning approach. They compared their fine-tuned model against the BioBERT model and other approaches (MeSH ontology, bag-of-words, etc.) and achieved better results on the COVID-19 clinical trials’ dataset (22 clinical trials from the CORD-19 dataset).

Poerner N, Waltinger U, Schütze H

Inexpensive domain adaptation of pre-trained language models: case studies on biomedical NER and Covid-19 QA

Proc of Empirical Methods in NLP; 2020

The authors highlight the high cost of domain adaptation when a model is trained on target-domain text. This cost is expressed in terms of hardware requirements, long running time and negative impact on the CO 2 footprint. The authors investigated a solution they called GreenBioBERT that relies first on a Word2vec training stage on target-domain texts (PubMed, PMC, CORD-19), and second on the alignment of word vectors with the vectors from BioBERT. They applied their model to two problems: eight biomedical NER tasks in English, and question-answering (QA) on the COVID-19 issue. The authors achieved results competitive with BioBERT, with a better precision on a few tasks; on the COVID-19 QA task, their model achieved better results than the SQuADBERT model (designed for QA). In this paper, the authors proposed a useful method for adapting existing pretrained language models to new datasets, new tasks, new languages, etc.

Ive J, Viani N, Kam J, Yin L, Verma S, Puntis S, Cardinal R, Roberts A, Stewart R, Velupillai S

Generation and evaluation of artificial mental health records for Natural Language Processing

NPJ Digit Med 2020;3:69

The main problem for biomedical NLP is the difficult access to clinical documents and the inherent complexity of completely de-identifying documents. The solution proposed by the authors consists in generating artificial discharge summaries. In this paper, the authors produced artificial summaries in mental health, based on the MIMIC-III data. Then, they used their artificial texts to train models for classification tasks using the Keras toolkit. The authors observed that models trained on their synthetic data perform as well as models trained on real data. Since the synthetic discharge summaries were produced from the MIMIC-III data, the authors cannot share their resources. Nevertheless, the method is reproducible.

References

- 1. Nadkarni P M, Ohno-Machado L, Chapman W W. Natural Language Processing: an introduction. J Am Med Inform Assoc. 2011;18:544–51. doi: 10.1136/amiajnl-2011-000464.

- 2. Friedman C, Hripcsak G. Natural language processing and its future in medicine. Acad Med. 1999;74(08):890–5. doi: 10.1097/00001888-199908000-00012.

- 3. Devlin J, Chang M-W, Lee K, Toutanova K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding [Internet] 2019. Available from: https://arxiv.org/abs/1810.04805v2

- 4. Lee J, Yoon W, Kim S, Kim D, Kim S, So C H. BioBERT: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics. 2020;36(04):1234–40. doi: 10.1093/bioinformatics/btz682.

- 5. de Vries W, Van Cranenburgh A, Bisazza A, Caselli T, van Noord G, Nissim M. BERTje: A Dutch BERT Model [Internet] 2019. Available from: https://arxiv.org/abs/1912.09582v1

- 6. Le H, Vial L, Frej J, Segonne V, Coavoux M, Lecouteux B. FlauBERT: Unsupervised Language Model Pre-training for French. In: Proc of LREC. Marseille, France: European Language Resources Association; 2020. p. 2479–90.

- 7. Martin L, Muller B, Ortiz Suárez P J, Dupont Y, Romary L, de la Clergerie É. CamemBERT: a tasty French language model. In: Proc. of ACL. Association for Computational Linguistics; 2020. p. 7203–19.

- 8. Scheible R, Thomczyk F, Tippmann P, Jaravine V, Boeker M. GottBERT: a pure German Language Model [Internet] 2020. Available from: arXiv preprint arXiv:2012.02110

- 9. Polignano M, Basile P, de Gemmis M, Semeraro G, Basile V. AlBERTo: Italian BERT Language Understanding Model for NLP Challenging Tasks Based on Tweets. In: Proc of CLiC-it, volume 2481. CEUR; 2019.

- 10. Canete J, Chaperon G, Fuentes R, Ho J-H, Kang H, Pérez J. Spanish Pre-Trained BERT Model and Evaluation Data. In: PML4DC at ICLR; 2020.

- 11. Jain S, Tang G, Chi L S. MRCBert: A Machine Reading Comprehension Approach for Unsupervised Summarization [Internet] 2021. Available from: https://arxiv.org/abs/2105.00239v1

- 12. Rajpurkar P, Zhang J, Lopyrev K, Liang P. SQuAD: 100,000+ questions for machine comprehension of text. In: Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing. Austin, Texas: Association for Computational Linguistics; 2016. p. 2383–92.

- 13. Mikolov T, Chen K, Corrado G, Dean J. Efficient Estimation of Word Representations in Vector Space [Internet] 2013. Available from: https://arxiv.org/abs/1301.3781v3

- 14. Pennington J, Socher R, Manning C D. GloVe: Global Vectors for Word Representation. In: Empirical Methods in Natural Language Processing (EMNLP); 2014. p. 1532–43.

- 15. Peters M E, Neumann M, Iyyer M, Gardner M, Clark C, Lee K, Zettlemoyer L. Deep contextualized word representations. In: Proc. of NAACL; 2018.

- 16. Radford A, Wu J, Child R, Luan D, Amodei D, Sutskever I. Language Models are Unsupervised Multitask Learners. 2019. Available from: https://d4mucfpksywv.cloudfront.net/better-language-models/language-models.pdf

- 17. Brown T B, Mann B, Ryder N, Subbiah M, Kaplan J, Dhariwal P. Language Models are Few-Shot Learners [Internet] 2020. Available from: https://arxiv.org/abs/2005.14165v4

- 18. Poerner N, Waltinger U, Schütze H. Inexpensive Domain Adaptation of Pretrained Language Models: Case Studies on Biomedical NER and Covid-19 QA. In: Proc. of EMNLP; 2020. p. 1482–90.

- 19. Jin Q, Tan C, Chen M, Liu X, Huang S. Predicting Clinical Trial Results by Implicit Evidence Integration. In: Proc. of EMNLP; 2020. p. 1461–77.

- 20.Alomari M & Alomari A . Faris H, Habib M, Faris M. Medical speciality classification system based on binary particle swarms and ensemble of one vs. rest support vector machines. J Biomed Inform. 2020;109:103525. doi: 10.1016/j.jbi.2020.103525. [DOI] [PubMed] [Google Scholar]

- 21.Chen C-H, Hsieh J-G, Cheng S-L, Lin Y-L, Lin P-H, Jeng J-H. Early short-term prediction of emergency department length of stay using natural language processing for low-acuity outpatients. Am J Emerg Med. 2020;38(11):2368–73. doi: 10.1016/j.ajem.2020.03.019. [DOI] [PubMed] [Google Scholar]

- 22.Li X, Lin X, Ren H, Guo J. Ontological organization and bioinformatic analysis of adverse drug reactions from package inserts: Development and usability study. J Med Internet Res. 2020;22(07):e20443. doi: 10.2196/20443. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Wang Z, Huang H, Cui L, Chen J, An J, Duan H. Using natural language processing techniques to provide personalized educational materials for chronic disease patients in China: Development and assessment of a knowledge-based health recommender system. JMIR Med Inform. 2020;8(04):e17642. doi: 10.2196/17642. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Wu C, Luo G, Guo C, Ren Y, Zheng A, Yang C. An attention-based multi-task model for named entity recognition and intent analysis of Chinese online medical questions. J Biomed Inform. 2020;108:103511. doi: 10.1016/j.jbi.2020.103511. [DOI] [PubMed] [Google Scholar]

- 25.Xia H, An W, Li J, Zhang Z J. Outlier knowledge management for extreme public health events: Understanding public opinions about COVID-19 based on microblog data. Socioecon Plann Sci. 2020;10(09):41–1. doi: 10.1016/j.seps.2020.100941. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Zhang Z, Zhu L, Yu P. Multi-level representation learning for Chinese medical entity recognition: Model development and validation. JMIR Med Inform. 2020;8(05):e17637. doi: 10.2196/17637. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Krsnik I, Glavas G, Krsnik M, Miletic D, SŠtajduhar I. Automatic annotation of narrative radiology reports. Diagnostics (Basel) 2020;10(04):196. doi: 10.3390/diagnostics10040196. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Güngör O, Güngör T, Uskudarli S. Exseqreg: Explaining sequence-based nlp tasks with regions with a case study using morphological features for named entity recognition. PLoS One. 2020;15(12):e0244179. doi: 10.1371/journal.pone.0244179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Moen H, Hakala K, Peltonen L-M, Matinolli H-M, Suhonen H, Terho K. Assisting nurses in care documentation: from automated sentence classification to coherent document structures with subject headings. J Biomed Semantics2020. 11(01):10. doi: 10.1186/s13326-020-00229-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Grabar N, Dalloux C, Claveau V. CAS: corpus of clinical cases in French. J Biomed Semantics. 2020;11(01):7. doi: 10.1186/s13326-020-00225-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Neuraz A, Lerner I, Digan W, Paris N, Tsopra R, Rogier A. Natural language processing for rapid response to emergent diseases: Case study of calcium channel blockers and hypertension in the COVID-19 pandemic. J Med Internet Res. 2020;22(08):e20773. doi: 10.2196/20773. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Abbood A, Ullrich A, Busche R, Ghozzi S. EventEpi-A natural language processing framework for event-based surveillance. PLoS Comput Biol. 2020;16(11):e1008277. doi: 10.1371/journal.pcbi.1008277. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Ferrario A, Demiray B, Yordanova K, Luo M, Martin M. Social reminiscence in older adults’ everyday conversations: Automated detection using natural language processing and machine learning. J Med Internet Res. 2020;22(09):e19133. doi: 10.2196/19133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Wulff A, Mast M, Hassler M, Montag S, Marschollek M, Jack T.Designing an openEHR-based pipeline for extracting and standardizing unstructured clinical data using natural language processing Methods Inf Med 202059(S02):e64–e78. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Barash Y, Guralnik G, Tau N, Soffer S, Levy T, Shimon O. Comparison of deep learning models for natural language processing-based classification of non-english head ct reports. Neuroradiology. 2020;62(10):1247–56. doi: 10.1007/s00234-020-02420-0. [DOI] [PubMed] [Google Scholar]

- 36.Lanzone J, Cenci C, Tombini M, Ricci L, Tufo T, Piccoli M. Glimpsing the impact of COVID19 lock-down on people with epilepsy: A text mining approach. Front Neurol. 2020;11:870. doi: 10.3389/fneur.2020.00870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Mensa E, Colla D, Dalmasso M, Giustini M, Mamo C, Pitidis A. Violence detection explanation via semantic roles embeddings. BMC Med Inform Decis Mak. 2020;20(01):263. doi: 10.1186/s12911-020-01237-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Viani N, Kam J, Yin L, Bittar A, Dutta R, Patel R. Temporal information extraction from mental health records to identify duration of untreated psychosis. J Biomed Semantics. 2020;11(01):2. doi: 10.1186/s13326-020-00220-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Nakatani H, Nakao M, Uchiyama H, Toyoshiba H, Ochiai C. Predicting inpatient falls using natural language processing of nursing records obtained from Japanese electronic medical records: Case-control study. JMIR Med Inform. 2020;8(04):e16970. doi: 10.2196/16970. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Ujiee S, Yada S, Wakamiya S, Aaramaki E. Identification of adverse drug event-related Japanese articles: Natural language processing analysis. JMIR Med Inform. 2020;8(11):e22661. doi: 10.2196/22661. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Lee M & Kim Y . Cho I. What are the main patient safety concerns of healthcare stakeholders: a mixed-method study of web-based text. Int J Med Inform. 2020;140:104162. doi: 10.1016/j.ijmedinf.2020.104162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Lee K H, Kim H J, Kim Y J, Kim J H, Song E Y. Extracting structured genotype information from free-text hla reports using a rule-based approach. J Korean Med Sci. 2020;35(12):e78. doi: 10.3346/jkms.2020.35.e78. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Eskildsen N K, Eriksson R, Christensen S B, Aghassipour T S, Bygso M J, Brunak S. Implementation and comparison of two text mining methods with a standard pharmacovigilance method for signal detection of medication errors. BMC Med Inform Decis Mak. 2020;20(01):94. doi: 10.1186/s12911-020-1097-0.

- 44.Lopes F, Teixeira C, Oliveira HG. Comparing different methods for named entity recognition in Portuguese neurology text. J Med Syst. 2020;44(04):77. doi: 10.1007/s10916-020-1542-8.

- 45.Graziani D, Soriano J B, Rio-Bermudez C D, Morena D, Díaz T, Castillo M. Characteristics and prognosis of COVID-19 in patients with COPD. J Clin Med. 2020;9(10):3259. doi: 10.3390/jcm9103259.

- 46.Lopez-Úbeda P, Diaz-Galiano M C, Martín-Noguerol T, Luna A, Urena-Lopez L A, Martín-Valdivia M T. COVID-19 detection in radiological text reports integrating entity recognition. Comput Biol Med. 2020;127:104066. doi: 10.1016/j.compbiomed.2020.104066.

- 47.Najafabadipour M, Zanin M, Rodríguez-González A, Torrente M, García B N, Bermudez JLC. Reconstructing the patient's natural history from electronic health records. Artif Intell Med. 2020;105:101860. doi: 10.1016/j.artmed.2020.101860.

- 48.Santiso S, Pérez A, Casillas A, Oronoz M. Neural negated entity recognition in Spanish electronic health records. J Biomed Inform. 2020;105:103419. doi: 10.1016/j.jbi.2020.103419.

- 49.Caccamisi A, Jorgensen L, Dalianis H, Rosenlund M. Natural language processing and machine learning to enable automatic extraction and classification of patients’ smoking status from electronic medical records. Ups J Med Sci. 2020;125(04):316–24. doi: 10.1080/03009734.2020.1792010.

- 50.Wang L L, Lo K, Chandrasekhar Y, Reas R, Yang J, Burdick D. CORD-19: The COVID-19 Open Research Dataset [Internet]. 2020. Available from: https://arxiv.org/abs/2004.10706v4

- 51.Izquierdo J L, Ancochea J, Soriano J B; Savana COVID-19 Research Group. Clinical characteristics and prognostic factors for intensive care unit admission of patients with COVID-19: Retrospective study using machine learning and natural language processing. J Med Internet Res. 2020;22(10):e21801. doi: 10.2196/21801.

- 52.Gates L E, Hamed A A. The anatomy of the SARS-CoV-2 biomedical literature: Introducing the CovidX network algorithm for drug repurposing recommendation. J Med Internet Res. 2020;22(08):e21169. doi: 10.2196/21169.

- 53.Ebadi A, Xi P, Tremblay S, Spencer B, Pall R, Wong A. Understanding the temporal evolution of COVID-19 research through machine learning and natural language processing. Scientometrics. 2020;11:1–15. doi: 10.1007/s11192-020-03744-7.

- 54.Wang L L, Lo K. Text mining approaches for dealing with the rapidly expanding literature on COVID-19. Brief Bioinform. 2021;22(02):781–99. doi: 10.1093/bib/bbaa296.

- 55.Doanvo A, Qian X, Ramjee D, Piontkivska H, Desai A, Majumder M. Machine learning maps research needs in COVID-19 literature. Patterns (N Y). 2020;1(09):100123. doi: 10.1016/j.patter.2020.100123.

- 56.Boon-Itt S, Skunkan Y. Public perception of the COVID-19 pandemic on Twitter: Sentiment analysis and topic modeling study. JMIR Public Health Surveill. 2020;6(04):e21978. doi: 10.2196/21978.

- 57.Dyer J, Kolic B. Public risk perception and emotion on Twitter during the Covid-19 pandemic. Appl Netw Sci. 2020;5(01):99. doi: 10.1007/s41109-020-00334-7.

- 58.Mackey T K, Li J, Purushothaman V, Nali M, Shah N, Bardier C. Big data, natural language processing, and deep learning to detect and characterize illicit COVID-19 product sales: Infoveillance study on Twitter and Instagram. JMIR Public Health Surveill. 2020;6(03):e20794. doi: 10.2196/20794.

- 59.Picone M, Inoue S, Defelice C, Naujokas M F, Sinrod J, Cruz V A. Social listening as a rapid approach to collecting and analyzing COVID-19 symptoms and disease natural histories reported by large numbers of individuals. Popul Health Manag. 2020;23(05):350–60. doi: 10.1089/pop.2020.0189.

- 60.Themistocleous C, Webster K, Afthinos A, Tsapkini K. Part of speech production in patients with primary progressive aphasia: An analysis based on natural language processing. Am J Speech Lang Pathol. 2021;30(15):466–80. doi: 10.1044/2020_AJSLP-19-00114.

- 61.Reeves S, Williams V, Costela F M, Palumbo R, Umoren O, Christopher M M. Narrative video scene description task discriminates between levels of cognitive impairment in Alzheimer's disease. Neuropsychology. 2020;34(04):437–46. doi: 10.1037/neu0000621.

- 62.Chojnicka I, Wawer A. Social language in autism spectrum disorder: A computational analysis of sentiment and linguistic abstraction. PLoS One. 2020;15(03):e0229985. doi: 10.1371/journal.pone.0229985.

- 63.Ive J, Viani N, Kam J, Yin L, Verma S, Puntis S. Generation and evaluation of artificial mental health records for natural language processing. NPJ Digit Med. 2020;3:69. doi: 10.1038/s41746-020-0267-x.

- 64.Senior M, Burghart M, Yu R, Kormilitzin A, Liu Q, Vaci N. Identifying predictors of suicide in severe mental illness: A feasibility study of a clinical prediction rule (Oxford Mental Illness and Suicide tool or OxMIS). Front Psychiatry. 2020;11:268. doi: 10.3389/fpsyt.2020.00268.

- 65.Levis M, Westgate C L, Gui J, Watts B V, Shiner B. Natural language processing of clinical mental health notes may add predictive value to existing suicide risk models. Psychol Med. 2020:1–10. doi: 10.1017/S0033291720000173.

- 66.Jayasinghe L, Bittar A, Dutta R, Stewart R. Clinician-recalled quoted speech in electronic health records and risk of suicide attempt: a case-crossover study. BMJ Open. 2020;10(04):e036186. doi: 10.1136/bmjopen-2019-036186.

- 67.Hart K L, Pellegrini A M, Forester B P, Berretta S, Murphy S N, Perlis R H. Distribution of agitation and related symptoms among hospitalized patients using a scalable natural language processing method. Gen Hosp Psychiatry. 2021;68:46–51. doi: 10.1016/j.genhosppsych.2020.11.003.

- 68.Colling C, Khondoker M, Patel R, Fok M, Harland R, Broadbent M. Predicting high-cost care in a mental health setting. BJPsych Open. 2020;6(01):e10. doi: 10.1192/bjo.2019.96.

- 69.Straw I, Callison-Burch C. Artificial intelligence in mental health and the biases of language based models. PLoS One. 2020;15(12):e0240376. doi: 10.1371/journal.pone.0240376.

- 70.Hernandez-Boussard T, Blayney D W, Brooks J D. Leveraging digital data to inform and improve quality cancer care. Cancer Epidemiol Biomarkers Prev. 2020;29(04):816–22. doi: 10.1158/1055-9965.EPI-19-0873.

- 71.Ford E, Oswald M, Hassan L, Bozentko K, Nenadic G, Cassell J. Should free-text data in electronic medical records be shared for research? A citizens’ jury study in the UK. J Med Ethics. 2020;46(06):367–77. doi: 10.1136/medethics-2019-105472.

- 72.Nawab K, Ramsey G, Schreiber R. Natural language processing to extract meaningful information from patient experience feedback. Appl Clin Inform. 2020;11(02):242–52. doi: 10.1055/s-0040-1708049.

- 73.Tighe P J, Sannapaneni B, Fillingim R B, Doyle C, Kent M, Shickel B. Forty-two million ways to describe pain: Topic modeling of 200,000 PubMed pain-related abstracts using natural language processing and deep learning-based text generation. Pain Med. 2020;21(11):3133–60. doi: 10.1093/pm/pnaa061.

- 74.Cuffy C, Hagiwara N, Vrana S, McInnes B T. Measuring the quality of patient-physician communication. J Biomed Inform. 2020;112:103589. doi: 10.1016/j.jbi.2020.103589.

- 75.Agarwal S, Guntuku S C, Robinson O C, Dunn A, Ungar L H. Examining the phenomenon of quarter-life crisis through artificial intelligence and the language of Twitter. Front Psychol. 2020;11:341. doi: 10.3389/fpsyg.2020.00341.

- 76.Cammel S A, De Vos M S, van Soest D, Hettne K M, Boer F, Steyerberg E W. How to automatically turn patient experience free-text responses into actionable insights: a natural language programming (NLP) approach. BMC Med Inform Decis Mak. 2020;20(01):97. doi: 10.1186/s12911-020-1104-5.

- 77.Stevens R, Bonett S, Bannon J, Chittamuru D, Slaff B, Browne S K. Association between HIV-related tweets and HIV incidence in the United States: Infodemiology study. J Med Internet Res. 2020;22(06):e17196. doi: 10.2196/17196.

- 78.Tassone J, Yan P, Simpson M, Mendhe C, Mago V, Choudhury S. Utilizing deep learning and graph mining to identify drug use on Twitter data. BMC Med Inform Decis Mak. 2020;20(Suppl 11):304.