Abstract

Time-course gene-expression data have been widely used to infer regulatory and signaling relationships between genes. Most of the widely used methods for such analysis were developed for bulk expression data. Single cell RNA-Seq (scRNA-Seq) data offer several advantages including the large number of expression profiles available and the ability to focus on individual cells rather than averages. However, the data also raise new computational challenges. Using a novel encoding for scRNA-Seq expression data, we develop deep learning methods for interaction prediction from time-course data. Our methods use a supervised framework which represents the data as 3D tensor and train convolutional and recurrent neural networks for predicting interactions. We tested our time-course deep learning (TDL) models on five different time-series scRNA-Seq datasets. As we show, TDL can accurately identify causal and regulatory gene–gene interactions and can also be used to assign new function to genes. TDL improves on prior methods for the above tasks and can be generally applied to new time-series scRNA-Seq data.

Keywords: deep learning, time-course data, single cell RNA-Seq

Introduction

Inferring gene relationships and function from expression data has been a major focus of computational biology over the last two decades [1, 2]. While many of these methods were developed for, and applied to, static data, time-series data may be even more appropriate for such analysis. By profiling genes over time, researchers can identify not just correlations (that may imply interactions) but also causation [3]. Indeed, several methods have been developed and used to infer causal interactions from time-series gene-expression data. Some of these methods were originally developed for static data and later applied to time-series as well [4, 5]. Other methods were specifically developed for time-series data [6]. These methods ranged from alignment methods [7] to regression analysis [5], methods based on Granger causality inference [3, 6] and methods utilizing various forms of graphical models [8, 9].

All of the methods discussed above were developed for bulk expression analysis. Recently, researchers have been performing time-series studies using single cell RNA-sequencing (scRNA-Seq) [10–13]. While such studies provide much more detailed information about the genes and cell types involved in the process, they also raise new challenges. A major issue for using such data to infer gene relationships is the fact that cells profiled in a specific time point cannot be tracked. Thus, it is not clear which cell in the next time point is a descendent (or closely related) to a specific cell in the previous time point making it hard to determine exact trajectories for genes. Another challenge arises from the large number of cells being profiled at each time point. Finally, the fact that cells in a time point may be from several different types and may not be fully synchronized [14] makes it harder to establish a specific pattern for temporal analysis.

To address these issues, some methods first perform pseudo-time ordering of the cells [15] followed by regression or correlation analysis [16]. While such methods can indeed identify some of the casual interactions, they depend very strongly on specific assumptions including the accuracy of the ordering achieved, the expected time lag (in regression) or the format of the interaction (in the case of correlation).

We have recently developed the convolutional neural network for coexpression (CNNC) analysis method which is focused on inferring gene–gene relationships from static scRNA-Seq data [17]. Unlike prior methods for inferring interactions, CNNC does not make any assumptions about the specific attributes or correlations that can be observed for interacting genes. Instead, it uses a supervised framework to train a CNN for predicting such interactions. The main novel idea of CNNC is the way gene-expression data are represented. While most methods represent such data as vectors (for a single cell) or matrix (for a collection of cells) CNNC converts expression data into image-like histogram representation. This enables CNNC to take full advantage of the ability of CNNs to utilize local substructures in the data.

While CNNC can be directly applied to time-series scRNA-Seq data, such application ignores the temporal information and so does not lead to optimal results as we show. Here, we present two extensions of CNNC and show that by explicitly considering the temporal information we can greatly improve the performance of the method. The first extension we consider is converting the input from a 2D to a 3D representation of the joint probability function over time, while still using a CNN. The second uses a different type of deep NN termed long short-term memory (LSTM), which is specifically appropriate for time-series analysis [18]. By using LSTM we can encode both, an image for each time point and the way the images are related over time enabling the method to infer interactions both within and across time. We term the new methods we develop time-course deep learning (TDL). We discuss how to formulate TDL models, how to train them and learn parameters for them and how to apply them to time-series scRNA-Seq data. Testing the methods on several recent time-series scRNA-Seq datasets, we show that they can accurately infer interactions, causality and assign function to genes and that TDL improves upon current and prior methods suggested for these tasks.

Methods

To utilize the advantages of convolutional neural networks (CNNs), we encode time-series scRNA-Seq data using a 2D or 3D histogram termed normalized empirical probability-density function (NEPDF). These inputs encode, for each pair of genes, the co-occurrence frequency of their values either across cells in all time points (2D NEPDF) or for all cells in a specific time point (3D NEPDF). Next, we use these as features and train a TDL model using a supervised computational framework to predict causality, infer interactions and assign function to genes.

Data used

To test the ability of our TDL methods to predict interaction and causal relationships, we used time-series scRNA-Seq data from four mouse and human embryonic stem cell studies (mESC and hESC). Data were downloaded from the accession numbers of GSE79578, GSE65525, E-MTAB-3929 and GSE75748 [11, 19–21]. The two mESC datasets profiled 3456 and 2718 cells with nine and four time points, respectfully. The hESC datasets profiled 1530 and 758 cells, both in six time points (we removed the first time point for the first hESC dataset since it contains much less cells than other time points). We also used mouse brain time-course single-cell data (cortex cells with three time points (GSE104158) [10]) when testing our method’s ability to infer new functional genes. Count expression data were normalized so that all cells had the same total value of 10 000. Some of the datasets provided RPKM data, so we adopted it directly. Genes that are never expressed were filtered out. See Table 1 for the dataset summary.

Table 1.

Summary for scRNA-Seq datasets used to test TDL

Ground truth interactions

TDL methods rely on a supervised learning framework so need both positive and negative inputs for training. We used TF-gene regulation information to construct the skeleton of TF-gene networks and then defined the edges as positive pairs for training and testing for both causality and interaction-prediction tasks. To determine TF’s regulated genes, we used mESC and hESC ChIP-seq data. We relied on a strict peak P-value cutoff to identify binding sites (P value < 10−400 based on MACS2 [22]). We next defined a ‘promoter region’ as 10 Kb upstream and 1 Kb downstream from the TSS of each gene and assigned TFs to regulate genes if a peak for that TF was identified in the promotor region for the gene as has been previously done [17, 23]. Genes whose promoter region had a significant peak were regarded as positive targets, while those that did not were used as the negative set. To test the accuracy of the functional gene assignments, we downloaded from GSEA [24] 680 cell cycle, 93 rhythm, 184 immune and 137 proliferation genes.

2D and 3D NEPDF input construction

The input to our TDL methods is a 3D image-like NEPDF. For a gene pair (a, b), 2D and 3D NEPDF are generated as follows: Expression ranges for all genes across all cells are divided into 16 equal bins in log space. Next, expression values of gene pairs in each cell were used to compute a 16 × 16 matrix where each entry (i, j) represents the co-occurrence frequency of gene pair (a,b) with the ith, and the jth expression level, respectively. Due to drop-out in scRNA-seq data, the value in the zero–zero position always dominates the entire matrix. To solve this problem, one more log transformation with a psedudo-count was applied to every entry to generate the final 2D normalized matrix. A 3D NEPDF is generated in a similar way by constructing a separate 2D NEPDF for each of the time points in the input data. For the 3D NEPDF, we used an 8 × 8 matrix for each time point rather than 16 × 16 since the number of cells in each time point is much smaller than the total number of cells. Thus, the 3D NEPDF is a tensor with dimension of #time point (T) × 8 × 8. We note that while the dimensions listed above are the ones used in the paper, we tried other dimensions as well for both the 2D and 3D representations. To select the optimal dimension for each method, we performed analysis of various potential input sizes, and determined that the optimal size for TDL model is #time point (T) × 8 × 8 and that the optimal input size for CNNC is 16 × 16. See Figures S6 and S7 available online at https://academic.oup.com/bib for details. In the 3D tensor, each column (row) represents gene a (b)‘s expression level, while each depth represents the time point t in biological experiment settings. As a result, the entry in the 3D NEPDF represents the normalized frequency (probability) of co-occurrence of its corresponding gene pair expression in time point t. Figure 1, presents an overview of the encoding. See Supporting Methods for details about constructing 2D and 3D NEPDF, normalization of the histogram data and transformations used to overcome dropouts and the large concentration that is often observed at the [0,0] point.

Figure 1 .

TDL model architecture. To infer gene interactions (top left), we first convert time-course single cell expression data to 3D tensor, which we term NEPDF. Each 2D slice of the NEPDF captures the co-expression of a pair of genes at one of the time points profiled and the 3D NEPDF represents their co-expression over time. 3D NEPDF is then used as input to a TDL model. The model is trained using labeled positive and negative pairs. The figure shows the convolutional LSTM architecture which is one of the two TDL models we tested. This model consists of LSTM layer, followed by a dense layer which concatenates all convolutional hidden state from LSTM layer and then a final output (classification) layer. See Figure S1 available online at https://academic.oup.com/bib for the other TDL architecture we tested, 3D CNN.

Architectures for TDL models

While our previous models can use the 2D representation [17], this representation does not utilize the temporal information. We have thus developed two TDL models that work directly on the 3D tensor input. The output of all models is a N-dimension (ND) vector, where N depends on specific task on which the TDL is trained (for example, N = 1 for interaction predictions and three for interaction and causality predictions). The first TDL model we consider is termed 3D CNN and is a direct extension of the 2D CNNC method [17]. In general, 3D CNN consists of one T × 8 × 8 tensor input layer, several intermediate 3D convolutional layers, Max pooling layers, one flatten layer and a ND classification layer (see Figure S1 available online at https://academic.oup.com/bib for structure details).

While CNNs can utilize time-series data if the data are encoded as 3D tensor, they are not intended for time-series or sequential data. Another type of neural network, termed recurrent neural network (RNN) [25, 26] is more appropriate for such data. In RNNs, the activation function used for subsequent inputs (for example, later time points) uses the values learned from previous inputs (earlier time points) (Figure 1). Specifically, for time t the network computes the following value for the hidden layer:

|

(1) |

where  is a hidden state computed for the previous time point and

is a hidden state computed for the previous time point and  is the input for time t.

is the input for time t.  and

and  are the (learned) weight matrices,

are the (learned) weight matrices,  is an activation function and

is an activation function and  is the bias term. We compute the output by setting:

is the bias term. We compute the output by setting:

|

(2) |

where  is the output at time t, and

is the output at time t, and  is an activation function. See Figure S2 available online at https://academic.oup.com/bib for details on the RNN architecture and its unrolled sequential structure.

is an activation function. See Figure S2 available online at https://academic.oup.com/bib for details on the RNN architecture and its unrolled sequential structure.

While successful, RNN can suffer from gradient-vanishing or gradient-explosion problems [27]. A popular way to overcome these problems is to use a variant of RNN termed LSTM [28]. It utilizes a cell memory unit, and combines the current (time point) input, the previous cell memory and the computed hidden state to update the cell memory. An output gate is used to control the information propagation from cell memory to hidden state.

|

(3) |

|

(4) |

|

(5) |

|

(6) |

|

(7) |

where  is the sigmoid function,

is the sigmoid function,  are the input gate, forget gate, output gate, cell and hidden state vectors at time t and

are the input gate, forget gate, output gate, cell and hidden state vectors at time t and  represents Hadamard product.

represents Hadamard product.  serves as both, input to the next layer over time and the output for the final state in the model.

serves as both, input to the next layer over time and the output for the final state in the model.

The original LSTM method uses a fully connected NN. However, for our time-course 3D NEPDF, we also need to account for spatial information encoded for each time point. As a result, we use a convolutional version of LSTM. The conv-LSTM uses the following functions:

|

(8) |

|

(9) |

|

(10) |

|

(11) |

|

(12) |

where  is the sigmoid function, and

is the sigmoid function, and  are the input gate, forget gate, output gate, cell vector at time t and

are the input gate, forget gate, output gate, cell vector at time t and  represents convolution operator. All hidden states are then converted as a vector followed by classifier. See Figures S3 and S4 available online at https://academic.oup.com/bib for complete structure details of conv-LSTMs we used.

represents convolution operator. All hidden states are then converted as a vector followed by classifier. See Figures S3 and S4 available online at https://academic.oup.com/bib for complete structure details of conv-LSTMs we used.

Train and test strategy

We used a threefold cross-validation strategy to train and test models for all tasks. We separated the data to avoid information leakage: For the gene causality and interaction prediction tasks, the generated NEPDF was separated based on the TFs. In other word, all predictions related to a specific TF were used either only in training or only in testing. In each fold, we computed AUROC for each left-out TF and then the curves and values for TFs in each fold were combined in the figures presented. For gene-function assignment, NEPDFs for training did not use any of the test genes to avoid information leakage. We randomly selected genes not in the known (positive) function gene set as negative genes. Positive (negative) genes were split into positive (negative) train and test gene set. For the train (test) set, all possible gene pairs where the first gene is in the positive training set and the second is in the positive train (test) set were treated as positive pairs, and pairs where the first gene is in the positive training set and the second from the negative train (test) set are treated as negative. All the deep learning models were trained with the stochastic gradient descent (SGD) algorithm using a maximum training epoch of 100. Accuracy of the internal validation set was monitored for early stopping. See Table 2 for runtime information based on the size of the input and Table S1, available online at https://academic.oup.com/bib, for the time of all models for interaction task.

Table 2.

Run times (second per epoch) for deep learning methods for the hESC1 dataset

| Input size | 3D CNN | Deep conv-LSTM | 2D CNNC |

|---|---|---|---|

| 5 × 4 × 4 | 10 | 24 | – |

| 5 × 8 × 8 | 35 | 57 | – |

| 5 × 16 × 16 | 177 | 360 | – |

| 16 × 16 | – | – | 9 |

Brief manual for using TDL

We discussed above in detail the methods and algorithms developed for each of the four steps required for using TDL. Below we provide a brief manual on how TDL is used to analyze a new time-series scRNA-Seq dataset. The TDL software pipeline contains the following steps: data processing and label assignment, generation of NEPDFs for training and test data, model training and application of the model to predict new interaction pairs. Figure S10, available online at https://academic.oup.com/bib, and the TDL github website provide a detailed overview of the pipeline we implemented for using TDL. The pipeline requires the following information and input from the users:

Step 1. Users provide a list of known interaction gene pairs for the condition/dataset they are studying and a gene reference list including all profiled genes. These are uploaded as files using the “gene_pair_set_generation.py’ function (see the github supporting website for technical details). TDL uses the uploaded list and a set of random pairs (from genes on the list that are not listed as interacting) as positive and negative samples, respectively, for training the RNN.

Step 2. Users provide a normalized scRNA-Seq expression dataset for all genes [including genes in the pairs provided in step (1) but also the other genes which would be used for testing in step (4)], in which each row represents one cell and each column represents one gene. The expression data and the gene pair list from step (1) are uploaded using the command line ‘3D_tensor_generation.py’ (see the github supporting website). TDL uses these data to create time-course 3D NEPDF tensor for all pairs selected in step (1).

Step 3. This step does not require user input. In step (3) TDL uses the NEPDFs from step 2 and labels from step 1 to train the RNN using cross validation analysis as described above.

Step 4. This step provides predictions for gene-pair set provided by the user or for all pairs in the users uploaded scRNA-Seq data from step 2. Here, the new NEPDF tensor is created for the gene pair set using the same command line used in step 2. Then, the trained model and the new NEPDF dataset are uploaded using the command line of ‘conv_lstm_deep_prediction.py’ (see our github supporting website). The output is a prediction for each pair of genes based on the trained RNN (the predicted label depends on the type of label provided in step 1 (interacting or not, same function or causal interactions).

Results

Causality predictions using TDL models

One of the major advantages of time-series when compared to static expression data is the ability to use lagged relationships between expression profiles to infer the direction (causality) of gene–gene interactions [7, 29]. We thus first tested if our TDL models can correctly infer the direction of interaction for TF-gene pairs since for these we know the regulator and the direction. For this, we used two mouse embryonic stem cell (mESC) and two human embryonic stem cell (hESC) time-course SC datasets and mESC, and hESC ChIP-seq data from the gene transcription regulation database (GTRD) [30]. We used 38 and 36 TFs for testing the two mESC expression datasets due to differences in coverage of the two datasets. For both hESC data, we used 36 TFs. We trained all models with the following labeled data: A TF a and its regulated target gene b, (a, b) will be assigned a label of 1 while the label for (b, a) is 0. In other words, the goal is to use the time-series data to infer not which genes are interacting but rather the direction of the interaction.

We compared the TDL approaches (3D CNN and Conv-LSTM) with a 2D CNNC [17] (which is also supervised), and unsupervised methods that utilize Granger-causality and lagged-regression analysis to infer the interaction direction [6]. The Granger-causality inference method was applied to three of the four datasets since the second (mESC2) only had four time points which turned out to be less than the minimal number of time points required by the method. We tested two versions for the method. The first strategy uses the average expression of genes in each time point for the analysis, while the second first orders all cells using on the pseudo-time ordering determined by Monocle3 [31] and based on this order performs the regression analysis. We note that we were only able to obtain useful results when using Monocle3 for two of the four datasets (mESC1 and hESC2) and so did not perform ordered analysis for the other two datasets (see Figure S5 available online at https://academic.oup.com/bib for the outcome of pseudo-time ordering for all four datasets). We did not compare causality prediction with other unsupervised methods (Pearson correlation – PC and mutual information – MI) since these are symmetric and so cannot infer directionality information. Threefold cross-validation was used to evaluate all methods. Since each fold contains more than 10 TFs, the area under the receiver operating characteristic curve (AUROC) was calculated for each TF, and the curves for each TF were then collected to compute the final performance for each fold.

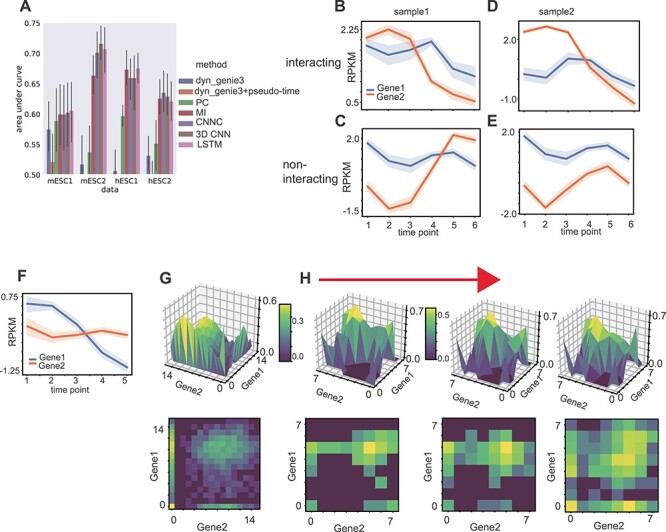

Results presented in Figures 2A and S8, available online at https://academic.oup.com/bib, show that pseudo-time ordering improved the Granger-causality analysis results though using such ordering results for this method is still inferior to deep learning methods. We also observe that the TDL models outperform 2D CNNC on all the datasets (mean AUROC for mESC1, 0.73 versus 0.72; mESC2, 0.67 versus 0.61; hESC1, 0.77 versus 0.73; and hESC2, 0.66 versus 0.65). We also plotted the input representation for a few correctly predicted causal pairs. As expected (Figure 2B–E), we observe that a shift or phase delay is always from the affecting to the affected gene. Unlike prior methods that require the user to specify the lag duration, TDL determines the importance of such shift and the length based on training information only, highlighting the flexibility of the representation and method. We also explored a sample that was only correctly assigned by TDL while the 2D CNNC incorrectly inferred the wrong direction for them (Figure 2F–H). We found that the model trained with the 2D NEPDF (CNNC) focuses on low-expression (marginal) regions, while TDL models focus on temporal dynamics including phase differences which enables them to make the correct assignments.

Figure 2 .

Causality prediction. (A) AUROC of Granger causality (with and without pseudo-time ordering), CNNC, 3D CNN and conv-LSTM on TF-target causality prediction tasks in mESC1, mESC2, hESC1 and hESC2 datasets, respectively. (B–E) Average gene pair expression over time of four pairs that were correctly predicted as gene1 → gene2 (top) and 2 → 1 (bottom) by TDL models in hESC2 dataset. (F–H) The Average gene expression over time (F), and the stereoscopic surface (upper)/heatmap (bottom) of NEPDF used by CNNC (G), and time-course NEPDFs along with down-sampled time point used by TDL (H) for a pair that was correctly predicted as positive by TDL while wrongly predicted as negative by CNNC in hESC1 dataset.

Using TDL to predict TF target genes

Next we tested the ability of TDL models to predict general gene interactions using time-series scRNA-Seq data. Here, we used the same datasets as in the previous section but changed the task performed by the models so that they attempt to find interactions and not just causal relationships. Specifically, the set of pairs we use for training contains known interacting pairs (positive) which are a TF and its known target and negative (random) pairs which contain a TF and a random gene that is determined to be not a target of that TF. We compared TDL models to several prior methods developed for learning interactions from time-series expression data. For all comparisons, we used the labeled training dataset to learn parameters for all supervised models. Specifically, we compared the TDL approaches (3D CNN and Conv-LSTM) with a 2D CNNC [17] (which is also supervised), two popular unsupervised methods, PC and MI [32], and to regression methods for time-course gene expression data. For the regression comparison, we used dyngenie3 [5] which performs random-forest regression and uses it to select the key features (other genes) for each gene in two ways, as discussed before (either using the average expression for each time point or applying the method to pseudo-time ordered cells).

Results are presented in Figure 3A (see Figure S9 available online at https://academic.oup.com/bib for results of all models). As can be seen, the supervised methods outperformed the unsupervised methods (PC and MI) in all tasks. They were also much better than the regression models and were still lower than the TDL results. In addition, the TDL models outperformed 2D CNNC in three of the four datasets. To gain insight about the information the TDL methods utilize to accurately predict interactions we plotted input samples that were correctly predicted as interacting (label of 1) or not-interacting (0) by TDL. As can be seen in Figure 2B and D, unlike 2D-input-based methods, TDL methods can take advantage of phase difference between the two genes to correctly determine that they are interacting. While prior methods have also utilized delayed or time shifted interactions [5], these required explicit choices or search for the duration of the shifted curves. In contrast, TDL were able to infer such shifts without any explicit setting and are flexible adapting to the specific experiments and gene-pair shifts. Note also that simple correlation may not be enough to infer interactions. For example, in the hESC2 dataset, the two genes presented in Figure 2C and E are highly correlated and yet the TDL methods correctly inferred that they are not interacting. It is well known that gene-expression correlation often results from co-regulation of pairs of genes and is not always the result of direct interactions. The fact that TDL models were able to infer such phenomena from training data is a strong indication for their flexibility and ability to focus on relevant information. We also plotted positive samples that were correctly predicted by TDL model (Figure 2F–H) while wrongly assigned as not interacting by the 2D CNNC. We again observe that the models seem to focus on both phase delay between genes along the time axes (Figure 2F), and dynamics among 2D NEPDF in each time point (Figure 2H).

Figure 3 .

TF target prediction. (A) AUROC of dyngenie3, dyngenie3 with pseudo-time ordering by Monocle3, Pearson correlation (PC), mutual information (MI), CNNC, 3D CNN and conv-LSTM on TF-target prediction in mESC1, mESC2, hESC1 and hESC2 datasets respectively. (B–E) Average gene pair expression along with time point of typical samples that were correctly predicted as interacting gene pairs and non-interacting gene pairs by TDL models in hESC2 dataset. (F–H) Average gene pair expression along with time point (F), stereoscopic surface (upper)/heatmap (bottom) of NEPDF used by CNNC (G) and time-course NEPDF along with down-sampled time point used by TDL (H) of a sample that was correctly predicted as positive by TDL while wrongly predicted as negative by CNNC in hESC1 dataset.

To explore additional interactions beyond TF–gene relationships, we next selected the top 1000 highly expressed genes from the MESC2 dataset, and used a model trained using TF-target pairs to score all possible gene pairs among these 1000 genes. Manual inspection showed that among the top 10 predicted pairs (none of which include TFs used for training), five are supported by recent studies (Table 3). For example, ‘ndufa4’ is known to be upregulated by ‘apoe4’ [33], and ‘igfbp2’ and ‘hist1h2ao’ are both involved in a pathway activated for Alcohol and Stress Responses [34].

Table 3.

Top predicted gene pairs based on conv-LSTM

| Gene1 | Gene2 | Reference |

|---|---|---|

| igfbp2 | hist1h2ao | Both are involved in Alcohol and Stress Responses [34] |

| apoe | slc2a1 | Both are AD-associated genes regulating LRP1 function [35] |

| apoe | rpl14-ps1 | |

| cd63 | ndufa4 | Both are highly expressed genes in the choroid plexus in normal physiological conditions [36] |

| lgals1 | hist1h2ap | |

| cd63 | cyc1 | |

| cd63 | sugt1 | |

| apoe | ndufa4 | Ndufa4 is upregulated by apoe [33] |

| ctsl | hist1h2ao | |

| apoe | dnmt3b | Apoe is overexpressed in HSPCs isolated from dnmt3b7 transgenic embryos identified [37] |

Table S1, available online at https://academic.oup.com/bib, presents the run time for the different NN methods we compared. As can be seen, while TDL models improve on 2D CNNC, their run time is much larger [when using the optimal input resolution for TDL (8 × 8) and for 2D (16 × 16)].

Using TDL for assigning functions to genes

TDL can also be used to predict indirect or functional gene relationships. For example, there are hundreds of genes involved in cell-cycle-related activities. All of them share the same functional annotation though most do not interact. We then used TDL to identify new functions for genes based on known genes encoding that function. For this, we focused on the following categories: cell cycle, rhythm, immune and proliferation.

We downloaded the relevant gene sets from GSEA [24]. We used 2/3 of the genes for each function for training and the remaining genes for testing. For the function prediction tasks, we used mouse brain time-course single-cell data since the data contain many more cells (21 K cells) than the mESC and hESC datasets.

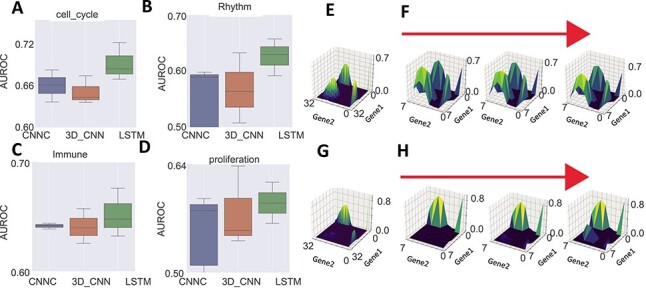

As can be seen in Figure 4A–D, for cell-cycle function-assignment, conv-LSTM achieves an AUROC of 0.72, compared to 0.68 for CNNC and 0.67 for 3D CNN. Conv-LSTM also outperforms other methods significantly for all functions. To explore the reason for TDL’s improvement over CNNC, we selected two positive samples that were correctly predicted by conv-LSTM, while 2D CNNC predicts one correctly as cell cycle (Figure 4E and F) and the other, incorrectly, as not (Figure 4G and H). We then plotted the 2D and 3D NEPDF used by the two methods. As can be seen, for samples correctly predicted by both conv-LSTM and CNNC, both 2D and 3D NEPDF display cyclic-like patterns. However, for the ones on which 2D CNNC makes mistakes we observe such dynamics for only a subset of the time points (either due to noise or sampling size) which can still be identified by conv-LSTM, but obscures the average computed by 2D CNNC.

Figure 4 .

Function assignment. (A–D) AUROC of CNNC, 3D CNN and conv-LSTM on the function prediction task for cell cycle, rhythm, immune and proliferation genes respectively. (E, F) The 2D NEPDF used by CNNC and 3D NEPDF used by conv-LSTM for a positive pair which both CNNC and conv-LSTM correctly classified. (G, H) 2D and 3D NEPDF for a pair that was correctly classified by conv-LSTM while incorrectly classified by CNNC.

Discussion

Several computational methods have been developed for inferring interactions and causality from time-series gene-expression data. Most of these methods were developed for bulk data and while some have been also applied to time-series scRNA-Seq data, such application is not straightforward. First, in scRNA-Seq analysis there are many more expression profiles when compared to bulk datasets. Second, the ordering of the single cells is much less clear when compared to time-series bulk data. Finally, various noise and drop-out issues make scRNA-Seq analysis more challenging.

To address these problems, we developed TDL methods for the analysis of time-series scRNA-Seq data. TDL models rely on encoding for gene-expression data as images or a series of images. Each image captures the histogram of a pair of genes for a specific time point and the series of images capture how such histograms evolve over time. Training TDL with known positive and negative pairs leads to models that can infer interactions from such inputs and can be applied to all unknown pairs to predict interactions, causality and function.

We tested TDL using five time-series scRNA-Seq datasets ranging in size from 700 to 21 000 cells. TDL models were used to perform several tasks including predicting the direction of an interaction, predicting gene–gene interaction (targets of TFs), and predicting the function of a gene. In most cases we tested, TDL and CNNC [17] models outperform methods developed for bulk data analysis and methods developed for the analysis of static scRNA-Seq data, indicating that neural network-based methods can improve over traditional methods for predicting interacting pairs. In addition, as we showed, TDL can accurately predict novel interactions that CNNC cannot find. CNNC uses asymmetric NEPDF but cannot rely on changes over time and so cannot accurately predict casual interactions when the overall NEPDF is pretty symmetric as shown in Figure 2G. In contrast, by relying on the dynamics of the observed data TDL can more accurately identify such interactions. Thus, TDL can be used to greatly reduce experimental time, costs and effort when studying interactions in specific cell types. Using TDL we can profile a small subset of TFs in these cells (using bulk ChIP-Seq experiments) and combine the outcome with time-series scRNA-Seq to accurately predict targets for TFs that were not experimentally profiled.

TDL can use two different neural network architectures: conv-LSTM and 3D CNN. conv-LSTM is a natural way to handle dynamic NEPDF data since they contain both image-like and time-course information. However, for short time-series LSTM may not be able to correctly learn temporal dependencies and for these 3D CNN may work better. Since the method allows user to determine training performance, the user can test both architectures and chose the one that works best for the data.

While TDL worked well for the datasets we looked at, there are still several ways in which they can be improved. Determining the optimal dimension for the input NEPDF, which should be a function of the number of cells and the number of time points, is a challenge. In addition, the current architecture is dependent on the number of time points and so the same model cannot be applied to a different dataset, even for the same condition, if the number of time points do not match. Furthermore, in this paper, we did not distinguish between activators and repressors and the method was trained to predict regulation regardless of the role of the TF given the limited number of TFs we had for training. However, in specific applications of the method researchers may indeed be interested in separating the two sets of TFs. In that case, researchers can either train two separate networks, one to predict for activators and one to predict for repressors or they can train a single network with three labels (activator, repressor, not a regulator).

Our TDL models are implemented in Python. Complete code and sample data are available from the supporting website.

Key Points

A novel encoding strategy for time-course single cell RNA-seq data.

A corresponding deep learning framework for inferring gene–gene interactions using the novel encoding.

Demonstration of the usefulness of the method by applying it to several real biological datasets.

Interpretations of the signals the network focuses on and on their biological meaning.

Supplementary Material

Ye Yuan is an associate professor in the Department of Automation, Shanghai Jiao Tong University. His research interests focus on machine learning methods for the analysis and modeling of biological data.

Ziv Bar-Joseph is the FORE Systems Professor of Computational Biology and Machine Learning at CMU. His work focuses on the development of machine learning methods for the analysis, modeling and visualization of time-series high-throughput biological data.

Contributor Information

Ye Yuan, Department of Automation, Shanghai Jiao Tong University, USA.

Ziv Bar-Joseph, FORE Systems Professor of Computational Biology and Machine Learning at CMU, USA.

Funding

National Institutes of Health (1R01GM122096, OT2OD026682, 1R01HL127349 to Z.B.-J.).

Availability and Implementation

Freely available at https://github.com/xiaoyeye/TDL.

References

- 1.Stuart JM, Segal E, Koller D, et al. A gene-coexpression network for global discovery of conserved genetic modules. Science 2003;302:249–55. [DOI] [PubMed] [Google Scholar]

- 2.Marbach D, Costello JC, Kuffner R, et al. Wisdom of crowds for robust gene network inference. Nat Methods 2012;9:796–804. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Finkle JD, Wu JJ, Bagheri N. Windowed Granger causal inference strategy improves discovery of gene regulatory networks. Proc Natl Acad Sci USA 2018;115:2252–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Huynh-Thu VA, Irrthum A, Wehenkel L, et al. Inferring regulatory networks from expression data using tree-based methods. PLoS One 2010;5:e12776. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Huynh-Thu VA, Geurts P. dynGENIE3: dynamical GENIE3 for the inference of gene networks from time series expression data. Sci Rep 2018;8:3384. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Kim S, Putrino D, Ghosh S, et al. A Granger causality measure for point process models of ensemble neural spiking activity. PLoS Comput Biol 2011;7:e1001110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Qian J, Dolled-Filhart M, Lin J, et al. Beyond synexpression relationships: local clustering of time-shifted and inverted gene expression profiles identifies new, biologically relevant interactions. J Mol Biol 2001;314:1053–66. [DOI] [PubMed] [Google Scholar]

- 8.Schulz MH, Devanny WE, Gitter A, et al. DREM 2.0: improved reconstruction of dynamic regulatory networks from time-series expression data. BMC Syst Biol 2012;6:104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Zou M, Conzen SD. A new dynamic Bayesian network (DBN) approach for identifying gene regulatory networks from time course microarray data. Bioinformatics 2005;21:71–9. [DOI] [PubMed] [Google Scholar]

- 10.Mayer C, Hafemeister C, Bandler RC, et al. Developmental diversification of cortical inhibitory interneurons. Nature 2018;555:457–62. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Semrau S, Goldmann JE, Soumillon M, et al. Dynamics of lineage commitment revealed by single-cell transcriptomics of differentiating embryonic stem cells. Nat Commun 2017;8:1096. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Soldatov R, Kaucka M, Kastriti ME, et al. Spatiotemporal structure of cell fate decisions in murine neural crest. Science 2019;364:6444. [DOI] [PubMed] [Google Scholar]

- 13.Shin D, Lee W, Lee JH, et al. Multiplexed single-cell RNA-seq via transient barcoding for simultaneous expression profiling of various drug perturbations. Sci Adv 2019;5:eaav2249. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Wang Z, Jin S, Liu G, et al. DTWscore: differential expression and cell clustering analysis for time-series single-cell RNA-seq data. BMC Bioinformatics 2017;18:270. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Trapnell C, Cacchiarelli D, Grimsby J, et al. The dynamics and regulators of cell fate decisions are revealed by pseudotemporal ordering of single cells. Nat Biotechnol 2014;32:381–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Specht AT, Li JLEAP. Constructing gene co-expression networks for single-cell RNA-sequencing data using pseudotime ordering. Bioinformatics 2017;33:764–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Yuan Y, Bar-Joseph Z. Deep learning for inferring gene relationships from single-cell expression data. Proc Natl Acad Sci USA 2019;116:27151–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Shi X, Chen Z, Wang H, et al. Convolutional LSTM network: a machine learning approach for precipitation nowcasting. In: Advances in Neural Information Processing Systems, 2015;2015:802–10. [Google Scholar]

- 19.Klein AM, Mazutis L, Akartuna I, et al. Droplet barcoding for single-cell transcriptomics applied to embryonic stem cells. Cell 2015;161:1187–201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Chu LF, Leng N, Zhang J, et al. Single-cell RNA-seq reveals novel regulators of human embryonic stem cell differentiation to definitive endoderm. Genome Biol 2016;17:173. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Petropoulos S, Edsgard D, Reinius B, et al. Single-cell RNA-Seq reveals lineage and X chromosome dynamics in human preimplantation embryos. Cell 2016;165:1012–26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Zhang Y, Liu T, Meyer CA, et al. Model-based analysis of ChIP-Seq (MACS). Genome Biol 2008;9:R137. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Schulz MH, Pandit KV, Lino Cardenas CL, et al. Reconstructing dynamic microRNA-regulated interaction networks. Proc Natl Acad Sci USA 2013;110:15686–91. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Subramanian A, Tamayo P, Mootha VK, et al. Gene set enrichment analysis: a knowledge-based approach for interpreting genome-wide expression profiles. Proc Natl Acad Sci USA 2005;102:15545–50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Min S, Lee B, Yoon S. Deep learning in bioinformatics. Brief Bioinform 2017;18:851–69. [DOI] [PubMed] [Google Scholar]

- 26.Mikolov T, Karafiát M, Burget L, et al. Recurrent neural network based language model. In: INTERSPEECH, 11th Annual Conference of the International Speech Communication Association, Makuhari, Chiba, Japan, 2010;2010:1045–8. [Google Scholar]

- 27.Hochreiter S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int J Uncertain Fuzziness Knowl Based Syst 1998;6:107–16. [Google Scholar]

- 28.Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput 1997;9:1735–80. [DOI] [PubMed] [Google Scholar]

- 29.Bar-Joseph Z, Gitter A, Simon I. Studying and modelling dynamic biological processes using time-series gene expression data. Nat Rev Genet 2012;13:552–64. [DOI] [PubMed] [Google Scholar]

- 30.Yevshin I, Sharipov R, Valeev T, et al. GTRD: a database of transcription factor binding sites identified by ChIP-seq experiments. Nucleic Acids Res 2017;45:D61–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Cao J, Spielmann M, Qiu X, et al. The single-cell transcriptional landscape of mammalian organogenesis. Nature 2019;566:496–502. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Song L, Langfelder P, Horvath S. Comparison of co-expression measures: mutual information, correlation, and model based indices. BMC Bioinformatics 2012;13:328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Nuriel T, Peng KY, Ashok A, et al. The endosomal-lysosomal pathway is dysregulated by APOE4 expression in vivo. Front Neurosci 2017;11:702. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Luo J, Xu P, Cao P, et al. Integrating genetic and gene co-expression analysis identifies gene networks involved in alcohol and stress responses. Front Mol Neurosci 2018;11:102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Ramanathan A, Nelson AR, Sagare AP, et al. Impaired vascular-mediated clearance of brain amyloid beta in Alzheimer's disease: the role, regulation and restoration of LRP1. Front Aging Neurosci 2015;7:136. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Marques F, Sousa JC, Coppola G, et al. Transcriptome signature of the adult mouse choroid plexus. Fluids Barriers CNS 2011;8:10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Vasanthakumar A, Zullow H, Lepore JB, et al. Epigenetic control of apolipoprotein E expression mediates gender-specific hematopoietic regulation. Stem Cells 2015;33:3643–54. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.