Abstract

Objective

To identify themes and temporal trends in the sentiment of COVID-19 vaccine-related tweets and to explore variations in sentiment at the national level worldwide and at the U.S. state level.

Methods

We collected English-language tweets related to COVID-19 vaccines posted between November 1, 2020, and January 31, 2021. We used the Valence Aware Dictionary and sEntiment Reasoner (VADER) tool to calculate a compound score for each tweet and classified the sentiment it expressed as positive (compound ≥ 0.05), neutral (−0.05 < compound < 0.05), or negative (compound ≤ −0.05). We applied latent Dirichlet allocation (LDA) to extract the main topics of tweets with positive and negative sentiment. We then performed a temporal analysis to identify time trends and a geographic analysis to explore differences in sentiment between tweets posted from different locations.

Results

Of 2,678,372 COVID-19 vaccine-related tweets, 42.8% expressed positive, 26.9% neutral, and 30.3% negative sentiment. We identified five themes for positive sentiment tweets (trial results, administration, life, information, and efficacy) and five themes for negative sentiment tweets (trial results, conspiracy, trust, effectiveness, and administration). On November 9, 2020, the sentiment score increased significantly (score = 0.234, p = 0.001), then slowly decreased to a neutral level by late December, where it remained until the end of January. At the country level, tweets posted in Brazil had the lowest sentiment score (−0.002), while tweets posted in the United Arab Emirates had the highest (0.162). The overall average sentiment score for the United States was 0.089, with Washington, DC having the highest score (0.144) and Wyoming the lowest (0.036).

Conclusions

Public sentiment on COVID-19 vaccines varied significantly over time and geography. Sentiment analysis can provide timely insights into public sentiment toward the COVID-19 vaccine and guide public health policymakers in designing locally tailored vaccine education programs.

1. Introduction

Twitter has become an important platform for gauging public opinion and is widely used in public health research [1]. It captures real-time public discussions and attitudes and reflects the variety of opinions held in different locations [2], [3], [4], [5], [6]. Twitter has over 166 million daily active users, and the median user posts twice a month [7], [8]. According to a survey of Twitter users in the United States, Twitter users tend to be younger and more educated than the general public [8]. However, they do not differ significantly from the general U.S. population in gender, race, or ethnicity [8].

The COVID-19 outbreak has recently become one of the most urgent issues in public health [9]. In the past few months, with the successful development of COVID-19 vaccines, many countries have begun rolling out vaccinations [10], [11], [12]. At this point, the focus of research should also shift to rapid and effective vaccine rollout [13]. Public sentiment toward COVID-19 vaccines affects whether vaccines can be administered to large populations and thus whether herd immunity can be achieved [14]. Exploring whether public sentiment varies over time and geography will help public health policymakers make timely adjustments to vaccine education programs based on local sentiment. In addition, the topics identified in tweets expressing negative sentiment about COVID-19 vaccines can help guide education and communication efforts.

Previous studies have successfully used tweets to analyze public sentiment toward vaccines other than COVID-19 vaccines. Raghupathi et al. analyzed public sentiment toward vaccination using term frequency–inverse document frequency (TF-IDF) and found that most people expressed concern about newly developed vaccines and diseases [15]. Du et al. developed deep learning models for HPV vaccine-related tweets and found that sentiment changed in early 2017 and varied significantly across U.S. states [16]. Martin et al. analyzed maternal vaccination posts from different countries and identified the main topics of global concern (vaccination promotion, participation of pregnant women in research, and institutional trust and transparency) [17]. Few studies have analyzed public sentiment specifically in COVID-19 vaccine-related tweets on a worldwide scale. For example, Dubey analyzed sentiment in COVID-19 vaccine-related tweets posted only in India between January 14 and January 18, 2021 [18]. Liu et al. collected a small dataset and used the COM-B model to explain behavioral intentions toward COVID-19 vaccines from a social psychological perspective [19]. Other researchers have built transfer learning models to automatically detect tweets expressing behavioral intentions toward COVID-19 vaccines [20]. Müller et al. developed COVID-Twitter-BERT, a BERT-based language model for natural language processing of COVID-19-related tweets, which improves performance by 10–30% over the base BERT model [21]. However, it remains unknown whether public sentiment toward COVID-19 vaccines changed during the initial vaccine launch, or whether it was influenced by geographic location.

This study focused on the early stages of vaccine approval and large-scale rollout, specifically exploring changes in public sentiment following Pfizer's vaccine announcement. We posed two research questions: RQ1) Does public sentiment toward COVID-19 vaccines change before and after Pfizer's vaccine announcement? RQ2) Does public sentiment toward COVID-19 vaccines differ by geographic location? To answer these questions, we calculated sentiment scores for a large set of COVID-19 vaccine-related tweets posted from November 1, 2020, to January 31, 2021, and conducted temporal and geographic analyses.

2. Methods

2.1. Data collection

We used a combination of keywords and hashtags, “(#covid OR covid OR #covid19 OR covid19) AND (#vaccine OR vaccine OR #vacine OR vacine OR vaccinate OR immunization OR immune OR vax)”, to search for English-language COVID-19 vaccine-related tweets posted between November 1, 2020, and January 31, 2021. We used the snscrape package in Python to collect tweet IDs and then used the tweepy package to retrieve the full tweet data. Retweets were excluded with a filter.
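The search query combines two OR-groups with an AND. As a minimal sketch of that logic, the snippet below re-applies the same term groups to already-downloaded tweet text, for example to sanity-check a collected corpus. It does not call snscrape or tweepy; the names `tokenize`, `matches_query`, and `keep_tweet` are hypothetical, and exact whole-token matching is our assumption, so forms such as "vaccines" are not matched even though Twitter's own search may treat them differently.

```python
import re

# Term groups transcribed from the search query in Section 2.1. The spelling
# "vacine" is kept because the original query deliberately includes it.
COVID_TERMS = {"covid", "covid19"}
VACCINE_TERMS = {"vaccine", "vacine", "vaccinate", "immunization", "immune", "vax"}


def tokenize(text):
    """Lowercase word tokens with a leading '#' removed, so '#covid' and 'covid' match alike."""
    return {tok.lstrip("#") for tok in re.findall(r"#?\w+", text.lower())}


def matches_query(text):
    """True when the text has at least one COVID term AND at least one vaccine term."""
    tokens = tokenize(text)
    return bool(tokens & COVID_TERMS) and bool(tokens & VACCINE_TERMS)


def keep_tweet(text, is_retweet):
    """Hypothetical local filter: the keyword query plus exclusion of retweets."""
    return not is_retweet and matches_query(text)
```

Because `\w+` splits on hyphens, "COVID-19" yields the token "covid" and is matched by the first group.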

2.2. Sentiment analysis

Sentiment analysis is an important task in natural language processing that applies rule-based methods, supervised or unsupervised machine learning, or deep learning to classify the sentiment expressed in a text into categories [22]. The most common scheme uses three classes: positive, negative, and neutral. This study applied the Valence Aware Dictionary and sEntiment Reasoner (VADER) [23], a high-performing lexicon- and rule-based tool for computing sentiment scores that is tuned to microblog-like social media text. In its original evaluation on tweets, VADER achieved an F1 score of 0.96, higher than machine learning models and even individual human raters (F1 = 0.84) [23]. VADER analyzes each tweet, including emojis, and produces a normalized compound score ranging from −1 (extremely negative) to +1 (extremely positive). Researchers typically classify texts as positive, neutral, or negative using the following thresholds: positive (compound ≥ 0.05), neutral (−0.05 < compound < 0.05), and negative (compound ≤ −0.05).
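To make the scoring step concrete, the sketch below shows how a summed valence score is squashed into the compound range and then bucketed with the thresholds above. The normalization x / √(x² + α) with α = 15 reflects our reading of the reference vaderSentiment implementation and should be checked against the installed version; the lexicon lookup and rule adjustments that produce the summed score are omitted.

```python
import math


def normalize(score, alpha=15.0):
    """Squash an unbounded summed valence into [-1, 1].

    Assumption: mirrors the x / sqrt(x^2 + alpha) normalization used for
    VADER's compound score, with alpha = 15 as in the reference implementation.
    """
    norm = score / math.sqrt(score * score + alpha)
    return max(-1.0, min(1.0, norm))


def classify(compound, threshold=0.05):
    """Apply the conventional VADER cut-offs used in this study."""
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"
```

In practice the compound score would come directly from the vaderSentiment package, e.g. `SentimentIntensityAnalyzer().polarity_scores(text)["compound"]`, and only `classify` would be applied to it.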

2.3. Temporal analysis

After obtaining each tweet's sentiment score, we calculated daily averages and plotted the distribution of sentiment scores over time. We compared the sentiment scores with the announcement dates of different COVID-19 vaccines to assess their impact on public sentiment. To determine whether sentiment changed significantly over time, we used the Pruned Exact Linear Time (PELT) algorithm [24], which finds change points by minimizing a penalized cost function. PELT has been shown to improve substantially on other methods in both accuracy and speed [24], [25].
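The change-point search can be illustrated with a compact, from-scratch PELT sketch. The paper does not report the cost function, penalty, or time resolution used; here we assume a mean-shift model with a squared-error segment cost, a user-chosen penalty, and a series of averaged sentiment scores (the reported change points carry hourly timestamps, so hourly averages are a plausible but unconfirmed input). Library implementations such as the `Pelt` class in the `ruptures` package provide the same algorithm with more cost models.

```python
from itertools import accumulate


def pelt_mean_shift(signal, penalty):
    """Change points in the mean of a 1-D series via PELT (Killick et al., 2012).

    Assumption: segment cost = sum of squared deviations from the segment mean;
    each extra change point must lower the total cost by more than `penalty`.
    """
    n = len(signal)
    # Prefix sums of y and y^2 give any segment's cost in O(1).
    s1 = [0.0, *accumulate(float(v) for v in signal)]
    s2 = [0.0, *accumulate(float(v) ** 2 for v in signal)]

    def cost(a, b):
        # Squared-error cost of signal[a:b] around its own mean.
        total = s1[b] - s1[a]
        return (s2[b] - s2[a]) - total * total / (b - a)

    F = [0.0] * (n + 1)   # F[t]: optimal penalized cost of signal[:t]
    F[0] = -penalty
    last = [0] * (n + 1)  # last[t]: final change point in the optimum for signal[:t]
    candidates = [0]      # admissible positions for the most recent change point
    for t in range(1, n + 1):
        F[t], last[t] = min((F[tau] + cost(tau, t) + penalty, tau) for tau in candidates)
        # Pruning step (K = 0 for this cost): a tau already worse than F[t]
        # can never be optimal for any later t, so it is discarded.
        candidates = [tau for tau in candidates if F[tau] + cost(tau, t) <= F[t]]
        candidates.append(t)
    # Backtrack from the end of the series to recover the change points.
    cps, t = [], n
    while last[t] > 0:
        t = last[t]
        cps.append(t)
    return sorted(cps)
```

Larger penalties yield fewer change points, so the penalty is usually tuned (for example, a value growing with log n, as in BIC-type criteria) rather than fixed arbitrarily.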

2.4. Geographic analysis

We used a dictionary to map the user's location in each tweet to a country, and the Python package Twitter_Geoloaction to map the locations of United States users to states. We performed the geographic analysis at two levels: national and state. We reported means and 95% CIs for sentiment scores. We conducted correlation analyses to examine the relationship between the numbers of infections or deaths and sentiment scores. In addition, we grouped the sentiment scores according to each state's result in the 2020 U.S. presidential election (Democratic or Republican) and compared the groups using t-tests. The threshold of statistical significance was 0.001.
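Dictionary-based location mapping can be sketched as a lookup over the comma-separated parts of a free-text user location. The alias table below is hypothetical and deliberately tiny; the study's actual dictionary, and the behavior of the Twitter_Geoloaction package used for U.S. states, are not reproduced here.

```python
# Hypothetical alias table for illustration only; the study's actual
# location dictionary is not given in the paper.
LOCATION_TO_COUNTRY = {
    "usa": "United States",
    "united states": "United States",
    "new york": "United States",
    "uk": "United Kingdom",
    "london": "United Kingdom",
    "india": "India",
    "mumbai": "India",
}


def map_location(raw_location):
    """Map a free-text location to a country name, or None if nothing matches."""
    if not raw_location:
        return None
    parts = [part.strip().lower() for part in raw_location.split(",")]
    # The country (or most general place) usually comes last, so check from the end.
    for part in reversed(parts):
        if part in LOCATION_TO_COUNTRY:
            return LOCATION_TO_COUNTRY[part]
    return None
```

Unmatched or empty locations return `None`, which is one way a share of location-bearing tweets can end up unmapped.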

3. Results

3.1. Data summary

We collected 2,678,372 English-language COVID-19 vaccine-related tweets posted from November 1, 2020, to January 31, 2021. Of these, 1,971,342 (73.6%) included a user location. In total, 1,146,866 (42.8%) tweets had positive sentiment, 720,737 (26.9%) had neutral sentiment, and 810,769 (30.3%) had negative sentiment.

3.2. Temporal analysis

The collection period spanned 92 days. The average number of tweets per day was 29,112.74, with the highest number, 57,320, on December 8, 2020, and the lowest, 4,666, on November 8, 2020. The number of tweets increased significantly starting on November 9, 2020 (P < 0.001).

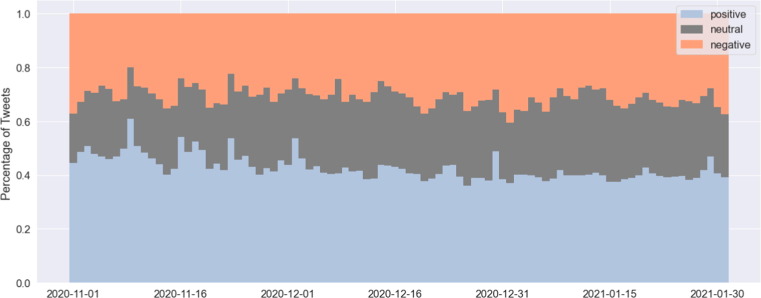

Average daily sentiment scores ranged from −0.014 (January 1, 2021) to 0.234 (November 9, 2020), with an overall mean of 0.065, indicating an overall positive sentiment in COVID-19 vaccine-related tweets. The PELT algorithm identified 8 change points (Fig. 1): 2020-11-03 14:00:00, 2020-11-09 15:00:00, 2020-11-12 23:00:00, 2020-11-23 14:00:00, 2020-12-07 13:00:00, 2020-12-10 01:00:00, 2020-12-17 13:00:00, and 2020-12-28 19:00:00. In particular, the change point at 2020-11-09 15:00:00 marked a significant increase in sentiment scores (P < 0.001). The sentiment score then gradually decreased until the end of December, after which it remained around 0.05. Fig. 1 also shows the daily average sentiment scores and their 14-day moving average.

Fig. 1.

Distribution of daily average sentiment score (yellow dots). The solid line is the 14-day moving average of sentiment scores. The diamond markers in red are change points. The green line indicates the date of the first effective vaccine announcement (November 9, 2020). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)
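The daily averages and the 14-day moving average plotted in Fig. 1 can be computed as in the sketch below, which groups (timestamp, compound) pairs by calendar day. Whether the authors used a trailing or a centered window, and in which time zone days were cut, is not stated; a trailing window over naive timestamps is assumed here, and the example scores are made up.

```python
from collections import defaultdict
from datetime import datetime


def daily_means(scored_tweets):
    """Average compound score per calendar day from (timestamp, compound) pairs."""
    buckets = defaultdict(list)
    for timestamp, compound in scored_tweets:
        buckets[timestamp.date()].append(compound)
    # Days with no tweets simply do not appear in the result.
    return {day: sum(scores) / len(scores) for day, scores in sorted(buckets.items())}


def moving_average(values, window=14):
    """Trailing moving average; the first window-1 points average only the values seen so far."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


# Made-up scores for illustration: two tweets on Nov 9 and one on Nov 10, 2020.
daily = daily_means([
    (datetime(2020, 11, 9, 10), 0.5),
    (datetime(2020, 11, 9, 12), 0.1),
    (datetime(2020, 11, 10, 1), -0.2),
])
smoothed = moving_average(list(daily.values()), window=14)
```

Gaps are not an issue for this corpus, since every day in the study period had thousands of tweets.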

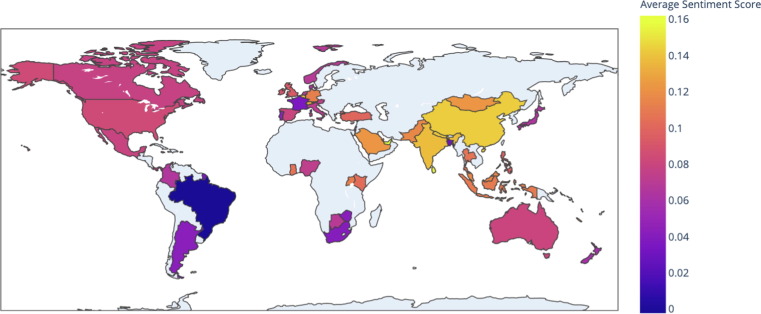

Fig. 3.

Heat map of the average sentiment score by country. Countries with fewer than 1,000 English-language tweets are shown in grey.

Using the sentiment thresholds (compound ≥ 0.05: positive; −0.05 < compound < 0.05: neutral; compound ≤ −0.05: negative), we calculated the daily proportion of tweets in each sentiment category (see Fig. 2). The percentage of positive tweets ranged from 36.3% (December 26, 2020) to 61.1% (November 9, 2020).

Fig. 2.

Daily distribution of COVID-19 vaccine-related tweets across sentiment types.
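The daily sentiment proportions summarized in Fig. 2 amount to counting labels per day. A minimal sketch, assuming each tweet has already been reduced to a (day, compound) pair; the day keys and function names are illustrative.

```python
from collections import Counter, defaultdict

CATEGORIES = ("positive", "neutral", "negative")


def label(compound):
    """Study thresholds: >= 0.05 positive, <= -0.05 negative, otherwise neutral."""
    if compound >= 0.05:
        return "positive"
    if compound <= -0.05:
        return "negative"
    return "neutral"


def daily_proportions(scored_tweets):
    """Map each day to the share of positive, neutral, and negative tweets."""
    counts = defaultdict(Counter)
    for day, compound in scored_tweets:
        counts[day][label(compound)] += 1
    result = {}
    for day in sorted(counts):
        total = sum(counts[day].values())
        # Counter returns 0 for categories absent on that day.
        result[day] = {cat: counts[day][cat] / total for cat in CATEGORIES}
    return result
```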

3.3. Geographic analysis

We mapped 1,395,944 tweets to countries and calculated the average sentiment score for each country. For the analysis, we selected the 53 countries with more than 1,000 tweets during the 92-day study period (November 1, 2020, to January 31, 2021). The heat map of the average sentiment scores for these countries is shown in Fig. 3. The mean sentiment scores and 95% CIs for the 10 countries that posted the most tweets are shown in Table 1. Among them, tweets posted in India had a notably high sentiment score of 0.138 [0.135, 0.141] (n = 81,472). Across all 53 countries, tweets posted in Brazil had the lowest sentiment score, −0.002 [−0.022, 0.018] (n = 1,645), while tweets posted in the United Arab Emirates had the highest, 0.162 [0.152, 0.172] (n = 5,691). In the correlation analysis, country-level sentiment scores were not significantly associated with the number of infections (r = −0.063, P = 0.401) or deaths (r = −0.030, P = 0.692).

Table 1.

Mean sentiment score, 95% CI, and number of tweets for the 10 countries with the most tweets.

| Country | Mean sentiment score [95% CI] | Number of tweets |
|---|---|---|
| United States | 0.084 [0.083, 0.085] | 831,755 |
| United Kingdom | 0.093 [0.090, 0.095] | 161,980 |
| India | 0.138 [0.135, 0.141] | 81,472 |
| Canada | 0.077 [0.074, 0.080] | 74,994 |
| Australia | 0.080 [0.074, 0.086] | 23,270 |
| South Africa | 0.041 [0.035, 0.047] | 21,541 |
| Nigeria | 0.073 [0.067, 0.079] | 17,719 |
| Ireland | 0.090 [0.083, 0.097] | 17,075 |
| Philippines | 0.096 [0.090, 0.102] | 13,259 |
| France | 0.036 [0.028, 0.044] | 9,925 |
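Table 1 and the country-level correlations rely on two simple statistics. The sketch below computes a mean with a normal-approximation 95% CI and a Pearson correlation coefficient. The paper does not state how its CIs were computed; the normal approximation (mean ± 1.96·SD/√n) is our assumption and is reasonable at these sample sizes. The P-values reported for r additionally require the t distribution, for example via `scipy.stats.pearsonr`.

```python
import math
from statistics import mean, stdev


def mean_ci95(scores):
    """Mean and normal-approximation 95% CI: mean +/- 1.96 * SD / sqrt(n) (assumed method)."""
    m = mean(scores)
    half_width = 1.96 * stdev(scores) / math.sqrt(len(scores))
    return m, (m - half_width, m + half_width)


def pearson_r(xs, ys):
    """Pearson correlation between paired sequences, e.g. per-country sentiment vs. case counts."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```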

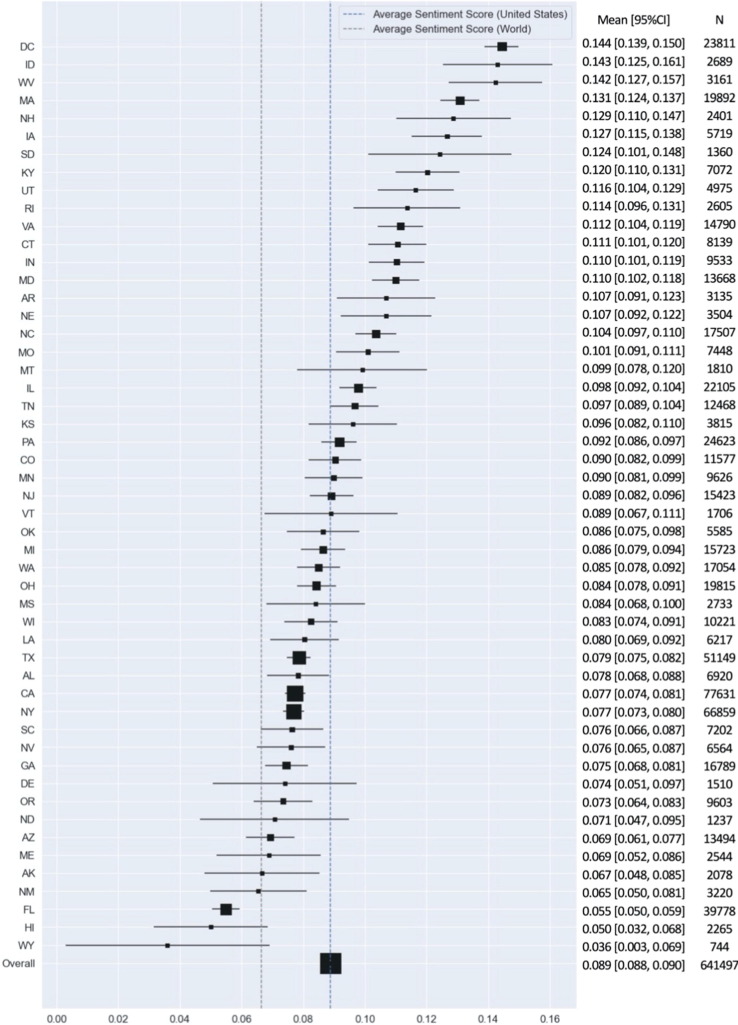

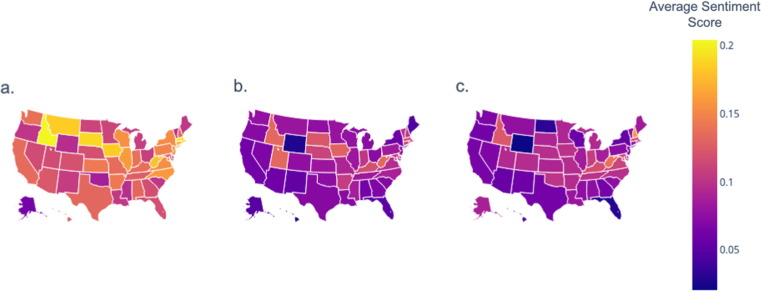

We mapped 641,497 tweets to U.S. states and calculated the average sentiment score for each state. The overall average sentiment score for tweets mapped to U.S. states was 0.089 [0.088, 0.090], with Washington, DC having the highest score, 0.144 [0.139, 0.150], and Wyoming the lowest, 0.036 [0.003, 0.069]. Under the commonly used threshold for positive sentiment (score ≥ 0.05), Wyoming was the only state with a neutral average sentiment; all other states fell in the positive category. Sentiment differed significantly between states (F = 27.184, P < 0.001). The mean values and 95% CIs of each state's sentiment score are displayed in Fig. 4. Heat maps of the monthly average sentiment scores for tweets posted in each state from November 2020 to January 2021 are shown in Fig. 5. In the correlation analysis, state-level sentiment scores were not significantly associated with the number of infections (r = −0.205, P = 0.148) or deaths (r = −0.186, P = 0.192). The sentiment scores for Democratic and Republican states were 0.091 (0.022) and 0.096 (0.026), respectively, and the t-test showed no significant difference between them (P = 0.468).

Fig. 4.

Average sentiment score for each U.S. state, with 95% CI error bars.

Fig. 5.

Heat maps of the average sentiment score by U.S. state for each month (a, November 2020; b, December 2020; c, January 2021).
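The Democratic-versus-Republican comparison reported in Section 3.3 uses a two-sample t-test. The paper does not say whether equal variances were assumed; the sketch below uses Welch's unequal-variance version as an assumption and treats each state's mean sentiment score as one observation. It returns the t statistic and the Welch–Satterthwaite degrees of freedom; converting these to a P-value needs the t distribution, e.g. `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom.

    Assumption: each element is one state's mean sentiment score.
    """
    va = variance(group_a) / len(group_a)  # squared standard error of group A's mean
    vb = variance(group_b) / len(group_b)  # squared standard error of group B's mean
    t = (mean(group_a) - mean(group_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(group_a) - 1) + vb ** 2 / (len(group_b) - 1))
    return t, df
```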

4. Discussion

In this study, we collected a large corpus of 2,678,372 COVID-19 vaccine-related tweets posted from November 1, 2020, to January 31, 2021. Of these, 42.8% were positive tweets, while 30.3% were negative tweets. Public sentiment and the number of tweets increased significantly starting on November 9, 2020. Sentiment then slowly decreased and stabilized around 0.05 by the end of December. The sentiment reflected in tweets about the COVID-19 vaccine varied significantly by geography.

Regarding the change point identified in the temporal analysis, November 9, 2020, was the date on which Pfizer announced that its vaccine was 90% effective, the first such announcement for any COVID-19 vaccine. The temporal analysis showed that people posted many tweets with highly positive sentiment after the Pfizer announcement. However, by the end of December, the positive sentiment had declined to a neutral level. This finding suggests that the public's positive sentiment toward COVID-19 vaccines faded over time. Public health policymakers need to pay more attention to persisting doubts and strengthen vaccine education programs after vaccine launch.

The geographic findings suggest that the number of confirmed cases or deaths in a region might influence local sentiment toward COVID-19 vaccines. For example, Brazil was one of the three countries with the highest numbers of confirmed cases and deaths: 992,198 confirmed cases and 24,094 deaths [26]. Brazil also had the lowest average sentiment score in our geographic analysis (−0.002) and was the only country with a negative score. However, the correlation analysis found no statistically significant association between case or death counts and sentiment at either the country or the state level. Significant country-level differences in sentiment may also reflect differences in national pandemic control and vaccination policies. At the U.S. state level, states with severe outbreaks, such as California, Texas, Florida, New York, and Louisiana, had lower sentiment scores than the U.S. average. In addition, Washington, DC had the highest sentiment score, possibly because of positive news and promotion of COVID-19 vaccines by politicians.

Significant differences in the sentiment of English-language tweets were found both between countries and between U.S. states, suggesting that COVID-19 vaccine promotion programs need to be tailored to geographic areas. This finding also gives future researchers an opportunity to examine why people in different regions hold different sentiments toward COVID-19 vaccines.

Several studies have conducted sentiment analysis of tweets about the COVID-19 disease itself rather than vaccines. For example, Li et al. used deep learning models to classify the emotions in tweets into joy, trust, surprise, anticipation, fear, anger, sadness, and disgust [27]. They then calculated the correlation of keywords with tweets expressing fear and anger to understand why the public felt those emotions during the pandemic. Valdez et al. used the VADER tool and found that sentiment decreased significantly in late March 2020 during the pandemic [28]. We encourage more researchers to use tweets specific to COVID-19 vaccines to understand public perceptions of the vaccines, which could greatly assist in developing public policies that effectively promote vaccination during the later stages of the pandemic. This also aligns with the suggestion in the scoping review by Karafillakis et al. that social media monitoring is a valuable study design for providing evidence-based views of large populations [29].

This study has several limitations. First, the collected tweets might contain identifiable information; because Twitter is a public platform, we did not apply data protection measures to the dataset. Second, after reviewing the tweets' content, we noticed that although the VADER sentiment score could accurately determine the sentiment of a text, it could not distinguish whether the object of that sentiment was the COVID-19 vaccine. For example, a user could post “COVID will be gone” with the hashtags COVID-19 and vaccine. This post reflected the user's confidence in the vaccine; however, VADER scored it as neutral. Therefore, a simple VADER sentiment score might not be sufficient if researchers intend to further assess public attitudes toward COVID-19 vaccines. In future research, we will manually label 5,000 tweets from this corpus as expressing positive, neutral, or negative attitudes and then develop transfer learning models to classify users' attitudes toward COVID-19 vaccines more accurately. In addition, because applying theoretical models to design implementation studies can improve the effectiveness of interventions [30], [31], [32], researchers can use theoretical models to further explore the topics of tweets with negative attitudes and propose evidence- and theory-based interventions that improve the effectiveness of vaccination promotion.

5. Conclusion

In this study, we conducted a sentiment analysis of 2,678,372 COVID-19 vaccine-related tweets posted from November 1, 2020, to January 31, 2021, and found that 42.8% of the tweets were positive, while 30.3% were negative. Public sentiment and the number of tweets rose significantly after Pfizer announced that the first COVID-19 vaccine had reached 90% effectiveness; sentiment then slowly declined until the end of December, when it stabilized at a neutral level. Public sentiment also varied by geography. Public health policymakers and governments should design more effective vaccine education programs based on timely public sentiment and tailored to different geographic regions.

7. Contributors

JL and SL conceived the study. SL, JL, and JL performed the analysis, interpreted the results, and drafted the manuscript. All authors revised the manuscript. All authors read and approved the final manuscript.

8. Ethics

The work presented in this article was carried out ethically.

Public Perception about COVID-19 Vaccines in Twitter: A

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1. Sinnenberg L., Buttenheim A.M., Padrez K., Mancheno C., Ungar L., Merchant R.M. Twitter as a Tool for Health Research: A Systematic Review. Am J Public Health. 2017 Jan;107(1):e1–e8. doi: 10.2105/AJPH.2016.303512.

- 2. Deiner M.S., Fathy C., Kim J., Niemeyer K., Ramirez D., Ackley S.F., et al. Facebook and Twitter vaccine sentiment in response to measles outbreaks. Health Informatics J. 2019 Sep 17;25(3):1116–1132. doi: 10.1177/1460458217740723. PMID: 29148313.

- 3. Allen C., Tsou M.-H., Aslam A., Nagel A., Gawron J.-M. Applying GIS and Machine Learning Methods to Twitter Data for Multiscale Surveillance of Influenza. PLoS One. 2016 Jul 25;11(7):e0157734. doi: 10.1371/journal.pone.0157734.

- 4. Hawn C. Take two aspirin and tweet me in the morning: how Twitter, Facebook, and other social media are reshaping health care. Health Aff (Millwood). 2009 Mar 2;28(2):361–368. doi: 10.1377/hlthaff.28.2.361. PMID: 19275991.

- 5. Grajales F.J., Sheps S., Ho K., Novak-Lauscher H., Eysenbach G. Social media: a review and tutorial of applications in medicine and health care. J Med Internet Res. 2014 Feb 11;16(2):e13. PMID: 24518354.

- 6. Chou W.Y.S., Hunt Y.M., Beckjord E.B., Moser R.P., Hesse B.W. Social media use in the United States: Implications for health communication. J Med Internet Res. 2009;11(4). PMID: 19945947.

- 7. Twitter: monthly active users worldwide | Statista [Internet]. https://www.statista.com/statistics/282087/number-of-monthly-active-twitter-users/ [accessed April 3, 2021].

- 8. Wojcik S., Hughes A. Sizing Up Twitter Users. Pew Research Center; 2019.

- 9. Liu J., Liu S., Wei H., Yang X. Epidemiology, clinical characteristics of the first cases of COVID-19. Eur J Clin Invest. 2020;50(10). doi: 10.1111/eci.13364.

- 10. Mahase E. Covid-19: UK approves Pfizer and BioNTech vaccine with rollout due to start next week. BMJ. 2020 Dec 2;371:m4714. PMID: 33268330.

- 11. Limb M. Covid-19: Data on vaccination rollout and its effects are vital to gauge progress, say scientists. BMJ. 2021 Jan 11;372:n76. PMID: 33431370.

- 12. Rosen B., Waitzberg R., Israeli A. Israel’s rapid rollout of vaccinations for COVID-19. Isr J Health Policy Res. 2021 Dec 1;10(1):1–14. doi: 10.1186/s13584-021-00440-6.

- 13. Liu J., Liu S. The management of coronavirus disease 2019 (COVID-19). J Med Virol. 2020 Sep 22;92(9):1484–1490. doi: 10.1002/jmv.25965. PMID: 32369222.

- 14. Chou W.-Y.S., Budenz A. Considering Emotion in COVID-19 Vaccine Communication: Addressing Vaccine Hesitancy and Fostering Vaccine Confidence. Health Commun. 2020 Dec 5;35(14):1718–1722. PMID: 33124475.

- 15. Raghupathi V., Ren J., Raghupathi W. Studying public perception about vaccination: A sentiment analysis of tweets. Int J Environ Res Public Health. 2020 May 2;17(10):3464. doi: 10.3390/ijerph17103464. PMID: 32429223.

- 16. Du J., Luo C., Shegog R., Bian J., Cunningham R.M., Boom J.A., et al. Use of Deep Learning to Analyze Social Media Discussions About the Human Papillomavirus Vaccine. JAMA Netw Open. 2020 Nov 2;3(11). doi: 10.1001/jamanetworkopen.2020.22025. PMID: 33185676.

- 17. Martin S., Kilich E., Dada S., Kummervold P.E., Denny C., Paterson P., et al. “Vaccines for pregnant women…?! Absurd” - Mapping maternal vaccination discourse and stance on social media over six months. Vaccine. 2020;38(42):6627–6637. doi: 10.1016/j.vaccine.2020.07.072.

- 18. Dubey A.D. Public Sentiment Analysis of COVID-19 Vaccination Drive in India. SSRN. 2021 Jan 27. doi: 10.2139/ssrn.3772401.

- 19. Liu S., Liu L. Understanding Behavioral Intentions Toward COVID-19 Vaccines: A Theory-based Content Analysis of Tweets. J Med Internet Res. 2021 Apr 29. doi: 10.2196/28118. Epub ahead of print. PMID: 33939625.

- 20. Liu S., Li J., Liu J. Leveraging Transfer Learning to Analyze Opinions, Attitudes, and Behavioral Intentions Toward COVID-19 Vaccines. J Med Internet Res. 2021 Jul 11. doi: 10.2196/30251. Epub ahead of print. PMID: 34254942.

- 21. Müller M., Salathé M., Kummervold P.E. COVID-Twitter-BERT: A Natural Language Processing Model to Analyse COVID-19 Content on Twitter. arXiv. 2020 May. arXiv:2005.07503.

- 22. Agarwal A., Xie B., Vovsha I., Rambow O., Passonneau R. Sentiment Analysis of Twitter Data. Proceedings of the Workshop on Language in Social Media (LSM 2011). 2011:30–38. doi: 10.5555/2021109.2021114.

- 23. Hutto C.J., Gilbert E. VADER: A Parsimonious Rule-based Model for Sentiment Analysis of Social Media Text. Proceedings of the Eighth International AAAI Conference on Weblogs and Social Media (ICWSM-14). 2014:216–225.

- 24. Killick R., Fearnhead P., Eckley I.A. Optimal detection of changepoints with a linear computational cost. J Am Stat Assoc. 2012;107(500):1590–1598. doi: 10.1080/01621459.2012.737745.

- 25. Dorcas W.G. The Power of the Pruned Exact Linear Time (PELT) Test in Multiple Changepoint Detection. Am J Theor Appl Stat. 2015 Dec 2;4(6):581–586. doi: 10.11648/j.ajtas.20150406.30.

- 26. World Health Organization. WHO Coronavirus Disease (COVID-19) Dashboard. World Health Organization; 2020. https://covid19.who.int/ [accessed January 31, 2021].

- 27. Li I., Li Y., Li T., Alvarez-Napagao S., Garcia-Gasulla D., Suzumura T. What Are We Depressed About When We Talk About COVID-19: Mental Health Analysis on Tweets Using Natural Language Processing. Lect Notes Comput Sci. 2020;12498:358–370. doi: 10.1007/978-3-030-63799-6_27.

- 28. Valdez D., Ten Thij M., Bathina K., Rutter L.A., Bollen J. Social Media Insights Into US Mental Health During the COVID-19 Pandemic: Longitudinal Analysis of Twitter Data. J Med Internet Res. 2020 Dec 14;22(12). doi: 10.2196/21418.

- 29. Karafillakis E., Martin S., Simas C., Olsson K., Takacs J., Dada S., et al. Methods for Social Media Monitoring Related to Vaccination: Systematic Scoping Review. JMIR Public Health Surveill. 2021;7(2):e17149. doi: 10.2196/17149.

- 30. Liu S., Reese T.J., Kawamoto K., Del Fiol G., Weir C. Toward Optimized Clinical Decision Support: A Theory-Based Approach. In: 2020 IEEE International Conference on Healthcare Informatics (ICHI). IEEE; 2020:1–2. doi: 10.1109/ICHI48887.2020.9374346.

- 31. Liu S., Reese T.J., Kawamoto K., Del Fiol G., Weir C. A systematic review of theoretical constructs in CDS literature. BMC Med Inform Decis Mak. 2021;21(1):102. doi: 10.1186/s12911-021-01465-2.