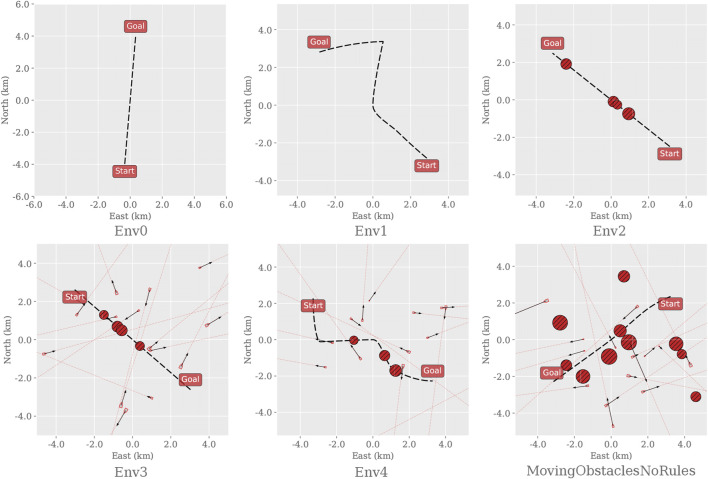

FIGURE 1.

Instances of each training environment used to evaluate the applicability of different RL algorithms to the dual task of path following and collision avoidance. Before the path is defined, the start and goal positions are initialized at random, a fixed distance (8000 m) apart. Env0, the trivial case, contains only a straight path. Env1 creates a curved path. Env2 inherits from Env0 and places 4 static obstacles randomly along the path. Env3 inherits the properties of Env2 and adds 17 dynamic obstacles with random headings, sizes, and velocities around the path. Env4 inherits from Env3 and adds curvature to the path. Finally, MovingObstaclesNoRules increases the number of static obstacles from 4 to 11 and scatters them randomly on and around the path; this represents the original training environment as defined in Meyer et al. (2020b).
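
The inheritance chain described in the caption can be summarized as a small configuration sketch. This is a minimal illustration and not the authors' implementation: the names `ScenarioConfig`, `SCENARIOS`, `sample_start_and_goal`, and `area_half_width_m` are hypothetical. The sketch also assumes that Env1 differs from Env0 only in path curvature and that MovingObstaclesNoRules builds on Env4; the caption implies both but does not state them.

```python
import math
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ScenarioConfig:
    """Hypothetical container for the per-environment settings in the caption."""
    curved_path: bool = False
    n_static_obstacles: int = 0
    n_dynamic_obstacles: int = 0
    # False: static obstacles lie along the path; True: on and around it.
    scatter_static_around_path: bool = False
    start_goal_distance_m: float = 8000.0


# Each scenario is derived from its parent by overriding only what changes.
env0 = ScenarioConfig()                              # straight path, no obstacles
env1 = replace(env0, curved_path=True)               # assumption: Env0 plus curvature
env2 = replace(env0, n_static_obstacles=4)           # Env0 plus 4 static obstacles
env3 = replace(env2, n_dynamic_obstacles=17)         # Env2 plus 17 dynamic obstacles
env4 = replace(env3, curved_path=True)               # Env3 plus curvature
# Assumption: MovingObstaclesNoRules starts from Env4.
moving_obstacles_no_rules = replace(
    env4, n_static_obstacles=11, scatter_static_around_path=True
)

SCENARIOS = {
    "Env0": env0,
    "Env1": env1,
    "Env2": env2,
    "Env3": env3,
    "Env4": env4,
    "MovingObstaclesNoRules": moving_obstacles_no_rules,
}


def sample_start_and_goal(config, rng, area_half_width_m=10_000.0):
    """Draw a random start and a goal exactly `start_goal_distance_m` away.

    `area_half_width_m` is an illustrative bound on the start position only;
    the caption does not specify the sampling region.
    """
    x0 = rng.uniform(-area_half_width_m, area_half_width_m)
    y0 = rng.uniform(-area_half_width_m, area_half_width_m)
    bearing = rng.uniform(0.0, 2.0 * math.pi)
    d = config.start_goal_distance_m
    goal = (x0 + d * math.cos(bearing), y0 + d * math.sin(bearing))
    return (x0, y0), goal


if __name__ == "__main__":
    rng = random.Random(0)
    for name, cfg in SCENARIOS.items():
        start, goal = sample_start_and_goal(cfg, rng)
        print(name, cfg, start, goal)
```

Deriving each configuration with `dataclasses.replace` mirrors the caption's "inherits" wording: every scenario records only its difference from its parent, so a change to a shared default such as the start-to-goal distance propagates to all of them.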