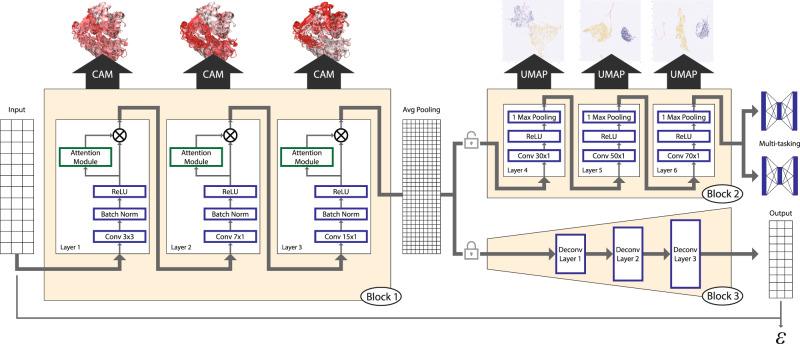

Fig. 1. Overall schematic of the deep-learning model.

The input to Block 1 is a three-state secondary structure prediction matrix for each sequence. Block 1 comprises three sequential one-dimensional convolutional layers with attention for feature-map refinement. Feature maps from Block 1 pass through global average pooling for dimension reduction and are fed into Block 2, which has three additional convolutional layers and produces the predictions for both fold and family. Together, Blocks 1 and 2 constitute the deep CNN model for classification. Grad-CAM maps features from Block 1 back onto sequences and structures for interpretation, and features from Block 2 are passed to UMAP for dimensionality reduction and visualization. The weights and features of Block 1 are frozen and reused as the encoder of an autoencoder; Block 3 is the decoder, which completes the autoencoder with multiple deconvolution steps. The reconstruction error (ε) of this autoencoder is used to predict the fold type of GT families with unknown folds (GT-u).
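As a rough illustration of three quantities named in this caption, the following pure-Python sketch builds a three-state input matrix, applies global average pooling over the sequence axis, and computes a reconstruction error. It is not the model itself. The `HEC` alphabet, the one-hot encoding (the actual input may hold predicted probabilities), the function names, and the choice of mean squared error for ε are all assumptions made for the example; the caption does not specify them.

```python
from typing import List

Matrix = List[List[float]]

# Assumed three-state alphabet: helix (H), strand (E), coil (C).
STATES = "HEC"


def one_hot_states(ss: str) -> Matrix:
    """Encode a secondary-structure string as an L x 3 matrix (hypothetical input format)."""
    return [[1.0 if s == state else 0.0 for state in STATES] for s in ss]


def global_average_pool(feature_maps: Matrix) -> List[float]:
    """Average each channel (column) over all sequence positions (rows)."""
    n_positions = len(feature_maps)
    n_channels = len(feature_maps[0])
    return [
        sum(row[c] for row in feature_maps) / n_positions
        for c in range(n_channels)
    ]


def reconstruction_error(x: Matrix, x_hat: Matrix) -> float:
    """Mean squared error between an input and its reconstruction (assumed form of epsilon)."""
    total = 0.0
    count = 0
    for row, row_hat in zip(x, x_hat):
        for value, value_hat in zip(row, row_hat):
            total += (value - value_hat) ** 2
            count += 1
    return total / count


if __name__ == "__main__":
    x = one_hot_states("HHEC")
    print(global_average_pool(x))      # per-state fraction of the sequence
    print(reconstruction_error(x, x))  # a perfect reconstruction has zero error
```

In an autoencoder trained on families of a known fold, a low ε for a new sequence would suggest that it resembles the training fold; how ε is thresholded or compared across folds is not stated here and is left open.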