Abstract

Despite possessing the capacity for selective attention, we often fail to notice the obvious. We investigated participants’ (n = 39) failures to detect salient changes in a change blindness experiment. Surprisingly, change detection success varied by over two-fold across participants. These variations could not be readily explained by differences in scan paths or fixated visual features. Yet, two simple gaze metrics–mean duration of fixations and the variance of saccade amplitudes–systematically predicted change detection success. We explored the mechanistic underpinnings of these results with a neurally-constrained model based on the Bayesian framework of sequential probability ratio testing, with a posterior odds-ratio rule for shifting gaze. The model’s gaze strategies and success rates closely mimicked human data. Moreover, the model outperformed a state-of-the-art deep neural network (DeepGaze II) in predicting human gaze patterns in this change blindness task. Our mechanistic model reveals putative rational observer search strategies for change detection during change blindness, with critical real-world implications.

Author summary

Our brain has the remarkable capacity to pay attention, selectively, to important objects in the world around us. Yet, sometimes, we fail spectacularly to notice even the most salient events. We tested this phenomenon in the laboratory with a change-blindness experiment, by having participants freely scan and detect changes across discontinuous image pairs. Participants varied widely in their ability to detect these changes. Surprisingly, two low-level gaze metrics—fixation durations and saccade amplitudes—strongly predicted success in this task. We present a novel, computational model of eye movements, incorporating neural constraints on stimulus encoding, that links these gaze metrics with change detection success. Our model is relevant for a mechanistic understanding of human gaze strategies in dynamic visual environments.

Introduction

We live in a rapidly changing world. For adaptive survival, our brains must possess the ability to identify relevant, changing aspects of our environment and distinguish them from irrelevant, static ones. For example, when driving down a busy road it is critical to identify changing aspects of the visual scene, such as vehicles shifting lanes or pedestrians crossing the street. Our ability to identify such critical changes is facilitated by visual attention–an essential cognitive capacity that selects the most relevant information in the environment, at each moment in time, to guide behavior [1].

Yet, our capacity for attention possesses key limitations. One such limitation is revealed by the phenomenon of “change blindness”, in which observers fail to detect obvious changes in a sequence of visual images with intervening discontinuities [2,3]. Previous literature suggests that observers’ lapses in detecting changes occur when the changes fail to draw attention, for example when the change is presented concurrently with distracting events, such as an intervening blank or transient noise patches. Change blindness, therefore, provides a useful framework for studying visual attention mechanisms and their lapses [4]. Such lapses have important real-world implications: observers’ success in change blindness tasks has been linked to their driving experience levels [5,6] and safe driving skills [7].

In the laboratory, change blindness is tested, typically, by presenting an alternating sequence of (a pair of) images that differ in one important detail (Fig 1A, “flicker” paradigm) [2,3]. Participants are instructed to scan the images, with overt eye movements, to locate and identify the changing object or feature. While many previous studies have investigated the phenomenon of change blindness itself [8–10], very few studies have directly identified gaze-related factors that determine observers’ success in change blindness tasks [4]. In this study, we tested 39 participants in a change blindness experiment with 20 image pairs (Fig 1A). Surprisingly, participants differed widely (by over 2-fold) in their success with detecting changes.

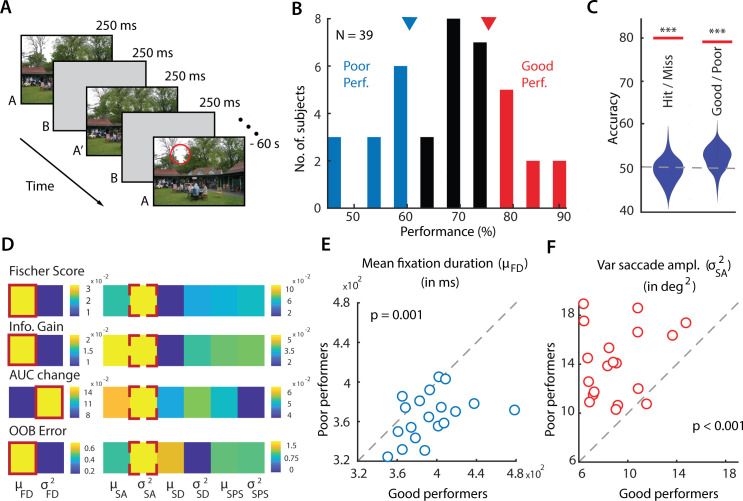

Fig 1. Gaze metrics predict success in a change blindness experiment.

A. Schematic of a change blindness experiment trial, comprising a sequence of alternating images (A, A’), displayed for 250 ms each, with intervening blank frames (B) also displayed for 250 ms (“flicker” paradigm), repeated for 60 s. Red circle: Location of change (not actually shown in the experiment). All 20 change image pairs tested are available in Data Availability link. B. Distribution of success rates of n = 39 participants in the change blindness experiment. Red and blue bars: good performers (top 30th percentile; n = 9) and poor performers (bottom 30th percentile; n = 12), respectively. Inverted triangles: Cut-off values of success rates for classifying good (red) versus poor (blue) performers. C. Classification accuracy, quantified with area-under-the-curve (AUC), for classifying trials as hits versus misses (left horizontal line) and performers as good versus poor (right horizontal line), obtained with a support vector machine classifier. Violin plots: Null distributions of classification accuracies based on a permutation test (*** p<0.001). Error bars: Clopper-Pearson binomial confidence intervals. D. Feature selection measures for identifying the most informative features that distinguish good from poor performers. From top to bottom: Fisher score, Information gain, Change in area-under-the-curve (AUC) and bag of decision trees (for details, see Feature Selection Metrics in the Materials and Methods). Brighter colors indicate more informative features. Solid red outline: most informative feature in the fixation feature subgroup (left); dashed red outline: most informative feature in the saccade feature subgroup (right). FD: fixation duration, SA: saccade amplitude, SD: saccade duration, SPS: saccade peak speed. μ and σ² denote mean and variance of the respective parameter. E. Distribution of mean fixation duration (μFD, in milliseconds) across 19 change images for good performers (x-axis) versus poor performers (y-axis); one change image pair, successfully detected by all performers, was not included in these analyses (see text). Each data point denotes average value of μFD, across each category of performers, for each image tested. Dashed diagonal line: line of equality. p-value corresponds to significant difference in mean fixation duration between good and poor performers. F. Same as in E, but comparing variance of saccade amplitudes (in squared degrees of visual angle) for good versus poor performers. Other conventions are the same as in panel E.

To understand the reason for these striking differences in performance, first, we analyzed participants’ eye movement data, acquired at high spatial- and temporal- resolution, as they scanned each pair of images. We discovered that two key gaze metrics–mean fixation duration and the variance in the amplitude of saccades–were consistently predictive of participants’ success. Next, we developed a model of overt visual search based on the Bayesian framework of sequential probability ratio testing [11–14] (SPRT), in which subjects decided the next, most probable location for making a saccade based on a posterior odds ratio test. In our SPRT model, we also incorporated biological constraints on stimulus encoding and transformation, based on well-known properties of the visual processing pathway [15,16] (e.g. bounded firing rates, Poisson variance, foveal magnification, and saliency computation).

Our neurally-constrained model mimicked key aspects of human gaze strategies in the change blindness task: model success rates were strongly correlated with human success rates, across the cohort of images tested. In addition, the model exhibited systematic variation in change detection success with fixation duration and saccade amplitude, in a manner closely resembling human data. Finally, the model outperformed a state-of-the-art deep neural network (DeepGaze II [17]) in predicting probabilistic patterns in human saccades in this change blindness task. We propose our model as a benchmark for mechanistic simulations of visual search, and for modeling human observer strategies during change detection tasks.

Results

Fixation and saccade metrics predict change detection success

39 participants performed a change blindness task (Fig 1A). Each experimental session consisted of a sequence of trials with a different pair of images tested on each trial. Images presented included cluttered, indoor or outdoor scenes (see Data Availability link). To ensure uniformity of gaze origin across participants, each trial began when subjects fixated continuously on a central cross for 3 seconds. This was followed by the presentation of the change blindness image pair: alternating frames of two images, separated by intervening blank frames (250 ms each, Fig 1A). Of the image pairs tested, 20 were “change” image pairs, in that these differed from each other in one of three key respects (S1 Table): (i) size of an object changing; (ii) color of an object changing or (iii) change involving the appearance (or disappearance) of an object. The remaining (either 6 or 7 pairs; Materials and Methods) were “catch” image pairs, which comprised an identical pair of images; data from these “catch” trials were not analyzed for this study (Materials and Methods; complete change image set in Data Availability link). Change- and catch- image pairs were interleaved and tested in the same pseudorandom order across subjects. Subjects were permitted to freely scan the images to detect the change, for up to a maximum of 60 seconds per image pair. They indicated having detected the change by foveating at the location of change for at least 3 seconds. A response was marked as a “hit” if the subject was able to successfully detect the change within 60 seconds, and was marked as a “miss” otherwise.

We observed that participants varied widely in their success with detecting changes: success rates varied over two-fold–from 45% to 90%–across participants (Fig 1B). These differences may arise from innate differences in individual capacities for change detection as well as other experimental factors (see Discussion). Nonetheless, we tested if individual-specific gaze strategies when scanning the images could explain these variations in change detection success.

First, we ranked subjects in order of their change detection success rates. Subjects in the top 30th (n = 9) and bottom 30th (n = 12) percentiles were labelled as "good" and "poor" performers, respectively (Fig 1B). This choice of labeling ensured robust differences in performance between the two classes: change detection success for good performers varied between 75% and 90%, whereas that for poor performers varied between 45% and 61%. Nevertheless, the results reported subsequently were robust to these cut-offs for selecting good and poor performers (see S1 Fig for results based on performance median split). Next, we selected four gaze metrics from the eye-tracker: saccade amplitude, fixation duration, saccade duration and saccade peak speed (justification in the Materials and Methods) and computed the mean and the variance of these four metrics for each subject and trial. These eight quantities were employed as features in a classifier based on support vector machines (SVM) to distinguish good from poor performers (Materials and Methods). One image pair (#20), for which all participants correctly detected the change, was excluded from these analyses (Figs 1–3).

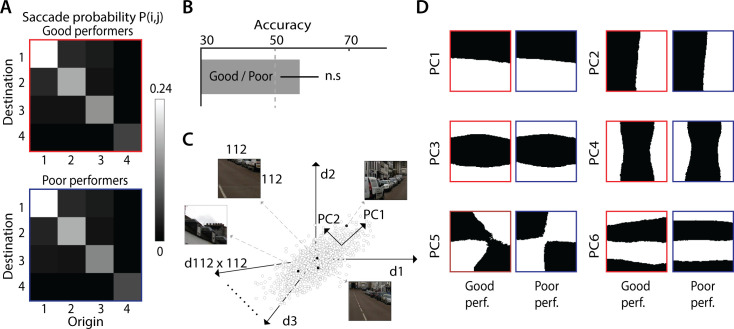

Fig 3. Saccade probabilities and fixated features are similar across good and poor performers.

A. Average saccade probability matrices for the good performers (top; red outline) and poor performers (bottom; blue outline). These correspond to probabilities of making a saccade between different “domains” (1–4), each corresponding to a (non-contiguous) collection of image regions, ordered by frequency of fixations: most fixated regions (domain 1) to least fixated regions (domain 4). Cell (i, j) (row, column) of each matrix indicates the probability of saccades from domain j to domain i. B. Classification accuracy for classifying good versus poor performers based on the saccade probability matrix features, using a support vector machine classifier. Other conventions are as in Fig 1D. Error bars: s.e.m. C. Identifying low-level fixated features across good and poor performers. 112x112 image patches were extracted, centered around each fixation, for each participant; each point in the 112x112 dimensional space represents one such image patch. Principal component analysis (PCA) was performed to identify low-level spatial features explaining maximum variance among the fixated image patches, separately for good and poor performers. D. Top 6 principal components, ranked by proportion of variance explained, corresponding to spatial features explaining the greatest variance across fixations, for good performers (left panels) and poor performers (right panels). These spatial features were highly correlated across good and poor performers (median r = 0.20, p<0.001, across n = 150 components).

Classification accuracy (area-under-the-curve/AUC) for distinguishing good from poor performers was 79.9% and significantly above chance (Fig 1C, p<0.001, permutation test, Materials and Methods). We repeated these same analyses, but this time classifying each trial as a hit or miss. Classification accuracy was 77.7% and, again, significantly above chance (Fig 1C, p<0.001). Taken together, these results indicate that fixation- and saccade- related gaze metrics contained sufficient information to accurately classify change detection success.

Next, we identified gaze metrics that were the most informative for classifying good versus poor performers. This analysis was done separately for the fixation and saccade metric subsets: these were strongly correlated within each subset and uncorrelated across subsets (S2A Fig). For each metric, we performed feature selection with four approaches: Fisher score [18], AUC change [19], Information Gain [20] and bag of decision trees (OOB error) [21]. A higher value of each selection measure reflects a greater importance of the corresponding gaze metric for classifying between good and poor performers. Among fixation metrics, mean fixation duration was assigned highest importance based on three out of the four feature selection measures (Fig 1D, solid red outline). Among the saccade metrics, variance of saccade amplitudes was assigned highest importance, based on all four feature selection measures (Fig 1D, dashed red outline). We confirmed these results post hoc: mean fixation duration was significantly higher for good performers, across images (Fig 1E; p = 0.0015, Wilcoxon signed rank test), whereas variance of saccade amplitude was significantly higher for poor performers (Fig 1F; p<0.001, Wilcoxon signed rank test).
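To make the first of these selection measures concrete, the following is a minimal sketch of a two-class Fisher score for a single gaze feature. It assumes the common definition (between-class variance divided by within-class variance); the exact variant used in [18] may differ. The function name and all numbers are hypothetical illustrations, not data from the study.

```python
from statistics import mean, pvariance


def fisher_score(good, poor):
    """Two-class Fisher score for one feature (assumed standard definition).

    Ratio of between-class scatter to within-class scatter; larger values
    mean the feature separates the two classes better.
    """
    n_g, n_p = len(good), len(poor)
    grand_mean = (sum(good) + sum(poor)) / (n_g + n_p)
    between = n_g * (mean(good) - grand_mean) ** 2 + n_p * (mean(poor) - grand_mean) ** 2
    within = n_g * pvariance(good) + n_p * pvariance(poor)
    return between / within


# Hypothetical per-subject mean fixation durations (ms); NOT data from the study.
good_fd = [310.0, 325.0, 298.0, 340.0]
poor_fd = [255.0, 270.0, 262.0, 248.0]

# A hypothetical feature with identical class means, for contrast.
good_noise = [1.0, 2.0, 3.0, 4.0]
poor_noise = [1.5, 2.5, 3.5, 2.5]

informative = fisher_score(good_fd, poor_fd)
uninformative = fisher_score(good_noise, poor_noise)
```

A feature whose class means coincide scores zero regardless of its spread, which is why the measure ranks features by class separation rather than by raw variability.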

We considered the possibility that the differences in fixation duration and saccade amplitude variance between good and poor performers could arise from differences in multiple, distinct modes of these respective distributions. Nonetheless, statistical tests provided no significant evidence for multimodality in either fixation duration or saccade amplitude distributions for either class of performers (S2B Fig) (Hartigan’s dip test for unimodality; fixation duration: p>0.05, in 8/9 good performers with median p = 0.74, and in 8/12 poor performers with median p = 0.31; saccade amplitudes: p>0.05, in 9/9 good performers with median p = 0.99 and in 10/12 poor performers with median p = 0.99).

In sum, these results indicate that two key gaze metrics–mean fixation duration and variance of saccade amplitude–were strong and sufficient predictors of change detection success in a change blindness experiment.

Next, we tested if more complex features of eye movements–such as scan paths, fixation maps or fixated object features–differed systematically between good and poor performers.

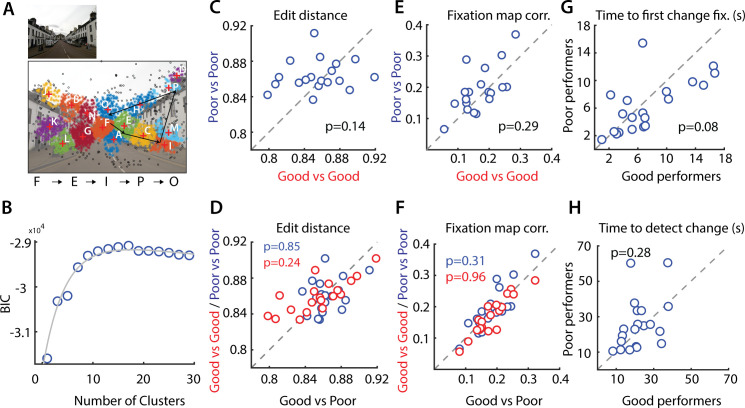

Scan path data is challenging to compare across individuals because scan paths can vary in terms of both the number and sequence of image locations sampled. We compared scan paths across participants by encoding them into “string” sequences (Materials and Methods). Briefly, fixation points for each image were clustered, with data pooled across subjects, and individual subjects’ scan paths were encoded as strings based on the sequence of clusters visited across successive fixations (Fig 2A and 2B). We then quantified the deviation between scan paths for each pair of subjects using the edit distance [22]. Median scan path edit distances were not significantly different between good and poor performer pairs (Fig 2C, p = 0.14, Wilcoxon signed rank test). We also tested if the median inter-category edit distance between the good and poor performer categories would be higher than the median intra-category edit distance among the individual (good or poor) performer categories (Fig 2D). These edit distances were also not significantly different (p>0.1, one-tailed signed rank test).
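The string comparison can be sketched as follows, assuming the edit distance is the standard Levenshtein distance with unit costs for insertion, deletion and substitution; whether the study normalized the distance by string length is not stated here, so no normalization is applied. The cluster labels and scan-path strings are hypothetical.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two scan-path strings (unit costs)."""
    # prev[j] holds the distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to the empty string
        for j, cb in enumerate(b, start=1):
            substitution = prev[j - 1] + (0 if ca == cb else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1]


# Hypothetical scan paths: each letter is the fixation cluster visited.
path_subject_1 = "ABCDA"
path_subject_2 = "ABDCA"
distance = edit_distance(path_subject_1, path_subject_2)
```

Because the distance tolerates insertions and deletions, it can compare scan paths of different lengths, which addresses the difficulty noted above.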

Fig 2. Scan paths and fixation maps do not distinguish good from poor performers.

A. (Left) Representative image used in the change blindness experiment (Image #6 in Data Availability link). (Right) Clustering of the fixation points based on the peak of the fitted BIC (n = 13) profile. Fixation points in different clusters are plotted in different colors. Black fixations occurred in fixation sparse regions that were not included in the clustering. Black arrows show a representative scan path–a sequence of fixation points. The character “string” representation of this scan path is denoted on the right side of the image. B. Variation in the Bayesian Information Criterion (BIC; y-axis) with clustering fixation points into different numbers of clusters (x-axis; Materials and Methods). Circles: Data points. Gray curve: Bi-exponential fit. C. Distribution of edit distances among good performers (x-axis) versus edit distances among poor performers (y-axis). Each data point denotes median edit distance for each image tested (n = 19). Other conventions are the same as in Fig 1E. D. Distribution of intra-category edit distance (y-axis), among the good or among the poor performers, versus the inter-category edit distance (x-axis), across good and poor performers. Red and blue data: intra-category edit distance for good and poor performers respectively. Each data point denotes the median for each image tested (n = 19). Other conventions are the same as in panel C. E. Same as panel C, but comparing Pearson correlations of fixation maps among good (x-axis) and poor performers (y-axis). Other conventions are the same as in panel C. F. Same as in panel D, but comparing intra- versus inter-category Pearson correlations of fixation maps. Other conventions are the same as in panel D. G. Distribution of time to first fixation within the region of change (in seconds) for good performers (x-axis) versus poor performers (y-axis). Other conventions are the same as in panel C. H. Same as in E, but comparing time to detect change (in seconds) for good versus poor performers. Other conventions are the same as in panel G.

Second, we asked if fixation “maps”–two-dimensional density maps of the distribution of fixations [23]–were different across good and poor performers. For each image, we correlated fixation maps across every pair of participants (Materials and Methods). Again, we observed no significant differences in fixation map correlations between good- and poor-performer pairs (Fig 2E, p = 0.29, Wilcoxon signed rank test), nor significant differences between intra-category (good vs. good and poor vs. poor) fixation map correlations and inter-category (good vs. poor) correlations (Fig 2F, p>0.1, one-tailed signed rank test).

Third, we asked if overall statistics of saccades were different across good and poor performers. For this, we computed the probabilities of saccades between specific fixation clusters (“domains”), ordered by the most to least fixated locations on each image (Materials and Methods). The saccade probability matrix, estimated by pooling scan paths across each category of participants, is shown in Fig 3A (average across n = 19 image pairs). Visual inspection of the saccade probability matrices revealed no apparent differences between the good and poor performers (difference in S3A Fig). In addition, we tested if we could classify between good and poor performers based on individual subjects’ saccade probability matrices. Classification accuracy with an SVM based on saccade probability matrix features (~56.67%, Fig 3B) was not significantly different from chance (p>0.1, permutation test).

Fourth, we tested whether good and poor performers differed in terms of fixated image features, as estimated with principal components analysis (Fig 3C, Materials and Methods). These fixated features typically comprised horizontal or vertical edges at various spatial frequencies, and were virtually identical between good and poor performers (Fig 3D, first six principal features for each class). We observed significant correlations across components of identical rank between good and poor performers (median r = 0.22, p<0.001, across top n = 150 components that explained ~80% of the variance). Similar correlations were obtained with fixated features obtained with the saliency map [24] (median r = 0.20, p<0.001, S3B Fig).

Fifth, we tested whether good and poor performers differed systematically in the spatial distributions of fixations relative to the change location, before change was detected. For this, we computed the frequency of fixations and the total fixation duration, based on the distance of fixation relative to the center of the change location (binned in concentric circular windows of increasing radii, in steps of 50 pixels, Materials and Methods). We observed no systematic differences in the distributions of either total fixation duration, or frequency of fixations, relative to the change location between good and poor performers (S4 Fig; p = 0.99 for fixation duration, p = 0.97 for fixation frequency, Kolmogorov-Smirnov test). In other words, the spatial distribution of fixations, relative to the change location, was similar between good and poor performers.

Finally, we tested whether good and poor performers differed in the time to first fixation on the region of change, or the time to detect changes (on successful trials). Again, we observed no significant differences in the distributions of either time to first fixation, or time to detect changes, between good and poor performers (Fig 2G and 2H; p = 0.08 for time to first fixation in change region, p = 0.28 for time to detect change, Wilcoxon signed rank test). Taken together with the previous analysis, these results indicate that poor performers fixated on areas near the change as often, and as closely, as good performers, but simply failed to detect these changes successfully.

Overall, these analyses indicate that relatively simple gaze metrics like fixation durations and saccade amplitudes predicted successful change detection. More complex metrics like scan paths, fixated image features or the spatial distribution of fixations, were not useful indicators of change detection success. In other words, “low-level” gaze metrics, rather than “high-level” scanning strategies, determined participants’ success with change detection.

A neurally-constrained model of eye movements for change detection

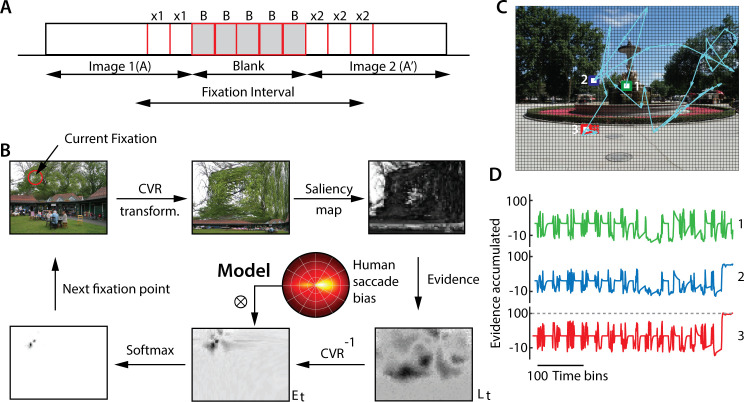

We developed a neurally-constrained model of change detection to explain these empirical trends in the data. Briefly, our model employs the Bayesian framework of Sequential Probability Ratio Testing (SPRT) [14,15] to simulate rational observer strategies when performing the change blindness task. We incorporated key neural constraints, based on known properties of stimulus encoding in the visual processing pathway, into the model. For ease of understanding, we first summarize key steps in our model’s saccade generation pipeline (Fig 4A and 4B); a detailed description is provided thereafter.

Fig 4. A Bayesian model of gaze strategies for change detection.

A. Schematic showing a typical fixation across the pair of images (A, A’) and an intervening blank. B. Detailed steps for modeling change detection (see text for details). (Clockwise from top left) At each fixation, a Cartesian variable resolution (CVR) transform is applied to mimic foveal magnification, followed by a saliency map computation to determine firing rates at each location. Instantaneous evidence for change versus no change (log-likelihood ratio, log L(t)) is computed across all regions of the image. An inverse CVR transform is applied to project the evidence back into the original image space, where noisy evidence is accumulated (sequential probability ratio test, drift-diffusion model). The next fixation point is chosen using a softmax function applied over the accumulated evidence (Et). To model human saccadic biases, a distribution of saccade amplitudes and turn angles is imposed on the evidence values prior to selecting the next fixation location (polar plot inset). C. A representative gaze scan path following model simulation (cyan arrows). Colored squares: specific points of fixation (see panel D). Grid: Fine divisions over which the image was sub-divided to facilitate evidence computation. Green (1), blue (2) and red (3) squares denote first (beginning of simulation), intermediate (during simulation) and last (change detection) fixation points, respectively. D. Evidence accumulated as a function of time for the same three representative regions as in panel C; each color and number denotes evidence at the corresponding square in panel C. When the model fixated on the green or blue squares (in panel C), the accumulated evidence did not cross the threshold for change detection. As a result, the model continued to scan the image. When the model fixated on the red square (in the change region), the accumulated evidence crossed threshold (horizontal, dashed gray line) and the change was detected.

In the model, distinct neural populations, with (noisy) Poisson firing statistics, encode the saliency of the foveally-magnified image at each region. During fixation, following each alternation (Fig 1A, either A followed by A’, or vice versa) the model computes a posterior odds ratio for change versus no change at each region and at each instant of time (Eq 1), and accumulates this ratio as “evidence” (Eq 2, Results). If the accumulated evidence exceeds a predetermined (positive) threshold for change detection at the location of fixation, the model is deemed to have detected the change. If the accumulated evidence dips below a predetermined (negative) threshold for “no change” at the fixated location, the observer terminates the current fixation. The next fixation location is chosen based on a stochastic (softmax) decision rule (Eq 3), with the probability of a saccade to a region increasing with the accumulated evidence at that region. Note that both images, odd and even, must be included in these computations to generate each saccade. The model continues scanning over the images in the sequence until either the change is detected or the trial duration has elapsed (as in our experiment), whichever occurs earlier.
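The decision logic described above can be sketched in a few lines. This is an illustration under stated assumptions, not the model's implementation: the softmax form (exponentiated evidence divided by a temperature) is the textbook one and may differ from Eq 3, the saccade amplitude and turn-angle biases are omitted, and the function names are hypothetical. The threshold values are the defaults listed in Table 1.

```python
import math
import random


def softmax(evidence, temperature):
    """Textbook softmax over accumulated evidence (assumed form of Eq 3)."""
    peak = max(evidence)  # subtract the maximum for numerical stability
    weights = [math.exp((e - peak) / temperature) for e in evidence]
    total = sum(weights)
    return [w / total for w in weights]


def fixation_outcome(evidence_at_fixation, change_threshold=100.0, no_change_threshold=-20.0):
    """Classify the state of the current fixation (thresholds from Table 1)."""
    if evidence_at_fixation > change_threshold:
        return "change detected"
    if evidence_at_fixation < no_change_threshold:
        return "shift gaze"
    return "keep fixating"


def next_fixation(evidence, temperature, rng):
    """Sample the index of the next fixated region from the softmax."""
    probabilities = softmax(evidence, temperature)
    return rng.choices(range(len(evidence)), weights=probabilities, k=1)[0]


# Hypothetical accumulated evidence for three regions.
evidence = [0.0, 1.0, 2.0]
probabilities = softmax(evidence, temperature=1.0)
chosen = next_fixation([0.0, 0.0, 50.0], temperature=0.01, rng=random.Random(0))
```

A low temperature makes the rule nearly deterministic (the region with the most evidence is almost always chosen), whereas a high temperature spreads saccades more evenly across regions.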

Neural representation of the image pair

At the onset of each fixation, the image was magnified foveally based on the center of fixation [25], with the Cartesian Variable Resolution (CVR) transform [26] (Materials and Methods; S5 Fig). Next, a saliency map was computed with the frequency-tuned salient region detection method [24] for each image of each pair. Saliency computation was performed on the foveally-magnified image, rather than on the original image, to mimic the sequence of these two computations in the visual pathway; we denote these foveally-transformed saliency values as S and S’, for each image (A) and its altered version (A’), respectively.

Each image was partitioned into a uniform 72x54 grid of equally-sized regions. We index each region in each image pair as A1, A2 …, AN and A’1, A’2…, A’N, respectively (N = 3888). Distinct, non-overlapping, neural populations encoded the saliency value (Si, Si’) in each region of each image. While neural receptive fields in the brain typically overlap, we did not model this overlap here, for reasons of computational efficiency (Materials and Methods). The firing rates for each neural population were generated from independent Poisson processes. The average firing rate for each region, λi, was modeled as a linear function of the average saliency of the image patch falling within that region, $\langle S_i^k \rangle$, where $S_i^k$ is the saliency value of the kth pixel in region Ai, and the angle brackets denote an average across all pixels in that region. In other words, when the change between images A and A’ occurred in region i, the difference in firing rates between λi and λ’i was proportional to the difference in saliency values across the change.

We modeled each change detection trial (total duration T, Table 1) as comprising a large number of time bins of equal duration (Δt, Table 1). At every time bin, the number of spikes from each neural population was drawn from a Poisson distribution whose mean was determined by the average saliency of all pixels within the region. At the end of each fixation, the model either indicated its detection of change, thereby terminating the simulation, or shifted gaze to a new location. The precise criteria for signaling change versus shifting gaze are described next.
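As an illustration of this encoding stage, the sketch below maps a region's average saliency to a firing rate and draws Poisson spike counts per time bin. Two points are assumptions rather than statements from the text: saliency is taken to be normalized to [0, 1], and the linear map runs from the minimum to the maximum firing rate of Table 1. The function names are hypothetical, and the Poisson sampler is Knuth's multiplication method, used only to keep the example free of third-party dependencies.

```python
import math
import random

LAMBDA_MIN, LAMBDA_MAX = 5.0, 120.0  # firing rate bounds, spikes/bin (Table 1)


def firing_rate(pixel_saliencies):
    """ASSUMED linear map from mean saliency in [0, 1] to a bounded firing rate."""
    mean_saliency = sum(pixel_saliencies) / len(pixel_saliencies)
    return LAMBDA_MIN + (LAMBDA_MAX - LAMBDA_MIN) * mean_saliency


def poisson_sample(rate, rng):
    """Draw one Poisson variate with Knuth's multiplication method."""
    limit = math.exp(-rate)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def spike_count(rate, n_bins, rng):
    """Total spikes emitted by one population over n_bins time bins."""
    return sum(poisson_sample(rate, rng) for _ in range(n_bins))


rng = random.Random(1)
# Hypothetical saliency values for the pixels of one region.
rate = firing_rate([0.1, 0.2, 0.15, 0.15])
spikes_first_image = spike_count(rate, n_bins=10, rng=rng)
```

Because the counts are stochastic, two presentations of an identical region generally yield different spike totals, which is the reason a simple difference of counts cannot serve as direct evidence of change.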

Table 1. Model parameters and their default values.

| Parameter | Symbol | Value | Description |
|---|---|---|---|
| Time bin | Δt | 25 ms | Unit of time for the model |
| Image duration | τ | 10Δt | Duration for which each image or blank is shown |
| Trial duration | -- | 60 s | Total duration of trial |
| Temperature | T | 0.01 | Modulates stochasticity of next saccade |
| Decay factor | γ | 0.004 | Decay of the evidence with time (inversely related) |
| Decay scale | β | 4.0 grid units | Spatial range of evidence decay |
| Noise scale | W | U(-5, 5) | Models noise in evidence accumulation |
| Prior odds ratio | P | 0.1 | Prior odds of change to no change |
| Change threshold | Fc | 100 | Threshold to determine change |
| “No change” threshold | Fn | -20 | Threshold to determine “no change” |
| Threshold decay | ζ | 0 | Decay rate of no-change threshold |
| Foveal magnification factor | FMF | 0.05 | Magnification of the fixated region on the fovea according to the CVR transform |
| Firing rate bounds | λmin, λmax | 5, 120 spikes/bin | Minimum and maximum firing rates |
| Firing rate prior | μf | 3 spikes/bin | Expected difference in firing rates |

For ease of description, we depict a typical fixation in Fig 4A. The first image of the pair (say, A) persists for m time bins from the onset of the current fixation. Next, a blank epoch occurs from time bin m+1 to p. Following this, the second image of the pair (A’) appears for an interval from time bin p+1 to n, until the end of fixation. We denote the number of spikes produced by neural population i at time t by $x_i^t$. Xi and Yi represent the total number of spikes produced by neural population i when fixating at the first and second images respectively, during the current fixation. Thus, $X_i = \sum_{t=1}^{m} x_i^t$ and $Y_i = \sum_{t=p+1}^{n} x_i^t$. We denote the number of spikes in the blank period as $B_i = \sum_{t=m+1}^{p} x_i^t$. For simplicity, we assume that no spikes occurred during the blank period (Bi = 0), although this is not a strict requirement, as the key model computations rely on relative rather than absolute firing rates. In sum, the observer must perform change detection with a noisy neural representation derived from the saliency map of the foveally-magnified image.

Modeling change detection with an SPRT rule

The observer faces two key challenges with change detection in this change blindness task. First, were the images not interrupted by a blank, a simple pixel-wise difference of firing rates over successive time epochs would suffice to localize the change. For example, computing |⟨Xi⟩−⟨Yi⟩| (where |x| denotes the absolute value of x, and angle brackets denote average over many time bins), and testing if this difference is greater than zero at any region i, suffices to identify the location of change. On the other hand, such an operation does not suffice when images are interleaved with a blank, as in change blindness tasks. For example, a pixel-wise subtraction of each image from the blank (|⟨Xi⟩−⟨Bi⟩| or |⟨Yi⟩−⟨Bi⟩|) yields large values at all locations of the image. Therefore, when images are interrupted by a blank, information about the first image must be maintained across the blank interval and compared with the second image following the blank, for detecting the change. Second, even if no blank occurred between the images, a pixel-wise differencing operation would not suffice, due to the stochasticity of the neural representation: a non-zero difference in the number of spikes from a particular region, i (|Xi−Yi|) is not direct evidence of change at that location. In other words, the observer’s strategy for this change blindness task must take into account both the occurrence of the blank between the two images and the stochasticity in the Poisson neural representation of the image, for successfully detecting changes.

To address both of these challenges, we adopt an SPRT-based search rule. First, we compute the difference in the number of spikes between the first and second image at each region Ai in the image. We denote the random variable indicating this difference by Zi = Xi−Yi, and its value at the end of time bin t as z. We then compute a likelihood ratio for change (C) versus no change (N), as:

$$L_i(t) = \frac{P(Z_i = z \mid C)}{P(Z_i = z \mid N)} \tag{1}$$

Specifically, the observer tests if the observed value of Zi was more likely to arise from two generating processes (Change, C), or could have arisen from a single, underlying generating process (No Change, N). This computation is performed at each time step following the onset of the second image (t>p) of each pair. Details of computing this likelihood ratio for Poisson processes are provided in the Materials and Methods; for our model this computation involves an infinite sum, which we calculate using Bessel functions and efficient analytic approximations [27]. The functional form of the log-likelihood ratio resembles a piecewise linear function of firing rate differences (S6A and S6B Fig, see next section), which can be readily achieved by the output of simple neural circuits [28–30].
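As a rough illustration of the kind of computation involved, the sketch below evaluates a log-likelihood ratio for the spike-count difference Zi under two simplifying assumptions that are ours, not the paper's: each hypothesis is a single pair of expected spike counts (a point hypothesis, rather than the prior-weighted form described in the Materials and Methods), and the infinite sum is truncated numerically rather than evaluated with Bessel functions. The difference of two independent Poisson counts follows a Skellam distribution, whose probability mass function is exactly such an infinite series. The function names and parameter values are hypothetical.

```python
import math


def skellam_log_pmf(z, mu1, mu2):
    """log P(Z = z) for Z = X - Y with X ~ Poisson(mu1), Y ~ Poisson(mu2).

    Evaluates the series
        P(Z = z) = exp(-(mu1 + mu2)) * sum_k mu1**(k + z) * mu2**k / ((k + z)! * k!)
    over k >= max(0, -z), truncated well past its largest term and summed in
    log space for numerical stability. Requires integer z and mu1, mu2 > 0.
    """
    k0 = max(0, -z)
    # Terms decay geometrically once k exceeds mu1 + mu2, so this span is generous.
    span = int(mu1 + mu2 + 10.0 * math.sqrt(mu1 + mu2)) + 50
    log_terms = [
        (k + z) * math.log(mu1) + k * math.log(mu2)
        - math.lgamma(k + z + 1) - math.lgamma(k + 1)
        for k in range(k0, k0 + span)
    ]
    peak = max(log_terms)
    series = sum(math.exp(t - peak) for t in log_terms)
    return -(mu1 + mu2) + peak + math.log(series)


def change_log_likelihood_ratio(z, mean_no_change, mean_first, mean_second):
    """log [P(Z = z | C) / P(Z = z | N)] for point hypotheses (an assumption).

    Under N both images produce the same expected count (mean_no_change);
    under C the expected counts are mean_first and mean_second.
    """
    log_p_change = skellam_log_pmf(z, mean_first, mean_second)
    log_p_no_change = skellam_log_pmf(z, mean_no_change, mean_no_change)
    return log_p_change - log_p_no_change
```

With expected counts of 10 under no change and 10 versus 13 under change, a difference of z = 0 favors no change (negative log ratio), whereas z = -10 favors change (positive log ratio).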

Second, the observer integrates the “evidence” for change at location Ai, by accumulating the logarithm of the likelihood ratio log(Li(t)), along with the log of the prior odds ratio (Pi), as in the SPRT framework.

$$E_i(t) = (1 - \gamma_i)\,E_i(t-1) + \log L_i(t) + \log P_i + W_i(t) \tag{2}$$

where γi ∈ [0,1] is a decay parameter for evidence accumulation at location Ai, which simulates “leaky” evidence accumulation [15,31], with larger values of γi indicating greater “leak”; Pi is the prior odds ratio of change to no change (P(C)/P(N)) at each location; and Wi(t) represents white noise, sampled from a uniform distribution (Table 1), to mimic noisy evidence accumulation [32]. Here we assume that the prior ratio is constant across time and space, but nonetheless study the effect of varying prior ratios on model performance (next section). Both of these features–leak and noise in evidence accumulation–are routinely incorporated in models of human decision-making [31], and are grounded in experimental observations in brain regions implicated in decision-making [15]. Evidence accumulation occurs in the original, physical space of the image, and not in the CVR transformed space (Fig 4B). Note that this formulation of an SPRT decision involves evaluating and fully integrating the logarithm of the Bayesian posterior odds ratio (product of the prior odds and likelihood ratio, Pi × Li(t)).
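The accumulation rule described here can be sketched for a single region as follows. This is a minimal illustration, assuming one plausible reading of the leaky update: at each time bin the previous evidence is scaled by (1 − γ), and the log-likelihood ratio, the log prior odds and a uniform noise sample are added. The function names, the seeded random generator and the exact way the terms combine are our assumptions; the default values are taken from Table 1.

```python
import math
import random


def accumulate_evidence(log_likelihood_ratios, gamma=0.004, prior_odds=0.1,
                        noise_half_width=5.0, initial_evidence=0.0, rng=None):
    """Return the evidence trace E(t) for one region (hypothetical helper).

    Each step applies E(t) = (1 - gamma) * E(t - 1) + log L(t) + log P + W(t),
    with W(t) drawn uniformly from [-noise_half_width, +noise_half_width].
    """
    rng = rng or random.Random(0)  # seeded only so the sketch is reproducible
    evidence = initial_evidence
    trace = []
    for llr in log_likelihood_ratios:
        noise = rng.uniform(-noise_half_width, noise_half_width)
        evidence = (1.0 - gamma) * evidence + llr + math.log(prior_odds) + noise
        trace.append(evidence)
    return trace


def first_crossing(trace, change_threshold=100.0, no_change_threshold=-20.0):
    """Return (time_bin, decision) for the first threshold crossing, if any."""
    for t, evidence in enumerate(trace):
        if evidence >= change_threshold:
            return t, "change"
        if evidence <= no_change_threshold:
            return t, "no change"
    return None, "undecided"
```

Setting gamma to 1 makes each entry of the trace depend only on the current time bin (no memory), whereas gamma of 0 yields a running sum (perfect integration).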

Evidence accumulation is performed for each region in the image; Ei(t) for each region is calculated independently of the other regions. If the accumulated evidence Ei(t) crosses a positive threshold, Fc (Table 1), the observer stops scanning the image and region Ai, at which the threshold Fc was crossed, is declared the “change region”. If, on the other hand, the accumulated evidence crosses a negative (no-change) threshold Fn (Table 1), the observer terminates the current fixation and determines the next region to fixate, Ak, based on a softmax probability function:

$$p(A_k) = \frac{\exp(E_k / T)}{\sum_{i=1}^{N} \exp(E_i / T)} \tag{3}$$

where Ei is the evidence value for region i, N is the number of regions in the image, and T is a temperature parameter which controls the stochasticity of the saccade (decision) policy (Materials and Methods; see also next section). For selecting the next point of gaze fixation, we also matched directional saccadic biases typically observed in human data [33] (Fig 4B, described in Materials and Methods section on “Comparison of model performance with human data”). In some simulations we also decayed the no-change threshold (Fn) with different decay rates (ζ; Table 1) and studied its effect on model performance. Because we observed virtually no false alarms (signaling a no-change location as change) in our experimental data (0.06% of all trials; Materials and Methods) we did not model decay in the change threshold (Fc), which would have yielded significantly more false alarms.
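A softmax saccade policy of this kind can be sketched as below. The sketch covers only the temperature-controlled choice among regions; it omits the directional saccadic biases mentioned above, and the function names and toy evidence values are hypothetical.

```python
import math
import random


def softmax_probabilities(evidence, temperature=0.01):
    """Probability of fixating each region next, given its evidence value."""
    scaled = [e / temperature for e in evidence]
    peak = max(scaled)  # subtract the maximum to avoid overflow in exp
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def choose_next_region(evidence, temperature=0.01, rng=None):
    """Sample the index of the next region to fixate."""
    rng = rng or random.Random()
    probabilities = softmax_probabilities(evidence, temperature)
    return rng.choices(range(len(evidence)), weights=probabilities, k=1)[0]
```

At the default temperature of 0.01 (Table 1) the policy is nearly deterministic and selects the region with the highest evidence; at a very high temperature the probabilities become nearly uniform, which corresponds to random search.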

Note that although we have not explicitly modeled inhibition-of-return (IOR), this feature emerges naturally from the evidence accumulation rule in the model. Following each fixation, the accumulated evidence for no-change decays gradually (Eq 2), thereby reducing the probability that subsequent fixations occur, immediately, at the erstwhile fixated location. This feature encourages the model to explore the image more thoroughly. We illustrate gaze shifts by the model in an exemplar change blindness trial (Fig 4C and 4D). The model’s scan path is indicated by cyan arrows showing a sequence of fixations, ultimately terminating at the change region. When the model fixated, initially, on regions with no change (Fig 4C, squares with green/1 or blue/2 outline), transient evidence accumulation occurred either favoring a change (positive fluctuations) or favoring no change (negative fluctuations) (Fig 4D, green and blue traces, respectively). In each case, evidence decayed to baseline values rapidly during the blank epochs, when no new evidence was available, and the accumulated evidence did not cross threshold. Finally, when the model fixated on the change region (Fig 4C, square with red outline/3), evidence for a change continued to accumulate, until a threshold-crossing occurred (Fig 4D, red trace, threshold: dashed gray line). At this point, the change was deemed to have been detected, and the simulation was terminated.

Model trends resemble qualitative trends in human experimental data

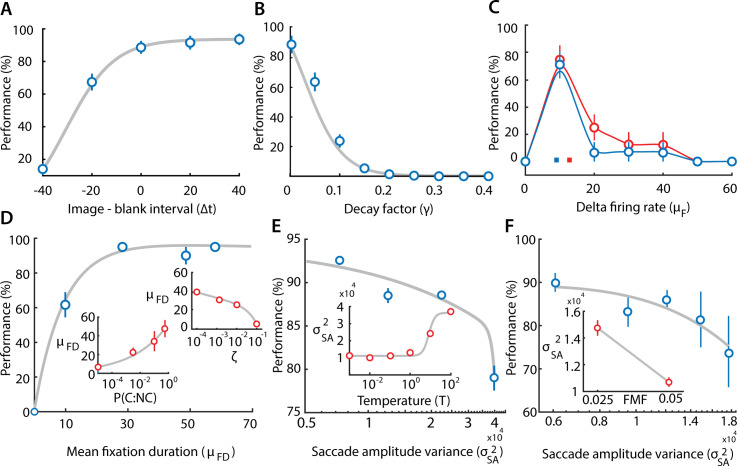

We tested the effect of key model parameters on change detection performance, to test for qualitative matches with our experimental findings. We simulated the model and measured change detection performance by varying each model parameter in turn (Table 1, default values), while keeping all other parameters fixed at their default values. For these simulations we employed the frequency-tuned salient region detection method [24] to generate the saliency map. The first three simulations (Fig 5A–5C) tested whether the model performed as expected based on its inherent constraints. The last three simulations (Fig 5D–5F) evaluated whether emergent trends in the model matched empirical observations regarding gaze metrics in our study (Fig 1E and 1F). The results reported represent averages over 5–10 repetitions of each simulation.

Fig 5. Effect of model parameters on change detection success.

A. Change in model performance (success rates, % correct) with varying the relative interval of the images and blanks, measured in units of time bins (Δt = 25 ms/time bin; Table 1), while keeping the total image+blank interval constant (at 50 time bins). Positive values on the x-axis denote larger image intervals, as compared to blanks, and vice versa, for negative values. Blue points: Data; gray curve: sigmoid fit. B. Same as in panel (A), but with varying the maximum decay factor (γ; Eq 2). Curves: Sigmoid fits. C. Same as in panel (A) but with varying the firing rate prior (μf) for image pairs with the lowest (blue; bottom 33rd percentile) and highest (red; top 33rd percentile) magnitudes of firing rate changes. Curves: Smoothing spline fits. Colored squares: μf corresponding to the center of area of the two curves. D. Same as in panel (A), but with varying the mean fixation duration (μFD; measured in time bins, Δt = 25 ms/time bin). (Inset; lower) Variation of μFD with prior ratio of change to no change (P(C:NC)). (Inset; upper) Same as lower inset but with varying threshold decay rate ζ (Table 1). E. Same as in panel (A), but with varying saccade amplitude variance (σ²SA). (Inset) Variation of σ²SA with the softmax function temperature parameter (T) (see text for details). F. Same as in panel (A), but with varying saccade amplitude variance (σ²SA). (Inset) Variation of σ²SA with the foveal magnification factor (FMF). Other conventions in B-F are the same as in panel A. Error bars (all panels): s.e.m.

First, we tested the effect of varying the relative durations of the image and the blank, while keeping their overall presentation duration (image+blank) constant. Note that no new evidence accrues during the blanks, whereas decay of accumulated evidence continues. Therefore, extending the duration of the blanks, relative to the image, should cause a substantial deterioration in the performance of the model. The simulations confirmed this hypothesis: performance deteriorated (or improved) systematically with decreasing (or increasing) durations of the image relative to the blank (Fig 5A).

Second, we tested the effect of varying the magnitude of the decay factor (γ, Table 1). Decreasing γ prolongs the (iconic) memory for evidence relevant to change detection; γ = 1 represents no memory (immediate decay; no integration) of past evidence, whereas γ = 0 indicates reliable memory (zero decay; perfect integration) of past evidence (see Eq 2). Model success rates were around 80% for γ = 0, and performance degraded systematically with increasing γ (Fig 5B); in fact, the model was completely unable to detect change for γ values greater than around 0.2, suggesting the importance of the transient memory of the image across the blank for successful change detection.
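A back-of-the-envelope calculation suggests why large decay factors could prevent threshold crossing altogether. It is a sketch under assumptions of ours: the leaky update takes the form E(t) = (1 − γ)E(t − 1) + d, the mean per-bin input d (log-likelihood ratio plus log prior odds) is constant while the change region is fixated, and noise is ignored. Starting from E(0) = 0,

$$E(t) = d \sum_{s=0}^{t-1} (1-\gamma)^{s} = d\,\frac{1 - (1-\gamma)^{t}}{\gamma} \;\xrightarrow[t \to \infty]{}\; \frac{d}{\gamma}, \qquad \text{so crossing } F_c \text{ requires } d > \gamma F_c .$$

With Fc = 100 (Table 1), the default γ = 0.004 requires a mean input of only d > 0.4 per time bin, whereas γ = 0.2 requires d > 20 per time bin. During a blank of b time bins no input arrives (d = 0), and the evidence shrinks by a factor of (1 − γ)^b, which is negligible for small γ but severe for large γ.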

Third, we tested the effect of varying μf, the prior on the magnitude of the difference between the firing rates (across the image pair) in the change region (Fig 5C). For this, we divided images into two extreme subsets (highest and lowest 1/3rd), based on a tercile (three-way) split of firing rate magnitude differences. The performance curve for the highest tercile (largest firing rate differences in change region) of images was displaced rightward relative to the performance curve for the lowest tercile (smallest firing rate differences). Specifically, μf corresponding to the center of area of the performance curves was systematically higher for the images with higher firing rate differences (Fig 5C, colored squares).

Fourth, we tested the effect of varying mean fixation duration (μFD)–a key parameter identified in this study as being predictive of success with change detection. The mean fixation duration is not a parameter of the model. We, therefore, varied the mean fixation duration, indirectly, by varying the prior odds ratio (P) and the decay rate (ζ) of the no-change threshold (Fn). A lower prior odds ratio of change to no-change biases evidence accumulation toward the no-change threshold, leading to shorter fixations (and vice versa; Fig 5D, lower inset). On the other hand, a higher decay rate of the no-change threshold leads to a greater probability of bound crossing of the evidence in the negative direction, again leading to shorter fixations (and vice versa; Fig 5D; upper inset). In either case, we found that decreasing (increasing) the mean fixation duration produced systematic deterioration (improvement) in the performance of the model (Fig 5D). These results recapitulate trends in the human data, indicating that increased fixation duration may be a key gaze metric indicating change detection success.

Fifth, we tested the effect of varying the saccade amplitude variance (σ²SA)–the other key parameter we had identified as being predictive of change detection success. Again, because the variance of the saccade amplitude is not a parameter of the model, we varied this, indirectly, by varying the temperature (T) parameter in the softmax function: a higher temperature value leads to random sampling from many regions of the image, thereby increasing σ²SA, whereas a low temperature value leads to more deterministic sampling, thereby reducing σ²SA (Fig 5E, inset). With increasing saccade variance, performance dropped steeply (Fig 5E).
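The link between temperature and saccade amplitude variance can be illustrated with a toy calculation. The sketch below computes, for a single saccade from a fixed fixation point, the exact variance of the saccade amplitude under a softmax policy; the 5 × 5 grid, the evidence map and the function name are hypothetical and serve only to show the direction of the effect, not to reproduce Fig 5E.

```python
import math


def saccade_amplitude_variance(evidence, positions, fixation, temperature):
    """Variance of the next saccade's amplitude under a softmax policy.

    evidence[k] is the evidence at region k, positions[k] its (x, y) location
    in grid units, and fixation the current gaze position.
    """
    scaled = [e / temperature for e in evidence]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    probabilities = [w / total for w in weights]
    amplitudes = [math.dist(p, fixation) for p in positions]
    mean = sum(p * a for p, a in zip(probabilities, amplitudes))
    return sum(p * (a - mean) ** 2 for p, a in zip(probabilities, amplitudes))


# Toy example: evidence peaks at grid location (3, 1); gaze is at the center.
positions = [(x, y) for x in range(5) for y in range(5)]
evidence = [-math.dist(p, (3, 1)) for p in positions]
low_t = saccade_amplitude_variance(evidence, positions, (2, 2), temperature=0.01)
high_t = saccade_amplitude_variance(evidence, positions, (2, 2), temperature=1e4)
```

In this toy setting `low_t` is essentially zero, because the policy always targets the same region, while `high_t` approaches the variance of distances from the fixation point to uniformly sampled grid locations.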

Finally, we also explored the effect of varying the foveal magnification factor (FMF) across a two-fold range. Saccade amplitude variance decreased robustly as the FMF increased (Fig 5F, inset) (see Discussion). As with the previous simulation, we observed a systematic decrease in performance with increasing saccade amplitude variance (Fig 5F), again recapitulating trends in the human data.

Taken together, these results show that gaze metrics that were indicative of change detection success in the change blindness experiment also systematically influenced change detection performance in the model. Specifically, the two key metrics indicative of change detection success in humans, namely, fixation duration and variance of saccade amplitude, were also predictive of change detection success in the model. These effects could be explained by changing specific, latent parameters in the model (e.g. decay rate of the no-change threshold, prior ratios, foveal magnification factors). Our model, therefore, provides putative mechanistic links between specific gaze metrics and change detection success in the change blindness task.

Model performance mimics human performance quantitatively

In addition to these qualitative trends, we sought to quantify similarities between model and human performance in this change blindness task. For this analysis, we modeled biases inherent in human saccade data (S7 Fig) by matching key saccade metrics in the model–amplitude and turn angle of saccades–with human data (Figs 6A and 7A, r = 0.822, p<0.01; see Materials and Methods section on “Comparison of model performance with human data”). For these simulations, and subsequent comparisons with a state-of-the-art deep neural network model (DeepGaze II) [17] we used the saliency map generated by the DeepGaze network rather than the frequency-tuned salient region detection algorithm, so as to enable a direct comparison between our model and DeepGaze.

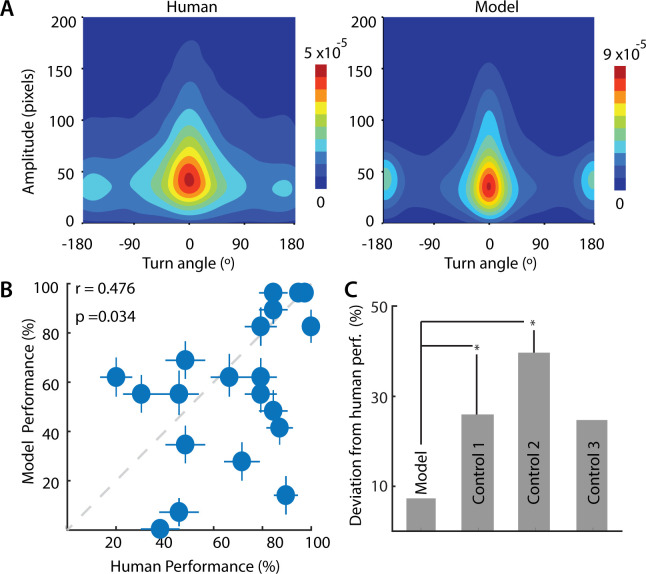

Fig 6. Comparison between human and model performance.

A. (Left) Joint distribution of saccade amplitude and saccade turn angle for human participants (averaged over n = 39 participants). Colorbar: Hotter colors denote higher proportions. (Right) Same as in the left panel, but for model, averaged over n = 40 simulations. B. Correlation between change detection success rates for human participants (x-axis) and the model (y-axis). Each point denotes average success rates for each of the 20 images tested, across n = 39 participants (human) or n = 40 iterations (model). Error bars denote standard error of the mean across participants (x-axis) or simulations (y-axis). Dashed gray line: line of equality. C. Average absolute deviation from human performance of the sequential probability ratio test (SPRT) model (Model, leftmost bar), for a control model in which evidence decayed rapidly (Control 1, γ = 1; second bar from left), for a control model in which the stopping rule was based on the derivative of the posterior odds ratio (Control 2; third bar from left), or for a control model which employed a random search strategy (Control 3, T = 10⁴; rightmost bar). p-values denote significance levels following a paired signed rank test, across n = 20 images (*p < 0.05).

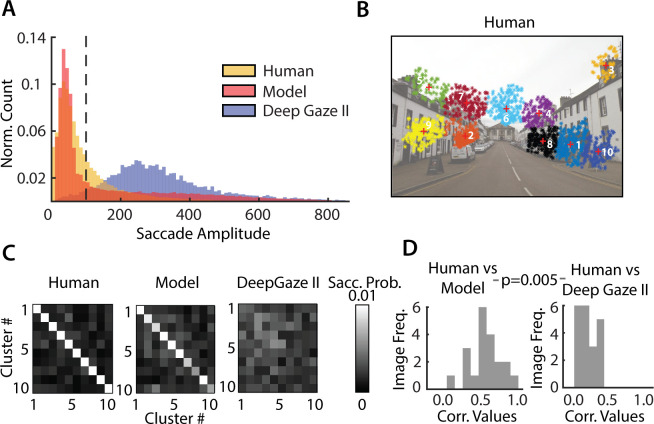

Fig 7. Comparison between human, model and Deep Gaze II performance.

A. Distribution of saccade amplitudes for human participants (yellow), sequential probability ratio test (SPRT) model (red) and the Deep Gaze II neural network (blue). B. Top 10 clusters of human fixations, ranked by cumulative fixation duration (rows/columns 1–10). Increasing indices correspond to progressively lower cumulative fixation duration. C. Saccade probability matrix (left) averaged across all images and all participants, (middle) for simulations of the sequential probability ratio test (SPRT) model, and (right) for the Deep Gaze II neural network. D. Distribution, across images, of the correlations (r-values) of saccade probability matrices between human participants and sequential probability ratio test (SPRT) model (left) and human participants and Deep Gaze II neural network (right). p-value indicates pairwise differences in these correlations across n = 20 images.

As a first quantitative comparison, we tested whether image pairs in which human observers found it difficult to detect changes (S2C Fig) were also challenging for the model. For this, we compared the model’s success rates across images with observers’ success rates in the change blindness experiment. Remarkably, the model’s success rates, averaged across 40 independent runs, correlated significantly with human observers’ average success rates (Fig 6B, r = 0.476, p = 0.034, robust correlations across n = 20 images).

We compared the Bayesian SPRT search rule, as specified in our model, against three alternative control models, each with a different search strategy or stopping rule: (i) a model in which evidence decayed rapidly, so that the decision to signal change was based on the instantaneous posterior odds ratio alone; (ii) a model in which the stopping rule was based on crossing a threshold “rate of change” of the posterior odds ratio, and (iii) a model that employed a random search strategy (Materials and Methods). For each of these models, the average absolute difference in performance from the human data was higher than that of the original model, and significantly so for two of the three control models (Fig 6C; p<0.05; Wilcoxon signed-rank test). Moreover, none of the control models’ success rates correlated significantly with human observers’ success rates (r = 0.09–0.42, p>0.05 for all three control models; robust correlations).

Finally, we tested whether model gaze patterns would match human gaze patterns beyond that achieved by state-of-the-art fixation prediction with a deep neural network: DeepGaze II [17]. First, we quantified human gaze patterns by computing the probability of saccades pair-wise among the top 10 clusters with the largest number of fixations (e.g. Fig 7B) for each image. We then compared these human saccade probability matrices (Fig 7C, left) with those derived from simulating the model (Fig 7C, middle) as well as with those generated by the DeepGaze network (Fig 7C, right). For the latter, saccades were simulated using the same softmax rule as employed in our model (Eq 3) along with inhibition-of-return [34] (Materials and Methods); in addition, for each image, we identically matched the distribution of fixation durations between DeepGaze and our model (Materials and Methods).
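The saccade probability matrix described here can be sketched as a normalized transition count between fixation clusters. The sketch assumes that each fixation has already been assigned to one of the top clusters (or to `None` if it falls outside them), and that the matrix is normalized so that all entries sum to one; both choices, and the function names, are our assumptions. For comparing two matrices it uses a plain Pearson correlation in place of the robust correlation used in the paper.

```python
import math


def saccade_probability_matrix(cluster_sequence, n_clusters=10):
    """Joint probability of saccades from cluster i (row) to cluster j (column).

    cluster_sequence lists, in temporal order, the cluster index of each
    fixation, or None for fixations outside the top clusters.
    """
    counts = [[0] * n_clusters for _ in range(n_clusters)]
    for source, target in zip(cluster_sequence, cluster_sequence[1:]):
        if source is None or target is None:
            continue  # skip saccades that start or end outside the top clusters
        counts[source][target] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        return [[0.0] * n_clusters for _ in range(n_clusters)]
    return [[c / total for c in row] for row in counts]


def matrix_correlation(matrix_a, matrix_b):
    """Pearson correlation between the entries of two equally sized matrices."""
    a = [v for row in matrix_a for v in row]
    b = [v for row in matrix_b for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)
```

One such matrix would be computed per image for the human data and for each model, and the per-image correlations then compared across images.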

The model’s saccade probability matrix (Fig 7C, middle) closely resembled the human saccade probability matrix (Fig 7C, left), indicating that the model was able to mimic human saccade patterns closely. On the other hand, the DeepGaze saccade matrix (Fig 7C, right) deviated significantly from the human saccade probability matrix. Confirming these trends, we observed significantly higher correlations between the human saccade probabilities and our model’s saccade probabilities (Fig 7D, left) as compared to those with DeepGaze’s saccade probabilities (Fig 7D, right) (human-SPRT model: median r = 0.51, human-DeepGaze II: median r = 0.14; p<0.01 for significant difference in correlation values across n = 20 images, signed rank test). These results were robust to the underlying saliency map in our SPRT model: replacing DeepGaze’s saliency map with the frequency-tuned salient region detection method yielded nearly identical results (S8A and S8B Fig).

The chief reason for these differences was readily apparent upon examining the saccade amplitude distributions across the human data, our SPRT model and DeepGaze: whereas the human and model distributions contained many short saccades, the DeepGaze distribution contained primarily long saccades (Fig 7A). Consequently, we repeated the comparison of saccade probabilities limiting ourselves to the range of saccade amplitudes in the DeepGaze model. Again, we found that our model’s saccade probabilities were better correlated with human saccade probabilities (S8C and S8D Fig) (human-SPRT model: median r = 0.29, human-DeepGaze II: median r = 0.10; p<0.001). We propose that these differences occurred because DeepGaze saccades are generated based on relative saliencies of different regions across the image, whereas saliency computation, per se, may be insufficient to model human saccade strategies in change blindness tasks or, in general, in change detection tasks.

In summary, change detection success rates were robustly correlated between human participants and the model. Moreover, our model outperformed a state-of-the-art deep neural network in predicting gaze shifts among the most probable locations of human gaze fixations in this change blindness task.

Discussion

The phenomenon of change blindness reveals a remarkable property of the brain: despite the apparent richness of visual perception, the visual system encodes our world sparsely. Stimuli at locations to which attention is not explicitly directed are not effectively processed [4]. Even salient changes in the visual world sometimes fail to capture our attention and remain undetected. Visual attention, therefore, plays a critical role in deciding the nature and content of information that is encoded by the visual system.

In a laboratory change blindness experiment, we observed that participants varied widely in their ability to detect changes. These differences cannot be directly attributed to participants’ inherent change detection abilities. Nevertheless, a recent study evaluated test-retest reliability in change blindness tasks, and found that observers’ change detection performance was relatively stable over periods of 1–4 weeks [35]. In our study, participants whose fixations lasted marginally longer, on average, and whose saccades were less spatially variable, were best able to detect changes. Given the intricate link between mechanisms for directing eye-movements and those governing visual attention [36–38], our results suggest the hypothesis that spatial attention shifts more slowly in time (higher fixation durations), and less erratically in space (lower saccade variance), in order to enable participants to detect changes effectively.

To explain our experimental observations mechanistically, we developed, from first principles, a neurally-constrained model based on the Bayesian framework of sequential probability ratio testing [15,31]. Such SPRT evidence accumulation models have been widely employed in modelling human decisions [31], and also appear to have a neurobiological basis [15]. In our model, we incorporated various neural constraints including foveal magnification, saliency maps, Poisson statistics in neural firing and human saccade biases. Even with these constraints, the model was able to faithfully reproduce key trends in the human change detection data, both qualitatively and quantitatively (Figs 5 and 6). The model’s success rates correlated with human success rates, and the model reproduced key saccade patterns in human data, outperforming competing control models (Figs 6B and 6C, 7C and S8).

On the one hand, our study follows a rich literature on human gaze models, that fall, loosely, into two classes. The first class of “static” models use information in visual saliency maps [23,37,39] to predict gaze fixations. These saliency models, however, do not capture dynamic parameters of human eye fixations, which are important for understanding strategies underlying visual exploration in search tasks, like change blindness tasks. The second class of “dynamic” models seek to predict the temporal sequence of gaze shifts [40–43]. Nevertheless, these approaches were developed for free-viewing paradigms, and comparatively few studies have focused on gaze sequence prediction during search tasks [44,45]. On the other hand, several previous studies have developed algorithms to address the broader problem of “change point” detection [46–48]. Yet, none of these algorithms are neurally-constrained (e.g. foveal magnification, Poisson statistics), and none models gaze information or saccades. To the best of our knowledge, ours is the first neurally-constrained model for gaze strategies in change blindness tasks, and developing and validating such a model is a central goal of this study.

Specifically, our model outperformed a state-of-the-art deep neural network (DeepGaze II), in terms of predicting saccade patterns in this change blindness task. Yet, a key difference must be noted when comparing our model with DeepGaze. Our model relies on a decision rule based on posterior odds for generating saccades: For this, it must compare evidence for change versus no change across the two images. In our simulations, in contrast, the DeepGaze model generates saccades independently on the two images, without comparing them. Based on these simulations, we found that our model’s gaze patterns provided a closer match to human data compared to gaze patterns from DeepGaze (Figs 7C and 7D and S8). Because DeepGaze is a model tailored for predicting free-viewing saccades, this comparison serves only to show that even a state-of-the-art free-viewing saliency prediction algorithm is not sufficient to accurately predict gaze patterns in the context of a change detection (or change blindness) task. In other words, saccades made with the goal of detecting changes are likely to be different from saccades made in free-viewing conditions.

Our model exhibited several emergent behaviors that matched previous reports of human failures in change blindness tasks. First, the model’s success rates improved systematically as the blank interval was reduced (Fig 5A); this trend mimics previously-reported patterns in human change blindness tasks, in which shortening the interval of the intervening blank improves change detection performance [4]. Second, the model’s success rates improved systematically with reducing the evidence decay rate across the blank (Fig 5B). In other words, retaining information across the blank was crucial to change detection success. This result may have intriguing links with neuroscience literature, which has shown that facilitating neural activity in oculomotor brain regions (e.g. the superior colliculus) during the blank epoch counters change blindness [49]. Third, the model’s ability to detect changes improved when its internal prior (expected firing rate difference) aligned with the actual firing rate difference at the change region (Fig 5C). This result may explain findings from a previous study [50], which found that familiarity with the context of the visual stimulus was predictive of change detection success.

Finally, the model provided mechanistic insights about key trends observed in our own experiments, specifically, a critical dependence of success rates on mean fixation durations and the variance of saccade amplitudes (Fig 5D, 5E and 5F). Fixation durations in the model varied systematically either with altering the prior odds ratio or the decay rate of the no-change bound. Note that the prior odds ratio corresponds to an individual’s prior belief about the odds of change versus no change. The lower this ratio, the higher the degree of belief in no change, and the sooner the individual seeks to break each fixation. In our model, this was achieved by having the prior ratio bias evidence accumulation toward the no-change (negative) bound. Similarly, faster decay of the no-change bound, possibly reflecting a stronger “urgency” to break fixations, resulted in faster bound crossing and, therefore, shorter fixations. Regardless of the mechanism, shorter fixation durations resulted in impaired change detection performance (Fig 5D), providing a putative mechanistic link between fixation durations and change detection success in the experimental data. In addition, saccade amplitude variance varied systematically with changes in the foveal magnification factor (FMF). With higher foveal magnification the model is, perhaps, able to better distinguish features in regions proximal to the fixation location, and saccade to them, thereby resulting in overall shorter saccades, and lower variance. Moreover, higher foveal magnification enables analyzing the region of change with higher resolution, thereby leading to better change detection performance. As a consequence, performance degraded systematically with increased saccade amplitude variance (Fig 5F), the common underlying cause for each being the change in the foveal magnification factor. This provides a plausible mechanism for higher variance of saccade amplitudes in “poor” performers.

We implemented three control models in this study. The first control model, in which evidence decayed rapidly (γ = 1), mimics the scenario of rapidly decaying short-term memory; this model signals the change based on threshold crossing of the instantaneous, rather than the accumulated, posterior odds. In the second control model, we employed an alternative stopping rule: a rapid, large change in the posterior odds ratio sufficed to signal the change. Such a “temporally local” stopping rule obviates the need for evidence accumulation (short-term memory) and may be implemented by neural circuits that act as temporal change detectors (differentiators). The third control model mimicked a random saccade strategy, with a high temperature parameter (T = 10⁴) of the softmax function. This model establishes baseline (chance) levels of success, if an observer were to ignore model evidence and saccade randomly to different locations on each image, and arrive at the change region “by chance”. Each of these control models fell short of our SPRT model in terms of their match to human performance.

Nonetheless, our SPRT model can be improved in a few ways. First, saliency maps in our model were typically computed with low-level features (e.g. Fig 5; the frequency tuned salient region method). Incorporating more advanced saliency computations (e.g. semantic saliency) [51] into the saliency map could render the model more biologically realistic. Second, although our neurally-constrained model provides several biologically plausible mechanisms for explaining our experimental observations, it does not identify which of these mechanisms is actually at play in human subjects. To achieve this objective, model parameters may be fit with maximum likelihood estimation [52] or Bayesian methods for sparse data (e.g. hierarchical Bayesian modelling) [53]. Yet such fitting is challenging because the model, in its current form, is not identifiable: multiple parameters in the model (e.g. prior ratios or decay of the no-change threshold) produce similar effects on specific gaze parameters (e.g. fixation durations, Fig 5D). Future extensions to the model, for example, by measuring and modeling more gaze metrics for constraining the model, may help overcome this challenge. Such model-fitting will find key applications for identifying latent factors contributing to inter-individual differences in change detection performance.

Our simulations have interesting parallels with recent literature. With a battery of cognitive tasks, Andermane et al. [35] identified two factors that were critical for predicting change detection success: “visual stability”, the ability to form stable and robust visual representations, and “visual ability”, the ability to robustly maintain information in visual short-term memory. Other studies have identified associated psychophysical factors, including attentional breadth [54] and visual memory [55], as being predictive of change detection success. We propose that (higher) fixation durations and (lower) variability of saccade amplitudes may both index a (higher) “visual stability” factor, reflecting the ability to form more stable visual representations. In contrast, the temporal decay factor (Table 1, γ) and spatial decay scale (Table 1, β) may correspond to visual memory and attentional breadth, respectively; each could comprise key components of the “visual ability” factor, indexing robust maintenance of information in short-term memory. Our model provides a mechanistic test-bed to systematically explore the contribution of each of these factors and their constituent components to change detection success in change blindness experiments.

A mechanistic understanding of the behavioral and neural processes underlying change blindness will have important real-world implications: from safe driving [56] to reliably verifying eyewitness testimony [57]. Moreover, emerging evidence suggests that change blindness (or a lack thereof) may be a diagnostic marker of neurodevelopmental disorders, like autism [58–60]. Our model characterizes gaze-linked mechanisms of change blindness in healthy individuals and may enable identifying the mechanistic bases of change detection deficits in individuals with neurocognitive disorders.

Materials and methods

Ethics statement

Informed written consent was obtained from all participants. The study was approved by the Institutional Human Ethics Committee (IHEC) at the Indian Institute of Science (IISc), Bangalore.

Experimental protocol

We collected data from n = 44 participants (20 females; age range 18–55 yrs) with normal or corrected-to-normal vision and no known impairments of color vision. Of these, data from 4 participants, who were unable to complete the task due to fatigue or physical discomfort, were excluded. Data from one additional participant was irretrievably lost due to logistical errors. Thus, we analyzed data from 39 participants (18 females).

Images were displayed on a 19-inch Dell monitor at 1024x768 resolution. Subjects were seated, with their chin and forehead resting on a chin rest, with eyes positioned roughly 60 cm from the screen. Each trial began when subjects continually fixated on a central cross for 3 seconds. This was followed by presentation of the change image pair sequence for 60 s: each frame (image and blank) was 250 ms in duration. The trial persisted until the subjects indicated the change by fixating at the change region for at least 3 seconds continuously (“hit”), or until the maximum trial duration (60 s) elapsed without the subjects detecting the change (“miss”). An online algorithm tracked, in real-time, the location of the subjects’ gaze and signaled the completion of a trial based on whether they were able to fixate stably at the location of change. Each subject was tested on either 26 or 27 image pairs, of which 20 pairs differed in a key detail (available in Data Availability link); we call these the “change” image pairs. The remaining image pairs (7 pairs for 30 subjects and 6 pairs for 9 subjects) contained no changes (“catch” image pairs); data from these image pairs were not analyzed for this study (except for computing false alarm rates, see next). To avoid biases in performance, the ratio of “change” to “catch” trials was not indicated to subjects beforehand, but subjects were made aware of the possibility of catch trials in the experiment. We employed a custom set of images, rather than a standardized set (e.g. [4]), due to the possibility that some subjects might have been familiar with change images used in earlier studies.

Overall, the proportion of false-alarms–proportion of fixations with durations longer than 3s in catch trials–was negligible (~0.06%, 17/32248 fixations across 264 catch trials) in this experiment. To further confirm if the subjects indeed detected the change on hit trials, a post-session interview was conducted in which each subject was presented with one of each pair of change images in sequence and asked to indicate the location of perceived change. The post-session interview indicated that about 5.7% (31/542) of hit trials were not recorded as such; in these cases, the total trial duration was 60 s indicating that even though the subject fixated on the change region, the online algorithm failed to register the trial as a hit. In addition, 2.9% (7/238) of miss trials, in which the subjects were unable to detect the location of change in the post-session interview, ended before the full trial duration (60 s) had elapsed; in these cases, we expect that subjects triggered the termination of the trial by accidentally fixating for a prolonged duration near the change. We repeated the analyses excluding these 4.8% (38/780) trials and observed results closely similar to those reported in the text. Finally, eye-tracking data from 0.64% (5/780) trials were corrupted and, therefore, excluded from all analyses.

Subjects’ gaze was tracked throughout each trial with an iViewX Hi-speed eye-tracker (SensoMotoric Instruments Inc.) with a sampling rate of 500 Hz. The eye-tracker was calibrated for each subject before the start of the experimental session. Various gaze parameters, including saccade amplitude, saccade locations, fixation locations, fixation durations, pupil size, saccade peak speed and saccade average speed, were recorded binocularly on each trial, and stored for offline analysis. Because human gaze is known to be highly coordinated across both eyes, only monocular gaze data was used for these analyses. Each session lasted for approximately 45 minutes, including time for instruction, eyetracker calibration and behavioral testing.

SVM classification and feature selection based on gaze metrics

We asked if subjects’ gaze strategies would be predictive of their success with detecting changes. To answer this question, as a first step, we tested if we could classify good versus poor performers (Fig 1C) based on their gaze metrics alone. As features for the classification analysis, we computed the mean and variance of the following four gaze metrics recorded by the eyetracker: saccade amplitude, fixation duration, saccade duration and saccade peak speed. We did not include two other gaze metrics acquired from the eyetracker, saccade average speed and pupil diameter, in these analyses. Saccade average speed was highly correlated with saccade peak speed across fixations (r = 0.93, p<0.001), and was therefore a redundant feature. In addition, while pupil size is a useful measure of arousal [61], it is often difficult to measure reliably, because slight physical movements of the eye or head may cause apparent (spurious) changes in pupil size that can be confounded with real size changes. Before analysis, feature outliers were removed based on Matlab’s boxplot function, which considers values as outliers if they are greater than q3 + w × (q3 − q1) or less than q1 − w × (q3 − q1), where q1 and q3 are the 25th and 75th percentiles of the data, respectively; setting w = 1.5 provides approximately 99.3% coverage for normally distributed data. To avoid biases in estimating gaze metrics for good versus poor performers, the last fixation at the change location (a minimum of 3 seconds of data) was removed from the eyetracking data for all “hit” trials before further analyses.
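The outlier criterion described above can be sketched as follows. This is a minimal illustration in Python, not the Matlab code used in the study; the function name and the example fixation durations are hypothetical, and NumPy's default percentile interpolation may differ slightly from the quartile computation in Matlab's boxplot function.

```python
import numpy as np

def remove_boxplot_outliers(values, w=1.5):
    """Drop values outside [q1 - w*(q3 - q1), q3 + w*(q3 - q1)]."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])  # 25th and 75th percentiles
    iqr = q3 - q1
    keep = (values >= q1 - w * iqr) & (values <= q3 + w * iqr)
    return values[keep]

# Made-up fixation durations (ms); the last value is an obvious outlier.
fixation_durations_ms = np.array([210, 240, 250, 260, 275, 290, 310, 1900])
cleaned = remove_boxplot_outliers(fixation_durations_ms)
```

In this example the 1900 ms value lies above the upper fence and is discarded, while the remaining seven values are retained.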

Following outlier removal, these eight measures were employed as features in a classifier based on support vector machines (SVM) to classify good from poor performers (fitcsvm function in Matlab). The SVM employed a polynomial kernel, and other hyperparameters were set using hyperparameter optimization (OptimizeHyperParameters option in Matlab). Features from each image were included as independent data points in feature space. Classifier performance was assessed with 5-fold cross validation, and quantified with the area-under-the-curve (AUC [62]). For these analyses, we included gaze data from all but one image (Image #20, see Data Availability link), in which every subject detected the change correctly. Significance levels (p-values) of classification accuracies were assessed with permutation testing, by randomly shuffling the labels of good and poor performers across subjects 100 times and estimating a null distribution of classification accuracies; significance values correspond to the proportion of classification accuracies in the null distribution that were greater than the actual classification accuracy values. A similar procedure was used for SVM classification of trials into hits and misses except that, in this case, class labels were based on whether the trial was a hit or a miss, and permutation testing was performed by shuffling hit or miss labels across trials. Because we employed summary statistics (e.g. mean, variance) of the gaze metrics in these feature selection analyses, we tested for unimodality of the logarithm of the respective gaze metric distributions with Hartigan’s dip test for unimodality [63].
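The permutation-testing logic can be illustrated with a short sketch. To keep the example self-contained, a toy scoring function (thresholding a single feature at the midpoint between class means, without cross validation) stands in for the cross-validated SVM; this substitution, the function names and the synthetic data are assumptions made purely for illustration.

```python
import numpy as np

def midpoint_accuracy(feature, labels):
    """Toy stand-in for a classifier score: threshold one feature at the
    midpoint between the two class means and return the accuracy."""
    mean_pos = feature[labels == 1].mean()
    mean_neg = feature[labels == 0].mean()
    threshold = 0.5 * (mean_pos + mean_neg)
    predicted_pos = (feature > threshold) if mean_pos >= mean_neg else (feature < threshold)
    return float(np.mean(predicted_pos == (labels == 1)))

def permutation_test(score_fn, feature, labels, n_permutations=100, seed=0):
    """Build a null distribution of scores by shuffling the class labels."""
    rng = np.random.default_rng(seed)
    observed = score_fn(feature, labels)
    null_scores = np.array([score_fn(feature, rng.permutation(labels))
                            for _ in range(n_permutations)])
    # p-value: proportion of null scores greater than the observed score
    p_value = float(np.mean(null_scores > observed))
    return observed, null_scores, p_value

# Synthetic, well-separated groups (e.g. 20 "poor" and 20 "good" performers).
feature = np.concatenate([np.linspace(0.0, 1.0, 20), np.linspace(3.0, 4.0, 20)])
labels = np.concatenate([np.zeros(20, dtype=int), np.ones(20, dtype=int)])
observed, null_scores, p_value = permutation_test(midpoint_accuracy, feature, labels)
```

With 100 permutations, the smallest non-zero p-value that can be resolved is 0.01; a proportion of zero is therefore best reported as p < 0.01.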

Next, we sought to identify gaze metrics that best distinguished good from poor performers. For this we employed four standard metrics, namely Fisher score [18], AUC change [19], Information Gain [20] and bag of decision trees [21], which quantify the relative importance of each feature for distinguishing the two groups of subjects (Fig 1D). A detailed description of these metrics is provided next.

Feature selection metrics

(i) Fisher score computes the “quality” of features based on their extent of overlap across classes. In a two-class scenario, Fisher Score for the jth feature is defined as,

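$$F(j) = \frac{\left(\bar{x}_j^{(+)} - \bar{x}_j\right)^2 + \left(\bar{x}_j^{(-)} - \bar{x}_j\right)^2}{\frac{1}{n^{(+)} - 1}\sum_{i=1}^{n^{(+)}}\left(x_{i,j}^{(+)} - \bar{x}_j^{(+)}\right)^2 + \frac{1}{n^{(-)} - 1}\sum_{i=1}^{n^{(-)}}\left(x_{i,j}^{(-)} - \bar{x}_j^{(-)}\right)^2} \tag{4}$$

where $\bar{x}_j$ is the average value of the jth feature. Similarly, $\bar{x}_j^{(+)}$ and $\bar{x}_j^{(-)}$ are the averages of the jth feature for the positive and negative category, respectively. Here, $x_{i,j}^{(+)}$ and $x_{i,j}^{(-)}$ denote the jth feature of the ith sample for each category, with $n^{(+)}$ and $n^{(-)}$ being the number of positive and negative instances, respectively. A more discriminative feature has a higher Fisher score.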
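As a worked illustration, the sketch below computes a Fisher score for a single feature, assuming the common two-class form of the score: the squared deviations of the two class means from the overall mean, divided by the sum of the within-class sample variances. The function name and the toy data are hypothetical.

```python
import numpy as np

def fisher_score(feature, labels):
    """Two-class Fisher score of one feature (assumed standard form)."""
    feature = np.asarray(feature, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = feature[labels], feature[~labels]
    overall_mean = feature.mean()
    # Numerator: separation of each class mean from the overall mean
    between = (pos.mean() - overall_mean) ** 2 + (neg.mean() - overall_mean) ** 2
    # Denominator: sum of within-class sample variances (n - 1 normalization)
    within = pos.var(ddof=1) + neg.var(ddof=1)
    return between / within

labels = np.array([1, 1, 1, 0, 0, 0])
separated = fisher_score([4, 5, 6, 1, 2, 3], labels)    # classes do not overlap
overlapping = fisher_score([1, 3, 5, 2, 4, 6], labels)  # classes interleave
```

The feature whose class distributions do not overlap receives a much higher score (2.25) than the feature whose class distributions interleave (0.0625).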

(ii) AUC change describes the change in area-under-the-curve (AUC) with removing each feature in turn. The AUC (A) is the area under the ROC curve, plotted by varying the discrimination threshold and plotting the True Positive Rate (TPR) as a function of the False Positive Rate (FPR).

$$A = \int_{0}^{1} \mathrm{TPR} \; d(\mathrm{FPR}) \tag{5}$$

A more discriminative feature’s absence produces a higher deterioration in classification accuracy.
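A minimal sketch of the AUC-change idea is given below. The AUC is obtained by sweeping the discrimination threshold and integrating the resulting ROC curve with the trapezoidal rule. For brevity, a simple sum of feature values stands in for the classifier's decision values; this stand-in, the function names and the toy data are assumptions and do not correspond to the cross-validated SVM used in the study.

```python
import numpy as np

def roc_auc(scores, labels):
    """Area under the ROC curve via a threshold sweep and trapezoidal rule."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    # Thresholds from +inf (nothing classified positive) down to the minimum score
    thresholds = np.concatenate(([np.inf], np.sort(np.unique(scores))[::-1]))
    tpr = np.array([(scores[labels] >= t).mean() for t in thresholds])
    fpr = np.array([(scores[~labels] >= t).mean() for t in thresholds])
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

def auc_change(features, labels, score_fn):
    """AUC with all features minus AUC after removing each feature in turn."""
    features = np.asarray(features, dtype=float)
    full_auc = roc_auc(score_fn(features), labels)
    return np.array([
        full_auc - roc_auc(score_fn(np.delete(features, j, axis=1)), labels)
        for j in range(features.shape[1])
    ])

# Toy data: column 0 separates the classes, column 1 is uninformative.
features = np.array([[3, 0], [4, 1], [5, 0], [0, 1], [1, 0], [2, 1]])
labels = np.array([1, 1, 1, 0, 0, 0])
changes = auc_change(features, labels, score_fn=lambda f: f.sum(axis=1))
```

Removing the informative column produces a large drop in AUC, whereas removing the uninformative column does not reduce it.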

(iii) Information gain is a classifier-independent measure of the change in entropy upon partitioning the data based on each feature. A more discriminative feature has a higher information gain. Given binary class labels Y for a feature X, the entropy of Y (E(Y)) is defined as,

$$E(Y) = -p_{+} \log_2 p_{+} - p_{-} \log_2 p_{-} \tag{6}$$

where $p_{+}$ is the fraction of positive class labels and $p_{-}$ is the fraction of negative class labels.

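The Information Gain for a feature X, given the class labels Y, is given by,

$$IG(Y \mid X) = \max_{i} \left[ E(Y) - \frac{n_{X > \mathrm{div}(i)}}{n} E\left(Y_{X > \mathrm{div}(i)}\right) - \frac{n_{X < \mathrm{div}(i)}}{n} E\left(Y_{X < \mathrm{div}(i)}\right) \right], \qquad \mathrm{div}(i) = \frac{X_{\mathrm{sorted}}(i) + X_{\mathrm{sorted}}(i+1)}{2} \tag{7}$$

where $n_{X > \mathrm{div}(i)}$ and $n_{X < \mathrm{div}(i)}$ are the numbers of entries of X greater than and less than $\mathrm{div}(i)$, respectively, n is the total number of entries, $Y_{X > \mathrm{div}(i)}$ and $Y_{X < \mathrm{div}(i)}$ are the entries of Y for which the corresponding entries of X are greater than and less than $\mathrm{div}(i)$, respectively, and $X_{\mathrm{sorted}}$ indicates the feature vector with its values sorted in ascending order. A more discriminative feature has a higher Information Gain.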
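The sketch below illustrates one way to compute such an information gain for a continuous feature. It assumes that candidate division points are the midpoints between consecutive sorted feature values, that the gain is maximized over these division points, and that entropy is measured in bits; these choices, along with the function names and toy data, are assumptions for illustration.

```python
import numpy as np

def binary_entropy(labels):
    """Entropy (in bits) of a vector of binary class labels."""
    labels = np.asarray(labels, dtype=bool)
    if labels.size == 0:
        return 0.0
    p_pos = labels.mean()
    probs = np.array([p_pos, 1.0 - p_pos])
    probs = probs[probs > 0]  # treat 0 * log(0) as 0
    return float(-(probs * np.log2(probs)).sum())

def information_gain(feature, labels):
    """Largest entropy reduction over all candidate division points."""
    feature = np.asarray(feature, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    x_sorted = np.unique(feature)                    # ascending, no duplicates
    divisions = (x_sorted[:-1] + x_sorted[1:]) / 2.0  # midpoints between values
    total_entropy = binary_entropy(labels)
    gains = []
    for div in divisions:
        above = feature > div
        # Entropy after the split, weighted by the fraction of entries on each side
        split_entropy = (above.mean() * binary_entropy(labels[above])
                         + (~above).mean() * binary_entropy(labels[~above]))
        gains.append(total_entropy - split_entropy)
    return max(gains) if gains else 0.0

gain_informative = information_gain([1, 2, 3, 4, 5, 6], [0, 0, 0, 1, 1, 1])
gain_weak = information_gain([1, 2, 3, 4], [0, 1, 0, 1])
```

A feature that perfectly separates two balanced classes attains the maximum gain of 1 bit, whereas a feature whose values interleave across classes yields a much smaller gain.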

(iv) Out-of-bag error based on a bag of decision trees is an approach for feature selection using bootstrap aggregation on an ensemble of decision trees. Rather than using a single decision tree, this approach avoids overfitting by growing an ensemble of trees on independent bootstrap samples drawn from the data. The most important features are selected by out-of-bag estimates of feature importance in the bagged decision trees (OOB error). We used the TreeBagger function, as implemented in Matlab, with saccade and fixation features as inputs to the model, which classified whether the data belonged to a good or a poor performer. The number of trees was set to 6, with all other hyperparameters set to their default values.

Analysis of scan paths and fixated spatial features

We compared scan paths and low-level fixated (spatial) features across good and poor performers. To simplify comparing scan paths across participants, we encoded each scan path into a finite-length string. As a first step, fixation maps were generated to observe where the subjects fixated the most. Very few fixations occurred in regions that were object-sparse (e.g. sky) or that had uniform color or texture, like the walls of a building (Fig 2A). In contrast, many more fixations occurred around crowded regions with more intricate details. For each image, fixation points of all subjects were clustered, and each cluster was assigned a character label. The entire scan path, comprising a sequence of fixations, was then encoded as a string of cluster labels.
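The string encoding of a scan path can be sketched as follows. The example assumes that cluster centers are already available and assigns each fixation to its nearest center; the function name, the pixel coordinates and the use of uppercase letters as labels (which limits the sketch to 26 clusters) are hypothetical choices for illustration.

```python
import string
import numpy as np

def encode_scan_path(fixations, cluster_centers):
    """Encode a sequence of fixations as a string of cluster labels."""
    fixations = np.asarray(fixations, dtype=float)
    centers = np.asarray(cluster_centers, dtype=float)
    # Pairwise distances: one row per fixation, one column per cluster center
    distances = np.linalg.norm(fixations[:, None, :] - centers[None, :, :], axis=2)
    nearest = distances.argmin(axis=1)
    return "".join(string.ascii_uppercase[k] for k in nearest)

# Made-up cluster centers and one scan path (pixel coordinates).
cluster_centers = [(100, 100), (500, 400), (900, 700)]
scan_path = [(110, 95), (480, 420), (505, 390), (890, 710), (120, 130)]
encoded = encode_scan_path(scan_path, cluster_centers)
```

Here the scan path is encoded as "ABBCA". Once scan paths are represented as strings, they can be compared across participants with standard string comparison methods.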

Before clustering fixation points, we sought to minimize the contributions of regions with very low fixation density. To quantify fixation density, we adopted the following approach. Let $x_i$ be a fixation point and let $\bar{d}(x_i)$ denote the average Euclidean distance of $x_i$ from the set of other fixation points that lie within a radius r of it. Let $\rho(x_i)$ denote the inverse of $\bar{d}(x_i)$. Next, we distributed all the fixation points uniformly on the image; let U denote this set. We found the point $y_i$ in U that was closest (in Euclidean distance) to $x_i$, and computed $\rho(y_i)$ as before. Then, the fixation density at the fixation point $x_i$ was defined as $\rho(x_i)/\rho(y_i)$. Thus, all points with density less than 1 indicate regions that were sampled less densely than they would be under a uniform sampling strategy. These fixation points with very low fixation density were grouped into a single cluster, since they occurred in regions that were explored relatively rarely. For these analyses r was set to 40 pixels, although the results were robust to variations of this parameter. The remaining fixation points were clustered using the k-means clustering algorithm.
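The relative fixation density described above can be sketched as follows. Several details are assumptions made for illustration: the uniform set U is realized as a regular grid with the same number of points as there are fixations, a point with no neighbors within the radius is assigned an inverse distance of zero, and all function names and coordinates are hypothetical.

```python
import numpy as np

def inverse_mean_neighbor_distance(index, points, radius):
    """Inverse of the mean distance from points[index] to its neighbors within radius."""
    distances = np.linalg.norm(points - points[index], axis=1)
    distances = np.delete(distances, index)  # exclude the point itself
    neighbors = distances[distances <= radius]
    if neighbors.size == 0 or neighbors.mean() == 0:
        return 0.0  # assumed convention: no neighbors means zero density
    return 1.0 / neighbors.mean()

def uniform_grid(n_points, width, height):
    """Roughly n_points placed on a regular grid covering the image."""
    n_cols = int(np.ceil(np.sqrt(n_points * width / height)))
    n_rows = int(np.ceil(n_points / n_cols))
    xs = (np.arange(n_cols) + 0.5) * width / n_cols
    ys = (np.arange(n_rows) + 0.5) * height / n_rows
    grid_x, grid_y = np.meshgrid(xs, ys)
    return np.column_stack([grid_x.ravel(), grid_y.ravel()])

def fixation_density(fixations, width, height, radius=40.0):
    """Density at each fixation relative to a uniform sampling of the image."""
    fixations = np.asarray(fixations, dtype=float)
    grid = uniform_grid(len(fixations), width, height)
    density = np.empty(len(fixations))
    for i, point in enumerate(fixations):
        rho_x = inverse_mean_neighbor_distance(i, fixations, radius)
        nearest = np.linalg.norm(grid - point, axis=1).argmin()  # closest point in U
        rho_y = inverse_mean_neighbor_distance(nearest, grid, radius)
        density[i] = rho_x / rho_y if rho_y > 0 else np.nan
    return density

# Toy 200 x 200 image: 90 tightly packed fixations and 10 isolated ones.
cx, cy = np.meshgrid(np.arange(41, 60, 2), np.arange(42, 59, 2))
packed = np.column_stack([cx.ravel(), cy.ravel()])
sx, sy = np.meshgrid([120, 180], [10, 55, 100, 145, 190])
isolated = np.column_stack([sx.ravel(), sy.ravel()])
density = fixation_density(np.vstack([packed, isolated]), width=200, height=200)
```

In this toy example the tightly packed fixations have densities greater than 1, whereas the isolated fixations have densities below 1 and would therefore be grouped into the single low-density cluster.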