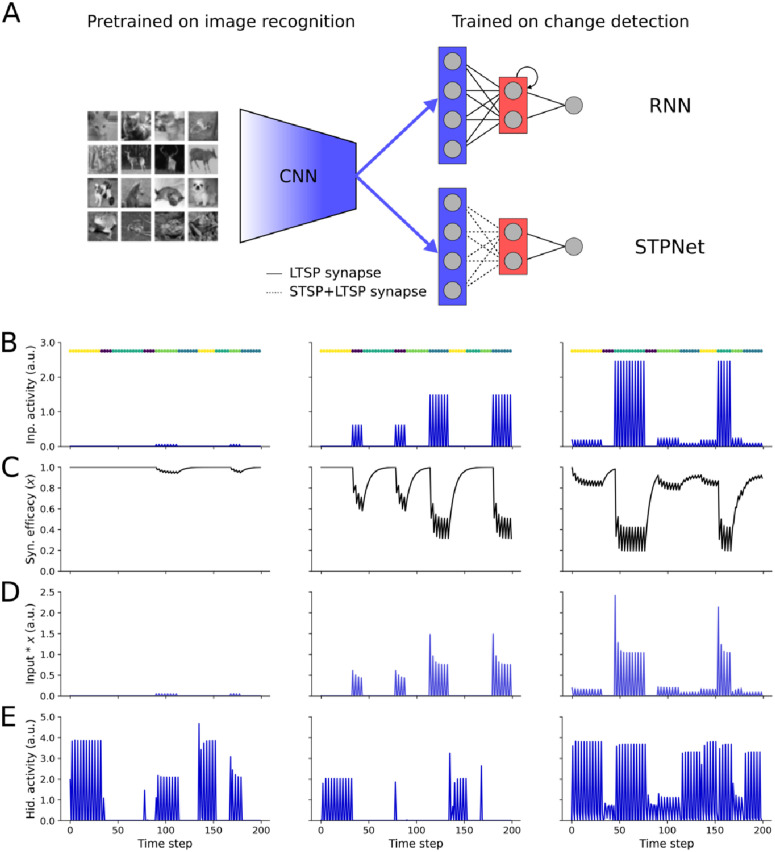

Fig 2. Overview of the models tested.

(A) Image features are derived from the last fully-connected layer of a convolutional neural network (CNN) pre-trained on a grayscale version of the CIFAR-10 image recognition task. This encoder network maps an input image to a lower-dimensional feature space, which serves as input to the models shown to the right. Two models of short-term memory were tested, based on either persistent neural activity (RNN, top) or short-term synaptic plasticity (STPNet, bottom). Each model consisted of three layers, roughly corresponding to sensory, association, and motor areas. Model weights were trained with backpropagation (LTSP synapses, solid lines); in STPNet, the input synapses were additionally modulated by short-term synaptic depression (STSP synapses, dotted lines). (B) Input activity of three example units during the change detection task. Images were presented for 250 ms (one time step), followed by a 500 ms gray screen (two time steps). The left unit responds weakly to only one image. The center unit responds to two images, one more strongly than the other. The right unit responds to four images in a graded fashion. Image presentation times are color-coded and shown above each plot. (C) Input-dependent changes in synaptic efficacy (depression) for the units from STPNet shown in panel B. (D) Input activity modulated by short-term depression for the units from STPNet shown in panel B. (E) Activity of three example hidden units from the recurrent neural network model, which show more persistent activity. The original images in panel A are reproduced from the CIFAR-10 dataset [22].
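As a rough illustration of the quantities plotted in panels B to D, the sketch below builds an input trace using the timing stated in the caption (one 250 ms time step per image, two time steps of gray screen) and passes it through a simple depression rule. The update rule, the function names `build_drive` and `depress`, and the parameters `tau_steps` and `use` are assumptions chosen for illustration; they are not taken from the figure and may differ from the rule used in STPNet.

```python
# Illustrative sketch only. The depression rule and its parameter values
# are assumptions, not the model's actual equations.

IMAGE_STEPS = 1  # 250 ms image presentation = one time step (from the caption)
GRAY_STEPS = 2   # 500 ms gray screen = two time steps (from the caption)


def build_drive(responses):
    """Expand per-image unit responses into a per-time-step input trace."""
    drive = []
    for r in responses:
        drive.extend([r] * IMAGE_STEPS)    # image on: unit is driven
        drive.extend([0.0] * GRAY_STEPS)   # gray screen: no drive
    return drive


def depress(drive, tau_steps=8.0, use=0.5):
    """Apply a hypothetical short-term depression rule to an input trace.

    x is the synaptic efficacy in [0, 1]. It recovers toward 1 with time
    constant tau_steps and is depleted in proportion to the input.
    """
    x = 1.0
    efficacy, modulated = [], []
    for a in drive:
        efficacy.append(x)        # analogous to panel C
        modulated.append(a * x)   # analogous to panel D
        x = x + (1.0 - x) / tau_steps - use * a * x
        x = min(1.0, max(0.0, x))  # keep efficacy within bounds
    return efficacy, modulated


# A unit that responds equally to the same image shown four times in a row.
drive = build_drive([1.0, 1.0, 1.0, 1.0])
efficacy, modulated = depress(drive)
print(modulated[::IMAGE_STEPS + GRAY_STEPS])  # response at each image onset
```

Under these assumptions, the modulated response to a repeated image shrinks across presentations because efficacy does not fully recover during the gray screen, so a novel image arriving through undepressed synapses would produce a comparatively larger response.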