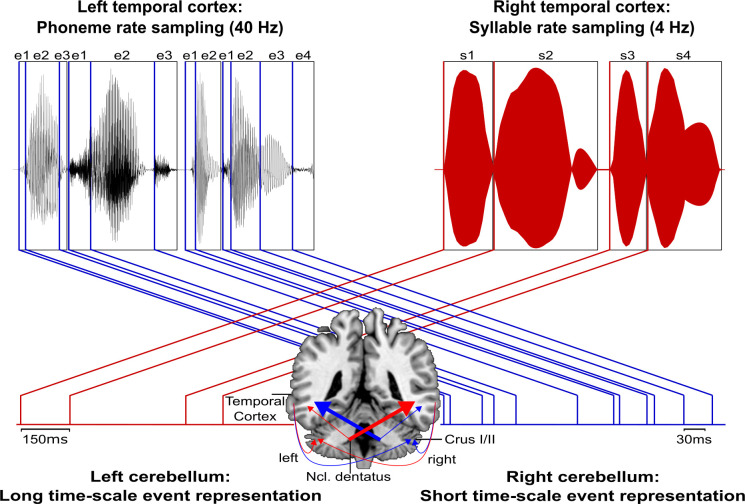

Figure 6. Schematic conceptualization of temporo-cerebellar interaction for internal model construction in audition.

Differential temporo-cerebellar interaction model depicting hypothesized connectivity between areas in the temporal lobe and cerebellum that may underlie sound processing at different timescales. The left and right cerebellum contribute to the encoding of event boundaries across long (red) and short (blue) timescales, respectively (Callan et al., 2007). These event representations are extracted from salient modulations of sound properties, that is, changes in the speech envelope (fluctuations in overall amplitude, red) corresponding to syllables (s1–s4) and in the fine structure (formant frequency transitions, blue) corresponding to phonemes (e1–e4) (Rosen, 1992; Weise et al., 2012). Reciprocal ipsi- and cross-lateral temporo-cerebellar interactions between temporal cortex, crura I/II, and dentate nuclei yield unitary, temporally structured stimulus representations conveyed by temporo-ponto-cerebellar and cerebello-rubro-thalamo-temporal projections (arrows). The resulting internal representation of the temporal structure of sound sequences, for example, speech, fits the detailed cortical representation of the auditory input to relevant points in time to guide the segmentation of a continuous input signal (waveform) into smaller perceptual units (boxes). This segmentation is further guided through weighting of information (symbolized by arrow thickness) towards the short and long timescales of sound processing in the left and right temporal cortex, respectively. This process allows distinctive sound features (e.g., word-initial plosives /d/ (e1 in s1), /t/ (e1 in s2), and /b/ (e1 in s3) varying in voicing or place of articulation) to be optimally integrated at the time of their occurrence.