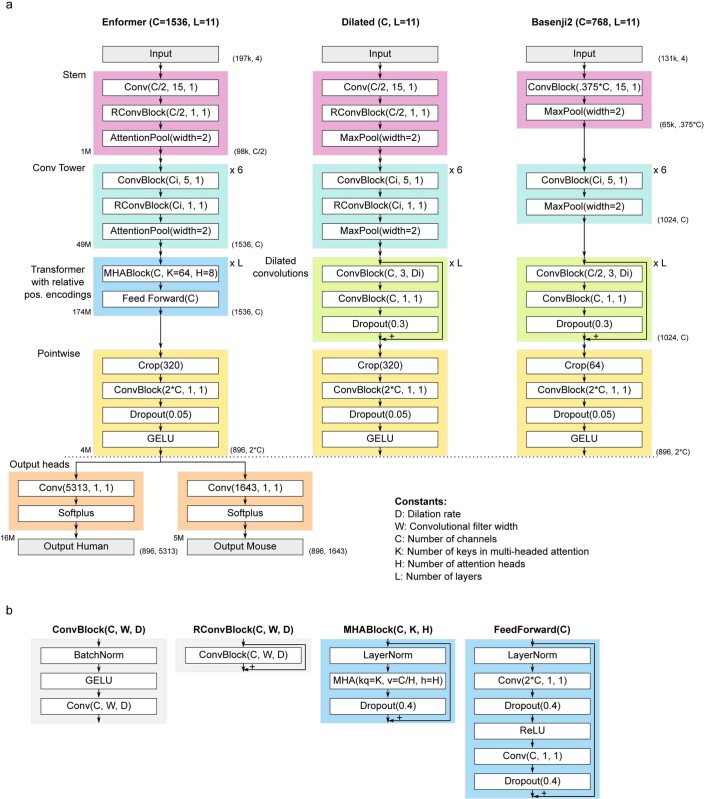

Extended Data Fig. 1. Enformer model architecture and comparison to Basenji2.

a) From left to right: the Enformer model architecture; the ‘dilated’ architecture used in ablation studies, obtained by replacing the transformer part of the model with dilated convolutions; and Basenji2 (ref. 2). Output shapes (without batch dimensions) are shown as tuples on the right side of the blocks. The number of trainable parameters for the different parts of Enformer is shown on the left side of the blocks. The two main hyperparameters of the model are the number of transformer/dilated layers, L, and the number of channels, C. All models have the same two output heads, as shown at the bottom of the Enformer diagram. The number of channels in the convolutional tower, C_i, was increased by a constant multiplicative factor over 6 layers, starting from C/2 (or 0.375 × C for Basenji2) and reaching C. For dilated layers, the dilation rate D_i was increased by a factor of 1.5 at every layer (rounded to the nearest integer). b) Definition of the different network blocks in terms of basic neural network layers. MHA denotes multi-headed attention using relative positional encodings, with kq denoting the key/query size, v the value size and h the number of heads. The number of relative positional basis functions is equal to the value size v.
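The two schedules described in panel a can be sketched in a few lines of Python. This is an illustrative reading of the caption, not the authors' code: the function names are hypothetical, and several details are assumptions that the caption does not state. These are that the first tower layer has exactly C/2 channels and the sixth has C, that channel counts are not snapped to a hardware-friendly multiple, that the first dilation rate is 1, and that the unrounded rate is carried from layer to layer with rounding applied only when a rate is used.

```python
import math


def round_half_up(x: float) -> int:
    """Round to the nearest integer, with ties going up."""
    return int(math.floor(x + 0.5))


def channel_schedule(c: int, num_layers: int = 6,
                     start_fraction: float = 0.5) -> list[int]:
    """Channels per convolutional-tower layer (hypothetical helper).

    Grows geometrically from start_fraction * c to c. Assumes the
    endpoints are hit exactly, so the factor is applied
    num_layers - 1 times.
    """
    start = c * start_fraction
    factor = (c / start) ** (1.0 / (num_layers - 1))
    return [round_half_up(start * factor ** i) for i in range(num_layers)]


def dilation_rates(num_layers: int, start: float = 1.0,
                   mult: float = 1.5) -> list[int]:
    """Dilation rate per dilated layer (hypothetical helper).

    Assumes the first rate is `start` and that the unrounded rate is
    multiplied by `mult` each layer; only the emitted value is rounded.
    """
    rates = []
    rate = start
    for _ in range(num_layers):
        rates.append(round_half_up(rate))
        rate *= mult
    return rates
```

Under these assumptions, `dilation_rates(6)` returns `[1, 2, 2, 3, 5, 8]`, and `channel_schedule(C, start_fraction=0.375)` gives the Basenji2 variant of the tower. If the rate were instead rounded before being multiplied, the sequence would differ from the third layer onward, so the rounding convention matters when reproducing the figure.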