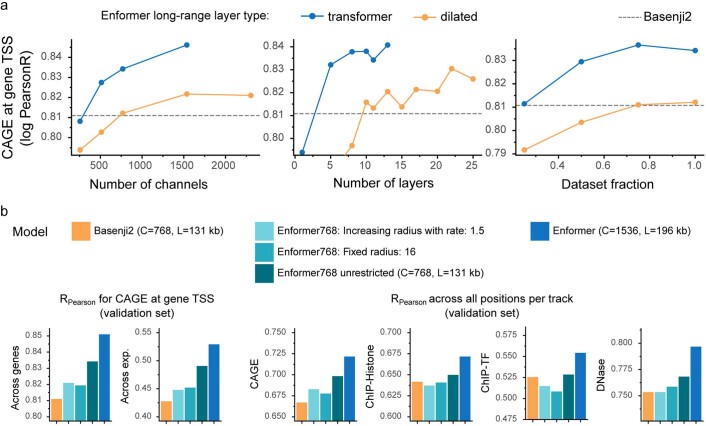

Extended Data Fig. 5. Comparison to dilated convolutions.

a) Enformer with the original transformer layers (Extended Data Fig. 1a, left) performs better than Enformer with dilated convolutions (Extended Data Fig. 1a, center) across different model sizes and training dataset subsets, as measured by CAGE gene expression correlation in the validation set (same metric as in Fig. 1b, across genes). At 15 dilated layers, the model starts to reach outside the input sequence range (receptive field of 224,263 bp). Note that all evaluations here are limited by TPU memory, which prevents the use of more layers or channels. b) Performance of Basenji2 (left) and Enformer (right) compared with Enformer variants restricted to the same receptive field as Basenji2 (44 kb), either by using a fixed attention radius of 16 across all layers, so that each query can attend to positions at most 16 away (Enformer 768: Fixed radius: 16), or by exponentially increasing the receptive field across layers in the same way as the dilation rate in Basenji2. Enformer 768 was trained with the same number of channels (768) and the same 131 kb input sequences as Basenji2, whereas Enformer uses twice as many channels and 1.5 times longer sequences. The same evaluation metrics are shown as in Fig. 1.
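As a rough illustration of how a per-layer attention radius translates into an overall receptive field, the following minimal sketch sums the radii over stacked attention layers. The layer count (11), the 128 bp bin size, and all function and variable names are assumptions made for this example and are not stated in the caption; the sketch also ignores any receptive field contributed by convolutional layers before the attention stack.

```python
from typing import Sequence


def receptive_field_bins(radii: Sequence[int]) -> int:
    """Receptive field, in sequence positions, of stacked local-attention layers.

    Each layer with radius r lets a position see r further positions on each
    side, so the total span is 2 * sum(radii) + 1 (the +1 is the centre).
    """
    if any(r < 0 for r in radii):
        raise ValueError("attention radii must be non-negative")
    return 2 * sum(radii) + 1


# Assumed values for illustration only (not given in the caption).
N_ATTENTION_LAYERS = 11   # hypothetical number of attention layers
BIN_SIZE_BP = 128         # hypothetical base pairs per sequence position

# Fixed radius of 16 in every layer, as in the "Fixed radius: 16" variant.
fixed_radii = [16] * N_ATTENTION_LAYERS
fixed_bins = receptive_field_bins(fixed_radii)
fixed_bp = fixed_bins * BIN_SIZE_BP

print(f"fixed radius: {fixed_bins} positions, about {fixed_bp / 1000:.1f} kb")
```

Under these assumptions the fixed-radius variant spans a few hundred positions, which is roughly in line with the 44 kb receptive field quoted for Basenji2. The same helper accepts a list of per-layer radii, so a schedule that grows across layers can be checked the same way.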