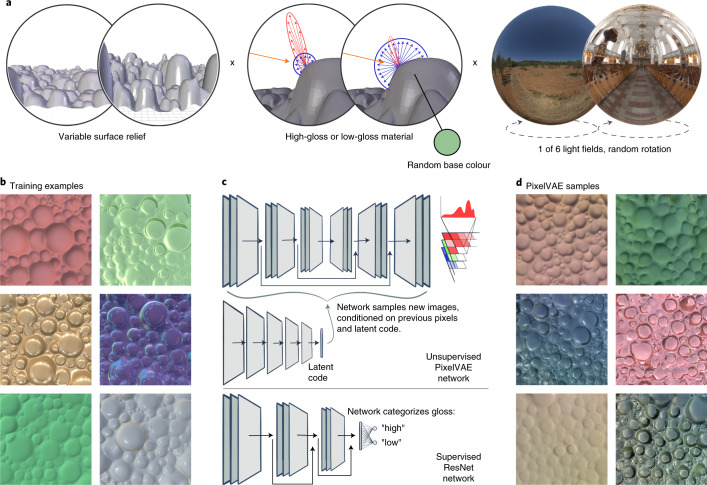

Fig. 1. An unsupervised neural network learns to generate plausible novel images from a simulated visual world.

a, The simulated physical world consisted of a bumpy surface viewed from above. The scene varied in depth of surface relief (left), surface base colour and whether the surface material had high or low gloss (middle), and illumination environment (right). b, Six examples from a training set of 10,000 images rendered from the simulated world by randomly varying the world factors. c, The dataset was used to train an unsupervised PixelVAE network (top). The network learns a probability distribution capturing image structure in the training dataset, which can be sampled from one pixel at a time to create novel images with similar structures. A substream of the network learns a highly compressed 10D latent code capturing whole-image attributes, which is used to condition the sampling of pixels. The same dataset was used to train a supervised ResNet network (bottom), which output a classification of ‘high gloss’ or ‘low gloss’ for each input image. The penultimate layer of the supervised network is a 10D fully connected layer, creating a representation of equivalent dimensionality for comparison with the unsupervised model’s latent code. d, Six example images created by sampling pixels from a trained PixelVAE network, conditioning on different latent code values to achieve diverse surface appearances. These images are not reconstructions of any specific images from the training set; they are completely novel samples from the probability distribution that the network has learned.
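The pixel-by-pixel sampling described in panel c can be illustrated with a toy sketch. This is not the PixelVAE used in the study: the weights are random stand-ins rather than learned parameters, and the names `make_toy_params` and `sample_image`, the constant `LEVELS`, and the 8×8 image size are hypothetical choices made for illustration. The sketch only shows the general idea that each pixel is drawn from a distribution that depends on previously sampled pixels and on a 10D latent code shared across the whole image.

```python
import numpy as np

LATENT_DIM = 10   # matches the 10D latent code described in the caption
LEVELS = 8        # hypothetical number of discrete intensity levels per pixel


def make_toy_params(rng):
    """Random stand-in weights; a real PixelVAE would learn these from data."""
    return {
        "w_latent": rng.normal(size=(LATENT_DIM, LEVELS)),  # latent -> logits
        "w_left": rng.normal(size=LEVELS),                  # left-neighbour weights
        "w_up": rng.normal(size=LEVELS),                    # upper-neighbour weights
    }


def sample_image(params, z, height, width, rng):
    """Sample one image pixel by pixel in raster order, conditioned on z."""
    img = np.zeros((height, width), dtype=np.int64)
    latent_logits = z @ params["w_latent"]  # whole-image conditioning term
    for r in range(height):
        for c in range(width):
            # Context: already-sampled neighbours, scaled to [0, 1].
            left = img[r, c - 1] / (LEVELS - 1) if c > 0 else 0.0
            up = img[r - 1, c] / (LEVELS - 1) if r > 0 else 0.0
            logits = latent_logits + left * params["w_left"] + up * params["w_up"]
            # Softmax over intensity levels, then draw this pixel's value.
            probs = np.exp(logits - logits.max())
            probs /= probs.sum()
            img[r, c] = rng.choice(LEVELS, p=probs)
    return img


rng = np.random.default_rng(0)
params = make_toy_params(rng)
z = rng.normal(size=LATENT_DIM)  # one latent code value
image = sample_image(params, z, 8, 8, rng)
```

Passing a different `z` changes the conditioning term for every pixel at once. In a trained model, this is what would let different latent code values yield different whole-image appearances, as in panel d.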