Abstract

There is strong evidence that the neuronal bases of language processing are remarkably similar for sign and spoken languages. However, because the meanings and linguistic structures of sign languages are encoded in movement and space and decoded through vision, differences are also present, predominantly in occipitotemporal and parietal areas such as the superior parietal lobule (SPL). Whether SPL involvement reflects domain-general visuospatial attention or processes specific to sign language comprehension remains an open question. Here we conducted two experiments to investigate the role of SPL, and the laterality of its engagement, in sign language lexical processing. First, using longitudinal and between-group designs, we mapped brain responses to sign language in hearing late learners and deaf signers. Second, using transcranial magnetic stimulation (TMS) in both groups, we tested the behavioral relevance of SPL engagement and its lateralization during sign language comprehension. In hearing participants, SPL activation was observed in the right hemisphere before the sign language course and bilaterally after it. Additionally, after the course, hearing learners exhibited greater activation in the occipital cortex and left SPL than deaf signers. TMS applied to the right SPL decreased accuracy in both hearing learners and deaf signers, whereas stimulation of the left SPL decreased accuracy only in hearing learners. Our results suggest that the right SPL might be involved in visuospatial attention, while the left SPL might support phonological decoding of signs in non-proficient signers.

Keywords: Sign language, Visuospatial attention, Superior parietal lobule, fMRI, TMS

1. Introduction

Research on sign languages has provided new perspectives on the nature of human language. Although sign and spoken languages differ fundamentally in the perceptual and articulatory systems required for production and comprehension, they also show striking parallels, in both formal linguistic properties and neural substrates (Emmorey, 2002; Poeppel et al., 2012). A number of functional magnetic resonance imaging (fMRI) studies (e.g., Emmorey et al., 2014; MacSweeney et al., 2004; 2006; 2008a) have provided strong evidence that the fundamental bases of language processing are remarkably similar for sign and spoken language. For example, sign language comprehension engages the left-lateralized perisylvian network. These areas, namely the inferior frontal gyrus (IFG), superior temporal gyrus (STG) and inferior parietal lobule (including the supramarginal and angular gyri), have therefore been highlighted as a universal language-processing core that is largely independent of modality. Despite the extensive overlap between the brain networks supporting sign and speech processing, key differences are also present. Sign languages convey linguistic information through visuospatial properties and movement, which is reflected in greater activity within modality-dependent neural systems located predominantly in occipitotemporal regions (e.g., the inferior and middle temporal gyri (ITG, MTG) and the occipital gyri) and in parietal regions such as the superior parietal lobule (SPL). Altogether, these patterns of neural activity have been consistently observed in native signers, both deaf and hearing (i.e., individuals who acquired sign language in early childhood; Corina et al., 2007; Emmorey et al., 2014; Jednoróg et al., 2015; MacSweeney et al., 2002; 2004; 2006; Newman et al., 2015; Sakai et al., 2005), as well as in hearing late learners (Johnson et al., 2018; Williams et al., 2016).

However, it remains uncertain whether the involvement of modality-dependent regions is linguistically relevant or linked exclusively to bottom-up perceptual mechanisms. Here we focus on the functional involvement of the parietal cortex, in particular the SPL, during sign language processing. Distinctive SPL engagement during sign language processing has been reported in several studies of both sign production (e.g., Emmorey et al., 2007; Emmorey et al., 2016) and sign comprehension (e.g., Braun et al., 2001; Emmorey et al., 2014; MacSweeney, Woll, Campbell, Calvert, et al., 2002; McCullough et al., 2012). The SPL is hypothesized to play an important role in analyzing spatial elements (e.g., locations on the body or in space) that carry linguistic meaning in sign languages (see Corina et al., 2006; MacSweeney, Capek, et al., 2008, and MacSweeney & Emmorey, 2020, for reviews). However, the SPL has also been associated with non-linguistic functions, such as processing movement in space (Grefkes et al., 2004) and understanding human manual actions such as grasping, reaching and tool use (see Creem-Regehr, 2009, for a review). Thus, whether the SPL makes an essential, domain-specific contribution to sign language comprehension is still an open question.

Along the same lines, it remains unclear whether SPL activation during sign language processing depends on proficiency or age of acquisition. Some evidence about the characteristics of SPL involvement in sign language comes from studies of hearing adults learning to sign. In the longitudinal fMRI study of Williams et al. (2016), participants performed a phonological task. At pre-exposure, sign-naïve individuals activated the left SPL while analyzing unknown signs only at a sensory, visuomotor level. At later learning stages, a transition to phonological processing occurred, reflected in the subsequent recruitment of language-related areas and enhanced recruitment of occipitotemporal and parietal regions, including bilateral SPL (Williams et al., 2016). Nevertheless, direct contrasts between the first and subsequent time points did not reveal any significant difference in the strength of SPL activation. Similarly, a cross-sectional study by Johnson et al. (2018) showed that, when acquired late in life and at a basic level of proficiency, sign language activated bilateral SPL in hearing learners performing lexical and sentential tasks. With respect to the laterality of SPL engagement in sign language comprehension, however, earlier research with deaf and hearing native signers has yielded mixed findings: some studies reported only left-hemisphere activation (MacSweeney et al., 2002, 2004), others only right-hemisphere activation (Corina et al., 2007), and others bilateral activation (Emmorey et al., 2002, 2014; MacSweeney et al., 2002a, 2008a).

Here we conducted two experiments to investigate the role of SPL in sign language comprehension. First, using a longitudinal fMRI design, we explored the pattern of neural changes over the course of sign language acquisition in hearing learners (HL). To uncover potential influences of age of acquisition and proficiency on SPL involvement in sign language, we also compared brain activation in deaf signers with that in HL, both when the latter were still naïve to sign language (before the sign language course) and 8 months later, at the peak of their skills (after the sign language course). Second, using transcranial magnetic stimulation (TMS) in deaf signers and in HL after the course, we tested whether SPL engagement is behaviorally relevant for sign language comprehension and whether there are hemispheric differences.

If SPL involvement in sign language reflects only the low-level spatial properties of the signal, without being linguistically relevant, then we should observe SPL activation in deaf signers as well as in HL both before and after the course, and the level of SPL activity in HL should not change over the learning period. If, however, SPL is involved in sign language comprehension, then its recruitment should change significantly as a result of sign language acquisition. Considering findings in spoken languages (see Abutalebi, 2008; Stowe & Sabourin, 2005; van Heuven & Dijkstra, 2010, for reviews), we also predicted that HL after the course would display higher activation than deaf signers, related to a lower level of automatization and a greater demand on cognitive resources. Finally, we expected TMS administered to the SPL to hinder performance in both hearing and deaf participants, with possible hemispheric differences related to the groups' different levels of sign language proficiency.

2. Materials and methods

No part of the study procedures or analyses was preregistered prior to the research being conducted. We report how we determined our sample size, all data exclusions, all inclusion/exclusion criteria, whether inclusion/exclusion criteria were established prior to data analysis, all manipulations, and all measures in the study.

2.1. Experiment 1 – fMRI

2.1.1. Participants

Thirty-three hearing females were recruited to participate in the study. Ten participants dropped out for personal or medical reasons, and three were excluded from the analysis because of technical problems with recording their responses. Therefore, data from 20 participants were included in the fMRI analysis (mean age at pre-exposure = 23.0, SD = 1.4, range = 20.3–25.7). These participants came from a larger longitudinal MRI study of sign language acquisition. Sample size and gender were matched to another (all-female) group recruited for a separate study of the tactile Braille alphabet and a spoken language (Greek). The sample size of the hearing group was also determined with regard to participants' comfort and the need for a suitable learning environment during the Polish Sign Language (polski język migowy, PJM) lessons. All participants reported Polish as their first language and were naïve to PJM prior to enrolment in the study.

Twenty-one deaf females were also recruited to participate in the experiment; we aimed to match the sample sizes of the hearing and deaf groups. Six participants dropped out for personal or medical reasons. One participant was excluded from the analysis because of technical problems with recording responses, and one because she scored below the age norms on the Raven Progressive Matrices test. Therefore, 13 deaf participants were included in the fMRI analysis (mean age = 27.7, SD = 4.1, range = 19.8–34.8). Similar sample sizes of deaf participants were reported in previous studies of brain activity in deaf individuals in response to sign language (Emmorey et al., 2010, N = 14; Jednoróg et al., 2015, N = 15; McCullough et al., 2012, N = 12). All deaf participants were born into deaf, signing families and reported PJM as their first language. Twelve were congenitally deaf; one reported hearing loss at the age of three. The mean hearing level, as determined by audiogram, was 93.3 dB for the right ear (range = 70–120 dB) and 96.9 dB for the left ear (range = 80–120 dB). Most deaf participants used hearing aids (N = 8), and their speech comprehension with the aid ranged from poor to very good (see Table S1 for details). A PJM interpreter assisted them throughout the study.

All participants included in the final analyses were right-handed and healthy, had normal or corrected-to-normal vision, and had nonverbal IQ (Raven Progressive Matrices) within the age norms. All had 13 or more years of formal education (one hearing and four deaf participants had completed higher education). None of the hearing or deaf participants had contraindications to MRI; all gave written informed consent and were paid for participation. The study was approved by the Committee for Research Ethics of the Institute of Psychology of the Jagiellonian University.

2.1.2. Polish Sign Language course and behavioral measurements

Participants attended a PJM course designed specifically for this study. The course was organized and accredited by a PJM school, EduPJM (http://edupjm.pl/), and taught by two certified PJM teachers who were deaf native signers. Classes were 1.5 h long and took place twice a week [57 meetings, 86 h in total; because of absences, participants received M = 73.5 h of instruction (range = 45.0–84.0, SD = 9.9)]. The course program introduced themes and activities of increasing complexity. By the end, learners had reached the A1/A2 proficiency level: they were able to describe their immediate environment and everyday matters, hold a conversation or comprehend a simple monologue.

2.1.3. Tasks and stimuli

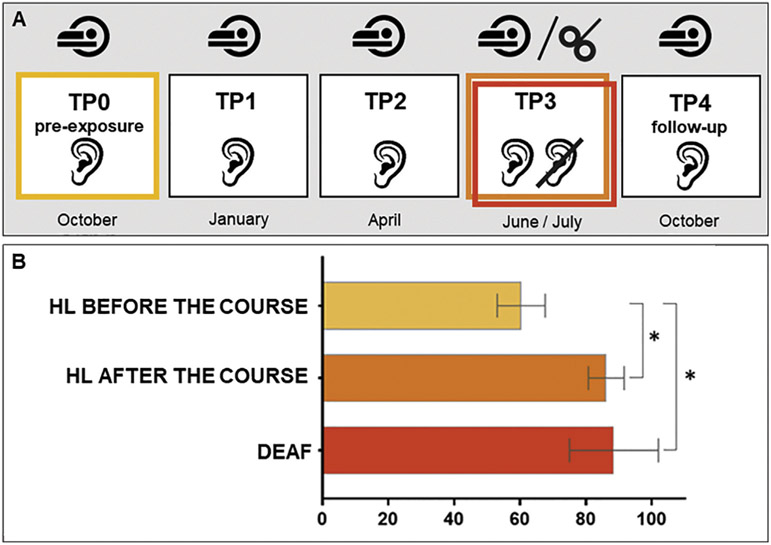

Hearing participants underwent five fMRI sessions at ~2.5-month intervals (time points TP0–TP4), where TP0 was a pre-exposure scan, TP3 a scan at the end of the course, and TP4 a follow-up scan. Deaf signers participated in a single fMRI session matched in time to TP3 (Fig. 2A).

Fig. 2 –

A) Hearing participants underwent five fMRI sessions at ~2.5-month intervals (time points TP0–TP4), where TP0 was a pre-exposure scan, TP3 a scan at the end of the course and TP4 a follow-up scan. Deaf signers participated in a single fMRI session matched in time to TP3. Approximately six weeks after TP3, hearing and deaf participants underwent the TMS session. B) Behavioral results for the sign language lexical decision task. Significant differences in accuracy between hearing learners (HL) before and after the course, and between HL and deaf participants, are indicated with an asterisk. *p ≤ .001; error bars represent SD.

The experimental task was based on lexical processing (Lexical Decision Task, LDT; Binder et al., 2009) and was presented in two conditions: Explicit (EXP), requiring a linguistic decision, and Implicit (IMP), requiring gender discrimination (no explicit linguistic decision was required, but implicit language processing could occur). To control for nonspecific repetition effects, HL also performed a control reading task in their L1 (Polish) at each TP. We reasoned that an absence of differences between time points in the L1 task would indicate that functional changes observed in the L2 (PJM) were training-specific rather than a consequence of task repetition.

PJM task: in the LDT EXP condition, participants decided whether a presented stimulus was an existing sign (e.g., FRIEND) or a pseudosign (a meaningless but possible PJM sign created by changing at least one phonological parameter of an existing sign, such as movement, handshape, location or orientation; Emmorey et al., 2011). In the IMP condition, stimuli of the same type were presented, but participants were asked to indicate the gender of the sign model in each video.
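The pseudosign construction described above (altering a phonological parameter of an existing sign) can be illustrated with a minimal Python sketch. It assumes a simplified representation in which each sign is a record of four parameters; the glosses, parameter values and the `make_pseudosign` helper are hypothetical and not part of the study's materials. The sketch changes exactly one parameter, the simplest case of "at least one", and rejects candidates that coincide with an existing sign; whether a candidate is a possible PJM form would still have to be judged by native signers.

```python
import random
from dataclasses import dataclass, replace

# The four phonological parameters named in the text.
PARAMETERS = ("handshape", "location", "movement", "orientation")


@dataclass(frozen=True)
class Sign:
    gloss: str
    handshape: str
    location: str
    movement: str
    orientation: str

    def form(self) -> tuple:
        """Phonological form only (gloss excluded)."""
        return tuple(getattr(self, p) for p in PARAMETERS)


def make_pseudosign(sign, inventory, lexicon, rng):
    """Return a copy of `sign` with exactly one parameter changed, or None.

    Candidates whose form matches an existing sign in `lexicon` are rejected,
    so the result is a non-existent form; phonotactic well-formedness is NOT
    checked here.
    """
    existing = {s.form() for s in lexicon}
    params = list(PARAMETERS)
    rng.shuffle(params)
    for param in params:
        alternatives = [v for v in inventory[param] if v != getattr(sign, param)]
        rng.shuffle(alternatives)
        for value in alternatives:
            candidate = replace(sign, gloss="*" + sign.gloss, **{param: value})
            if candidate.form() not in existing:
                return candidate
    return None


# Hypothetical toy inventory and lexicon (not real PJM parameter values).
inventory = {
    "handshape": ["B", "5", "1"],
    "location": ["chest", "chin", "neutral"],
    "movement": ["tap", "circle"],
    "orientation": ["palm-down", "palm-in"],
}
lexicon = [
    Sign("SIGN-A", "B", "chest", "tap", "palm-down"),
    Sign("SIGN-B", "5", "chin", "circle", "palm-in"),
]
pseudo = make_pseudosign(lexicon[0], inventory, lexicon, random.Random(1))
print(pseudo)
```

Restricting the change to a single parameter keeps the pseudosign maximally similar to a real sign, which is what makes the lexical decision non-trivial.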

Sign stimuli were verbs, nouns and adjectives covering a wide range of everyday categories. At each TP, the stimuli were adjusted to participants' skills, so that only signs that had already been learned were included. Task difficulty was balanced across TPs: at each session, stimuli were drawn from all signs learned before that TP. Thus, at TP1 they included signs learned during the first 3 months, whereas at TP2 and TP3 they included signs acquired not only in the most recent learning period but also earlier in the course. Stimuli presented at TP0 and TP4 were likewise drawn from all learning periods but differed from those presented at TP3. Because participants were naïve to PJM at TP0, the stimuli presented at TP4 were identical to those at TP0, although presented in a different order. Deaf signers were presented with the TP3 stimuli.
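The stimulus-selection rule can be sketched as follows, assuming that each taught sign is tagged with the learning period (1–3) in which it was introduced and that the same number of items is drawn at every TP; the function name, tags and set sizes are hypothetical. TP1–TP3 draw only from signs taught up to that point, TP0 draws from all periods while avoiding the TP3 items, and TP4 reuses the TP0 items in a new random order.

```python
import random


def build_stimulus_sets(learned_in_period, n_per_tp, seed=0):
    """Map time point (0-4) -> list of sign glosses.

    learned_in_period: dict gloss -> learning period (1, 2 or 3) in which the
    sign was taught; period p is assumed to end at time point TPp.
    """
    rng = random.Random(seed)
    sets = {}
    for tp in (1, 2, 3):
        # Only signs already taught by this time point are eligible.
        eligible = sorted(g for g, p in learned_in_period.items() if p <= tp)
        sets[tp] = rng.sample(eligible, n_per_tp)
    # TP0 draws from all learning periods but excludes the TP3 items.
    remaining = sorted(set(learned_in_period) - set(sets[3]))
    sets[0] = rng.sample(remaining, n_per_tp)
    # TP4 presents the TP0 items again, reshuffled.
    sets[4] = rng.sample(sets[0], len(sets[0]))
    return dict(sorted(sets.items()))


# Hypothetical lexicon: 10 signs taught in each of three learning periods.
lexicon = {f"SIGN{i:02d}": 1 + i // 10 for i in range(30)}
sets = build_stimulus_sets(lexicon, n_per_tp=5)
for tp, items in sets.items():
    print(tp, items)
```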

In total, 320 video clips were recorded by two native Deaf signers (one female, one male) dressed in black t-shirts and standing in front of a grey screen, with the full face and torso visible. To prevent lip reading, the models were asked not to produce pronounced mouth movements (“mouthings”) closely associated with the Polish translation of a sign. Videos were displayed using Presentation software (Neurobehavioral Systems, Berkeley, CA) on a screen at the back of the scanner, viewed via a mirror mounted on the MRI head coil. Sample stimuli are listed in Table S2, and the experimental materials are available at: https://osf.io/bgjsq/

Polish L1 control task: in the LDT condition, HL were asked to discriminate between written words (e.g., “BANANA”) and pseudowords (e.g., “BAPANA”). In the visual search condition, random letter strings were displayed on the screen. Half of the strings contained two “#” characters (e.g., KB#T#) and half did not (e.g., URCJW), and participants indicated which type of string was presented.
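For illustration, a block of visual search strings of the kind described above can be generated as below. This is a hypothetical sketch, not the study's stimulus-generation code: it assumes five-letter strings, exactly two “#” characters in target strings, and equal numbers of target and non-target strings in an 8-trial block, consistent with the examples given (KB#T#, URCJW).

```python
import random
import string


def letter_string(rng, length=5, with_hashes=False):
    """Random uppercase string; if with_hashes, two positions are replaced by '#'."""
    chars = [rng.choice(string.ascii_uppercase) for _ in range(length)]
    if with_hashes:
        for pos in rng.sample(range(length), 2):
            chars[pos] = "#"
    return "".join(chars)


def visual_search_block(n_trials=8, seed=0):
    """Half target (two '#') and half non-target strings, in random order."""
    rng = random.Random(seed)
    is_target = [True] * (n_trials // 2) + [False] * (n_trials - n_trials // 2)
    rng.shuffle(is_target)
    return [(letter_string(rng, with_hashes=t), t) for t in is_target]


for s, target in visual_search_block():
    print(s, "target" if target else "non-target")
```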

2.1.4. Procedure

The PJM and Polish L1 tasks were presented in separate runs, using a mixed block/event-related design. In the PJM task, EXP and IMP blocks alternated, and their order was counterbalanced across participants. The task consisted of 5 EXP and 5 IMP blocks with 8 pseudorandomized trials per block (4 signs and 4 pseudosigns, or 4 words and 4 pseudowords). Before each block, a fixation cross was presented for 6–8 s, followed by a 2 s visual cue indicating the type of upcoming block (EXP or IMP) and another fixation cross (1–2 s). In the PJM task, the LDT lasted on average 8.1 min in total (mean block duration = 43 s; mean stimulus length = 2.2 s; answer window = 2 s; inter-stimulus interval (ISI) = 1 s). The Polish control task lasted 6.5 min in total (block duration = 32 s; stimulus length = 1 s; answer window = 2 s; ISI = 1 s).
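The reported block durations are consistent with a simple timing model in which each trial consists of the stimulus, a response window starting at stimulus offset, and the ISI. The sketch below encodes that assumption (an interpretation of the listed parameters, not a documented schedule) and reproduces the 32 s Polish block exactly; for PJM it yields ~41.6 s, close to the reported 43 s mean, with the residual difference not captured by this simplified model (which, for example, ignores variability in clip length).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialTiming:
    stimulus_s: float  # stimulus duration (mean clip length for PJM)
    answer_s: float    # response window, assumed to start at stimulus offset
    isi_s: float       # inter-stimulus interval


def trial_period(timing: TrialTiming) -> float:
    return timing.stimulus_s + timing.answer_s + timing.isi_s


def trial_onsets(timing: TrialTiming, n_trials: int = 8, block_start_s: float = 0.0) -> list:
    """Onsets (s) of the trials in one block, assuming a fixed trial period."""
    return [block_start_s + i * trial_period(timing) for i in range(n_trials)]


def block_duration(timing: TrialTiming, n_trials: int = 8) -> float:
    return n_trials * trial_period(timing)


polish = TrialTiming(stimulus_s=1.0, answer_s=2.0, isi_s=1.0)
pjm = TrialTiming(stimulus_s=2.2, answer_s=2.0, isi_s=1.0)
print(block_duration(polish))         # 32.0, as reported
print(round(block_duration(pjm), 1))  # 41.6, vs. the reported mean of 43 s
```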

2.1.5. Imaging parameters

MRI data were acquired on a 3 T Siemens Trio Tim MRI scanner using a 12-channel head coil. T1-weighted (T1-w) images were acquired with the following parameters: 176 slices, slice thickness = 1 mm, TR = 2530 ms, TE = 3.32 ms, flip angle = 7°, FOV = 256 mm, matrix size = 256 × 256, voxel size = 1 × 1 × 1 mm. An echo planar imaging (EPI) sequence was used for functional imaging. Forty-one slices were collected with the following parameters: slice thickness = 3 mm, TR = 2500 ms, flip angle = 80°, FOV = 216 × 216 mm, matrix size = 72 × 72, voxel size = 3 × 3 × 3 mm.
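The reported in-plane voxel sizes follow from FOV divided by matrix size, and the run durations translate into approximate volume counts at TR = 2.5 s. The arithmetic is sketched below; the volume counts are estimates derived from the mean run durations (they are not reported in the text), and the slab-coverage figure assumes no inter-slice gap, which is not reported either.

```python
import math


def in_plane_voxel_mm(fov_mm, matrix):
    """In-plane voxel size = field of view / matrix size."""
    return fov_mm / matrix


def n_volumes(run_s, tr_s):
    """Number of complete volumes acquired during a run of `run_s` seconds."""
    return math.floor(run_s / tr_s)


print(in_plane_voxel_mm(216, 72))   # EPI: 3.0 mm, matching the reported 3 x 3 x 3 mm
print(in_plane_voxel_mm(256, 256))  # T1-w: 1.0 mm
print(41 * 3)                       # slab coverage in mm, assuming no inter-slice gap
print(n_volumes(8.1 * 60, 2.5), n_volumes(6.5 * 60, 2.5))  # mean PJM run, Polish run
```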

2.1.6. fMRI analyses

Pre-processing and statistical analyses of the fMRI data were performed using SPM12 (Wellcome Imaging Department, University College, London, UK, http://fil.ion.ucl.ac.uk/spm) running in MATLAB R2013b (The MathWorks Inc., Natick, MA, USA). First, where necessary, structural and functional images were manually reoriented so that the origin lay at the anterior commissure. Next, functional volumes from all TPs were realigned together to the first scan to correct for head motion. For hearing participants, the SPM longitudinal registration toolbox was then used to create an average T1-w image from the five structural scans, ensuring an identical normalization procedure across time points. Functional images were normalized to MNI (Montreal Neurological Institute) space using deformation fields derived from the T1-w image (the average image for hearing participants), co-registered to the mean functional image. Finally, normalized images were smoothed with a 6 mm full-width at half-maximum (FWHM) Gaussian kernel.
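A 6 mm FWHM kernel corresponds to a Gaussian standard deviation of FWHM / (2√(2 ln 2)) ≈ 2.55 mm. The NumPy sketch below shows that conversion and a separable 3-D Gaussian filter in the spirit of the smoothing step; it is not SPM's implementation, and the 2 mm voxel size of the normalized images is an assumption, since the output voxel size is not reported.

```python
import numpy as np

# sigma = FWHM / (2 * sqrt(2 * ln 2))
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def fwhm_to_sigma_vox(fwhm_mm, voxel_mm):
    """Gaussian standard deviation, in voxels, for a kernel of given FWHM (mm)."""
    return fwhm_mm * FWHM_TO_SIGMA / voxel_mm


def gaussian_kernel_1d(sigma_vox, truncate=4.0):
    """Normalized 1-D Gaussian kernel truncated at `truncate` standard deviations."""
    radius = int(np.ceil(truncate * sigma_vox))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma_vox) ** 2)
    return kernel / kernel.sum()


def smooth_3d(volume, fwhm_mm=6.0, voxel_mm=(2.0, 2.0, 2.0)):
    """Separable Gaussian smoothing: convolve along each axis in turn.

    Assumes each axis is at least as long as its kernel, so that
    np.convolve(mode="same") returns an array of the original length.
    """
    out = np.asarray(volume, dtype=float)
    for axis, vox in enumerate(voxel_mm):
        kernel = gaussian_kernel_1d(fwhm_to_sigma_vox(fwhm_mm, vox))
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="same"), axis, out
        )
    return out


# Smoothing a single-voxel impulse spreads it out but preserves its total.
impulse = np.zeros((21, 21, 21))
impulse[10, 10, 10] = 1.0
smoothed = smooth_3d(impulse)
print(round(6.0 * FWHM_TO_SIGMA, 3), round(smoothed.sum(), 6))
```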

Statistical analyses were performed at the participant (1st) and group (2nd) levels using general linear models. At the 1st level, onsets of correct and incorrect trials in the EXP and IMP conditions, as well as onsets of missed responses, were entered into the design matrices together with six head-movement regressors of no interest. The onset regressors were convolved with the canonical hemodynamic response function implemented in SPM12. A high-pass filter with a cutoff of 160 s (1/160 Hz) was applied, adjusted to the duration of the LDT blocks (mean = 43 s). At the 2nd level, a set of analyses was performed for HL pre- and post-training and for deaf signers, using beta estimates of correct trials in the EXP condition. First, the LDT was investigated in each group using one-sample t-tests. Then, two-sample t-tests were performed to compare brain activity between hearing (at TP0 and TP3) and deaf participants. Next, the EXP and IMP conditions from TP3 were entered into a flexible factorial model with 2 (group: HL, deaf) × 2 (condition: EXP, IMP) factors and an additional subject factor. The group factor was specified with unequal variance, and the condition and subject factors with equal variance; a contrast testing the group × condition interaction was then computed. To explore the pattern of neural changes in hearing participants between TP0 and TP4, the EXP and IMP conditions from all TPs were entered into a flexible factorial model with 5 (time point) × 2 (condition: EXP, IMP) factors, both specified with unequal variance, and a subject factor specified with equal variance; a contrast testing the main effect of time was computed. Finally, post-hoc pairwise comparisons between consecutive time points in the EXP condition were performed (TP0 vs. TP1, TP1 vs. TP2, TP2 vs. TP3 and TP3 vs. TP4; results are reported in Supplementary Materials 1.1, Figure S1 and Table S4).
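The 1st-level model can be illustrated with a simplified NumPy sketch. Trial onsets are turned into boxcars at a finer "microtime" resolution, convolved with a double-gamma HRF and sampled once per TR, while slow drifts are modeled with a discrete cosine basis whose order is set by the 160 s cutoff. The HRF shape (a gamma density with shape 6 minus one sixth of a gamma density with shape 16) and the DCT order rule are intended to approximate SPM's defaults, but this is an illustrative approximation, not SPM12's code; details such as the microtime reference bin are simplified.

```python
import math

import numpy as np


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density evaluated at times t (zero for t <= 0)."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = (t[pos] ** (shape - 1) * np.exp(-t[pos] / scale)
                / (math.gamma(shape) * scale ** shape))
    return out


def double_gamma_hrf(dt, length_s=32.0):
    """SPM-like HRF: gamma(shape 6) minus 1/6 of gamma(shape 16), unit sum."""
    t = np.arange(0.0, length_s, dt)
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def event_regressor(onsets_s, durations_s, n_scans, tr, microtime=16):
    """Boxcar for each trial, convolved with the HRF, sampled at each TR."""
    dt = tr / microtime
    n_fine = n_scans * microtime
    boxcar = np.zeros(n_fine)
    for onset, dur in zip(onsets_s, durations_s):
        start = int(round(onset / dt))
        stop = max(start + 1, int(round((onset + dur) / dt)))
        boxcar[start:stop] = 1.0
    fine = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    return fine[::microtime]


def dct_highpass_basis(n_scans, tr, cutoff_s=160.0):
    """Low-frequency cosine regressors (constant excluded) below ~1/cutoff_s Hz."""
    order = int(2 * (n_scans * tr) / cutoff_s + 1)
    t = np.arange(n_scans)
    cols = [np.cos(np.pi * (2 * t + 1) * k / (2 * n_scans)) for k in range(1, order)]
    return np.column_stack(cols) if cols else np.zeros((n_scans, 0))


def highpass(y, basis):
    """Remove the drift components spanned by `basis` from time series y."""
    beta, *_ = np.linalg.lstsq(basis, y, rcond=None)
    return y - basis @ beta


# Hypothetical example: one 2.2 s sign clip at 10 s, 194 scans at TR = 2.5 s.
reg = event_regressor([10.0], [2.2], n_scans=194, tr=2.5)
X0 = dct_highpass_basis(194, 2.5)
print(reg.argmax(), X0.shape)
```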

Polish L1 control task: at the 1st level, task- and time-point-specific timings of all conditions, together with six head-movement regressors, were entered into the model. At the 2nd level, a one-way within-subject ANOVA with time point as a five-level factor was computed for the LDT condition, within a mask of task-positive activations from the experimental (PJM) and control conditions (Brennan et al., 2013).
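For a single voxel, the logic of a one-way within-subject ANOVA over the five time points can be written out explicitly. The sketch below computes the classical repeated-measures F statistic under the sphericity assumption; it is not equivalent to the SPM implementation, which estimates non-sphericity, and the input values are hypothetical. With 20 participants and 5 time points, the degrees of freedom would be (4, 76).

```python
import numpy as np


def rm_anova_oneway(y):
    """Classical one-way repeated-measures ANOVA (sphericity assumed).

    y: array of shape (n_subjects, k_levels), e.g. one voxel's betas at
    each time point. Returns (F, df_effect, df_error).
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_time = n * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_subject = k * ((y.mean(axis=1) - grand) ** 2).sum()
    ss_total = ((y - grand) ** 2).sum()
    ss_error = ss_total - ss_time - ss_subject  # time x subject residual
    df_time, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_time / df_time) / (ss_error / df_error), df_time, df_error


# Hypothetical values: 3 participants x 3 time points.
print(rm_anova_oneway([[1, 3, 2], [2, 5, 4], [3, 4, 6]]))
```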

For the main effect of time, task-related responses were considered significant at p < .05 with voxel-level family-wise error (FWE) correction and an additional extent threshold of >20 voxels. In all other models, task-related responses were considered significant at p < .05 with cluster-level FWE correction (FWEc). Anatomical structures were identified with the probabilistic Harvard–Oxford Atlas (http://www.cma.mgh.harvard.edu/) for cortical and subcortical areas and the AAL atlas (Tzourio-Mazoyer et al., 2002) for cerebellar areas. Finally, to illustrate the pattern of activity changes over time and the group × condition interaction in the left and right SPL, independent, anatomically defined regions of interest (ROIs) were created using the Harvard–Oxford Atlas.
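The extent threshold applied to the main effect of time (clusters of more than 20 suprathreshold voxels) can be sketched as a connected-components pass over a thresholded statistic map. The sketch assumes 18-connectivity (face- and edge-adjacent voxels), intended to mirror the cluster definition commonly used in SPM, and takes the voxel-level height threshold as an input rather than deriving the FWE-corrected value; the function names and the toy map are hypothetical.

```python
from collections import deque
from itertools import product

import numpy as np

# 18-connectivity: neighbours sharing a face or an edge (corners excluded).
NEIGHBOURS_18 = [d for d in product((-1, 0, 1), repeat=3) if 0 < sum(map(abs, d)) < 3]


def label_clusters(mask):
    """Label connected suprathreshold voxels; returns (labels, n_clusters)."""
    labels = np.zeros(mask.shape, dtype=int)
    n = 0
    for seed in zip(*np.nonzero(mask)):
        if labels[seed]:
            continue
        n += 1
        labels[seed] = n
        queue = deque([seed])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS_18:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= c < s for c, s in zip(nb, mask.shape))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = n
                    queue.append(nb)
    return labels, n


def extent_threshold(stat_map, height, min_voxels=21):
    """Keep voxels above `height` in clusters of >= min_voxels (i.e. > 20)."""
    mask = stat_map > height
    labels, n = label_clusters(mask)
    keep = np.zeros(mask.shape, dtype=bool)
    for k in range(1, n + 1):
        cluster = labels == k
        if cluster.sum() >= min_voxels:
            keep |= cluster
    return keep


# Hypothetical map: a 27-voxel and an 8-voxel blob above threshold.
stat = np.zeros((12, 12, 12))
stat[1:4, 1:4, 1:4] = 5.0
stat[7:9, 7:9, 7:9] = 5.0
print(label_clusters(stat > 3.0)[1], extent_threshold(stat, 3.0).sum())
```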

2.2. Experiment 2 (TMS)

2.2.1. Participants

Eighteen hearing participants who had taken part in Experiment 1 also participated in the subsequent TMS study, including two participants who had been excluded from the Experiment 1 analysis due to technical issues. Four individuals were excluded from the TMS analysis because of incomplete data, problems with localizing target structures or reported discomfort during stimulation. Therefore, 14 hearing participants were included in the TMS analysis. Additionally, 13 deaf participants previously enrolled in Experiment 1 took part in the TMS session, one of whom had been excluded from the fMRI analyses due to technical problems.

Both hearing and deaf participants had no contraindications to TMS, gave written informed consent and were paid for their participation. The experiment was approved by the Committee for Research Ethics of the Institute of Psychology of the Jagiellonian University.

2.2.2. Task and stimuli

Approximately six weeks after TP3 (hearing group: mean = 5.7 weeks, SD = 1.6, range = 4.6–10.7), a repetitive TMS (rTMS) experiment was conducted (Fig. 2A). Hearing and deaf participants watched sign language video clips and performed the LDT EXP task, discriminating between signs and pseudosigns. The stimuli were produced by the same native PJM models as in the fMRI experiment, with the full face and torso visible, and were presented as short videos (~2 s long; Fig. 1A). Responses were collected using a Cedrus RB-840 response pad (https://cedrus.com/rb_series/) placed on a table in front of the seated participants. Participants pressed a button with the index finger of their right hand for one decision (sign) and with the middle finger for the other (pseudosign). In total, 480 video clips were used in the TMS study (240 signs and 240 pseudosigns).

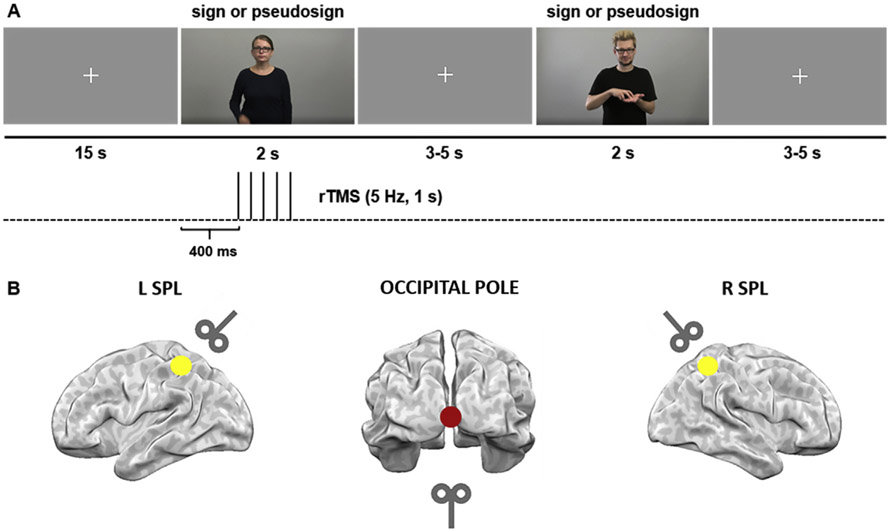

Fig. 1 –

Experimental design of the TMS study. A) Hearing and deaf participants performed a lexical task in sign language, requiring discrimination between signs and pseudosigns. Each run started with a fixation cross (15 s). The stimuli were ~2 s long and were followed by a fixation cross displayed for 3–5 s. Responses were recorded from stimulus onset until 1 s after stimulus offset. Pulses were administered at 400, 600, 800, 1000 and 1200 ms after stimulus onset (5 Hz). B) TMS was delivered at 110% of the individual motor threshold to three target sites: the right SPL, the left SPL and a control site (the occipital pole).

2.2.3. Localization of TMS sites

During the experiment, TMS was delivered to three target sites: the right SPL (R SPL), the left SPL (L SPL) and a control site, the occipital pole (OP; Fig. 1B). Both SPL targets were marked on each participant's structural MRI scan. In the hearing group, TMS targets were based on individual structural MRI and fMRI data from TP3, using activation peaks from the LDT EXP condition. The OP was localized anatomically for each participant, and the coil was placed at a ~45° angle so that its center did not touch the skull. Three participants wore MRI-compatible corrective glasses during the fMRI session, which caused artifacts in their T1-w images; their target regions were therefore localized on a standard MNI template. Because the fMRI analysis revealed no significant clusters of activation in bilateral SPL in the deaf group, all of their target regions were determined from anatomical landmarks in their native structural (T1-w) images. To verify the accuracy of the localization procedure, single-subject coordinates for the right and left SPL were normalized to MNI space and averaged across participants. The resulting mean MNI coordinates were x = −30 ± 9, y = −55 ± 8, z = 42 ± 8 (left SPL) and x = 33 ± 8, y = −55 ± 5, z = 44 ± 8 (right SPL) for the HL group, and x = −30 ± 9, y = −59 ± 8, z = 56 ± 7 (left SPL) and x = 30 ± 7, y = −60 ± 7, z = 56 ± 5 (right SPL) for the deaf group (see Table S5 for individual participants' MNI coordinates).
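The averaging step used to check localization accuracy amounts to a per-axis mean and standard deviation over participants' normalized coordinates. The sketch below assumes the sample standard deviation (ddof = 1), which the text does not specify, and uses hypothetical coordinates. A simple sign check on y is included because parietal targets lie posterior to the anterior commissure and should therefore have negative MNI y values.

```python
import numpy as np


def summarize_coords(coords_mm, ddof=1):
    """Per-axis mean and SD of an (n_participants, 3) array of MNI coordinates."""
    coords = np.asarray(coords_mm, dtype=float)
    return coords.mean(axis=0), coords.std(axis=0, ddof=ddof)


def all_posterior(coords_mm):
    """True if every coordinate lies posterior to the anterior commissure (y < 0)."""
    return bool(np.all(np.asarray(coords_mm, dtype=float)[:, 1] < 0))


# Hypothetical single-subject left SPL targets (x, y, z) in MNI space.
left_spl = [[-30, -55, 42], [-32, -57, 44], [-28, -53, 40]]
mean, sd = summarize_coords(left_spl)
print(mean, sd, all_posterior(left_spl))
```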

2.2.4. TMS protocol

A MagPro X100 stimulator (MagVenture, Hückelhoven, Germany) with a 70 mm figure-eight coil was used to apply TMS. A neuronavigation system (Brainsight software, Rogue Research, Montreal, Canada) with a Polaris Vicra infrared camera (Northern Digital, Waterloo, Ontario, Canada) was used to guide stimulation.

Pulses were administered to each target site at 400, 600, 800, 1000 and 1200 ms after stimulus onset (5 Hz; Fig. 1A). The first pulse was delivered 400 ms after video onset because each video began with the model's hands at rest along the body, and the sign or pseudosign itself started ~400 ms after video onset. Intensity was set to 110% of the individual motor threshold, defined by a visible twitch of the hand in response to single TMS pulses delivered to the hand area of the left primary motor cortex (average intensity = 40% of maximum stimulator output; SD = 6%, range = 27–54%). Pulses were applied on a pseudorandomly selected half of the trials (TMS vs. no-TMS conditions). There were three experimental runs, one per target site, and the order of stimulated sites was counterbalanced across participants.
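The pulse schedule and intensity rule reduce to simple arithmetic: five pulses spaced 200 ms apart (5 Hz) starting 400 ms after video onset, delivered at 110% of the motor threshold. The sketch below assumes that intensities are rounded to the nearest whole percent of maximum stimulator output (rounding is not described in the text); the motor-threshold value in the example is hypothetical.

```python
def pulse_times_ms(first_ms=400, freq_hz=5, n_pulses=5):
    """Pulse times relative to video onset for a fixed-frequency train.

    Integer division assumes freq_hz divides 1000 (true for 5 Hz).
    """
    interval_ms = 1000 // freq_hz  # 200 ms at 5 Hz
    return [first_ms + i * interval_ms for i in range(n_pulses)]


def stimulation_intensity(mt_percent, factor_percent=110):
    """Output (% of maximum) at factor_percent of the motor threshold, rounded half up."""
    return (mt_percent * factor_percent + 50) // 100


print(pulse_times_ms())           # [400, 600, 800, 1000, 1200]
print(stimulation_intensity(36))  # hypothetical MT of 36% -> 40% output
```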

2.2.5. Procedure

After participants provided informed consent and completed a safety screening questionnaire, their structural MRI scan with the marked TMS target sites was co-registered to their head. Next, the resting motor threshold was measured. To familiarize participants with the task and the TMS protocol, two short training sessions were performed, one without and one with TMS. The TMS experiment proper was then conducted. Each run started with a fixation cross (15 s) and consisted of 160 stimuli counterbalanced between TMS and no-TMS conditions (i.e., a given sign was presented with TMS for half of the participants and without TMS for the other half). Each trial was followed by a fixation cross displayed for 3–5 s, and responses were recorded from stimulus onset until 1 s after stimulus offset (Fig. 1A). Participants responded using the response pad. Each run included two short breaks and lasted ~20 min in total, and runs were separated by breaks lasting a few minutes.
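The between-participant counterbalancing of TMS and no-TMS trials can be sketched as follows: the 160 items of a run are split once into two fixed halves, even- and odd-numbered participants receive TMS on opposite halves, and trial order is then shuffled per participant. The split rule, the seeds and the function name are hypothetical; any further constraints of the study's pseudorandomization (e.g., balancing signs and pseudosigns within each condition) are not reproduced here.

```python
import random


def assign_tms(items, participant_index, split_seed=0):
    """Return a shuffled list of (item, tms_on) pairs for one participant."""
    rng_split = random.Random(split_seed)  # identical split for all participants
    order = list(items)
    rng_split.shuffle(order)
    half = len(order) // 2
    first, second = order[:half], order[half:]
    # Even-indexed participants get TMS on the first half, odd-indexed on the second.
    tms_set = set(first if participant_index % 2 == 0 else second)
    trials = [(item, item in tms_set) for item in order]
    random.Random(1000 + participant_index).shuffle(trials)  # per-participant order
    return trials


run_items = [f"clip{i:03d}" for i in range(160)]
p0 = assign_tms(run_items, 0)
p1 = assign_tms(run_items, 1)
print(sum(on for _, on in p0), sum(on for _, on in p1))
```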

3. Results

3.1. Experiment 1 – fMRI

3.1.1. Behavioral results

Two-sample t-tests were performed to examine differences in LDT accuracy between hearing and deaf participants. First, HL before the course were compared with deaf signers; the deaf group performed significantly better [t(31) = 7.69; p < .001]. The comparison between HL after the course and deaf signers showed no significant difference between the groups (p = .45). In addition, a paired t-test comparing HL performance before and after the course revealed an improvement by the end of the PJM course, reflected in significantly higher accuracy post-training than pre-training [t(19) = 11.65; p < .001; Fig. 2B]. Details of participants' scores can be found in Table S3.
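The reported statistics correspond to the standard pooled-variance two-sample t-test and the paired t-test, and their degrees of freedom follow directly from the sample sizes (20 + 13 − 2 = 31 for HL vs. deaf; 20 − 1 = 19 for the paired comparison). A dependency-free sketch is given below; the input values in the example are hypothetical, and p-values would in practice be obtained from a t distribution, for example via `scipy.stats.ttest_ind` and `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, variance


def pooled_t(a, b):
    """Student's two-sample t (equal variances assumed) and its degrees of freedom."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, df


def paired_t(before, after):
    """Paired t on the within-participant differences (after - before)."""
    d = [y - x for x, y in zip(before, after)]
    n = len(d)
    t = mean(d) / (math.sqrt(variance(d)) / math.sqrt(n))
    return t, n - 1


# Hypothetical accuracy values, for illustration only.
print(pooled_t([1, 2, 3], [4, 5, 6]))
print(paired_t([1, 2, 3, 4], [2, 4, 5, 7]))
```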

3.1.2. fMRI results

3.1.2.1. PJM processing in hearing learners before the course.

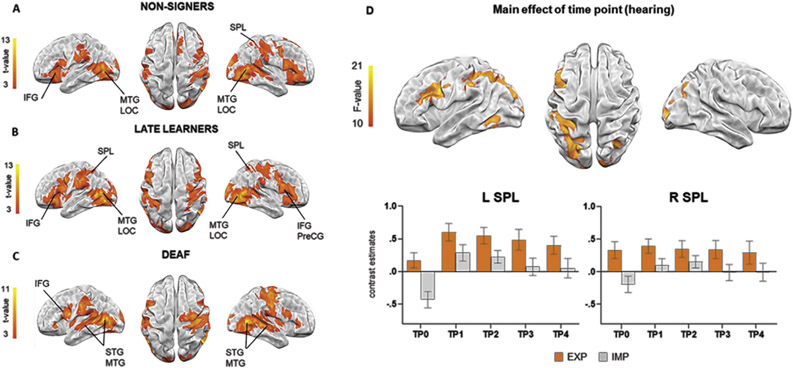

At pre-exposure, the LDT performed by hearing participants resulted in activation in the right, but not the left, SPL. Bilateral activation was also observed in IFG pars opercularis, precentral gyrus (PreCG), postcentral gyrus and supramarginal gyrus (SMG). Furthermore, significant clusters were observed in bilateral MTG and the superior part of the lateral occipital cortex (LOC). Additionally, subcortical regions such as the thalamus and putamen were engaged bilaterally (see Fig. 3A and Table 1).

Fig. 3 –

A-C) Brain activations during lexical processing of sign language for each group (p < .05; FWEc). D) Results from the main effect of time point in hearing learners (p < .05; FWE); bar graphs of independently defined ROIs are shown to illustrate the time course of changes. Error bars represent SEM. EXP: explicit condition; IMP: implicit condition (gender discrimination).

Table 1 –

Results from one-sample t-test for each group and main effect of time in hearing learners.

| Brain regions | Cluster size | F-value | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| HL before the course | | | | | |
| Left hemisphere | | | | | |
| Lateral Occipital Cortex (inferior) | 1957 | 11.3 | −46 | −68 | 4 |
| Temporal Occipital Fusiform Cortex | | 7.8 | −42 | −50 | −18 |
| Middle Temporal Gyrus (temporooccipital) | | 7.3 | −52 | −58 | 0 |
| Inferior Frontal Gyrus (opercularis) | 1768 | 7.0 | −52 | 10 | 8 |
| Postcentral Gyrus | 906 | 7.4 | −58 | −20 | 26 |
| Superior Parietal Lobule | 134 | 5.0 | −30 | −50 | 58 |
| Right hemisphere | | | | | |
| Lateral Occipital Cortex (inferior) | 8594 | 17.3 | 48 | −66 | 2 |
| Postcentral Gyrus | | 10.3 | 62 | −18 | 38 |
| Temporal Occipital Fusiform Cortex | | 9.6 | 40 | −56 | −20 |
| Paracingulate Gyrus | 878 | 6.8 | 4 | 20 | 44 |
| Frontal Pole | | 4.8 | 8 | 44 | 50 |
| Brain-Stem | 349 | 6.4 | 4 | −26 | −2 |
| Thalamus | | 5.5 | 10 | −12 | 2 |
| Putamen | 182 | 5.8 | 20 | 6 | 6 |
| HL after the course | | | | | |
| Left hemisphere | | | | | |
| Inferior Frontal Gyrus (opercularis) | 2597 | 10.4 | −48 | 10 | 28 |
| Frontal Pole | | 9.1 | −44 | 36 | −2 |
| Postcentral Gyrus | 1554 | 7.7 | −58 | −18 | 28 |
| Supramarginal Gyrus (anterior) | | 7.7 | −54 | −28 | 34 |
| Left Thalamus | 1384 | 8.9 | −18 | −30 | −2 |
| Insular Cortex | 123 | 7.3 | −38 | −4 | 14 |
| Right hemisphere | | | | | |
| Lateral Occipital Cortex (inferior) | 11262 | 17.2 | 46 | −62 | 0 |
| Occipital Pole | | 13.9 | 18 | −98 | 6 |
| Inferior Frontal Gyrus (triangularis) | 2411 | 9.8 | 56 | 34 | 16 |
| Precentral Gyrus | | 9.4 | 60 | 12 | 28 |
| Right Thalamus | 1384 | 8.4 | 8 | −14 | 2 |
| Paracingulate Gyrus | 232 | 5.1 | 4 | 18 | 48 |
| Deaf | | | | | |
| Left hemisphere | | | | | |
| Supramarginal Gyrus (anterior) | 2506 | 12.9 | −54 | −30 | 36 |
| Lateral Occipital Cortex (inferior) | | 10.5 | −46 | −64 | 10 |
| Inferior Frontal Gyrus (opercularis) | 662 | 9.2 | −52 | 10 | 20 |
| Occipital Pole | 657 | 14.3 | −10 | −100 | −2 |
| Occipital Fusiform Gyrus | | 5.8 | −14 | −82 | −10 |
| Amygdala | 249 | 10.2 | −22 | −6 | −12 |
| Inferior Frontal Gyrus (triangularis) | 80 | 5.5 | −50 | 28 | −2 |
| Frontal Orbital Cortex | | 4.7 | −52 | 22 | −8 |
| Frontal Operculum Cortex | | 4.1 | −38 | 26 | 0 |
| Right hemisphere | | | | | |
| Lateral Occipital Cortex (inferior) | 5279 | 11.9 | 52 | −66 | 2 |
| Postcentral Gyrus | 1886 | 10.4 | 50 | −18 | 42 |
| Putamen | 800 | 9.4 | 30 | −2 | −4 |
| Precentral Gyrus | 347 | 9.5 | 54 | 10 | 14 |
| Supplementary Motor Cortex | 110 | 7.3 | 6 | 6 | 68 |
| Superior Frontal Gyrus | | 4.9 | 6 | 20 | 68 |
| Central Opercular Cortex | 94 | 7.7 | 38 | 0 | 14 |
| Inferior Frontal Gyrus (triangularis) | 92 | 6.4 | 52 | 34 | 6 |
| Frontal Orbital Cortex | | 5.3 | 42 | 28 | −2 |
| Inferior Frontal Gyrus (opercularis) | | 5.1 | 54 | 16 | 2 |
| Main effect of time point – HL | | | | | |
| Left hemisphere | | | | | |
| Lateral Occipital Cortex (superior) | 1021 | 18.7 | −28 | −70 | 28 |
| Superior Parietal Lobule | | 16.6 | −32 | −56 | 46 |
| Supramarginal Gyrus (anterior) | | 15.6 | −50 | −30 | 38 |
| Precentral Gyrus | 521 | 21.3 | −42 | 4 | 32 |
| Inferior Frontal Gyrus (triangularis) | | 14.2 | −40 | 26 | 20 |
| Temporal Occipital Fusiform Cortex | 175 | 15.5 | −44 | −56 | −16 |
| Right hemisphere | | | | | |
| Occipital Fusiform Gyrus | 144 | 15.5 | 18 | −76 | −10 |
| Lateral Occipital Cortex (superior) | 128 | 16.6 | 32 | −66 | 32 |
| Precuneous | 45 | 14.9 | 20 | −56 | 22 |
| Occipital Pole | 42 | 13.0 | 12 | −96 | 18 |

3.1.2.2. PJM processing in hearing learners after the course and in deaf signers.

One-sample t-tests revealed that both HL after the course and deaf signers activated prefrontal regions, including bilateral IFG and PreCG, together with occipitotemporal areas (MTG and LOC). Both groups also activated SMG and subcortical regions such as the thalamus and putamen. In addition, deaf signers recruited bilateral STG. After the course, HL participants recruited bilateral SPL, whereas no activation in these regions was observed in deaf participants (see Fig. 3B and C and Table 1).

3.1.2.3. Main effect of time point in hearing learners.

Over the course of PJM learning, brain activation in hearing individuals during the LDT changed in left-hemisphere cortical regions: PreCG, IFG and SMG, as well as SPL. No significant changes in activation over time were observed in the right SPL. Additional significant clusters were found in bilateral LOC and fusiform cortex (Fig. 3D and Table 1). For more detailed results and a discussion of this analysis, see Supplementary Materials 1.2. Finally, pairwise comparisons between consecutive time points revealed significant activation increases from TP0 to TP1 (TP1 > TP0) in bilateral LOC, extending to SPL in the left hemisphere, as well as in left PreCG and IFG (see Supplementary Materials 1.1, Figure S1 and Table S4). An analogous contrast testing the main effect of time in the control task (reading in L1) did not reveal any significant clusters.

3.1.2.4. Differences in PJM processing between groups.

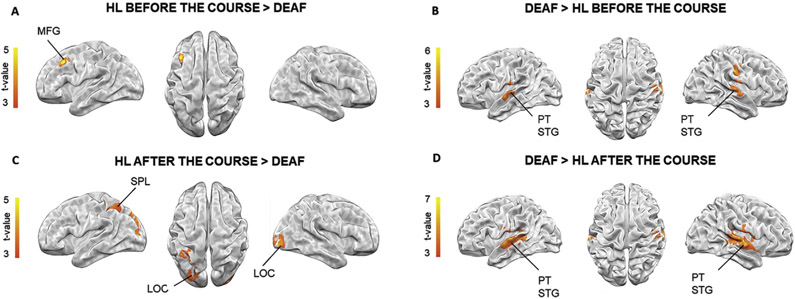

Two-sample t-tests with the contrasts deaf > HL before the course and deaf > HL after the course revealed greater activation in bilateral planum temporale and STG in both comparisons. The contrast HL before the course > deaf revealed only one cluster, in the left MFG. The contrast HL after the course > deaf showed greater activity in bilateral LOC and left SPL (see Fig. 4 and Table 2).

Fig. 4 –

Brain activation differences during lexical processing of sign language between groups at p < .05; FWEc.

Table 2 –

Results from two-sample t-tests showing differences during lexical processing of sign language between groups.

| Brain regions | Cluster size | t-value | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| HL before the course > deaf | | | | | |
| Left hemisphere | | | | | |
| Middle Frontal Gyrus | 138 | 5.0 | −42 | 18 | 38 |
| Deaf > HL before the course | | | | | |
| Left hemisphere | | | | | |
| Central Opercular Cortex | 260 | 5.2 | −56 | −14 | 18 |
| Superior Temporal Gyrus (anterior) | | 4.9 | −62 | −10 | −2 |
| Cerebelum VI | 129 | 4.0 | −20 | −54 | −20 |
| Right hemisphere | | | | | |
| Superior Temporal Gyrus (posterior) | 188 | 4.9 | 66 | −20 | 4 |
| Planum Temporale | | 4.5 | 58 | −24 | 8 |
| Postcentral Gyrus | 135 | 4.9 | 52 | −16 | 38 |
| HL after the course > deaf | | | | | |
| Left hemisphere | | | | | |
| Lateral Occipital Cortex (superior) | 211 | 5.0 | −30 | −84 | 32 |
| Superior Parietal Lobule | 152 | 4.8 | −42 | −46 | 52 |
| Lateral Occipital Cortex (superior) | | | | | |
| Right hemisphere | | | | | |
| Lateral Occipital Cortex (inferior) | 132 | 4.5 | 38 | −88 | −4 |
| Deaf > HL after the course | | | | | |
| Left hemisphere | | | | | |
| Planum Temporale | 889 | 5.8 | −60 | −18 | 4 |
| Temporal Occipital Fusiform Cortex | 219 | 3.9 | −24 | −62 | −20 |
| Right hemisphere | | | | | |
| Planum Temporale | 1246 | 6.7 | 60 | −22 | 8 |
| Superior Temporal Gyrus (posterior) | | 6.4 | 66 | −10 | 2 |
| Lingual Gyrus | 219 | 5.0 | 4 | −76 | −16 |
| Temporal Pole | 226 | 4.5 | 30 | 6 | −20 |
| Right Amygdala | 128 | 4.1 | 32 | 6 | −20 |

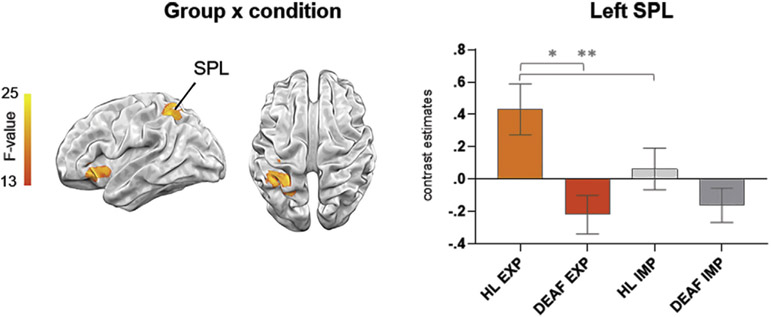

Lastly, the whole-brain analysis revealed a significant interaction between group and condition in the left SPL and left insula (p < .05, FWEc). A subsequent ROI analysis of the left SPL (defined independently from the Harvard–Oxford atlas) using a mixed 2 (group) x 2 (condition) rmANOVA revealed a significant main effect of group [F (1, 31) = 6.95, p < .05, eta-squared = .18] and a significant interaction between group and condition [F (1, 31) = 6.64, p < .05, eta-squared = .18]. Post hoc t-tests showed that left SPL activity was significantly greater in the HL than in the deaf group only in the EXP condition, and significantly greater during the EXP than the IMP condition within the HL group (Fig. 5).
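The effect sizes above, reported as eta-squared, can be reproduced as partial eta-squared from each F statistic and its degrees of freedom, since F = (SS_effect/df_effect)/(SS_error/df_error) implies η²p = F·df_effect/(F·df_effect + df_error). A minimal sketch, assuming the reported values are partial eta-squared (the text does not state this explicitly); the function name is hypothetical:

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta-squared from an F statistic and its degrees of freedom.

    Assumes the standard identity eta_p^2 = SS_effect / (SS_effect + SS_error),
    which rearranges to (F * df_effect) / (F * df_effect + df_error).
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Left SPL ROI, mixed 2 (group) x 2 (condition) rmANOVA, values from the text above
main_effect_group = partial_eta_squared(6.95, 1, 31)
group_by_condition = partial_eta_squared(6.64, 1, 31)
print(round(main_effect_group, 2), round(group_by_condition, 2))
```

The same identity applies to the TMS ANOVA: for example, `partial_eta_squared(3.99, 2, 50)` for the group x structure x condition interaction rounds to .14, matching the reported value.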

Fig. 5 –

Whole-brain interaction of group (HL and deaf) by condition (EXP and IMP) at TP3 at p < .05; FWEc; bar graphs of the independently defined ROI in the left SPL are shown to illustrate the obtained interaction, *p < .005, **p = .001, Bonferroni corrected. Error bars represent SEM.

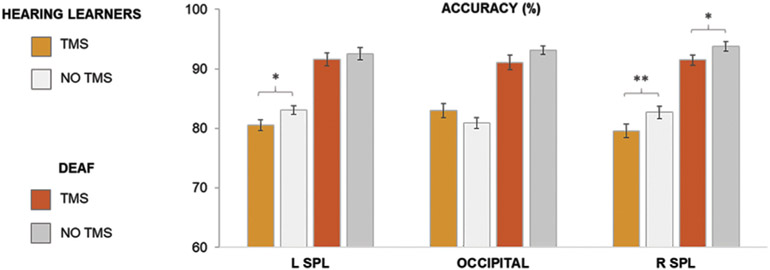

3.2. Experiment 2 – TMS

A three-way mixed analysis of variance (ANOVA) was computed with structure (left SPL, right SPL and OP), group (hearing learners/deaf signers) and condition (TMS/no TMS) as factors. The results showed significant main effects of group [F (1, 25) = 147.54, p < .001, eta-squared = .86] and condition [F (1, 25) = 18.09, p < .001, eta-squared = .42], as well as significant condition x structure [F (2, 50) = 3.28, p < .05, eta-squared = .12] and group x structure x condition [F (2, 50) = 3.99, p < .05, eta-squared = .14] interactions. Subsequently, a separate two-way ANOVA with group (hearing learners/deaf signers) and condition (TMS/no TMS) as factors was computed for each structure (left SPL, right SPL and OP). These analyses indicated a significant main effect of group for the left SPL [F (1, 25) = 82.51, p < .001, eta-squared = .77], right SPL [F (1, 25) = 77.47, p < .001, eta-squared = .76] and OP [F (1, 25) = 60.41, p < .001, eta-squared = .71]; a significant main effect of condition for the left SPL [F (1, 25) = 5.04, p < .05, eta-squared = .17] and right SPL [F (1, 25) = 22.59, p < .001, eta-squared = .46]; and a significant interaction between condition and group for the OP [F (1, 25) = 6.79, p < .05, eta-squared = .21]. Because we were particularly interested in whether similar effects occur in both hearing and deaf participants, we tested the effect of condition within each group. TMS delivered to the right SPL decreased LDT accuracy in both hearing (p ≤ .001) and deaf (p < .05) participants. For the left SPL, TMS impaired performance only in the hearing group (p < .05). There was no significant TMS effect at the control site (OP) in either group (see Fig. 6). Details of participants’ scores can be found in Table S3.
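The post hoc comparisons in Figs. 5 and 6 are Bonferroni corrected, but the text does not state how many comparisons formed the correction family. A minimal sketch of the standard Bonferroni adjustment, assuming (hypothetically) one family of six TMS vs no-TMS simple effects, 3 structures x 2 groups; the p-values below are invented for illustration and are not the study's data:

```python
def bonferroni(p_values: list[float]) -> list[float]:
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical uncorrected p-values, ordered as
# (left SPL, right SPL, OP) x (hearing, deaf). Illustrative numbers only.
raw = [0.004, 0.020, 0.0001, 0.012, 0.40, 0.30]
adjusted = bonferroni(raw)
significant = [p < 0.05 for p in adjusted]
print(adjusted)
print(significant)
```

Multiplying the p-values is equivalent to testing each raw p-value against α/m; it controls the family-wise error rate at the cost of power, which matters with small TMS samples.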

Fig. 6 –

Accuracy results from the TMS experiment: percentage of correct responses in the Lexical Decision Task in hearing learners (after the course) and deaf signers during TMS/no TMS conditions, *p ≤ .05; **p ≤ .001, Bonferroni corrected. For brevity, only differences between TMS and no TMS conditions are indicated. Error bars represent SEM.

4. Discussion

In the current study, we sought to investigate the role of SPL in sign language comprehension. We asked how sign language learning changes the SPL response to PJM signs in hearing late learners, and how SPL engagement during sign language comprehension differs between hearing individuals (before and after the sign language course) and deaf individuals. To this end, in the first (fMRI) experiment we combined a within-subject longitudinal design with a between-group design. Subsequently, in a second experiment using TMS, we tested whether SPL engagement is behaviorally relevant for performing the same lexical task and whether there are hemispheric differences between hearing late learners and deaf signers.

4.1. Sign language processing by hearing learners before the course

Before the course, hearing participants had no access to the linguistic meaning of the signs. Thus, they likely performed the task by focusing on the sensory properties of the observed, meaningless gestures, which was reflected in bilateral activation of the occipitotemporal network (MTG, LOC) involved in visual and motor processing. Additionally, we found involvement of frontal areas (IFG/PreCG) and parietal regions (postcentral gyrus/SMG, extending to the SPL in the right hemisphere); these areas have been identified as hubs within the mirror neuron system (Buccino et al., 2004; Cattaneo & Rizzolatti, 2009; Rizzolatti & Sinigaglia, 2008), a unified network engaged in processing a broad spectrum of human actions. Notably, participants were aware of the linguistic context of the task, and even though they did not know the meaning of the signs, they might have tried to extract their linguistic aspects (a similar effect was also discussed by Emmorey et al. (2010) and Liu et al. (2017)).

This pattern of neural activity is in line with previous results in non-signers performing tasks with signs that are meaningless to them (Corina et al., 2007; Emmorey et al., 2010; MacSweeney et al., 2004, 2006; Newman et al., 2015; Williams et al., 2016). With respect to SPL, the right-hemisphere activation is consistent with the findings of Corina et al. (2007), who showed common parietal involvement in the observation of three types of human action: self-oriented grooming gestures, object-oriented actions, and sign language. In other studies, SPL activation in non-signers observing sign language stimuli was found either in the left hemisphere (MacSweeney et al., 2006; Williams et al., 2016) or in both hemispheres (Emmorey et al., 2010; MacSweeney et al., 2004). Our data suggest that the right SPL is dominant during the processing of human actions that carry no linguistic meaning.

4.2. Sign language processing by hearing learners after the course and deaf signers

In line with prior studies of native signers (e.g. Emmorey et al., 2014, 2003; MacSweeney, Capek, et al., 2008, 2006; Sakai et al., 2005) and hearing late learners (Johnson et al., 2018; Williams et al., 2016), the left IFG, a classical language region, responded during the sign language lexical decision task in both HL after the course and deaf signers. In the context of linguistic processing, IFG has been described as part of a language core mediating language production and comprehension regardless of modality (Binder et al., 2009; Corina & Knapp, 2006; Emmorey et al., 2014; Friederici, 2012; Hickok & Poeppel, 2007; Johnson et al., 2018; MacSweeney et al., 2002, 2008a; Sakai et al., 2005; Williams et al., 2016) and as a key node subserving unification, integration and memory retrieval at various linguistic levels (Hagoort, 2013). Additionally, both groups engaged the left PreCG, in line with other studies linking this region to the active observation and understanding of actions and movement (Emmorey et al., 2014; Schippers & Keysers, 2011).

In addition, HL after the course and deaf signers recruited temporal areas (MTG and ITG) together with occipital regions (LOC), likely reflecting motion-related perception of the body (Emmorey et al., 2014; Liu et al., 2017; MacSweeney, Capek, et al., 2008; Williams et al., 2016). Both groups also engaged SMG, which has previously been linked to phonological analysis and working memory demands in sign language (MacSweeney, Waters, et al., 2008; Rönnberg et al., 2004). Finally, bilateral SPL activation was observed in HL after the course, whereas deaf signers showed no SPL involvement during the lexical decision task.

Further, to identify more precisely which regions changed their activation with sign language acquisition in HL, we performed a longitudinal analysis including all TPs. The most pronounced changes in activity occurred predominantly in the left hemisphere: IFG, LOC and SPL. This result suggests that the left, but not the right, SPL becomes part of a sign language comprehension network over the course of learning. By contrast, no significant changes in SPL activation were observed in the previous longitudinal study by Williams et al. (2016), which might be due to the linguistically less complex task they used (i.e., a low-level form-based decision).

Several theoretical frameworks and mechanisms for learning-driven brain reorganization have been proposed. For example, Wenger et al. (2017) suggested that during learning, neuroplasticity follows a sequence of expansion, selection, and renormalization. In this framework, neural changes would appear as an initial increase in activation (e.g. through the generation of new dendritic spines or synaptogenesis), followed by a partial or complete return to baseline once an optimal neural circuit has been selected. Although the expansion-renormalization model (Wenger et al., 2017) refers to structural plasticity, it is in line with the neural efficiency theory (Haier et al., 1992), which postulates that better performance on a cognitive task requires fewer neural resources and is therefore reflected in lower brain activity. However, we found no statistically significant changes in brain activity after the first three months of PJM learning, despite continued improvements in performance. This is likely because the PJM learners had not yet reached a level of proficiency that would allow neural optimization to take place. The mechanisms underlying brain plasticity following sign language learning are discussed in detail elsewhere (Banaszkiewicz et al., 2020).

4.3. Differences in sign language processing between groups

During lexical processing, deaf signers engaged STG to a greater extent than HL, both before and after the course. This result is in line with previous studies reporting cross-modal plasticity of the auditory cortex in congenitally deaf individuals (e.g. Campbell et al., 2008; Cardin et al., 2013; MacSweeney, Capek, et al., 2008).

HL before the course showed greater activation than deaf signers in the left MFG, part of a prefrontal system frequently associated with a wide range of cognitive functions, e.g. attentional control, selection and orienting (Kane & Engle, 2002; Thompson & Duncan, 2009). MFG has also been proposed to be part of the Ventral Attention Network (see Corbetta et al., 2008 for review) and of the mirror neuron system (Filimon et al., 2007). Thus, this result might suggest enhanced attention to, and reliance on, the sensory aspects of the stimuli when sign-naïve participants perform the task.

Lastly, we explored the unique pattern of activation in the same group of hearing participants after they had acquired the skills essential for processing the sign stimuli linguistically, in comparison to deaf individuals (HL after the course > deaf signers). We predicted enhanced neural activity in HL, related to greater effort and less automatized lexical processing, compared to fluent deaf signers. Indeed, although the behavioral data did not reveal any group differences, HL showed greater involvement of the occipito-parietal visuospatial network (bilateral LOC and left SPL). Twomey et al. (2020) observed greater neural demands in late than in early signers (both deaf and hearing) in the left occipital segment of the intraparietal sulcus, close to the SPL cluster observed here. Similarly, a previous study of early and late deaf signers by Mayberry et al. (2011) found a positive relationship between the age of onset of sign language acquisition and the level of activation in the occipital cortex. Supported by previous behavioral data (e.g., Mayberry & Fischer, 1989; Morford et al., 2008), both Twomey et al. (2020) and Mayberry et al. (2011) suggest shallower language processing and hypersensitivity to the perceptual properties of signs in late learners. Our results suggest that these greater demands are not confined to the occipital cortex but also extend to the left SPL.

4.4. Differences between the right and left SPL revealed with TMS

Lastly, using TMS, we tested whether SPL is relevant for sign language comprehension in both deaf signers and hearing late learners, and whether hemispheric differences are present. Stimulation of both the right and left SPL decreased performance, whereas stimulation of the control site (occipital pole) had no significant effect. Specifically, TMS of the right SPL decreased accuracy in both late learners and deaf signers. This finding is in line with previous non-linguistic studies suggesting right-hemisphere dominance of the parietal cortex for visuospatial attention (Cai et al., 2013; Corballis, 2014). In addition, Wu et al. (2016) demonstrated that TMS applied to the right, but not the left, SPL increased reaction times in a visuospatial attention task, supporting the view that the right SPL controls functions underlying visuospatial attention. Using a visuospatial linguistic (lexical) task, our results suggest that the right SPL is also involved in visuospatial attention processes in both skilled and beginning users of sign language. This notion is also supported by the results of Experiment 1, which showed no functional changes in the right SPL over the course of sign language learning in hearing participants.

Second, TMS applied to the left SPL decreased accuracy only in HL after the course, as shown by the differential pattern of simple effects. Together with our fMRI result showing increased activation once HL started to comprehend signs, this TMS result suggests that the left SPL is relevant for visuospatial linguistic processing in novice signers. Given the previously reported role of SPL in hand movement processing, we suggest that the left SPL might be more specifically involved in decoding visuospatial aspects of sign language phonology, such as locations on the face or body, hand configuration and orientation, and movement trajectories. According to behavioral studies, sign language processing in non-native users is characterised by phonological errors (e.g. Mayberry, 1994; Mayberry & Fischer, 1989; Morford et al., 2008). Mayberry and Fischer (1989) have argued that late learners experience a “phonological bottleneck” that makes access to the lexical meaning of signs more effortful and less automatic.

4.5. Limitations

Several limitations of the current study should be noted. First, even though participants performed phonological exercises during the PJM course (e.g., exercises requiring the production of signs based on given handshapes), they were never explicitly taught about sign language phonology. Although discriminating signs from pseudosigns (created by changing at least one phonological parameter of an existing sign) requires sub-lexical, implicit phonological encoding, the Lexical Decision Task explicitly entails lexical rather than phonological processing. Therefore, our conclusion that the left SPL in hearing learners reflects phonological decoding, which rests on reverse inference, should be verified in future studies using tasks that specifically target phonological processing.
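The stimulus logic referred to above can be made concrete: a pseudosign keeps most of an existing sign's form but alters at least one phonological parameter, so rejecting it in the LDT requires sub-lexical analysis of form. A minimal sketch, assuming a simplified four-parameter representation (handshape, location, orientation, movement, following the parameters named in the Discussion); the class, function names and parameter values are hypothetical and do not describe the study's actual stimulus-construction procedure:

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class SignForm:
    """Hypothetical phonological description of a sign (simplified, four parameters)."""
    handshape: str
    location: str
    orientation: str
    movement: str


def changed_parameters(a: SignForm, b: SignForm) -> list[str]:
    """Names of the phonological parameters on which two sign forms differ."""
    return [f.name for f in fields(SignForm) if getattr(a, f.name) != getattr(b, f.name)]


def make_pseudosign(sign: SignForm, **changes: str) -> SignForm:
    """Derive a pseudosign by altering at least one parameter of an existing sign."""
    pseudo = replace(sign, **changes)  # copy of the frozen dataclass with new values
    if not changed_parameters(sign, pseudo):
        raise ValueError("a pseudosign must differ from the sign in at least one parameter")
    return pseudo


# Illustrative values only; not taken from the PJM stimulus set.
sign = SignForm(handshape="B", location="chin", orientation="palm-in", movement="arc")
pseudo = make_pseudosign(sign, location="forehead")
print(changed_parameters(sign, pseudo))  # ['location']
```

Because only one parameter differs, the pseudosign shares its handshape, orientation and movement with a real sign, which is why a correct rejection arguably depends on decoding form rather than on a purely holistic visual match.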

Additionally, our paradigm does not disentangle bottom-up perceptual and top-down linguistic processes in the left SPL. To make stronger claims about distinct cognitive functions of the left and right SPL, a control non-linguistic task should be implemented in both fMRI and TMS experiments in the future. We also note that TMS targets in the HL group were localized based on functional activation during the LDT EXP fMRI task, whereas in the deaf group they were defined based on anatomical landmarks. We initially aimed to use an individually defined localization procedure in the deaf participants as well; however, SPL activity could not be localized in the majority of them. This inconsistency is a potential limitation of the current study. Moreover, because both HL and deaf signers are difficult-to-access groups, we consider our sample size in the TMS experiment relatively small. Lastly, the lack of a significant interaction between group (hearing learners/deaf signers) and condition (TMS/no TMS) in the left SPL precludes us from drawing strong inferences about distinct effects of TMS in hearing and deaf participants. Therefore, further studies are needed to confirm our results on the role of SPL in sign language processing.

5. Conclusions

Taken together, our fMRI and TMS results suggest that SPL participates in the processing of sign language stimuli, but that its function might differ between hemispheres. Specifically, we propose that the right SPL might be involved in the allocation of attention and the left SPL in the identification and integration of linguistic forms.

Supplementary Material

Open practices.

The study in this article earned an Open Materials badge for transparent practices. Materials and data for the study are available at https://osf.io/bgjsq/.

Acknowledgments

The study was supported by the National Science Centre Poland (2014/14/M/HS6/00918) awarded to AM. AB was additionally supported by National Science Centre Poland (2017/27/N/HS6/02722 and 2019/32/T/HS6/00529). PM and PR were supported under the National Programme for the Development of Humanities of the Polish Ministry of Science and Higher Education (0111/NPRH3/H12/82/2014). KE was supported by National Institutes of Health, grant #R01 DC010997.

The study was conducted with the aid of CePT research infrastructure purchased with funds from the European Regional Development Fund as part of the Innovative Economy Operational Programme, 2007–2013.

We gratefully acknowledge all our participants.

Footnotes

Declaration of competing interest

The authors declare no competing interests.

Supplementary data

Supplementary data to this article can be found online at https://doi.org/10.1016/j.cortex.2020.10.025.

Data availability statement

Partial datasets generated during the current study are available at: https://osf.io/bgjsq/. The conditions of our ethics approval do not permit sharing of the raw MRI data obtained in this study with any individual outside the author team under any circumstances.

REFERENCES

- Abutalebi J (2008). Neural aspects of second language representation and language control. Acta Psychologica, 128(3), 466–478. 10.1016/j.actpsy.2008.03.014 [DOI] [PubMed] [Google Scholar]

- Banaszkiewicz A, Matuszewski J, Bola Ł, Szczepanik M, Kossowski B, Rutkowski P, … Marchewka A (2020). Multimodal imaging of brain reorganization in hearing late learners of sign language. Human Brain Mapping. 10.1002/hbm.25229. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Binder JR, Desai RH, Graves WW, & Conant LL (2009). Where is the semantic system? A critical review and metaanalysis of 120 functional neuroimaging studies. Cerebral Cortex, 19(12), 2767–2796. 10.1093/cercor/bhp055 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Braun AR, Guillemin A, Hosey L, & Varga M (2001). The neural organization of discourse: An H215O-PET study of narrative production in English and American sign language. Brain: a Journal of Neurology, 124(10), 2028–2044. 10.1093/brain/124.10.2028 [DOI] [PubMed] [Google Scholar]

- Brennan C, Cao F, Pedroarena-leal N, Mcnorgan C, & Booth JR (2013). Reading acquisition reorganized the phonological awareness network only in alphabetic writing systems. Human Brain Mapping, 34(12), 1–24. 10.1002/hbm.22147 (Reading). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buccino G, Binkofski F, & Riggio L (2004). The mirror neuron system and action recognition. Brain and Language, 89(2), 370–376. 10.1016/S0093-934X(03)00356-0 [DOI] [PubMed] [Google Scholar]

- Cai Q, Van der Haegen L, & Brysbaert M (2013). Complementary hemispheric specialization for language production and visuospatial attention. Proceedings of the National Academy of Sciences, 110, E322–E330. 10.1073/pnas.1212956110 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campbell R, MacSweeney M, & Waters D (2008). Sign language and the brain: A review. The Journal of Deaf Studies and Deaf Education, 13(1), 3–20. 10.1093/deafed/enm035 [DOI] [PubMed] [Google Scholar]

- Cardin V, Orfanidou E, Rönnberg J, Capek CM, Rudner M, & Woll B (2013). Dissociating cognitive and sensory neural plasticity in human superior temporal cortex. Nature Communications, 12(4). 10.1038/ncomms2463 [DOI] [PubMed] [Google Scholar]

- Cattaneo L, & Rizzolatti G (2009). The mirror neuron system. Archives of Neurology, 66(5), 557–560. 10.1001/archneurol.2009.41 [DOI] [PubMed] [Google Scholar]

- Corballis MC (2014). Left brain, right brain: Facts and fantasies. Plos Biology, 12(1), Article e1001767. 10.1371/journal.pbio.1001767 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corbetta M, Patel G, & Shulman GL (2008). The reorienting system of the human brain: From environment to theory of mind. Neuron, 58(3), 306–324. 10.1016/j.neuron.2008.04.017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corina D, Chiu YS, Knapp H, Greenwald R, San Jose-Robertson L, & Braun A (2007). Neural correlates of human action observation in hearing and deaf subjects. Brain Research, 1152(1), 111–129. 10.1016/j.brainres.2007.03.054 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corina DP, & Knapp H (2006). Sign language processing and the mirror neuron system. Cortex; a Journal Devoted To the Study of the Nervous System and Behavior, 42(4), 529–539. 10.1016/S0010-9452(08)70393-9 [DOI] [PubMed] [Google Scholar]

- Creem-Regehr S (2009). Sensory-motor and cognitive function of the human posterior parietal cortex involved in manual actions. Neurobiology of Learning and Memory, 91(2), 166–171. 10.1016/j.nlm.2008.10.004, 166–171. [DOI] [PubMed] [Google Scholar]

- Emmorey K (2002). Language, cognition and the brain: Insights from sign language research. Mahwah, NJ: Lawrence Erlbaum Associates. [Google Scholar]

- Emmorey K, Grabowski T, McCullough S, Damasio H, Ponto LLB, Hichwa RD, & Bellugi U (2003). Neural systems underlying lexical retrieval for sign language. Neuropsychologia, 41(1), 85–95. 10.1016/S0028-3932(02)00089-1 [DOI] [PubMed] [Google Scholar]

- Emmorey K, McCullough S, Mehta S, & Grabowski TJ (2014). How sensory-motor systems impact the neural organization for language: Direct contrasts between spoken and signed language. Frontiers in Psychology, 5(May), 1–13. 10.3389/fpsyg.2014.00484 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, Mehta S, & Grabowski TG (2007). The neural correlates of sign versus word production. Neuroimage, 36(2007), 202–208. 10.1016/j.neuroimage.2007.02.040 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, Mehta S, McCullough S, & Grabowski TG (2016). The neural circuits recruited for the production of signs and fingerspelled words. Brain and Language, 160, 30–41. 10.1016/j.bandl.2016.07.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, Xu J, & Braun A (2011). Neural responses to meaningless pseudosigns: Evidence for signes-based phonetic processing in superior temporal cortex. Brain and Language, 117(1), 34–38. 10.1016/j.bandl.2010.10.003.Neural [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, Xu J, Gannon P, Goldin-Meadow S, & Braun A (2010). CNS activation and regional connectivity during pantomime observation: No engagement of the mirror neuron system for deaf signers. Neuroimage, 49(1), 994–1005. 10.1016/j.neuroimage.2009.08.001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Filimon F, Nelson JD, Hagler DJ, & Sereno MI( (2007). Human cortical representations for reaching: Mirror neurons for execution, observation, and imagery. Neuroimage, 37(4), 1315–1328. 10.1016/j.neuroimage.2007.06.008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friederici AD (2012). The cortical language circuit: From auditory perception to sentence comprehension. Trends in Cognitive Sciences, 16(5), 262–268. 10.1016/j.tics.2012.04.001 [DOI] [PubMed] [Google Scholar]

- Grefkes C, Ritzl A, Zilles K, & Fink GR (2004). Human medial intraparietal cortex subserves visuomotor coordinate transformation. NeuroImage. 10.1016/j.neuroimage.2004.08.031 [DOI] [PubMed] [Google Scholar]

- Hagoort P (2013). MUC (memory, unification, control) and beyond. Frontiers in Psychology, 4, 1–13. 10.3389/fpsyg.2013.00416 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hickok G, & Poeppel D (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8(5), 393–402. 10.1038/nrn2113 [DOI] [PubMed] [Google Scholar]

- Jednoróg K, Bola Ł, Mostowski P, Szwed M, Boguszewski PM, Marchewka A, & Rutkowski P (2015). Three-dimensional grammar in the brain: Dissociating the neural correlates of natural sign language and manually coded spoken language. Neuropsychologia, 71(April), 191–200. 10.1016/j.neuropsychologia.2015.03.031 [DOI] [PubMed] [Google Scholar]

- Johnson L, Fitzhugh MC, Yi Y, Mickelsen S, Baxter LC, Howard P, & Rogalsky C (2018). Functional neuroanatomy of second language sentence Comprehension : An fMRI study of late learners of American sign language. Frontiers in Psychology, 9(September), 1–20. 10.3389/fpsyg.2018.01626 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kane MJ, & Engle RW (2002). The role of prefrontal cortex in working-memory capacity, executive attention, and general fluid intelligence: An individual-differences perspective. Psychonomic Bulletin & Review, 9, 637–671. 10.3758/BF03196323 [DOI] [PubMed] [Google Scholar]

- Liu L, Yan X, Liu J, Xia M, Lu C, Emmorey K, Chu M, & Ding G (2017). Graph theoretical analysis of functional network for comprehension of sign language. Brain Research, 1671, 55–66. 10.1016/j.brainres.2017.06.031 [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacSweeney M, Campbell R, Woll B, Brammer MJ, Giampietro V, David AS, Calvert GA, & McGuire PK (2006). Lexical and sentential processing in British sign language. Human Brain Mapping, 27(1), 63–76. 10.1002/hbm.20167 [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacSweeney M, Campbell R, Woll B, Giampietro V, David AS, McGuire PK, Calvert GA, & Brammer MJ (2004). Dissociating linguistic and nonlinguistic gestural communication in the brain. Neuroimage, 22(4), 1605–1618. 10.1016/j.neuroimage.2004.03.015 [DOI] [PubMed] [Google Scholar]

- MacSweeney M, Capek CM, Campbell R, & Woll B (2008a). The signing brain: The neurobiology of Sign Language. Trends in Cognitive Sciences, 12(11), 432–440. 10.1016/j.tics.2008.07.010 [DOI] [PubMed] [Google Scholar]

- MacSweeney M, & Emmorey K (2020). The neurobiology of sign language processing. In Poeppel D, Mangun G, & Gazzaniga M (Eds.), The cognitive neurosciences VI (pp. 851–858). Cambridge, MA: The MIT Press.

- MacSweeney M, Waters D, Brammer MJ, Woll B, & Goswami U (2008b). Phonological processing in deaf signers and the impact of age of first language acquisition. Neuroimage, 40(3), 1369–1379. 10.1016/j.neuroimage.2007.12.047

- MacSweeney M, Woll B, Campbell R, Calvert GA, McGuire PK, David AS, Simmons A, & Brammer MJ (2002b). Neural correlates of British Sign Language comprehension: Spatial processing demands of topographic language. Journal of Cognitive Neuroscience, 14(7), 1064–1075. 10.1162/089892902320474517

- MacSweeney M, Woll B, Campbell R, McGuire PK, David AS, Williams SCR, Suckling J, Calvert GA, & Brammer MJ (2002a). Neural systems underlying British Sign Language and audio-visual English processing in native users. Brain: a Journal of Neurology, 125(7), 1583–1593. 10.1093/brain/awf153

- Mayberry RI (1994). The importance of childhood to language acquisition: Evidence from American Sign Language. In Goodman JC, & Nusbaum HC (Eds.), The development of speech perception: The transition from speech sounds to spoken words (pp. 57–90). Cambridge, MA: The MIT Press.

- Mayberry RI, Chen JK, Witcher P, & Klein D (2011). Age of acquisition effects on the functional organization of language in the adult brain. Brain and Language, 119(1), 16–29. 10.1016/j.bandl.2011.05.007

- Mayberry RI, & Fischer SD (1989). Looking through phonological shape to lexical meaning: The bottleneck of non-native sign language processing. Memory & Cognition, 17(6), 740–754. 10.3758/BF03202635

- McCullough S, Saygin AP, Korpics F, & Emmorey K (2012). Motion-sensitive cortex and motion semantics in American Sign Language. Neuroimage, 63(1), 111–118. 10.1016/j.neuroimage.2012.06.029

- Morford J, Grieve-Smith AB, MacFarlane J, Stanley J, & Waters G (2008). Effects of language experience on the perception of American Sign Language. Cognition, 109(1), 41–53. 10.1016/j.cognition.2008.07.016

- Newman AJ, Supalla T, Fernandez N, Newport EL, & Bavelier D (2015). Neural systems supporting linguistic structure, linguistic experience, and symbolic communication in sign language and gesture. Proceedings of the National Academy of Sciences, 112(37), 11684–11689. 10.1073/pnas.1510527112

- Poeppel D, Emmorey K, Hickok G, & Pylkkänen L (2012). Towards a new neurobiology of language. Journal of Neuroscience, 32(41), 14125–14131. 10.1523/JNEUROSCI.3244-12.2012

- Rizzolatti G, & Sinigaglia C (2008). Mirrors in the brain: How our minds share actions and emotions. Oxford University Press.

- Rönnberg J, Rudner M, & Ingvar M (2004). Neural correlates of working memory for sign language. Cognitive Brain Research, 20(2), 165–182. 10.1016/j.cogbrainres.2004.03.002

- Sakai KL, Tatsuno Y, Suzuki K, Kimura H, & Ichida Y (2005). Sign and speech: Amodal commonality in left hemisphere dominance for comprehension of sentences. Brain: a Journal of Neurology, 128(6), 1407–1417. 10.1093/brain/awh465

- Schippers MB, & Keysers C (2011). Mapping the flow of information within the putative mirror neuron system during gesture observation. Neuroimage, 57(1), 37–44. 10.1016/j.neuroimage.2011.02.018

- Stowe LA, & Sabourin L (2005). Imaging the processing of a second language: Effects of maturation and proficiency on the neural processes involved. International Review of Applied Linguistics in Language Teaching, 43, 329–353. 10.1515/iral.2005.43.4.329

- Thompson R, & Duncan J (2009). Attentional modulation of stimulus representation in human fronto-parietal cortex. NeuroImage, 48(1), 436–448. 10.1016/j.neuroimage.2009.06.066

- Twomey T, Price CJ, Waters D, & MacSweeney M (2020). The impact of early language exposure on the neural system supporting language in deaf and hearing adults. Neuroimage, 201(1), 116411. 10.1016/j.neuroimage.2019.116411

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, … Joliot M (2002). Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage, 15(1), 273–289. 10.1006/nimg.2001.0978

- van Heuven WJB, & Dijkstra T (2010). Language comprehension in the bilingual brain: fMRI and ERP support for psycholinguistic models. Brain Research Reviews, 64(1), 104–122. 10.1016/j.brainresrev.2010.03.002

- Wenger E, Brozzoli C, Lindenberger U, & Lövdén M (2017). Expansion and renormalization of human brain structure during skill acquisition. Trends in Cognitive Sciences, 21(12), 930–939. 10.1016/j.tics.2017.09.008

- Williams JT, Darcy I, & Newman SD (2016). Modality-specific processing precedes amodal linguistic processing during L2 sign language acquisition: A longitudinal study. Cortex, 75, 56–67. 10.1016/j.cortex.2015.11.015

- Wu Y, Wang J, Zhang Y, Zheng D, & Zhang J (2016). The neuroanatomical basis for posterior superior parietal lobule control lateralization of visuospatial attention. Frontiers in Neuroanatomy, 10, 32. 10.3389/fnana.2016.00032


Data Availability Statement

Partial datasets generated during the current study are available at https://osf.io/bgjsq/. The conditions of our ethics approval do not permit sharing the raw MRI data obtained in this study with any individual outside the author team under any circumstances.